One of the most widely used optimization algorithms in deep learning is the Gradient Descent Optimizer. Its performance can often be improved substantially by using an adaptive learning rate, which adjusts the step size taken at each iteration based on previously computed gradients. This article provides a 10-step guide to setting an adaptive learning rate for a Gradient Descent Optimizer, to help you get the most out of your deep learning models.

The first step is to understand the challenges of choosing a fixed learning rate. A fixed rate is often chosen up front through cross-validation, but a single value rarely suits an entire long training run. A rate large enough for fast progress early on tends to cause large oscillations, or even non-convergence, near a minimum, while a rate small enough to settle precisely makes early training slow. An adaptive learning rate lets the optimizer adjust its step size as training proceeds, which often helps it converge faster, even on complex loss landscapes.

To start, choose the model parameters to be optimized and select an appropriate loss function. With these in place, you can add batch normalization and gradient clipping. Batch normalization normalizes each layer's activations over a mini-batch, which helps stabilize training, and gradient clipping rescales gradients whose norm exceeds a threshold, which guards against exploding gradients.
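To make the clipping idea concrete, here is a minimal, framework-free sketch of clipping by global norm, assuming the model's gradients have been flattened into a plain Python list of floats. The function name `clip_gradients` and the threshold value are illustrative assumptions; deep learning frameworks ship their own utilities for this (for example `tf.clip_by_global_norm` in TensorFlow), which this sketch does not reproduce exactly.

```python
import math


def clip_gradients(grads, max_norm):
    """Rescale `grads` so that their global L2 norm is at most `max_norm`.

    `grads` is a flat list of floats standing in for a model's gradients.
    Returns the (possibly rescaled) gradients and their norm before clipping.
    """
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads), norm  # already small enough: leave unchanged
    scale = max_norm / norm
    # Every component is multiplied by the same factor, so the direction is kept.
    return [g * scale for g in grads], norm


clipped, norm = clip_gradients([3.0, 4.0], max_norm=1.0)
print(norm)     # 5.0
print(clipped)  # approximately [0.6, 0.8]
```

Scaling all components by one shared factor, rather than clipping each value separately, keeps the update pointing in the same direction while limiting its length.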

Next, determine the initial learning rate, setting it high enough for rapid early learning but not so high that training diverges. Then apply a learning rate schedule that reduces the rate once the model stops improving, for example when the validation loss plateaus. Finally, test several schedules to find one that reaches good performance at minimal computational cost.
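A schedule that cuts the rate once the monitored loss stops improving can be sketched as a small class. This hypothetical `ReduceOnPlateau` is similar in spirit to the reduce-on-plateau schedulers in Keras and PyTorch but is not their API; the `factor`, `patience`, `min_delta`, and `min_lr` defaults are assumptions chosen for illustration.

```python
class ReduceOnPlateau:
    """Multiply the learning rate by `factor` when the monitored loss stops improving.

    A loss counts as an improvement only if it beats the best value seen so far
    by more than `min_delta`. After `patience` consecutive non-improving epochs,
    the learning rate is reduced, but never below `min_lr`.
    """

    def __init__(self, lr, factor=0.5, patience=2, min_delta=1e-4, min_lr=1e-6):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_delta = min_delta
        self.min_lr = min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss and return the learning rate to use next."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0  # wait another `patience` epochs before the next cut
        return self.lr


scheduler = ReduceOnPlateau(lr=0.1)
rates = [scheduler.step(loss) for loss in [1.0, 0.8, 0.79995, 0.8, 0.7]]
print(rates)  # [0.1, 0.1, 0.1, 0.05, 0.05]
```

With these hypothetical validation losses, the third and fourth epochs do not improve on 0.8 by more than `min_delta`, so the rate is halved after the fourth epoch and then kept.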

Take the time to understand how an adaptive learning rate works for a Gradient Descent Optimizer and how tuning it can improve neural network performance. With the right technique, you can train models faster and work toward models that outperform those trained with a fixed learning rate in accuracy and learning efficiency.


Introduction

The gradient descent optimizer is an efficient algorithm used in deep learning models to minimize the loss function. Traditional gradient descent adjusts the weights with the same fixed learning rate at every iteration. Adaptive learning rate algorithms instead adjust the learning rate automatically, which can let them reach good weights in fewer iterations. This article discusses ten steps for setting an adaptive learning rate for gradient descent.

What is Gradient Descent Optimizer?

Gradient descent is an iterative method used in machine learning to minimize a loss function by moving the model's parameters step by step toward an optimal solution. At each iteration, it computes the gradient of the loss with respect to the parameters and moves them a small distance, scaled by the learning rate, in the opposite direction: the direction of steepest descent. It repeats this until the loss stops decreasing, ideally at a minimum (for the non-convex losses of deep networks, usually a local one).
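The update rule fits in a few lines of Python. This minimal sketch assumes a single scalar weight and the hypothetical loss (w - 3)², whose gradient is 2(w - 3); a real model applies the same update to every parameter.

```python
def gradient_descent(grad, w0, lr, steps):
    """Run plain gradient descent with a fixed learning rate and return the final weight."""
    w = w0
    for _ in range(steps):
        w = w - lr * grad(w)  # step against the gradient (the steepest-descent direction)
    return w


# Hypothetical loss L(w) = (w - 3)**2 with gradient 2 * (w - 3); its minimum is at w = 3.
w_final = gradient_descent(lambda w: 2 * (w - 3), w0=0.0, lr=0.1, steps=100)
print(round(w_final, 4))  # 3.0
```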

Fixed Learning Rate vs. Adaptive Learning Rate

With a fixed learning rate, the rate stays constant throughout training. If it is set too high, the optimizer may oscillate or fail to converge at all; if it is too low, it takes much longer to reach good weight values. An adaptive learning rate, by contrast, changes during training, which can help the optimizer reach good weight values faster and more reliably.
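The effect of a fixed learning rate can be checked on a toy problem. This sketch assumes the hypothetical loss (w - 3)² and 50 update steps, and runs plain gradient descent with three fixed rates. For this loss, each step multiplies the distance to the minimum by |1 - 2·lr|, so any fixed rate above 1.0 diverges.

```python
def final_loss(lr, steps=50, w0=0.0):
    """Loss (w - 3)**2 after `steps` gradient descent updates with a fixed rate `lr`."""
    w = w0
    for _ in range(steps):
        w -= lr * 2 * (w - 3)  # gradient of (w - 3)**2 is 2 * (w - 3)
    return (w - 3) ** 2


for lr in (0.001, 0.1, 1.1):
    # 0.001 barely moves, 0.1 converges, 1.1 overshoots further on every step
    print(lr, final_loss(lr))
```

The starting loss is 9.0: the smallest rate leaves it above 7, the moderate rate drives it close to zero, and the largest rate makes it grow by orders of magnitude.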

The Importance of an Adaptive Learning Rate

Adaptive learning rate algorithms are widely used in deep learning because they scale each parameter's step size using statistics of its past gradients. This often speeds up convergence and makes training less sensitive to the choice of the initial learning rate. Despite the name, these algorithms adapt to the history of each parameter's gradients rather than to the raw data directly.

10 Steps for Adaptive Learning Rate

| Step | Description |
|---|---|
| Step 1 | Select an adaptive learning rate method |
| Step 2 | Set the initial learning rate value |
| Step 3 | Set lower and upper bounds for the learning rate |
| Step 4 | Define the convergence criterion |
| Step 5 | Select the learning rate schedule |
| Step 6 | Set the momentum coefficient (beta) |
| Step 7 | Select the optimizer type |
| Step 8 | Choose a batch size |
| Step 9 | Run the network on the data set |
| Step 10 | Decide, based on the results, whether to adjust the algorithm |

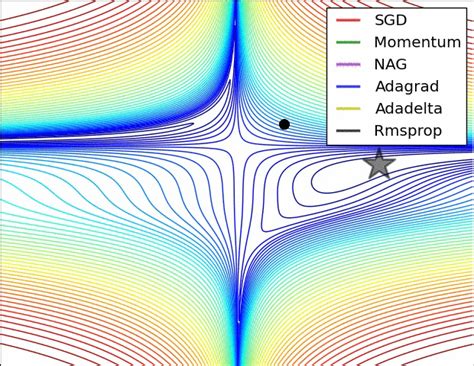
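Steps 2 through 5 and Step 9 of the table can be strung together on a toy problem. The sketch below assumes an exponential decay schedule (one of many possible schedules), lower and upper bounds on the rate, and a convergence criterion that stops once the loss changes by less than a tolerance. The function names, the toy loss (w - 3)², and all numeric values are hypothetical choices for illustration. Here `decay_rate` is a per-step multiplier, so values closer to 1 decay more slowly.

```python
def bounded_lr(step, initial_lr, decay_rate, lr_min, lr_max):
    """Exponential decay schedule (Step 5), clamped to [lr_min, lr_max] (Step 3)."""
    lr = initial_lr * decay_rate ** step
    return min(max(lr, lr_min), lr_max)


def train_quadratic(initial_lr=0.1, decay_rate=0.99, lr_min=0.01, lr_max=0.1,
                    tol=1e-10, max_steps=1000):
    """Gradient descent on the toy loss (w - 3)**2; returns (w, steps_taken)."""
    w, prev_loss = 0.0, float("inf")
    for step in range(max_steps):
        lr = bounded_lr(step, initial_lr, decay_rate, lr_min, lr_max)
        w -= lr * 2 * (w - 3)  # gradient of (w - 3)**2 is 2 * (w - 3)
        loss = (w - 3) ** 2
        if abs(prev_loss - loss) < tol:  # Step 4: convergence criterion
            return w, step + 1
        prev_loss = loss
    return w, max_steps


print([bounded_lr(s, 0.1, 0.5, 0.01, 0.1) for s in range(6)])
# [0.1, 0.05, 0.025, 0.0125, 0.01, 0.01]
print(train_quadratic())
```

The lower bound keeps the schedule from decaying to a rate so small that training effectively stops, which is one reason to set bounds at all.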

The Different Types of Adaptive Learning Rate Algorithms

There are many adaptive learning rate techniques available. Some of the most commonly used algorithms in deep learning models are:

AdaGrad

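AdaGrad adapts the learning rate of each parameter by dividing it by the square root of the sum of that parameter's past squared gradients. Parameters that have received large gradients therefore have their learning rate reduced quickly, while parameters with small gradients keep a relatively larger one. Because the sum only grows, the effective learning rate decreases throughout training, which can slow progress on long runs.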
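A minimal sketch of the AdaGrad update follows, in plain Python so that the per-parameter bookkeeping is visible. The toy loss, the learning rate of 0.5, and the step count are assumptions chosen for illustration; the small `eps` term is the usual guard against division by zero.

```python
import math


def adagrad(grad_fn, w, lr=0.5, steps=200, eps=1e-8):
    """Minimize a multi-parameter function with AdaGrad and return the final weights.

    `grad_fn(w)` must return a list with one gradient entry per parameter.
    """
    w = list(w)                # work on a copy of the starting point
    g_sq_sum = [0.0] * len(w)  # one running sum of squared gradients per parameter
    for _ in range(steps):
        g = grad_fn(w)
        for i in range(len(w)):
            g_sq_sum[i] += g[i] * g[i]  # the sum only ever grows...
            # ...so this parameter's effective learning rate only ever shrinks.
            w[i] -= lr * g[i] / (math.sqrt(g_sq_sum[i]) + eps)
    return w


# Hypothetical loss (w0 - 3)**2 + 0.01 * (w1 - 3)**2: the second gradient is 100x smaller.
def grad(w):
    return [2 * (w[0] - 3), 0.02 * (w[1] - 3)]


print(adagrad(grad, [0.0, 0.0]))  # both entries end up close to 3.0
```

Because each gradient is divided by the square root of its own accumulated squares, the second parameter, whose gradient is 100 times smaller, follows essentially the same trajectory as the first.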

RMSprop

RMSprop modifies AdaGrad by replacing the running sum of past squared gradients with an exponentially decaying average of squared gradients. Because old gradients are gradually forgotten, the effective learning rate does not keep shrinking toward zero over long training runs.
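RMSprop's change to AdaGrad is small: the running sum of squared gradients becomes an exponentially decaying average controlled by a decay rate `rho`. The sketch below is illustrative only; the defaults `lr=0.05` and `rho=0.9` and the toy loss (w - 3)² are assumptions, not recommended values.

```python
import math


def rmsprop(grad_fn, w, lr=0.05, rho=0.9, steps=500, eps=1e-8):
    """Minimize a multi-parameter function with RMSprop and return the final weights."""
    w = list(w)
    v = [0.0] * len(w)  # exponentially decaying average of squared gradients
    for _ in range(steps):
        g = grad_fn(w)
        for i in range(len(w)):
            # Old squared gradients are down-weighted by `rho` each step instead of
            # being summed forever, so the effective step size does not keep shrinking.
            v[i] = rho * v[i] + (1 - rho) * g[i] * g[i]
            w[i] -= lr * g[i] / (math.sqrt(v[i]) + eps)
    return w


w_rms = rmsprop(lambda w: [2 * (w[0] - 3)], [0.0])
print(w_rms)  # close to 3.0
```

With a constant learning rate, RMSprop's steps stay roughly `lr` in size even near the minimum, so on this toy problem it can keep oscillating in a small neighborhood of w = 3 rather than settling exactly; pairing it with a decaying schedule is one way to shrink that neighborhood.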

Adam Optimization Algorithm

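Adam is one of the most commonly used optimization methods in deep learning. Like RMSprop, it adapts the learning rate of each weight individually. It keeps exponentially decaying estimates of both the mean (first moment) and the uncentered variance (second moment) of each parameter's gradients, corrects these estimates for their bias toward zero early in training, and uses their ratio to set each step. In practice, this often gives fast convergence with little extra computation per step.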
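The Adam update can be sketched the same way in plain Python. The defaults `beta1=0.9`, `beta2=0.999`, and `eps=1e-8` are the values suggested in the original Adam paper by Kingma and Ba; the learning rate of 0.1 (the paper suggests 0.001) and the toy loss (w - 3)² are assumptions picked so the example converges quickly.

```python
import math


def adam(grad_fn, w, lr=0.1, beta1=0.9, beta2=0.999, steps=500, eps=1e-8):
    """Minimize a multi-parameter function with Adam and return the final weights."""
    w = list(w)
    m = [0.0] * len(w)  # first-moment estimate: decaying mean of gradients
    v = [0.0] * len(w)  # second-moment estimate: decaying mean of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(w)
        for i in range(len(w)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] * g[i]
            # Bias correction: m and v start at zero, so early estimates are scaled up.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            w[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


w_adam = adam(lambda w: [2 * (w[0] - 3)], [0.0])
print(w_adam)  # close to 3.0
```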

Conclusion

Adaptive learning rate algorithms are widely used in deep learning to train model parameters efficiently, and well-trained parameters are what ultimately determine a model's accuracy. This article has summarized why an adaptive learning rate matters, walked through ten steps for setting up an adaptive learning rate for a gradient descent optimizer, and highlighted commonly used algorithms such as AdaGrad, RMSprop, and Adam.

Thank you for visiting and reading through our article on 10 Steps to Set Adaptive Learning Rate for Gradient Descent Optimizer. We hope that you found the content useful and informative to enhance your knowledge about the topic.

The article discusses one of the most critical aspects of machine learning: choosing the learning rate for good model performance. Following these steps helps the model learn quickly and reliably, and, combined with sound validation practice, helps you avoid both underfitting and overfitting. Once you understand the concepts, you can make informed decisions about which learning rate strategy suits your data set.

In conclusion, setting an adaptive learning rate well matters a great deal. A poor learning rate can hinder a model's training, while a good one can improve its accuracy and overall quality. Taking the time to apply the steps in this article should help you achieve your desired results while deepening your understanding of machine learning concepts.

Setting an adaptive learning rate for a gradient descent optimizer is a crucial part of training machine learning models. Here are 10 steps to guide you:

- Choose an optimization algorithm that supports adaptive learning rates, such as Adam, AdaGrad, or RMSprop.

- Set the initial learning rate. This value determines how much the weights of the model will change after each iteration. A high learning rate allows for faster convergence, but it can overshoot the optimal solution, while a low learning rate may take longer to converge.

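- Determine the decay rate. Depending on the method, this term means one of two things. In a learning rate schedule, it controls how quickly the base learning rate decreases over time: faster decay helps prevent overshooting the optimal solution late in training, but decaying too early can stall progress. In RMSprop-style optimizers, it instead sets how quickly the moving average of squared gradients forgets older gradients (typical defaults are 0.9 or 0.99).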

- Choose a batch size. Batch size determines how many samples the optimizer uses to update the weights at each iteration. A larger batch size can lead to more stable convergence, but it may require more memory and processing power.

- Calculate the gradient of the loss function with respect to the weights.

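- Calculate the moving average of the squared gradients, weighting the previous average by the decay rate and the newest squared gradient by one minus the decay rate. This value is used to scale the learning rate for each weight individually.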

- Calculate the scaling factor for each weight by dividing the learning rate by the square root of that weight's moving average, plus a small constant to avoid division by zero.

- Update the weights using the scaled learning rate for each weight.

- Repeat the previous four steps (gradient, moving average, scaling factor, and weight update) for every mini-batch over multiple epochs to train the model.

- Monitor the training process and adjust the hyperparameters if necessary.
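The list above can be sketched end to end as mini-batch RMSprop on a small linear regression problem. Everything here is a hypothetical illustration: the data are generated from y = 2x + 1 with no noise, the model is a line a·x + b, and the learning rate, decay rate, batch size, and epoch count are assumptions rather than recommendations. The decay rate in this sketch is the RMSprop moving-average decay, and the loss recorded once per epoch stands in for the monitoring step.

```python
import math
import random


def train_linear_rmsprop(xs, ys, lr=0.01, rho=0.9, batch_size=8, epochs=200,
                         eps=1e-8, seed=0):
    """Fit y ~ a * x + b with mini-batch RMSprop; return ([a, b], per-epoch losses)."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    params = [0.0, 0.0]        # slope a and intercept b
    avg_sq = [0.0, 0.0]        # moving average of squared gradients per parameter
    order = list(range(len(xs)))
    history = []
    for _ in range(epochs):
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            a, b = params
            # Gradient of the mean squared error over this mini-batch.
            errors = [a * xs[j] + b - ys[j] for j in batch]
            grads = [2 * sum(e * xs[j] for e, j in zip(errors, batch)) / len(batch),
                     2 * sum(errors) / len(batch)]
            for i in range(2):
                avg_sq[i] = rho * avg_sq[i] + (1 - rho) * grads[i] ** 2
                step_size = lr / (math.sqrt(avg_sq[i]) + eps)  # per-parameter scaling
                params[i] -= step_size * grads[i]
        # Monitor the full-data loss once per epoch.
        a, b = params
        history.append(sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs))
    return params, history


# Hypothetical noise-free data from y = 2x + 1 on 32 evenly spaced points in [0, 1].
xs = [i / 31 for i in range(32)]
ys = [2 * x + 1 for x in xs]
params, history = train_linear_rmsprop(xs, ys)
print(params, history[-1])
```

If the recorded losses stop falling or start rising, that is the signal to revisit the learning rate, decay rate, or batch size.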

Here are some commonly asked questions about setting adaptive learning rates:

- What is an adaptive learning rate?

- What is the best way to choose the initial learning rate?

- How does the decay rate affect the learning rate?

- What is the effect of batch size on adaptive learning rates?

- How do I know if my model is converging?

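An adaptive learning rate is a technique used in machine learning to adjust the learning rate during training based on how training is progressing, for example from the history of each parameter's gradients (as in AdaGrad, RMSprop, and Adam) or from the validation loss (as in reduce-on-plateau schedules). This can help prevent overshooting the optimal solution and improve convergence speed.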

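A practical way to choose the initial learning rate is a learning rate range test: start from a very small value, increase it gradually over a short training run while recording the loss, and pick a value somewhat below the point where the loss stops decreasing or starts to blow up. You can then use a learning rate schedule that decreases the rate over time.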
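A learning rate range test can be sketched on a toy problem. This version assumes the hypothetical loss w², a geometric sweep from 1e-4 to 10 over 100 steps, and a stopping rule that ends the test once the loss exceeds four times the best value seen. Dividing the lowest-loss rate by 10 at the end is an assumed rule of thumb, not a fixed standard.

```python
def lr_range_test(grad_fn, loss_fn, w0, lr_min=1e-4, lr_max=10.0, num_steps=100,
                  diverge_factor=4.0):
    """Raise the learning rate geometrically each step and record the loss.

    Returns the rates tried and the loss after each step, stopping early once the
    loss exceeds `diverge_factor` times the best loss seen so far.
    """
    w = w0
    lrs, losses = [], []
    best = float("inf")
    for i in range(num_steps):
        lr = lr_min * (lr_max / lr_min) ** (i / (num_steps - 1))
        w = w - lr * grad_fn(w)
        loss = loss_fn(w)
        lrs.append(lr)
        losses.append(loss)
        best = min(best, loss)
        if loss > diverge_factor * best:
            break  # the loss is blowing up, so larger rates are not worth trying
    return lrs, losses


# Hypothetical toy loss w**2 (gradient 2 * w), starting from w = 5.
lrs, losses = lr_range_test(lambda w: 2 * w, lambda w: w * w, w0=5.0)
best_lr = lrs[losses.index(min(losses))]
suggested = best_lr / 10  # assumed rule of thumb: back off one order of magnitude
print(len(lrs), best_lr, suggested)
```

On this loss, gradient descent diverges for any rate above 1.0, so the recorded loss bottoms out just below that rate, the test stops a few steps later, and the suggested starting rate comes out at roughly 0.1.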

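The term decay rate is used in two senses. In a learning rate schedule, it sets how quickly the learning rate decreases over time: faster decay helps prevent overshooting the optimal solution, while decaying too soon can slow training. In RMSprop-style optimizers, it instead controls how strongly the moving average of squared gradients weights older gradients relative to the newest one.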

The batch size determines how many samples the optimizer uses to update the weights at each iteration. A larger batch size can lead to more stable convergence, but it may require more memory and processing power.

You can monitor the loss function during training to see if it is decreasing over time. If the loss function reaches a plateau or starts increasing, the model may have stopped improving and you may need to adjust the hyperparameters.
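That monitoring can be partly automated. The hypothetical helper below inspects a list of per-epoch losses and reports whether training looks like it is improving, has plateaued, is getting worse, or has diverged to a non-finite value. The window of 5 epochs and the 0.1% relative-improvement threshold are assumptions to tune for your own model.

```python
import math


def diagnose_losses(losses, window=5, rel_tol=1e-3):
    """Classify recent training progress from a list of per-epoch losses."""
    if len(losses) < window + 1:
        return "too few values"
    latest = losses[-1]
    if not math.isfinite(latest):
        return "diverging"      # NaN or infinity: training has blown up
    past = losses[-window - 1]  # the loss `window` epochs ago
    if latest > past:
        return "increasing"     # worse than `window` epochs ago
    if (past - latest) / max(abs(past), 1e-12) < rel_tol:
        return "plateau"        # less than `rel_tol` relative improvement
    return "improving"


print(diagnose_losses([1.0, 0.8, 0.6, 0.5, 0.4, 0.3]))  # improving
```

A "plateau" result is a natural trigger for lowering the learning rate, while "increasing" or "diverging" usually means the rate is too high.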