As the volume of digital text keeps growing, the need for effective text comparison algorithms becomes more pressing. These algorithms provide a systematic, efficient way to analyze the similarities and differences between two or more documents, and several techniques are available for the job.

One technique is the Longest Common Subsequence (LCS) algorithm, which finds the longest sequence of elements (characters, words, or lines) that appears in both documents in the same order, though not necessarily contiguously. Because it aligns the two sequences, it is useful for pinpointing exactly where two texts differ.

Another technique is the Levenshtein distance, commonly called edit distance. It measures the minimum number of single-character insertions, deletions, and substitutions needed to transform one string into the other.

The Ratcliff/Obershelp algorithm (also known as gestalt pattern matching) compares two strings by finding their longest common substring and then recursively repeating the search on the unmatched pieces to its left and right. The similarity score is twice the total number of matched characters divided by the combined length of both strings, so it ranges from 0 (nothing in common) to 1 (identical).

The Jaccard distance is another way to compare two texts. Each document is reduced to a set of items (terms or words), and the Jaccard index is the number of shared items divided by the number of distinct items across both sets. The Jaccard distance is one minus this index, so the more items the documents share, the higher their similarity and the lower their distance.

Cosine similarity is commonly used to measure how similar documents are by representing each one as a vector of term weights, often term frequency-inverse document frequency (TF-IDF) scores. It computes the cosine of the angle between the two vectors; with the non-negative weights used for text, the score ranges from 0 (no weighted terms in common) to 1 (terms used in the same proportions).

In conclusion, comparing texts is becoming increasingly important in today’s world, where vast amounts of data continuously flood the internet. As such, the use of text difference algorithms cannot be overemphasized. These techniques are designed to provide efficient and accurate results, effectively saving time and resources.


Introduction

When it comes to comparing two or more documents, text difference algorithms come in handy. These algorithms analyze the similarities and differences between two texts and provide a comparison report. In this article, we will explore 10 different text difference algorithm techniques that you can use to compare documents.

Method 1: Longest Common Subsequence (LCS)

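LCS compares two sequences and identifies the longest sequence of elements (characters, words, or lines) that appears in both in the same order, though not necessarily contiguously. Line-based diff tools, such as those that version control systems like Git and SVN use to show changes between two versions of a codebase, are built on this idea or on the closely related shortest-edit-script formulation.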
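A minimal Python sketch of the classic dynamic-programming approach follows; the function name `lcs` is illustrative. It fills a table of LCS lengths for every pair of prefixes and then walks back from the bottom-right corner to recover one longest common subsequence. It accepts strings or lists (for example, lists of lines).

```python
def lcs(a, b):
    """Return one longest common subsequence of a and b as a list."""
    m, n = len(a), len(b)
    # dp[i][j] = length of the LCS of a[:i] and b[:j]
    dp = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            if a[i - 1] == b[j - 1]:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
    # Walk back from the bottom-right corner to recover one LCS.
    out = []
    i, j = m, n
    while i > 0 and j > 0:
        if a[i - 1] == b[j - 1]:
            out.append(a[i - 1])
            i, j = i - 1, j - 1
        elif dp[i - 1][j] >= dp[i][j - 1]:
            i -= 1
        else:
            j -= 1
    return out[::-1]


print("".join(lcs("ABCBDAB", "BDCABA")))  # BCBA
```

Both the time and memory costs are proportional to the product of the two input lengths, which is why practical diff tools use faster specialized algorithms.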

Method 2: Levenshtein Distance

Levenshtein distance measures the number of single-character edits (insertions, deletions, or substitutions) needed to transform one string into another. It is commonly used in spell checking, where candidate corrections are the dictionary words with the smallest distance to a misspelled word.
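A minimal Python sketch of the standard dynamic-programming computation is shown below (the function name is illustrative). To save memory, it keeps only two rows of the distance table at a time.

```python
def levenshtein(s, t):
    """Minimum number of single-character insertions, deletions, and substitutions."""
    if len(s) < len(t):
        s, t = t, s  # keep the row as short as possible
    prev = list(range(len(t) + 1))  # distances from "" to each prefix of t
    for i, cs in enumerate(s, start=1):
        curr = [i]  # distance from s[:i] to ""
        for j, ct in enumerate(t, start=1):
            curr.append(min(prev[j] + 1,                 # deletion
                            curr[j - 1] + 1,             # insertion
                            prev[j - 1] + (cs != ct)))   # substitution or match
        prev = curr
    return prev[-1]


print(levenshtein("kitten", "sitting"))  # 3
```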

Method 3: Jaccard Index

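The Jaccard index measures the similarity of two sets as the size of their intersection divided by the size of their union. For text comparison, each document is reduced to its set of distinct words (or n-grams), so the index reflects vocabulary overlap; it ignores how often each word occurs.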
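Below is a minimal Python sketch, assuming that documents are reduced to sets of lowercase words extracted with a simple regular expression. The helper names are illustrative. The last example shows that repeated words do not change the score.

```python
import re


def word_set(text):
    """Distinct lowercase words in the text."""
    return set(re.findall(r"\w+", text.lower()))


def jaccard_index(a, b):
    """|A intersect B| / |A union B| over the word sets of two texts."""
    sa, sb = word_set(a), word_set(b)
    if not sa and not sb:
        return 1.0  # two empty texts are treated as identical
    return len(sa & sb) / len(sa | sb)


def jaccard_distance(a, b):
    return 1.0 - jaccard_index(a, b)


print(jaccard_index("the cat sat", "the cat ran"))  # 0.5
print(jaccard_index("a a b", "a b b"))              # 1.0: frequency is ignored
```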

Method 4: Cosine Similarity

Cosine similarity measures the cosine of the angle between two vectors in a multidimensional space. In text comparison, each document is represented as a vector of term counts or weights, and the smaller the angle between two vectors, the more similar the documents.
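Here is a minimal Python sketch that uses raw term counts as the vector weights. Swapping in TF-IDF weights is a common refinement that is not shown here, and the function names are illustrative. Because the vectors are normalized, repeating a document does not change its score.

```python
import math
import re
from collections import Counter


def term_vector(text):
    """Sparse vector of raw term counts."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine_similarity(a, b):
    va, vb = term_vector(a), term_vector(b)
    dot = sum(count * vb[term] for term, count in va.items())
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


print(round(cosine_similarity("a b", "a c"), 3))              # 0.5
print(round(cosine_similarity("cat dog", "cat dog cat dog"), 3))  # 1.0
```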

Method 5: Ratcliff/Obershelp Algorithm

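This algorithm finds the longest common substring of two strings, then recursively does the same for the unmatched pieces to its left and right. The score is the number of matched characters doubled and divided by the total number of characters in both strings, a value between 0 and 1 that is often shown as a percentage. The basic algorithm's worst-case running time is cubic, so it is best suited to short and medium-length texts.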
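The following minimal Python sketch follows the description above directly; the function names are illustrative. Python's standard `difflib.SequenceMatcher.ratio()` is based on a refinement of this approach and is what most Python code would use in practice.

```python
def longest_common_substring(a, b):
    """(start_in_a, start_in_b, length) of the first longest common substring."""
    best = (0, 0, 0)
    prev = [0] * (len(b) + 1)  # prev[j]: common suffix length ending at a[i-2], b[j-1]
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                curr[j] = prev[j - 1] + 1
                if curr[j] > best[2]:
                    best = (i - curr[j], j - curr[j], curr[j])
        prev = curr
    return best


def matching_characters(a, b):
    """Anchor on the longest common substring, then recurse on both sides."""
    i, j, k = longest_common_substring(a, b)
    if k == 0:
        return 0
    return (k
            + matching_characters(a[:i], b[:j])
            + matching_characters(a[i + k:], b[j + k:]))


def ratcliff_obershelp(a, b):
    total = len(a) + len(b)
    return 1.0 if total == 0 else 2 * matching_characters(a, b) / total


print(round(ratcliff_obershelp("WIKIMEDIA", "WIKIMANIA"), 3))  # 0.778
```

In the example, "WIKIM" matches first, and then "IA" matches in the right-hand remainder. That gives 7 matched characters and a score of 14/18.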

Method 6: FuzzyWuzzy

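FuzzyWuzzy is a Python library that builds on Levenshtein-style string similarity and adds scorers for non-exact matches: `partial_ratio` matches a short string against the best-matching part of a longer one, `token_sort_ratio` ignores word order, and `token_set_ratio` is tolerant of extra or repeated words. It handles spelling mistakes and word transpositions well, but because it compares characters only, it cannot recognize synonyms.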
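The library itself exposes these scorers as functions such as `fuzz.ratio` and `fuzz.partial_ratio`. The minimal Python sketch below does not call FuzzyWuzzy. Instead, it approximates the two scores with the standard library's `difflib.SequenceMatcher`, so its numbers may differ slightly from the library's. The function names here are illustrative, and the brute-force window scan is a simplification.

```python
from difflib import SequenceMatcher


def ratio(a, b):
    """0-100 similarity, in the spirit of fuzz.ratio."""
    return round(100 * SequenceMatcher(None, a, b).ratio())


def partial_ratio(a, b):
    """Best ratio of the shorter string against equal-length windows of the longer."""
    short, long_ = (a, b) if len(a) <= len(b) else (b, a)
    if not short:
        return 100 if not long_ else 0
    return max(ratio(short, long_[start:start + len(short)])
               for start in range(len(long_) - len(short) + 1))


print(ratio("this is a test", "this is a test!"))       # 97
print(partial_ratio("YANKEES", "NEW YORK YANKEES"))     # 100
```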

Method 7: Token Sort Ratio

Token sort ratio, one of FuzzyWuzzy's scorers, splits each string into words, sorts them alphabetically, rejoins them, and then computes the similarity ratio on the sorted strings. Because word order no longer matters, it suits product names or titles that contain the same words in a different order.
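The sketch below is a minimal standard-library approximation of the idea, not FuzzyWuzzy's own code. It assumes that words are runs of word characters and uses `difflib.SequenceMatcher` for the final ratio. The function name is illustrative.

```python
import re
from difflib import SequenceMatcher


def token_sort_ratio(a, b):
    """Sort the words of each string, then score the sorted strings on a 0-100 scale."""
    def normalize(s):
        return " ".join(sorted(re.findall(r"\w+", s.lower())))
    return round(100 * SequenceMatcher(None, normalize(a), normalize(b)).ratio())


print(token_sort_ratio("New York Mets vs Atlanta Braves",
                       "Atlanta Braves vs New York Mets"))  # 100
```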

Method 8: N-Gram

An n-gram is a contiguous run of n items, either characters or words. This technique splits each text into overlapping n-grams, counts how often each one occurs, and scores similarity by the overlap of the two n-gram profiles.
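The minimal Python sketch below scores the overlap as a weighted Jaccard measure: shared counts divided by combined counts. This is one of several reasonable scoring choices, and the function names are illustrative. The `unit` parameter switches between character and word n-grams.

```python
from collections import Counter


def ngrams(text, n=3, unit="char"):
    """Count overlapping n-grams of characters (unit="char") or words (unit="word")."""
    items = list(text.lower()) if unit == "char" else text.lower().split()
    return Counter(tuple(items[i:i + n]) for i in range(len(items) - n + 1))


def ngram_similarity(a, b, n=3, unit="char"):
    """Weighted overlap: shared n-gram counts divided by combined n-gram counts."""
    ga, gb = ngrams(a, n, unit), ngrams(b, n, unit)
    union = sum((ga | gb).values())  # per-n-gram maximum of the two counts
    if union == 0:
        # Both texts are too short to form a single n-gram.
        return 1.0 if a.lower() == b.lower() else 0.0
    return sum((ga & gb).values()) / union  # per-n-gram minimum


print(ngram_similarity("night", "nacht", n=2))  # 1/7: only "ht" is shared
print(ngram_similarity("the cat sat on the mat", "the cat sat on a mat", n=2, unit="word"))  # 3/7
```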

Method 9: SimHash

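SimHash condenses each document into a short fingerprint (typically 64 bits) derived from the hashes of its words or other features. Unlike an ordinary hash, similar documents produce fingerprints that differ in only a few bit positions, so near-duplicates can be found by comparing Hamming distances.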
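Below is a minimal Python sketch of the SimHash construction. It makes several assumptions that are choices for illustration: the features are lowercase words, each word is weighted by its frequency, and each word is hashed with BLAKE2b from `hashlib`. With this hash, `bits` must be a multiple of 8 and no larger than 512. The function names are illustrative.

```python
import hashlib
import re
from collections import Counter


def simhash(text, bits=64):
    """Fingerprint built from word hashes, weighted by word frequency."""
    weights = Counter(re.findall(r"\w+", text.lower()))
    totals = [0] * bits
    for word, weight in weights.items():
        digest = hashlib.blake2b(word.encode("utf-8"), digest_size=bits // 8).digest()
        h = int.from_bytes(digest, "big")
        for i in range(bits):
            # Each bit of the word hash votes +weight (bit set) or -weight (bit clear).
            totals[i] += weight if (h >> i) & 1 else -weight
    # Keep a 1 wherever the votes for that position came out positive.
    return sum(1 << i for i in range(bits) if totals[i] > 0)


def hamming_distance(x, y):
    """Number of bit positions in which two fingerprints differ."""
    return bin(x ^ y).count("1")


a = simhash("the quick brown fox jumps over the lazy dog")
b = simhash("the quick brown fox jumped over the lazy dog")
print(hamming_distance(a, b))  # small for near-duplicates, around bits/2 for unrelated text
```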

Method 10: Latent Semantic Analysis (LSA)

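LSA builds a term-document matrix from the input documents and uses singular value decomposition (SVD) to reduce it to a small number of latent dimensions, which roughly correspond to topics. Documents are then compared in this reduced space, which can reveal similarity between texts that discuss related topics in different words.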
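To keep the example dependency-free, this minimal pure-Python sketch uses power iteration on AᵀA to obtain the top singular directions rather than calling a full SVD routine. Real projects would normally use `numpy.linalg.svd` or scikit-learn's `TruncatedSVD`. The sketch weights terms by raw counts (TF-IDF is common in practice), and all of its function names are illustrative.

```python
import math
from collections import Counter


def term_document_matrix(docs):
    """Rows are terms, columns are documents; entries are raw term counts."""
    counts = [Counter(doc.lower().split()) for doc in docs]
    vocab = sorted(set().union(*counts))
    return [[c[term] for c in counts] for term in vocab]


def top_eigenpairs(m, k, iterations=200):
    """Top-k eigenpairs of a symmetric PSD matrix via power iteration and deflation."""
    n = len(m)
    m = [[float(x) for x in row] for row in m]  # copy; deflated in place below
    pairs = []
    for _ in range(k):
        v = [float(i + 1) for i in range(n)]  # deterministic, non-uniform start
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iterations):
            w = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:  # nothing left in the remaining spectrum
                break
            v = [x / norm for x in w]
        # The Rayleigh quotient v^T M v is the eigenvalue for unit vector v.
        lam = sum(v[i] * sum(m[i][j] * v[j] for j in range(n)) for i in range(n))
        pairs.append((lam, v))
        # Deflate: subtract this component so the next pass finds the next one.
        for i in range(n):
            for j in range(n):
                m[i][j] -= lam * v[i] * v[j]
    return pairs


def lsa_document_vectors(docs, k=2):
    """Coordinates of each document in a k-dimensional latent space."""
    a = term_document_matrix(docs)
    n_docs = len(docs)
    # A^T A (documents x documents): its eigenvectors are the right singular
    # vectors V of A, and its eigenvalues are the squared singular values.
    ata = [[sum(row[i] * row[j] for row in a) for j in range(n_docs)]
           for i in range(n_docs)]
    pairs = top_eigenpairs(ata, k)
    # Document j's coordinate on component i is sigma_i * V[j, i].
    return [[math.sqrt(max(lam, 0.0)) * v[j] for lam, v in pairs]
            for j in range(n_docs)]


def cosine(u, v):
    dot = sum(x * y for x, y in zip(u, v))
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(y * y for y in v))
    return dot / (nu * nv) if nu and nv else 0.0


vecs = lsa_document_vectors(["cat dog pet", "dog cat animal", "stock market trade"], k=2)
print(round(cosine(vecs[0], vecs[1]), 3), round(cosine(vecs[0], vecs[2]), 3))
```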

Comparison Table of 10 Text Difference Algorithm Techniques

| Algorithm | Pros | Cons |
|---|---|---|
| LCS | Produces an exact alignment; good for tracking code changes. | The basic dynamic-programming approach takes time proportional to the product of the input lengths; does not handle fuzzy matches well. |
| Levenshtein Distance | Robust and handles spelling errors well. | Quadratic running time makes it slow for large texts. |
| Jaccard Index | Simple and fast; scales well to large collections of documents. | Ignores word order and word frequency. |
| Cosine Similarity | Normalizes for document length; TF-IDF weighting highlights distinctive keywords. | Bag-of-words vectors ignore word order and do not recognize synonyms. |
| Ratcliff/Obershelp Algorithm | Intuitive 0-to-1 score; works well for short and medium-length strings. | Worst-case running time is cubic, so it can be slow for very large texts. |
| FuzzyWuzzy | Handles spelling errors, word reordering, and partial matches well. | Purely character-based, so it has no notion of synonyms; can be slow across large numbers of comparisons. |
| Token Sort Ratio | Good for comparing product names and titles that contain the same words in a different order. | Less useful for long texts, where sorting discards meaningful word order. |
| N-Gram | Tolerates small edits and captures local word order. | Results depend on the choice of n, and the number of n-grams grows with text length and the number of documents. |
| SimHash | Compact fingerprints make near-duplicate detection fast, even across very large collections. | Designed for near-duplicates; fingerprint distances say little about moderately similar texts. |
| LSA | Captures latent topics, so related texts can match even when they use different words. | Computationally expensive, needs a sizable corpus, and its latent dimensions can be hard to interpret. |

Conclusion

There are many text difference algorithms to choose from. Each has its pros and cons, and the best approach depends on the task at hand. Choosing the right algorithm requires an understanding of the text data, its structure, and the challenges it may pose. By matching the algorithm carefully to the data, you can produce accurate document comparisons and improve your overall analysis of text data.

Thank you for taking the time to read about the 10 Text Difference Algorithm Techniques to Help Compare Documents. We hope that you found this article informative and that it shed some light on the different ways in which documents can be compared. Whether you are in the field of computer science or not, being able to find differences between two or more documents is a valuable skill that can greatly benefit you in various areas of work and research.

As we have discussed in this article, there are several techniques that can be used to compare documents, including the Levenshtein distance, Ratcliff/Obershelp similarity, and Longest Common Subsequence. These techniques rely on algorithms and mathematical calculations to determine the differences between texts, and each has its own strengths and weaknesses.

We hope that you gained a better understanding of these techniques and how they work. In today’s world where vast amounts of text are created and shared every day, the ability to quickly and accurately compare documents can be incredibly useful. We encourage you to try using some of these techniques in your own work and see how they can help you to be more efficient and effective in your tasks.

- What are text difference algorithm techniques?

- Why do we need to compare documents using text difference algorithm techniques?

- What is the importance of text difference algorithm techniques in document comparison?

- What are the different types of text difference algorithm techniques?

- How do text difference algorithm techniques work?

- Levenshtein Distance Algorithm

- Longest Common Subsequence Algorithm

- Longest Common Prefix Algorithm

- Greedy String Tiling Algorithm

- Smith-Waterman Algorithm

- Wagner-Fischer Algorithm

- Bitap Algorithm

- Rabin-Karp Algorithm

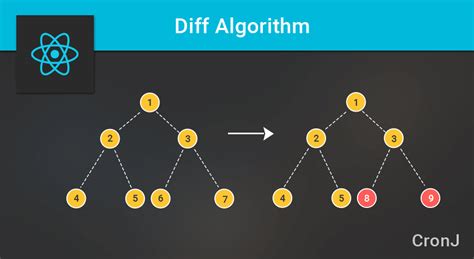

- Diff Algorithm

- Patience Diff Algorithm

Levenshtein Distance Algorithm is a popular algorithm that measures the difference between two texts using the minimum number of operations required to transform one text into another. It is commonly used in spell-checking and data entry applications.

The Longest Common Subsequence Algorithm compares two texts and finds the longest subsequence that is common to both. It underlies the line-based diff tools used in software development and is also used in text analysis.

The Longest Common Prefix Algorithm finds the longest string that two texts (or every string in a set) start with. It is a building block of tries, autocomplete, and suffix-array indexes used in search and text analysis applications.
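Here is a minimal Python sketch for a list of strings; the function name is illustrative. It checks each position of the shortest string until one of the strings disagrees. The standard library's `os.path.commonprefix` does the same character-by-character comparison despite its name.

```python
def longest_common_prefix(strings):
    """Longest string that every item in `strings` starts with."""
    if not strings:
        return ""
    shortest = min(strings, key=len)
    for i, ch in enumerate(shortest):
        if any(s[i] != ch for s in strings):
            return shortest[:i]
    return shortest


print(longest_common_prefix(["interspecies", "interstellar", "interstate"]))  # inters
```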

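The Greedy String Tiling Algorithm finds a set of non-overlapping common substrings (tiles) that together cover as much of both texts as possible, ignoring matches shorter than a chosen minimum length. Because tiles can appear in any order, it detects copied passages even after they have been rearranged, which is why it is used in plagiarism detectors such as JPlag and in other text comparison applications.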
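The minimal Python sketch below works on token lists and follows the basic greedy scheme. Each round finds the longest matches between unmarked tokens, lays them down as tiles unless they overlap a tile placed earlier in that round, and stops once the best remaining match is no longer than the minimum length. The function names and the `2 * covered / total` similarity score are illustrative choices. This brute-force version is slow on long inputs, and faster variants use Rabin-Karp hashing.

```python
def greedy_string_tiling(a, b, min_match=3):
    """Return tiles as (start_in_a, start_in_b, length) tuples."""
    marked_a = [False] * len(a)
    marked_b = [False] * len(b)
    tiles = []
    while True:
        max_match = min_match
        matches = []
        for i in range(len(a)):
            if marked_a[i]:
                continue
            for j in range(len(b)):
                if marked_b[j]:
                    continue
                k = 0
                while (i + k < len(a) and j + k < len(b)
                       and a[i + k] == b[j + k]
                       and not marked_a[i + k] and not marked_b[j + k]):
                    k += 1
                if k == max_match:
                    matches.append((i, j, k))
                elif k > max_match:
                    matches = [(i, j, k)]
                    max_match = k
        for i, j, k in matches:
            # Skip matches that overlap a tile already placed in this round.
            if any(marked_a[i:i + k]) or any(marked_b[j:j + k]):
                continue
            for x in range(k):
                marked_a[i + x] = True
                marked_b[j + x] = True
            tiles.append((i, j, k))
        if max_match == min_match:
            break
    return tiles


def gst_similarity(a, b, min_match=3):
    if not a and not b:
        return 1.0
    covered = sum(k for _, _, k in greedy_string_tiling(a, b, min_match))
    return 2 * covered / (len(a) + len(b))


a = "the quick brown fox jumps over the lazy dog".split()
b = "the lazy dog saw the quick brown fox".split()
print(greedy_string_tiling(a, b))  # [(0, 4, 4), (6, 0, 3)]
```

Both copied phrases are found even though they appear in the opposite order in the second text.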

The Smith-Waterman Algorithm is a dynamic programming algorithm for local alignment of two sequences: it finds the pair of regions, one in each sequence, that align with the highest score. It is widely used in bioinformatics to find similar regions in DNA and protein sequences.
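Here is a minimal Python sketch with a linear gap penalty, assuming a scoring scheme of +3 for a match, -3 for a mismatch, and -2 per gap character. These values are illustrative parameters, not fixed parts of the algorithm. Cell scores are floored at zero, which is what makes the alignment local, and traceback starts from the best-scoring cell.

```python
def smith_waterman(a, b, match=3, mismatch=-3, gap=-2):
    """Return (best_score, aligned_a, aligned_b) for the best local alignment."""
    rows, cols = len(a) + 1, len(b) + 1
    h = [[0] * cols for _ in range(rows)]
    best, best_pos = 0, (0, 0)
    for i in range(1, rows):
        for j in range(1, cols):
            diag = h[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = h[i - 1][j] + gap     # a[i-1] aligned to a gap
            left = h[i][j - 1] + gap   # b[j-1] aligned to a gap
            h[i][j] = max(0, diag, up, left)  # 0 = start a fresh local alignment
            if h[i][j] > best:
                best, best_pos = h[i][j], (i, j)
    # Trace back from the best cell until a zero cell is reached.
    out_a, out_b = [], []
    i, j = best_pos
    while i > 0 and j > 0 and h[i][j] > 0:
        if h[i][j] == h[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch):
            out_a.append(a[i - 1]); out_b.append(b[j - 1]); i, j = i - 1, j - 1
        elif h[i][j] == h[i - 1][j] + gap:
            out_a.append(a[i - 1]); out_b.append("-"); i -= 1
        else:
            out_a.append("-"); out_b.append(b[j - 1]); j -= 1
    return best, "".join(reversed(out_a)), "".join(reversed(out_b))


print(smith_waterman("TGTTACGG", "GGTTGACTA"))  # (13, 'GTT-AC', 'GTTGAC')
```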

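The Wagner-Fischer Algorithm is the classic dynamic-programming method for computing the Levenshtein edit distance between two texts. It fills an (m+1) by (n+1) table in which each cell holds the distance between a prefix of one text and a prefix of the other. It is commonly used in natural language processing and data analysis applications.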
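Keeping the full table, rather than only the last row, lets you trace back through it to recover which edits produce the distance. The following minimal Python sketch does this; the function name and the wording of the operation strings are illustrative. When several edit sequences are equally cheap, it returns just one of them.

```python
def edit_script(source, target):
    """Return (distance, operations) using the full Wagner-Fischer table."""
    m, n = len(source), len(target)
    # d[i][j] = edit distance between source[:i] and target[:j]
    d = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(m + 1):
        d[i][0] = i  # delete all i characters
    for j in range(n + 1):
        d[0][j] = j  # insert all j characters
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            cost = 0 if source[i - 1] == target[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # match or substitution
    # Trace back from the bottom-right cell to recover one cheapest edit sequence.
    ops = []
    i, j = m, n
    while i > 0 or j > 0:
        if i > 0 and j > 0 and d[i][j] == d[i - 1][j - 1] + (source[i - 1] != target[j - 1]):
            if source[i - 1] != target[j - 1]:
                ops.append(f"substitute {source[i - 1]!r} -> {target[j - 1]!r}")
            i, j = i - 1, j - 1
        elif i > 0 and d[i][j] == d[i - 1][j] + 1:
            ops.append(f"delete {source[i - 1]!r}")
            i -= 1
        else:
            ops.append(f"insert {target[j - 1]!r}")
            j -= 1
    ops.reverse()
    return d[m][n], ops


print(edit_script("kitten", "sitting"))
# (3, ["substitute 'k' -> 's'", "substitute 'e' -> 'i'", "insert 'g'"])
```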

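The Bitap Algorithm (also called shift-or or shift-and) is a string matching algorithm that uses bitwise operations to find exact or approximate occurrences of a pattern in a text, tolerating up to a chosen number of errors. It is the algorithm behind the agrep tool and the fuzzy-match function of Google's diff-match-patch library.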
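Below is a minimal Python sketch of approximate Bitap in its shift-and form. It uses the Wu-Manber extension, which allows insertions, deletions, and substitutions. The function name is illustrative. The return convention is also a choice made for this example: it returns `(end_index, errors)` for the leftmost match with the fewest errors, or `None` if nothing matches. Python's unbounded integers stand in for the machine words that real implementations use, which limits patterns to the word size.

```python
def bitap_search(text, pattern, max_errors=0):
    """Leftmost best approximate match of pattern in text as (end_index, errors)."""
    m = len(pattern)
    if m == 0:
        raise ValueError("pattern must be non-empty")
    full = (1 << m) - 1
    accept = 1 << (m - 1)
    masks = {}  # masks[c] has bit i set when pattern[i] == c
    for i, ch in enumerate(pattern):
        masks[ch] = masks.get(ch, 0) | (1 << i)
    # r[d] bit i set: pattern[:i + 1] matches text ending here with <= d errors.
    # Before any text is read, the first d pattern characters can be deleted.
    r = [((1 << d) - 1) & full for d in range(max_errors + 1)]
    best = None
    for pos, ch in enumerate(text):
        mask = masks.get(ch, 0)
        old = r[:]
        r[0] = ((old[0] << 1) | 1) & mask
        for d in range(1, max_errors + 1):
            r[d] = ((((old[d] << 1) | 1) & mask)   # next pattern char matches
                    | ((old[d - 1] << 1) | 1)      # substitution
                    | ((r[d - 1] << 1) | 1)        # pattern char missing from text
                    | old[d - 1]) & full           # extra char in text
        for d in range(max_errors + 1):
            if r[d] & accept:
                if best is None or d < best[1]:
                    best = (pos, d)
                break
    return best


print(bitap_search("the quick brown fox", "brown"))      # (14, 0)
print(bitap_search("the quick brown fox", "quack", 1))   # (8, 1)
```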

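The Rabin-Karp Algorithm is a string matching algorithm that uses a rolling hash to find exact occurrences of a pattern (or of many patterns at once) in a text, confirming each hash match with a direct comparison. Its efficiency at searching for many patterns simultaneously makes it common in plagiarism detection and data analysis applications.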
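The minimal Python sketch below searches for a single pattern. The base and modulus are illustrative choices, and the function name is hypothetical. The hash of each window is updated in constant time as the window slides, and every hash match is verified so that collisions cannot produce false positives.

```python
def rabin_karp(text, pattern, base=256, mod=1_000_000_007):
    """Return every start index where pattern occurs in text."""
    n, m = len(text), len(pattern)
    if m == 0 or m > n:
        return []
    high = pow(base, m - 1, mod)  # weight of the window's leading character
    p_hash = t_hash = 0
    for i in range(m):
        p_hash = (p_hash * base + ord(pattern[i])) % mod
        t_hash = (t_hash * base + ord(text[i])) % mod
    hits = []
    for i in range(n - m + 1):
        # Compare characters only when the hashes agree.
        if p_hash == t_hash and text[i:i + m] == pattern:
            hits.append(i)
        if i < n - m:
            # Roll the hash: drop text[i], append text[i + m].
            t_hash = ((t_hash - ord(text[i]) * high) * base + ord(text[i + m])) % mod
    return hits


print(rabin_karp("abracadabra", "abra"))  # [0, 7]
```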

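The Diff Algorithm compares two texts, usually line by line, and computes a minimal set of insertions and deletions (an edit script) that turns one into the other. GNU diff and Git's default diff use Eugene Myers' O(ND) algorithm, where D is the size of the edit script, so they are fast when the two files are similar. Diff is commonly used in version control systems and software development applications.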
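Here is a minimal Python sketch of Myers' greedy algorithm, which works on any two sequences (for a line-based diff, pass lists of lines). It records the furthest-reaching point on each diagonal, round by round, until it reaches the end of both inputs, and then walks the recorded rounds backwards to rebuild the edit script. The function names and the `("=", item)` / `("-", item)` / `("+", item)` output format are illustrative. Production tools add heuristics and a linear-space refinement that are not shown.

```python
def myers_diff(a, b):
    """Shortest edit script as ("=", item), ("-", item), ("+", item) tuples."""
    n, m = len(a), len(b)
    v = {1: 0}   # v[k] = furthest x reached on diagonal k (k = x - y)
    trace = []   # snapshot of v at the start of each round d
    for d in range(n + m + 1):
        trace.append(dict(v))
        for k in range(-d, d + 1, 2):
            # Step down (insert from b) or right (delete from a), whichever
            # starts further along.
            if k == -d or (k != d and v[k - 1] < v[k + 1]):
                x = v[k + 1]
            else:
                x = v[k - 1] + 1
            y = x - k
            while x < n and y < m and a[x] == b[y]:  # free moves over equal items
                x, y = x + 1, y + 1
            v[k] = x
            if x >= n and y >= m:
                return _backtrack(trace, a, b)
    return []  # not reached: d = n + m always suffices


def _backtrack(trace, a, b):
    x, y = len(a), len(b)
    edits = []
    for d in range(len(trace) - 1, -1, -1):
        v = trace[d]
        k = x - y
        prev_k = k + 1 if k == -d or (k != d and v[k - 1] < v[k + 1]) else k - 1
        prev_x = v[prev_k]
        prev_y = prev_x - prev_k
        while x > prev_x and y > prev_y:  # undo the run of equal items
            edits.append(("=", a[x - 1]))
            x, y = x - 1, y - 1
        if d > 0:
            if x == prev_x:
                edits.append(("+", b[y - 1]))  # came down: insertion
            else:
                edits.append(("-", a[x - 1]))  # came right: deletion
        x, y = prev_x, prev_y
    edits.reverse()
    return edits


for op, item in myers_diff(list("ABCABBA"), list("CBABAC")):
    print(op, item)
```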

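The Patience Diff Algorithm is a diff variant that first matches lines occurring exactly once in each file, keeps the longest run of those unique lines that appear in the same order in both (found with patience sorting), and then recursively diffs the regions between them. Anchoring on distinctive lines tends to produce more readable diffs for source code, and Git offers it through `git diff --patience`. It is commonly used in software development applications.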
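The minimal Python sketch below covers only the step that distinguishes patience diff: it finds the unique common lines and selects the longest same-order run of them with patience sorting. A full implementation would then recurse between consecutive anchors and fall back to an ordinary diff where no unique lines exist. The function names are illustrative.

```python
from bisect import bisect_left
from collections import Counter


def unique_common_pairs(a, b):
    """(i, j) pairs for lines that occur exactly once in a and exactly once in b."""
    count_a, count_b = Counter(a), Counter(b)
    pos_b = {line: j for j, line in enumerate(b) if count_b[line] == 1}
    return [(i, pos_b[line]) for i, line in enumerate(a)
            if count_a[line] == 1 and line in pos_b]


def patience_anchors(a, b):
    """Longest run of unique common lines that appear in the same order in both."""
    pairs = unique_common_pairs(a, b)  # already sorted by position in a
    pile_tops = []   # index into pairs of each pile's top card
    top_values = []  # position in b of each pile's top card
    back = [None] * len(pairs)  # link to the top of the previous pile
    for idx, (_, j) in enumerate(pairs):
        pile = bisect_left(top_values, j)  # leftmost pile whose top is >= j
        back[idx] = pile_tops[pile - 1] if pile > 0 else None
        if pile == len(pile_tops):
            pile_tops.append(idx)
            top_values.append(j)
        else:
            pile_tops[pile] = idx
            top_values[pile] = j
    anchors = []
    idx = pile_tops[-1] if pile_tops else None
    while idx is not None:  # follow links back from the last pile
        anchors.append(pairs[idx])
        idx = back[idx]
    return anchors[::-1]


print(patience_anchors(list("ABCD"), list("BACD")))  # [(1, 0), (2, 2), (3, 3)]
```

Lines such as a lone closing brace usually occur many times in a file, so they are never used as anchors. This is part of why patience diff avoids the misaligned hunks that plain diffs sometimes produce on code.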