Exporting data and tables from PySpark to CSV can be a frustrating problem for Python developers. The good news is that with five essential tips, you can export a table DataFrame in PySpark to CSV without much hassle. This article aims to take some of the complexity out of exporting files in PySpark.

If you regularly work with large datasets in PySpark, you need to know how to export data to CSV efficiently. This article explains how to do that with minimal effort. It outlines the most effective tips for making the process easier and for making sure your data is exported accurately.

The step-by-step guide in this article is suitable for both beginner and advanced Python developers. You do not need any prior knowledge of exporting DataFrames to CSV in PySpark, because the instructions and explanations are designed to be easy to follow.

If you want to streamline your workflow and reduce the headache of exporting CSV files in PySpark, read on to the end. These tips will help you replace lengthy, complex export procedures with a few simple steps.

Whether you are an individual developer or an organization, dealing with large datasets and files can be time-consuming and complex. Fortunately, our article on ‘5 Python Tips: How to Export a Table DataFrame in PySpark to CSV’ is formulated to help you overcome these issues swiftly and easily. With clear and concise explanations backed up by helpful examples and step-by-step instructions, our article will take the stress out of exporting data in PySpark. Read on and master the art of exporting CSV files like a pro.

“How To Export A Table Dataframe In Pyspark To Csv?” ~ bbaz

Introduction

Exporting data and tables from PySpark to a CSV file can seem daunting for Python developers, but with the right tips it is very achievable. This article covers five essential tips that will help you export a table DataFrame in PySpark to CSV without hassle.

Efficient Data Export in PySpark

When dealing with large datasets in PySpark, knowing how to efficiently export data to CSV becomes crucial. We will explain how to achieve this with minimal effort in the next sections. With these tips, exporting data and ensuring its accuracy become much easier and more effective.

Tip #1: Exporting as a Single File

By default, Spark writes a CSV export as a folder containing one part file per partition. Reducing the DataFrame to a single partition with `coalesce(1)` before writing produces a single part file, which is easier to share and load elsewhere than many smaller ones and reduces the chance of mistakes when handling numerous files downstream. The trade-off is that all rows are written by a single task, so this approach works best for data that comfortably fits on one executor.

Tip #2: Proper Table Formatting

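Before exporting, structure the table so that the CSV matches what its consumers expect: select and order only the columns you need, give them clear names, and set the CSV writer options that control the header row, delimiter, quoting, and how null values are written. Getting these settings right up front means the data is exported exactly as intended and saves you from reformatting the file later.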

Tip #3: Efficient Data Loading

Efficient data loading means that exported data can be written and then read into the target program without unnecessary delays or slowdowns. Tuning the relevant settings can reduce I/O and loading time: for example, compressing the output shrinks the files on disk, and supplying an explicit schema when the CSV is read back avoids the extra pass over the data that schema inference requires.

Tip #4: Managing Data Types

A critical aspect of exporting data is managing data types carefully. CSV stores every value as text, so an unsuitable type can lead to errors or discrepancies that are time-consuming to fix. Dates and timestamps should be written in a predictable format, and nested types such as arrays, maps, and structs are not supported by Spark's CSV writer, so they must be converted to strings before export.

Tip #5: Test and Review Data

Test and review your data before exporting it to CSV. Checking the schema, row count, null values, and duplicates helps you detect inconsistencies and errors while they are still easy to fix. It also saves time by getting the export right the first time, instead of repeating it after problems surface.

Beginner and Advanced Friendly Guide

This step-by-step guide is suitable for beginners and advanced users alike. Whatever your level of expertise, the instructions and explanations should help you produce accurate CSV exports from PySpark.

The Benefits of Exporting Data with PySpark

| Benefit | Description |
|---|---|
| Increased efficiency | PySpark processes and writes data in parallel, which saves time and reduces error-prone manual handling when working with large datasets. This makes it a strong choice for big data analysis and management. |
| Data management and control | PySpark can be incorporated into existing data management and control infrastructure. It is built to handle huge volumes of data, so users can analyze them at scale. |
| Flexibility | PySpark runs in many environments, such as local scripts, notebooks, and clusters, so developers can work where they prefer. This makes collaboration and sharing data between teams easier. |

Conclusion

This article gave PySpark developers essential tips for exporting a table DataFrame to CSV quickly, efficiently, and reliably, in a step-by-step form suited to both beginners and advanced users. With these tips, you can streamline your workflow and manage large datasets more easily. Exporting data with PySpark also brings increased efficiency, better data management and control, and greater flexibility in managing data projects.


When it comes to exporting a table DataFrame in PySpark to CSV, many people have questions. Here are five common questions about this process, along with answers:


What is PySpark?

PySpark is the Python API for Apache Spark, an open-source big data processing framework. It allows you to write Spark applications using Python instead of Scala or Java.


How do I import PySpark?

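You can install PySpark with `pip install pyspark` and then import it in your Python script. If you are instead using a separately downloaded Spark distribution, the third-party `findspark` package can locate it before the import:

```python
import findspark  # only needed for a non-pip Spark installation
findspark.init()  # uses SPARK_HOME to put PySpark on sys.path

import pyspark
```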


How do I create a DataFrame in PySpark?

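You can create a DataFrame in PySpark by first creating an RDD (Resilient Distributed Dataset) and then converting it to a DataFrame. Here's an example, where `sc` is the SparkContext of an active SparkSession:

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()
sc = spark.sparkContext
rdd = sc.parallelize([(1, "John"), (2, "Jane"), (3, "Bob")])
df = rdd.toDF(["id", "name"])
```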

-

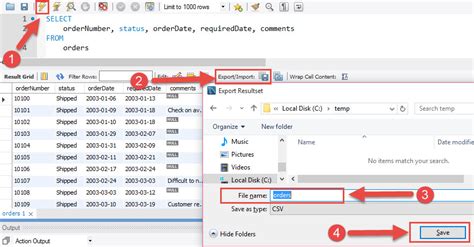

How do I export a DataFrame in PySpark to CSV?

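You can export a DataFrame in PySpark to CSV with the `write.csv()` method. Here's an example:

```python
df.write.csv("path/to/output/folder", header=True)
```

The path names an output folder rather than a single file: Spark writes one part file per partition inside it.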


What are some tips for exporting a DataFrame in PySpark to CSV?

Here are five helpful tips:

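- Specify the path to the output folder where you want the CSV data to be saved. Spark treats this path as a directory, not a file name.
- Include the `header=True` argument to write column headers to the CSV file.
- Use the `coalesce()` function to reduce the number of output files, for example `coalesce(1)` for a single file. On a large DataFrame, keep in mind that this funnels the data through fewer tasks and can slow the write down.
- If you run into memory errors, try increasing the memory allocated to Spark by setting the `spark.driver.memory` and `spark.executor.memory` configuration properties. These are applied when the application starts (for example via `spark-submit --driver-memory` and `--executor-memory`, or `spark-defaults.conf`), not on an already running session.
- Finally, double-check the output file to make sure it looks as expected!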