Goal

- To analyze Aadhar data using Hadoop and extract meaningful information that supports better decision-making by the central and state governments.

Project Overview

India, the world's largest democracy, is the second most populous country, with 1.3 billion people. Of these, 99% of the adult population has enrolled for Aadhar, the unique identity issued by the Government of India for various purposes. The government maintains Aadhar-related data in digital format. The website https://data.uidai.gov.in/uiddatacatalog/dataCatalogHome.do provides access to Aadhar card related data sets. The public can access some of these data resources and analyze them to extract useful information and generate reports.

The data set covers more than 99% of the adult population of the country, so the volume of data generated by Aadhar is very large. Not all of the data collected for this unique identity is structured; it also includes unstructured and semi-structured data. Enrollment is also still in progress, so new data is generated at high velocity. These characteristics (volume, variety and velocity) place it under the big data concept.

Hadoop is designed to store and process large amounts of data, so this project uses Hadoop to process Aadhar data. The input data is processed using MapReduce, and the result is then loaded into the Hadoop Distributed File System (HDFS). Final reports are generated using Tableau (business intelligence software).
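To illustrate the MapReduce step, here is a minimal sketch in the style of Hadoop Streaming, simulated locally so it runs without a cluster. It assumes comma-separated records in which the third field is the state and the ninth field is the number of Aadhar cards generated; these column positions are hypothetical and should be adjusted to the actual file. The mapper emits `key<TAB>value` lines, a sort stands in for Hadoop's shuffle, and the reducer sums consecutive values that share a key.

```python
import csv
from itertools import groupby
from typing import Iterable, Iterator

STATE_COL = 2       # hypothetical: position of the State column
GENERATED_COL = 8   # hypothetical: position of the "Aadhaar generated" column


def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Map phase: emit one 'state<TAB>count' pair per well-formed record."""
    for row in csv.reader(lines):
        try:
            yield f"{row[STATE_COL].strip()}\t{int(row[GENERATED_COL])}"
        except (IndexError, ValueError):
            continue  # header line or malformed record


def reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Reduce phase: sum the counts of consecutive lines sharing a key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for state, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{state}\t{sum(int(count) for _, count in group)}"


def run_local(lines: Iterable[str]) -> list[str]:
    """Stand-in for Hadoop's shuffle/sort: order mapper output by key, then reduce."""
    shuffled = sorted(mapper(lines), key=lambda line: line.split("\t", 1)[0])
    return list(reducer(shuffled))
```

On a real cluster, the mapper and reducer would each be a small script reading `sys.stdin`, passed to the Hadoop Streaming jar through its `-mapper` and `-reducer` options; Hadoop itself performs the sort between the two phases.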

Proposed System

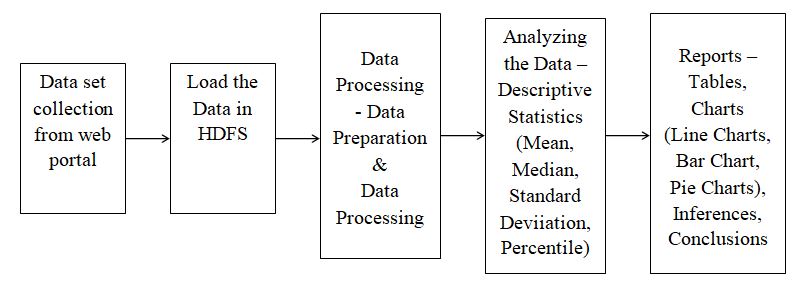

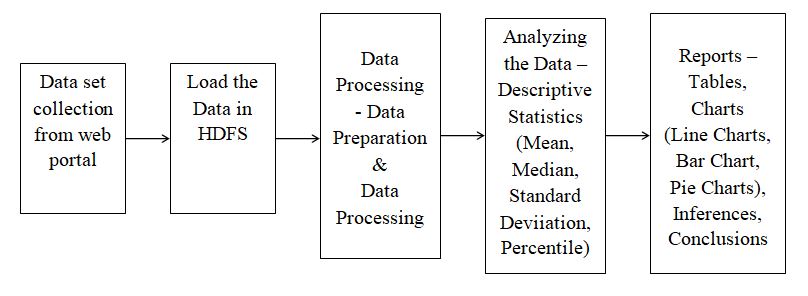

The proposed system focuses on analyzing Aadhar-related data using Hadoop to support better decision-making by the Government of India. The proposed system architecture is shown in the figure.

Step 1: Data Preparation

Data Selection: The required data set is collected from the government web portal.

Data Loading: The collected data set is loaded into the Hadoop Distributed File System (HDFS) environment.

Data Preprocessing: The collected data set may contain missing values and noisy data. Analysis performed on such data could lead to incorrect results, so the data set is preprocessed first.
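A minimal sketch of the preprocessing step, assuming a CSV file whose header contains the columns listed in `REQUIRED` (assumed names) and assuming the gender codes `M`, `F` and `T`. The rules shown (drop rows with missing fields, unknown categories, non-numeric or out-of-range values) are illustrative choices, not the project's confirmed cleaning rules.

```python
import csv
from typing import Iterable, Iterator

# Assumed column names; adjust to the header of the downloaded file.
REQUIRED = ("State", "Gender", "Age", "Aadhaar generated", "Enrolment Rejected")
NUMERIC = ("Age", "Aadhaar generated", "Enrolment Rejected")
VALID_GENDERS = {"M", "F", "T"}  # assumed gender codes


def clean_records(lines: Iterable[str]) -> Iterator[dict]:
    """Yield cleaned records, dropping rows with missing or noisy values."""
    for row in csv.DictReader(lines):
        # Trim whitespace; fields missing from a short row come back as None,
        # and surplus fields (key None) are ignored.
        rec = {k.strip(): (v or "").strip() for k, v in row.items() if k is not None}
        if any(not rec.get(col) for col in REQUIRED):
            continue  # missing value
        rec["Gender"] = rec["Gender"].upper()
        if rec["Gender"] not in VALID_GENDERS:
            continue  # unknown category code
        try:
            for col in NUMERIC:
                rec[col] = int(rec[col])
        except ValueError:
            continue  # non-numeric age or count
        if not 0 <= rec["Age"] <= 120:
            continue  # implausible age treated as noise
        if rec["Aadhaar generated"] < 0 or rec["Enrolment Rejected"] < 0:
            continue  # negative counts treated as noise
        yield rec
```

Dropping bad rows is the simplest policy; imputing missing values instead would keep more data but requires a justified fill rule.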

Step 2: Data Analysis

Data Analysis: The collected data set is now ready for analysis. Descriptive statistics such as mean, median, mode and percentiles are applied.
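The descriptive statistics named above can be computed with Python's standard `statistics` module. This sketch reports the 90th percentile as one example; the choice of that percentile and of the `"inclusive"` interpolation method are assumptions, and on the full data set these figures would normally be computed from the MapReduce output rather than in memory.

```python
import statistics


def describe(values: list[float]) -> dict[str, float]:
    """Mean, median, mode and 90th percentile of a list of numbers."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        # quantiles(n=10) returns the nine decile cut points; the last is the 90th percentile
        "p90": statistics.quantiles(values, n=10, method="inclusive")[-1],
    }
```

For example, `describe([1, 2, 2, 3, 4, 5, 6, 7, 8, 10])` gives a mean of 4.8, a median of 4.5, a mode of 2 and a 90th percentile of 8.2.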

Step 3: Results

Report Generation: After data analysis, the results need to be visualized. Tableau can be used for this purpose. Bar charts, line charts and pie charts are generated along with tabular output.
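Tableau can connect to plain CSV text files, so one simple hand-off is to convert the tab-separated lines that a MapReduce job writes to its output files into a CSV with a header row. The function below is a hypothetical helper sketching that conversion; the column names are supplied by the caller.

```python
import csv
import io
from typing import Iterable


def to_tableau_csv(reducer_lines: Iterable[str], key_name: str, value_name: str) -> str:
    """Turn 'key<TAB>count' lines into CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow([key_name, value_name])
    for line in reducer_lines:
        key, value = line.rstrip("\n").split("\t", 1)
        writer.writerow([key, int(value)])
    return buf.getvalue()
```

Writing the returned text to a `.csv` file gives Tableau a source from which the bar, line and pie charts can be built.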

Statistical Questions

- Identify the total number of cards approved, gender-wise

- Identify the total number of cards approved, state-wise

- Identify the total number of cards approved, age-wise

- Identify the total number of cards approved in rural areas

- Identify the total number of cards approved in city areas

- Identify the number of cards rejected by the government (state-wise)

- Identify the number of cards rejected by the government (gender-wise)

- Identify the number of cards rejected by the government (age-wise)
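Each of the questions above is a group-and-sum over a different key. The sketch below expresses them as a table of (grouping key, measure) pairs over cleaned records. The field names (`state`, `gender`, `age`, `area`, `approved`, `rejected`), the rural/urban `area` field and the 10-year age bands are all hypothetical; in Hadoop, each entry would correspond to a MapReduce job whose mapper emits that key.

```python
from collections import Counter
from typing import Callable

Record = dict  # hypothetical cleaned record: state, gender, age, area, approved, rejected


def age_band(age: int) -> str:
    """Group ages into hypothetical 10-year bands, e.g. 25 -> '20-29'."""
    low = (age // 10) * 10
    return f"{low}-{low + 9}"


# Question name -> (grouping key, measure to sum). Field names are assumptions.
QUESTIONS: dict[str, tuple[Callable[[Record], str], str]] = {
    "approved_by_gender": (lambda r: r["gender"], "approved"),
    "approved_by_state": (lambda r: r["state"], "approved"),
    "approved_by_age": (lambda r: age_band(r["age"]), "approved"),
    "approved_by_area": (lambda r: r["area"], "approved"),  # rural vs. city areas
    "rejected_by_state": (lambda r: r["state"], "rejected"),
    "rejected_by_gender": (lambda r: r["gender"], "rejected"),
    "rejected_by_age": (lambda r: age_band(r["age"]), "rejected"),
}


def tally(records: list[Record], key: Callable[[Record], str], measure: str) -> dict[str, int]:
    """Sum one measure per group, like a reducer summing values per key."""
    totals: Counter = Counter()
    for rec in records:
        totals[key(rec)] += rec[measure]
    return dict(totals)


def answer_all(records: list[Record]) -> dict[str, dict[str, int]]:
    """Answer every question in QUESTIONS over the same record list."""
    return {name: tally(records, key, measure) for name, (key, measure) in QUESTIONS.items()}
```

Keeping the questions in one table makes it easy to add another breakdown (for example, rejected cards by area) without writing a new aggregation routine.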

Advantages

- The government can immediately take corrective measures for the issues found in the Aadhar card data analysis.

- Central and state governments can take the necessary precautionary measures to avoid such issues in the future.

Software Requirements

- Linux OS

- MySQL

- Hadoop & MapReduce

- Tableau

Hardware Requirements

- Hard Disk – 1 TB or above

- RAM – 8 GB or above

- Processor – Core i3 or above

Technology Used

- Big Data – Hadoop

- Statistics

Source: projectgeek.com