Negative values can be confusing when you evaluate machine learning models. A common example: you cross-validate a regression model in Scikit-Learn using the Mean Squared Error (MSE), and the scores come back negative, even though a squared error can never be below zero. Scikit-Learn is a popular Python library for machine learning. Once you understand its scoring convention, these negative numbers are easy to interpret. In this article, we will explore the Effective Scikit-Learn Cross Validation Method for Handling Negative Values: MSE Explained.

The Mean Squared Error (MSE) is a commonly used metric for evaluating regression models. In Scikit-Learn, mean_squared_error computes it as the average of the squared differences between the predicted and actual values. Because each difference is squared, the MSE ignores the direction of the error and is always zero or positive. Negative values in your target variable are therefore not a problem for the metric itself. You do not need to transform your dataset or remove negative values just to compute the MSE.
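The short sketch below illustrates the point. The target and prediction values are made up for illustration and include negatives. It computes the MSE by hand and with Scikit-Learn's mean_squared_error, and then shows that flipping the sign of every residual leaves the result unchanged.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

# Made-up targets and predictions that include negative values
y_true = np.array([-3.0, -1.0, 0.5, 2.0])
y_pred = np.array([-2.5, -1.5, 1.0, 1.0])

# MSE by hand: the mean of the squared residuals
errors = y_pred - y_true
mse_manual = np.mean(errors ** 2)

# The same value computed by scikit-learn
mse_sklearn = mean_squared_error(y_true, y_pred)

# Squaring removes the sign, so reversing every residual gives the same MSE
mse_flipped = np.mean((-errors) ** 2)

print(mse_manual, mse_sklearn, mse_flipped)
```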

This article walks you through how Scikit-Learn cross-validation reports the MSE and why the scores it returns are negative. You will learn how to implement K-fold cross-validation with Scikit-Learn and how to convert its scores back into ordinary MSE values. Along the way, we will also clear up some common misconceptions about negative values.

If you are a machine learning enthusiast looking for ways to improve your cross-validation techniques, I highly recommend reading this article. The Effective Scikit-Learn Cross Validation Method for Handling Negative Values: MSE Explained offers practical insight into interpreting the negative values that appear in your cross-validation results. By applying these techniques, you can evaluate your machine learning models more reliably and compare them with confidence.


Introduction

A model’s performance always needs to be validated and tested before the model is deployed in any real-world scenario. Cross-validation is one such technique: it evaluates the model on several different subsets of the data. Scikit-Learn is a widely used machine learning library that offers a variety of cross-validation techniques. In this article, we will discuss how Scikit-Learn cross-validation deals with negative values and explain the Mean Squared Error (MSE).

Cross Validation

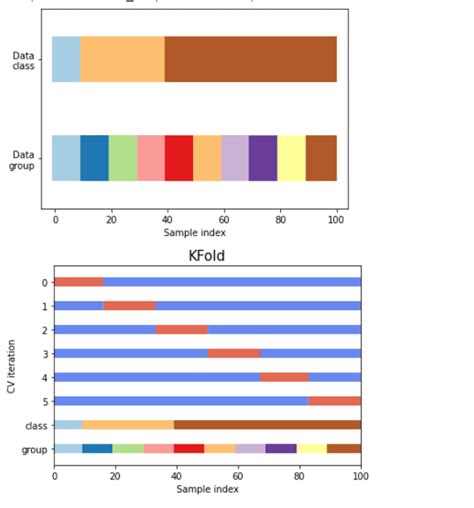

Cross-validation is an essential process in machine learning. It lets us assess the performance of our model on different subsets of the data. The data is split into training and testing sets multiple times, and the model is evaluated on each split. Cross-validation does not reduce overfitting by itself. Instead, it helps detect overfitting and gives a more reliable estimate of how well the model generalizes than a single train/test split would. Scikit-Learn provides multiple cross-validation methods that are easy to use.
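To make the repeated splitting concrete, here is a minimal sketch of K-fold cross-validation written out as an explicit loop. It assumes synthetic data from make_regression and uses LinearRegression as a stand-in model. The choices of 5 folds and random_state=0 are illustrative and do not come from the original text.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold

# Synthetic regression data (illustrative); the targets span negative and positive values
X, y = make_regression(n_samples=100, n_features=3, noise=10.0, random_state=0)

kf = KFold(n_splits=5, shuffle=True, random_state=0)
fold_mse = []
for train_idx, test_idx in kf.split(X):
    # Train on K-1 folds, then evaluate on the held-out fold
    model = LinearRegression().fit(X[train_idx], y[train_idx])
    preds = model.predict(X[test_idx])
    fold_mse.append(mean_squared_error(y[test_idx], preds))

print("Per-fold MSE:", np.round(fold_mse, 2))
print("Mean MSE:", np.mean(fold_mse))
```

cross_val_score performs the same loop internally. Writing the loop out by hand simply shows where each per-fold score comes from.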

Types of Cross Validation

There are three commonly used types of cross-validation techniques in Scikit-Learn:

| Type of Cross Validation | Description |
|---|---|
| K-Fold Cross Validation | Splits the data into K folds. Each fold serves once as the test set while the remaining K-1 folds are used for training. |
| Stratified K-Fold Cross Validation | Similar to K-Fold, but preserves the proportion of each class in every fold (intended for classification targets). |
| Leave-One-Out Cross Validation | Trains on all samples except one and evaluates the model on the single left-out sample, repeating this once per sample. |
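The three splitters in the table correspond to Scikit-Learn's KFold, StratifiedKFold, and LeaveOneOut classes. The sketch below uses a toy 10-sample dataset with made-up, imbalanced class labels (an illustrative assumption) to show how many splits each one produces and how StratifiedKFold keeps the class ratio in every test fold.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut, StratifiedKFold

X = np.arange(20).reshape(10, 2)       # toy data: 10 samples, 2 features
y_class = np.array([0] * 6 + [1] * 4)  # made-up class labels, 60% / 40%

kfold = KFold(n_splits=5)
loo = LeaveOneOut()
skf = StratifiedKFold(n_splits=2)

n_kfold = kfold.get_n_splits(X)  # one split per fold
n_loo = loo.get_n_splits(X)      # one split per sample

# StratifiedKFold needs class labels; count the classes in each test fold
fold_counts = [np.bincount(y_class[test_idx]) for _, test_idx in skf.split(X, y_class)]

print(n_kfold, n_loo)
print(fold_counts)
```

Because StratifiedKFold needs discrete class labels, passing an integer cv to cross_val_score with a regressor uses plain KFold instead.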

The Problem with Negative Values

Negative values can be confusing when the Mean Squared Error (MSE) is our evaluation metric. The MSE is a common metric for regression models and measures the average squared difference between predicted and actual values. Because every difference is squared, the MSE can never be negative, even when the predictions or the actual values themselves are negative. Yet when you run Scikit-Learn’s cross-validation with scoring="neg_mean_squared_error", every score it reports is negative. This appears to violate the basic idea of an error term, which must always be zero or positive.
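Here is a minimal sketch that reproduces the negative scores. The synthetic make_regression dataset and the LinearRegression model are illustrative assumptions and are not part of the original example.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score

# Synthetic regression data (illustrative)
X, y = make_regression(n_samples=100, n_features=3, noise=10.0, random_state=0)

# Ask for the MSE-based scorer; 5-fold CV on a regressor uses plain KFold
scores = cross_val_score(LinearRegression(), X, y, cv=5,
                         scoring="neg_mean_squared_error")
print(scores)  # every entry is <= 0
```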

Scikit-Learn Solution

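Scikit-Learn’s model-selection tools, such as cross_val_score and GridSearchCV, assume that a higher score is always better. A lower MSE is better, so Scikit-Learn exposes the metric through the "neg_mean_squared_error" scorer, which returns the MSE multiplied by -1. This scorer does not change your data or convert negative values to positive ones. It simply flips the sign of the metric, so that maximizing the score is the same as minimizing the error. To recover the ordinary MSE, negate the scores that cross-validation returns.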
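The following minimal sketch negates the cross-validation scores to recover ordinary MSE values. It also checks that a custom scorer built with make_scorer(mean_squared_error, greater_is_better=False) follows the same sign convention. The synthetic data, the LinearRegression model, and the fixed KFold settings are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import make_scorer, mean_squared_error
from sklearn.model_selection import KFold, cross_val_score

# Synthetic data and a fixed, shuffled 5-fold split (illustrative choices)
X, y = make_regression(n_samples=100, n_features=3, noise=10.0, random_state=0)
cv = KFold(n_splits=5, shuffle=True, random_state=0)
model = LinearRegression()

# Built-in scorer: returns -MSE for each fold
neg_scores = cross_val_score(model, X, y, cv=cv, scoring="neg_mean_squared_error")
mse_scores = -neg_scores  # flip the sign to get ordinary, non-negative MSE

# greater_is_better=False makes make_scorer negate the metric in the same way
custom = make_scorer(mean_squared_error, greater_is_better=False)
custom_scores = cross_val_score(model, X, y, cv=cv, scoring=custom)

print("MSE per fold:", mse_scores)
print("Mean MSE:", mse_scores.mean())
print("Matches built-in scorer:", np.allclose(neg_scores, custom_scores))
```

A fixed random_state keeps the folds identical between the two calls, so the built-in and custom scores can be compared directly.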

Example

Let’s take an example to understand this better. Suppose we have a dataset with actual values and predicted values, as shown below.

Actual: [2, 4, 6, 8]

Predicted: [1, -7, 10, 12]

If we calculate the MSE in the traditional way, we square each difference between the predicted and actual values and then take the average:

$$\text{MSE} = \frac{1}{4}\left[(1-2)^2 + (-7-4)^2 + (10-6)^2 + (12-8)^2\right] = \frac{1 + 121 + 16 + 16}{4} = 38.5$$

The negative prediction (-7) produces the largest error, but its squared contribution (121) is still positive. If we score the same predictions with the neg_mean_squared_error scorer, the result is simply the negated MSE:

$$\text{score} = -\text{MSE} = -38.5$$

As we can see, neg_mean_squared_error neither removes negative values nor produces a more precise metric. It reports exactly the same error with the sign flipped, so that a higher (less negative) score means a better model. Negating the score gives back the MSE of 38.5.
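The arithmetic in this example can be checked with mean_squared_error. Note that neg_mean_squared_error is a scorer name passed as scoring=..., not a function you call on two lists. In this sketch, the negated value simply stands in for what that scorer would report for these predictions.

```python
from sklearn.metrics import mean_squared_error

actual = [2, 4, 6, 8]
predicted = [1, -7, 10, 12]

mse = mean_squared_error(actual, predicted)
neg_mse = -mse  # the value the "neg_mean_squared_error" scorer would report

print(mse)      # 38.5
print(neg_mse)  # -38.5
```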

Conclusion

Scikit-Learn provides several cross-validation techniques that help evaluate a model’s performance. When the MSE is the scoring metric, Scikit-Learn reports it through the neg_mean_squared_error scorer, which returns the negated MSE so that higher scores are always better. The negative numbers you see do not signal a problem with your data or your model. Negating them gives the ordinary MSE for each fold. This is especially useful to know when the MSE is your primary evaluation metric, for example when you compare models or tune hyperparameters. Overall, using Scikit-Learn’s cross-validation methods correctly gives you a more reliable estimate of how accurate and robust your machine learning models are.

Thank you for taking the time to read about the Effective Scikit-Learn Cross Validation Method for Handling Negative Values, and how Mean Squared Error (MSE) can be used to evaluate performance. We hope this article has given you valuable insight into how to use Scikit-Learn’s cross-validation methods effectively and how to interpret the negative MSE scores they report.

By using the techniques discussed in this article, you can evaluate your machine learning models more accurately and reliably, even in challenging data scenarios. The field of machine learning continues to evolve rapidly, so it is important to stay up to date with the latest research and best practices.

At the end of the day, the goal of any machine learning project is to build models that accurately predict outcomes based on real-world data. By leveraging tools like Scikit-Learn and MSE, you can help ensure that your models are as accurate and effective as possible, even in the face of negative values and other data challenges.

People also ask about Effective Scikit-Learn Cross Validation Method for Handling Negative Values: MSE Explained:

- What is cross-validation in Scikit-Learn?

- What are negative values in a dataset?

- How does cross-validation handle negative values in a dataset?

- What is MSE in Scikit-Learn?

- How is MSE used in cross-validation?

Cross-validation is a model validation technique that splits the data into multiple subsets, trains the model on some of these subsets, and tests it on the remaining subset. Scikit-Learn provides various cross-validation methods like K-fold cross-validation, Stratified K-fold cross-validation, Leave One Out cross-validation, etc.

Negative values in a dataset are values that are less than zero. They are often perfectly legitimate, as with temperatures, profit and loss figures, or features after standardization. They can also result from errors in data measurement, data preprocessing, or data cleaning.

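Cross-validation does not treat negative values in a dataset in any special way. It simply splits the data into subsets, trains the model on some of them, and evaluates it on the rest. Most Scikit-Learn models, including Linear Regression and SVMs, work with negative feature and target values without any transformation. A few components do require non-negative inputs, such as MultinomialNB, the chi2 feature-selection test, and NMF. Only in those cases do you need to preprocess the data, for example by rescaling it into a non-negative range. The negative scores returned by neg_mean_squared_error have nothing to do with negative values in the data.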
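The sketch below contrasts the two cases. The data is randomly generated (an illustrative assumption), with exactly linear targets that are mostly negative. LinearRegression fits the data without complaint, while MultinomialNB, which expects count-like features, rejects negative inputs with a ValueError.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.naive_bayes import MultinomialNB

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 2))         # features containing negative values
y = X @ np.array([2.0, -3.0]) - 5.0  # exactly linear, mostly negative targets

# Linear regression handles negative features and targets without transformation
reg = LinearRegression().fit(X, y)
print(reg.coef_, reg.intercept_)     # approximately [2, -3] and -5

# MultinomialNB expects non-negative, count-like features and rejects negatives
labels = (y > y.mean()).astype(int)
try:
    MultinomialNB().fit(X, labels)
    nb_rejected = False
except ValueError as err:
    nb_rejected = True
    print("MultinomialNB:", err)
```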

MSE stands for Mean Squared Error, which is a popular evaluation metric used in regression problems. It measures the average squared difference between the predicted and actual values of the target variable.

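MSE is used as an evaluation metric in cross-validation to assess the model’s performance on different subsets of the data. With scoring="neg_mean_squared_error", Scikit-Learn returns the negated MSE for each fold, so negate the scores before averaging them. The average MSE across all folds indicates how well the model is likely to generalize to new, unseen data.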
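As a minimal sketch of averaging per-fold MSE, the example below uses cross_validate to collect both training and test scores. Comparing the two gives a rough signal of overfitting. The Ridge model, its alpha, the synthetic data, and the fold settings are illustrative assumptions. Passing scoring as a list makes the result keys carry the scorer name.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, cross_validate

# Synthetic data and a shuffled 5-fold split (illustrative choices)
X, y = make_regression(n_samples=200, n_features=5, noise=15.0, random_state=1)
cv = KFold(n_splits=5, shuffle=True, random_state=1)

# A list of scorers yields keys such as "test_neg_mean_squared_error"
results = cross_validate(Ridge(alpha=1.0), X, y, cv=cv,
                         scoring=["neg_mean_squared_error"],
                         return_train_score=True)

# Negate the scores to get ordinary MSE values before averaging
train_mse = -results["train_neg_mean_squared_error"]
test_mse = -results["test_neg_mean_squared_error"]

print(f"Train MSE: {train_mse.mean():.2f}")
print(f"Test MSE:  {test_mse.mean():.2f} ± {test_mse.std():.2f}")
```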