If you are looking for efficient ways to insert bulk data into a database using Pandas and SQLAlchemy, this article is for you. Data insertion is a crucial part of any data processing pipeline, and it is often a bottleneck that slows down the entire project. Using the right tools and techniques makes the process much faster and easier.

In this article, we will explore how you can use the Pandas library together with SQLAlchemy to efficiently insert large amounts of data into a database. We will cover a range of topics, including optimizing data types, chunking DataFrames, using indexing, and more. By the end of this article, you will have a solid understanding of how to streamline your data insertion process and get the most out of your database.

Whether you are a data scientist, analyst, or developer, optimizing your data insertion process can save you time and resources. With the techniques outlined in this article, you can insert millions of rows much more quickly than with naive row-by-row inserts. So, if you want to learn more about efficient bulk data insertion with Pandas and SQLAlchemy, keep reading until the end!

“Bulk Insert A Pandas Dataframe Using Sqlalchemy” ~ bbaz

Introduction

When it comes to storing large amounts of data, efficiency is key. Two popular Python tools for bulk data insertion are Pandas and SQLAlchemy. They are not strict alternatives, since Pandas' to_sql() method runs on top of SQLAlchemy, but comparing the DataFrame-level approach with writing inserts directly in SQLAlchemy can help you decide which is the best choice for your use case.

What is Pandas?

Pandas is a powerful data manipulation library for Python. It is primarily used for cleaning, structuring, and analyzing data. Pandas can load data from various file formats such as CSV or Excel, and it can also write data to a database.

How does Pandas handle bulk data insertion?

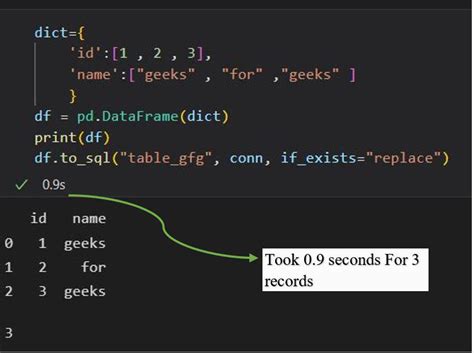

When inserting large amounts of data, Pandas provides the DataFrame.to_sql() method, which writes the rows of a DataFrame into a database table. The method does not create a SQLAlchemy engine itself: you pass an existing engine or connection (or a plain sqlite3 connection) through the con parameter, and Pandas uses it to create the table if needed and run INSERT statements. The chunksize parameter splits the write into batches of a given number of rows, and method="multi" packs several rows into each INSERT statement, which can improve performance on some databases.
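The sketch below illustrates these options. It assumes pandas 2.x with SQLAlchemy 2.x and uses an in-memory SQLite database ("sqlite://"); the measurements table and its columns are hypothetical. The chunk size of 400 rows keeps each multi-row statement under SQLite's historical default limit of 999 bound parameters (400 rows × 2 columns = 800).

```python
import pandas as pd
from sqlalchemy import create_engine, text

# In-memory SQLite database, used purely for illustration.
engine = create_engine("sqlite://")

# Hypothetical sample data: 10,000 rows with two columns.
df = pd.DataFrame({"id": range(10_000), "value": [i * 0.5 for i in range(10_000)]})

# Write in batches of 400 rows; method="multi" turns each batch into
# a multi-row INSERT ... VALUES statement instead of an executemany() call.
df.to_sql(
    "measurements",
    con=engine,
    if_exists="replace",
    index=False,
    chunksize=400,
    method="multi",
)

with engine.connect() as conn:
    count = conn.execute(text("SELECT COUNT(*) FROM measurements")).scalar()
print(count)
```

Whether method="multi" is faster than the default executemany() path depends on the database and driver, so it is worth measuring both on your own setup.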

What is SQLAlchemy?

SQLAlchemy is a SQL toolkit and Object Relational Mapper (ORM) for Python. Its Core layer provides connection management and a Python expression language for generating SQL, while its ORM lets developers work with database rows as Python objects instead of writing raw SQL queries.

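How does SQLAlchemy handle bulk data insertion?

SQLAlchemy Core can insert many rows in a single call: when you execute an insert() construct together with a list of parameter dictionaries, SQLAlchemy typically hands all of the rows to the DBAPI driver's executemany() method instead of issuing a separate execute() per row. How fast this is depends on the driver. Some drivers implement executemany() as a loop of single-row INSERT statements, which can cause performance issues for very large loads, so dialect-specific options (such as fast_executemany for pyodbc) can make a large difference.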
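Here is a minimal sketch of an executemany-style insert with SQLAlchemy Core, assuming SQLAlchemy 1.4 or 2.x and an in-memory SQLite database; the readings table is hypothetical.

```python
from sqlalchemy import Column, Float, Integer, MetaData, Table, create_engine, func, insert, select

engine = create_engine("sqlite://")  # in-memory SQLite, illustration only
metadata = MetaData()

# Hypothetical table used only for this example.
readings = Table(
    "readings",
    metadata,
    Column("id", Integer, primary_key=True),
    Column("value", Float),
)
metadata.create_all(engine)

# One parameter dictionary per row.
rows = [{"id": i, "value": i * 0.5} for i in range(1_000)]

# Passing a list of dicts executes one INSERT statement for all rows
# ("executemany" style); engine.begin() commits once when the block exits.
with engine.begin() as conn:
    conn.execute(insert(readings), rows)

with engine.connect() as conn:
    total = conn.execute(select(func.count()).select_from(readings)).scalar()
print(total)
```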

Comparison

| Pandas | SQLAlchemy |
|---|---|
| Convenient for bulk-inserting a DataFrame: one to_sql() call creates the table if needed and writes the rows | More code: you define the insert() statement and parameter lists yourself, in exchange for full control |
| Uses DataFrame.to_sql(), which runs on top of a SQLAlchemy engine or connection | Uses the Core insert() construct with a list of parameter dictionaries, passed to the driver's executemany() |
| chunksize and method="multi" control how rows are batched | Throughput depends on the DBAPI driver and dialect options such as fast_executemany for pyodbc |

Opinion

After comparing the two options, Pandas is the more practical choice for bulk inserting data that already lives in a DataFrame. It builds on SQLAlchemy and adds table creation and batching options (chunksize, method="multi") in a single call. Plain SQLAlchemy Core also batches rows through the driver's executemany(), but it requires more code and offers no built-in DataFrame handling.

Conclusion

While both Pandas and SQLAlchemy have unique features, the choice for bulk data insertion ultimately depends on the specifics of the project. However, for projects where bulk-loading DataFrames is a main concern, Pandas definitely seems like the right choice. Its ability to write data to a database in configurable batches through a single to_sql() call makes it stand out.

Dear readers,

Thank you for taking the time to read our article on Efficient Bulk Data Insertion with Pandas and SQLAlchemy. We hope you found the information helpful and informative. In this article, we discussed how to bulk insert data into a SQL database using Pandas and SQLAlchemy to improve speed and efficiency. We highlighted the value of using these libraries together: Pandas' to_sql() method runs on top of a SQLAlchemy engine, which makes for a simple and powerful way to load data into a SQL database.

In summary, we recommend using Pandas and SQLAlchemy for loading data into your databases, as they offer easy integration, flexibility, and good performance. By using these tools, you can significantly reduce the time and resources required to populate data tables. We also recommend exploring these libraries further to get a sense of their full capabilities.

Again, we appreciate your time and interest in our article. We hope it has been an insightful read, and we welcome any comments or feedback you may have. If you have any questions or would like to learn more about Pandas and SQLAlchemy, please do not hesitate to reach out to us.

Here are some of the most common questions that people ask about efficient bulk data insertion with Pandas and SQLAlchemy:

- What is Pandas?

- What is SQLAlchemy?

- How do I insert data into a SQL database using Pandas and SQLAlchemy?

- What is bulk data insertion?

- How can I perform bulk data insertion with Pandas and SQLAlchemy?

- What are some best practices for efficient bulk data insertion?

Pandas is a Python library that provides tools for data manipulation and analysis. It is particularly useful for working with tabular data, such as spreadsheets or SQL tables.

SQLAlchemy is a Python library that provides tools for working with SQL databases. It allows you to create database schemas, execute SQL queries, and manage database connections.
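A short sketch of those three jobs (connections, schemas, queries), assuming SQLAlchemy 1.4 or 2.x and an in-memory SQLite database; the users table and its rows are hypothetical.

```python
from sqlalchemy import Column, Integer, MetaData, String, Table, create_engine, inspect, text

# Connections: the engine manages a pool of connections to the database.
engine = create_engine("sqlite://")

# Schemas: declare a hypothetical "users" table and create it.
metadata = MetaData()
users = Table(
    "users",
    metadata,
    Column("id", Integer, primary_key=True),
    Column("name", String(50), nullable=False),
)
metadata.create_all(engine)

# Queries: run SQL with bound parameters inside a single transaction.
with engine.begin() as conn:
    conn.execute(
        text("INSERT INTO users (id, name) VALUES (:id, :name)"),
        [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}],
    )
    names = conn.execute(text("SELECT name FROM users ORDER BY id")).scalars().all()

print(inspect(engine).get_table_names())  # ['users']
print(names)  # ['Ada', 'Grace']
```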

You can use the Pandas method DataFrame.to_sql() to write data from a DataFrame to a SQL database. It takes several arguments, including name, the table you want to insert the data into, and con, a SQLAlchemy engine or connection (or a sqlite3 connection) created from your database's connection string.
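A minimal round trip, assuming pandas 2.x with SQLAlchemy 2.x; the in-memory SQLite URL and the scores table are placeholders for your own database and table.

```python
import pandas as pd
from sqlalchemy import create_engine

# to_sql() expects a connectable such as an engine, built from a connection string.
engine = create_engine("sqlite://")

# Hypothetical sample data.
df = pd.DataFrame({"name": ["alpha", "beta"], "score": [1, 2]})

# name: target table; con: engine or connection;
# if_exists: what to do if the table already exists; index=False skips the DataFrame index.
df.to_sql(name="scores", con=engine, if_exists="replace", index=False)

round_trip = pd.read_sql("SELECT name, score FROM scores", con=engine)
print(round_trip)
```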

Bulk data insertion means sending many rows to the database in a few large operations, typically batched INSERT statements inside a single transaction, rather than one INSERT and one commit per row. This is usually much faster because it reduces network round trips and the overhead of executing statements and committing transactions.
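The contrast can be sketched as follows, assuming pandas 2.x with SQLAlchemy 2.x and an in-memory SQLite database; the events_slow and events_fast tables are hypothetical. The first loop opens and commits one transaction per row, while the second writes every row through to_sql() inside one transaction.

```python
import pandas as pd
from sqlalchemy import create_engine, text

engine = create_engine("sqlite://")  # in-memory SQLite, illustration only

# Hypothetical sample rows, kept as plain Python values.
records = [{"id": i, "label": f"event-{i}"} for i in range(1_000)]
df = pd.DataFrame(records)

with engine.begin() as conn:
    conn.execute(text("CREATE TABLE events_slow (id INTEGER PRIMARY KEY, label TEXT)"))
    conn.execute(text("CREATE TABLE events_fast (id INTEGER PRIMARY KEY, label TEXT)"))

# Row-by-row pattern (for contrast): one INSERT and one COMMIT per row.
for record in records:
    with engine.begin() as conn:
        conn.execute(text("INSERT INTO events_slow (id, label) VALUES (:id, :label)"), record)

# Bulk pattern: to_sql() reuses the open transaction, which is
# committed once when the with-block exits.
with engine.begin() as conn:
    df.to_sql("events_fast", con=conn, if_exists="append", index=False)

with engine.connect() as conn:
    slow_count = conn.execute(text("SELECT COUNT(*) FROM events_slow")).scalar()
    fast_count = conn.execute(text("SELECT COUNT(*) FROM events_fast")).scalar()
print(slow_count, fast_count)
```

Both tables end up with the same 1,000 rows; the difference is that the first loop commits 1,000 separate transactions while the second commits one. Timing both against your own database is the reliable way to see how much that matters in practice.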

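Within to_sql(), the chunksize and method parameters control how rows are batched. If you are writing to Microsoft SQL Server through the pyodbc driver, you can also pass fast_executemany=True to SQLAlchemy's create_engine() to speed up bulk inserts considerably. Note that fast_executemany is an option of the mssql+pyodbc dialect, not a parameter of to_sql().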

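- If you write to SQL Server through pyodbc, pass fast_executemany=True to create_engine(); it is an option of the mssql+pyodbc dialect, not a parameter of to_sql().

- Limit the number of rows sent per batch (for example with the chunksize parameter of to_sql()) to avoid running out of memory or hitting other resource limits.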

- Use appropriate data types for your database columns to minimize storage requirements and improve performance.

- Consider using an indexing strategy to improve query performance on large datasets.
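The practices above can be combined in one load, sketched here under the assumption of pandas 2.x with SQLAlchemy 2.x and an in-memory SQLite database. The orders table, its columns, and the ix_orders_customer index are hypothetical, and creating the index after the load (rather than before it) is a common approach for large loads, not a requirement.

```python
import pandas as pd
from sqlalchemy import Float, Integer, String, create_engine, inspect, text

engine = create_engine("sqlite://")  # in-memory SQLite, illustration only

# Hypothetical orders data.
df = pd.DataFrame({
    "order_id": range(5_000),
    "customer": [f"c{i % 100}" for i in range(5_000)],
    "amount": [round(i * 1.25, 2) for i in range(5_000)],
})

with engine.begin() as conn:
    df.to_sql(
        "orders",
        con=conn,
        if_exists="replace",
        index=False,
        chunksize=1_000,  # bound how many rows are sent per batch
        dtype={  # explicit column types instead of pandas' inferred defaults
            "order_id": Integer(),
            "customer": String(20),
            "amount": Float(),
        },
    )
    # Create the index once the data is loaded, so the bulk insert
    # does not have to maintain it for every row.
    conn.execute(text("CREATE INDEX ix_orders_customer ON orders (customer)"))

index_names = [ix["name"] for ix in inspect(engine).get_indexes("orders")]
print(index_names)  # ['ix_orders_customer']
```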