Are you tired of performing redundant comparisons between dataframes in your data analysis projects? Look no further! Efficient groupwise dataframe comparison, combined with computing diffs, is your solution.

By utilizing groupwise comparison techniques, you can easily compare and highlight differences between two or more dataframes while avoiding manual comparisons one by one. This saves you valuable time and effort, allowing you to focus on the analysis and interpretation of your results.

Moreover, computing diffs in your dataframes lets you quickly identify changes in your data, revealing trends and patterns that would otherwise be hard to spot. The same approach works whether you are dealing with one large dataset or several spreadsheets, although data that does not fit in memory calls for tools designed for larger-than-memory processing.

If you want to improve your data analysis workflow and save valuable time, mastering efficient groupwise dataframe comparison with computing diffs is a must. Dive into this essential technique and explore its benefits for your next data analysis project. Read on to find out more!

“Computing Diffs Within Groups Of A Dataframe” ~ bbaz

Introduction

Comparing two dataframes in Python can be challenging, especially when you are dealing with large datasets that contain millions or billions of rows. There are several ways to do it, but some approaches are considerably faster and more memory-efficient than others.

In this article, we will discuss two methods for comparing dataframes group by group and computing the differences between them. We will explore the advantages and disadvantages of each method and provide examples to illustrate their usage.

Method 1: Pandas GroupBy and Merge

Overview

The first method we will discuss uses the Pandas `groupby` and `merge` methods. This approach is useful when you need to compare data from two different dataframes that share a common column. Grouping each dataframe by that column and then merging the results on it lines up matching groups side by side, so they can be compared directly.
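As a minimal sketch of the idea, assuming two hypothetical purchase tables that share a `customer_id` column (all names and values are invented for illustration), the group, merge, and diff steps might look like this:

```python
import pandas as pd

# Hypothetical purchase records for the same customers from two sources
purchases_a = pd.DataFrame({
    "customer_id": [1, 1, 2, 3],
    "price": [10.0, 5.0, 20.0, 7.5],
})
purchases_b = pd.DataFrame({
    "customer_id": [1, 2, 2, 4],
    "price": [15.0, 20.0, 3.0, 9.0],
})

# 1. Group: collapse each dataframe to one row per customer (total spend)
totals_a = purchases_a.groupby("customer_id", as_index=False)["price"].sum()
totals_b = purchases_b.groupby("customer_id", as_index=False)["price"].sum()

# 2. Merge: line the groups up on the common column. how="outer" keeps
#    customers found in only one frame; indicator=True adds a "_merge" column
#    with the values "left_only", "right_only" or "both".
merged = totals_a.merge(
    totals_b, on="customer_id", how="outer",
    suffixes=("_a", "_b"), indicator=True,
)

# 3. Diff: per-customer difference (NaN when a customer is missing on one side)
merged["diff"] = merged["price_b"] - merged["price_a"]

# Customers present in both frames whose totals disagree
changed = merged[(merged["_merge"] == "both") & (merged["diff"] != 0)]
print(changed[["customer_id", "price_a", "price_b", "diff"]])
```

Because `how="outer"` is used, customers that appear in only one dataframe are kept and flagged in the `_merge` column rather than silently dropped.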

Example

Let’s say we have two dataframes that contain information about customers and their purchases. The first dataframe contains customer information such as name, age, and address. The second dataframe contains purchase information such as date, product name, and price. Both dataframes have a common column called customer_id. Our goal is to group the purchases by customer and match them with the customer details, so that each customer's purchases can be reviewed and compared.

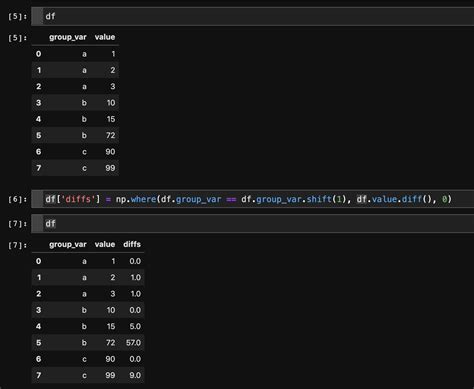

To do this, we can use the following code:
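Here is a minimal sketch, assuming hypothetical sample data with the columns described above (the names and values are invented for illustration). It uses `groupby` with named aggregation to build one row per customer, then `merge` to attach the customer details:

```python
import pandas as pd

# Hypothetical sample data with the columns described above
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "name": ["Alice", "Bob", "Chen"],
    "age": [34, 45, 29],
    "address": ["1 Main St", "2 Oak Ave", "3 Pine Rd"],
})
purchases = pd.DataFrame({
    "customer_id": [1, 1, 2],
    "date": pd.to_datetime(["2023-01-05", "2023-02-10", "2023-01-20"]),
    "product": ["Laptop", "Mouse", "Desk"],
    "price": [999.0, 25.0, 150.0],
})

# Group the purchases: one row per customer, holding a list of products
# and the total amount spent (named aggregation)
per_customer = (
    purchases.groupby("customer_id")
    .agg(products=("product", list), total_spent=("price", "sum"))
    .reset_index()
)

# Merge with the customer details on the common column;
# how="left" keeps customers with no purchases (their new columns become NaN)
report = customers.merge(per_customer, on="customer_id", how="left")
print(report[["name", "products", "total_spent"]])
```

Using `how="left"` keeps every customer in the result, including those without purchases; `how="inner"` would drop them.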

The output of this code will be a dataframe that contains the name of each customer together with a list of their purchases.

Pros and Cons

The advantages of using GroupBy and Merge are:

- A fast, efficient way to compare data from two different dataframes based on a common column.

- Handles large datasets well, as long as they fit in memory.

- Keeps the original column names of the dataframes (overlapping non-key columns receive suffixes, `_x` and `_y` by default).

The disadvantages of using GroupBy and Merge are:

- May require more memory as it creates temporary dataframes.

- Requires knowledge of Pandas library and its functions.

- May not be suitable for complex data comparisons.

Method 2: Dask DataFrames and join

Overview

The second method we will discuss is using Dask DataFrames and join. This approach is useful when you need to compare large datasets that cannot fit in memory. By using Dask DataFrames, you can distribute the computation across multiple cores or machines and efficiently compare the data.

Example

Let’s use the same example as before but assume that our dataframes contain millions of rows each. In this case, we can use Dask DataFrames and the join function to compare the purchases made by each customer.

To do this, we can use the following code:

The output of this code will be a dataframe that lists the purchases made by each customer alongside their details from the other dataframe.

Pros and Cons

The advantages of using Dask DataFrames and join are:

- Efficient way to compare large datasets that cannot fit in memory.

- Can distribute the computation across multiple cores or machines.

- Can handle complex data comparisons.

The disadvantages of using Dask DataFrames and join are:

- Requires the Dask library and familiarity with its API.

- May require additional setup for distributed computing.

- Results may need to be manually merged to retain the original structure and column names of the dataframes.

Conclusion

Choosing the right method for comparing groupwise dataframes and computing differences depends on your specific use case. If you are dealing with dataframes that contain a common column and can fit in memory, using Pandas GroupBy and Merge functions may be more efficient. However, if you are dealing with large datasets that cannot fit in memory, using Dask DataFrames and the join function can distribute the computation across multiple cores or machines.

Both methods have their pros and cons, and choosing the right one will depend on your specific requirements. We hope this article has provided you with enough information to make an informed decision when it comes to efficient groupwise dataframe comparison and computing diffs in Python.

Thank you for taking the time to read our article on efficient groupwise dataframe comparison with computing diffs. We hope that we have provided you with valuable insights into how to compare dataframes in a more efficient and effective way, with minimal effort.

If you are working with large datasets that require frequent updates, comparing dataframes this way can save you a lot of time and resources. By identifying only the changes between the old and new dataframes, you can focus your attention where it is most needed and avoid sifting through unnecessary information.
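One way to identify only the changes, sketched here under the assumption that both snapshots share a unique key (`customer_id`, with hypothetical sample data), is to find added and removed keys with index set operations and then apply `DataFrame.compare` (available in pandas 1.1 and later) to the rows present in both:

```python
import pandas as pd

# Hypothetical "old" and "new" snapshots of the same table, keyed by customer_id
old = pd.DataFrame(
    {"city": ["Oslo", "Rome", "Lima"], "tier": ["gold", "silver", "bronze"]},
    index=pd.Index([1, 2, 3], name="customer_id"),
)
new = pd.DataFrame(
    {"city": ["Oslo", "Milan", "Kyiv"], "tier": ["gold", "silver", "gold"]},
    index=pd.Index([1, 2, 4], name="customer_id"),
)

# Rows that were added or removed between the two snapshots
added_ids = new.index.difference(old.index)
removed_ids = old.index.difference(new.index)

# DataFrame.compare only accepts identically labelled frames,
# so restrict both snapshots to the keys they share
common = old.index.intersection(new.index)
changes = old.loc[common].compare(new.loc[common])

print("added:", list(added_ids), "removed:", list(removed_ids))
print(changes)
```

By default, `compare` omits rows and columns in which every value is equal, so the output contains only what changed, with `self` holding the old value and `other` the new one.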

Overall, incorporating this technique into your data science workflow can help you become more productive and make better-informed decisions. We hope that you find our article helpful and informative, and we look forward to providing you with more useful content in the future. If you have any questions or comments, please feel free to get in touch with us.

People Also Ask about Efficient Groupwise Dataframe Comparison with Computing Diffs:

- What is groupwise dataframe comparison?

- Why is efficient groupwise dataframe comparison important?

- How can I efficiently compare dataframes with computing diffs?

- What are the benefits of using parallel processing for dataframe comparison?

- Are there any limitations to efficient groupwise dataframe comparison with computing diffs?

Groupwise dataframe comparison means grouping the data by a specific column and then comparing the groups, either across dataframes or within a single dataframe, to identify the differences.
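To make this concrete, here is a minimal pandas sketch of computing diffs within groups, assuming hypothetical monthly spend data. `groupby(...).diff()` subtracts the previous row of the same group from each row, and never crosses group boundaries:

```python
import pandas as pd

# Hypothetical monthly spend per customer
monthly = pd.DataFrame({
    "customer_id": [1, 2, 1, 2, 1],
    "month": ["2023-01", "2023-01", "2023-02", "2023-02", "2023-03"],
    "spend": [100.0, 40.0, 130.0, 35.0, 90.0],
})

# Sort within each group so that "previous row" means "previous month"
monthly = monthly.sort_values(["customer_id", "month"])

# diff() runs separately inside each group; the first row of every group
# is NaN because there is no earlier row in that group to subtract
monthly["change"] = monthly.groupby("customer_id")["spend"].diff()
print(monthly)
```

Sorting first matters: `diff` works on row order, so unsorted data would subtract the wrong months from each other.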

Efficient groupwise dataframe comparison is important because it helps in identifying differences between datasets quickly and accurately. This can help in making better decisions about data analysis, identifying trends, and improving data quality.

To efficiently compare dataframes and compute diffs, you can use libraries like Pandas and Dask. Pandas provides built-in methods such as `groupby`, `merge`, `compare`, and `diff` for grouping dataframes and computing differences, and Dask mirrors much of this API for datasets that do not fit in memory. You can also use parallel processing techniques to speed up the comparison process.

The benefits of using parallel processing for dataframe comparison include faster processing times and the ability to handle large datasets. By splitting the data into smaller chunks and processing them in parallel, you can often reduce the time required to compare dataframes considerably.
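A minimal sketch of the split-and-process idea, assuming hypothetical old and new purchase tables: the group keys are split into chunks, each chunk is diffed independently, and the partial results are concatenated. Chunking by key rather than by row guarantees each group lands in exactly one chunk. A `ThreadPoolExecutor` keeps the sketch simple and portable; for CPU-heavy pure-Python work, a `ProcessPoolExecutor` or Dask is the more usual choice, and any actual speedup depends on the workload:

```python
from concurrent.futures import ThreadPoolExecutor

import pandas as pd

# Hypothetical old and new versions of a purchases table
old = pd.DataFrame({"customer_id": [1, 1, 2, 3], "price": [10.0, 5.0, 20.0, 7.5]})
new = pd.DataFrame({"customer_id": [1, 1, 2, 3], "price": [10.0, 6.0, 20.0, 9.0]})


def diff_chunk(customer_ids):
    """Compare total spend per customer for one chunk of customer IDs."""
    old_totals = old[old["customer_id"].isin(customer_ids)].groupby("customer_id")["price"].sum()
    new_totals = new[new["customer_id"].isin(customer_ids)].groupby("customer_id")["price"].sum()
    # Subtraction aligns on customer_id; a customer missing on one side yields NaN
    return new_totals - old_totals


# Split the group keys (not the rows) into chunks
keys = sorted(set(old["customer_id"]) | set(new["customer_id"]))
chunk_size = 2
chunks = [keys[i:i + chunk_size] for i in range(0, len(keys), chunk_size)]

# Process the chunks concurrently, then stitch the partial results together
with ThreadPoolExecutor(max_workers=4) as pool:
    parts = list(pool.map(diff_chunk, chunks))

diffs = pd.concat(parts).sort_index()
print(diffs)
```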

One limitation of efficient groupwise dataframe comparison with computing diffs is that it can require significant computational resources on large datasets. This can be a challenge for organizations with limited computing power or for individuals who do not have access to high-performance computers. Additionally, the accuracy of the comparison depends on the quality of the data and on the chosen grouping column.