Are you struggling to monitor the performance of your PySpark application? Do you wish there was a way to efficiently log and track the execution of your tasks from the executor nodes? Logging from the executors can help.

With executor-side logging, you can follow the progress of your PySpark application from start to finish. You can record how long each task took to complete, how many records were processed, and any errors or exceptions that occurred along the way.

Done carefully, executor logging adds little overhead to your application. The key is to control how much you log, because excessive log output can itself slow down your tasks. With sensible log levels and a clear log format, you will have the information you need to optimize your PySpark application and improve its performance.

If you are serious about getting the most out of your PySpark application, executor logging is worth setting up. The rest of this article covers the available logging techniques, the challenges of logging from executors, and strategies for keeping it efficient.


Introduction

PySpark has gained a reputation as one of the most popular and widely used platforms for processing large quantities of data. While it provides an efficient framework for handling big data tasks, it is crucial to monitor its performance to optimize its capabilities fully. One of the best ways to do this is by logging, which can provide insight into how well your system is performing. This article will compare the various logging techniques available in PySpark and discuss the most efficient method for logging from the Executor to monitor your application’s performance.

What is PySpark?

Before we dive into the world of PySpark logging, let’s take a brief detour to understand what PySpark is. Simply put, PySpark is the Python API for Apache Spark, an open-source cluster computing system. It lets us manage and process large volumes of data in a distributed environment using Python.

Why is Logging Important?

Logging is essential, especially when we’re working with big data. It enables us to track records of system activity and usage over a given period, providing valuable information about how our system works. Without logging, identifying bugs, errors, and other issues would be nearly impossible. Logging helps us pinpoint these problems and come up with solutions to fix them.

Logging Techniques in PySpark

There are several logging techniques available in PySpark, including:

| Logging Technique | Description |
|---|---|
| Print Statement Logging | Basic and often used for debugging purposes; however, it is not ideal for tracking large-scale operations. |
| Spark Listener Event Logging | Reports events that occur during an application’s lifecycle, such as task completion, stage submission and completion, and more. |
| Log4j Driver Logging | This technique provides extensive flexibility and customization options, but it requires significant setup time and effort. |
| PySpark Executor Logging | Logging output from the executor provides insights into what’s happening within each task. |

The Importance of PySpark Executor Logging

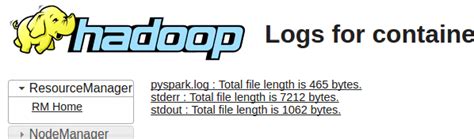

While each logging technique has its benefits, PySpark executor logging is perhaps the most critical. This method logs from inside the tasks running on each executor, so it shows what is happening where the data is actually processed. On a cluster, messages written by task code, including error messages, warnings, and debug messages, end up in that executor’s own log output on the worker node rather than on the driver console, which is why they are easy to overlook.
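As a minimal sketch of what logging from inside a task can look like, the example below uses Python's standard `logging` module in a partition function. The names `get_executor_logger` and `process_partition` are hypothetical, and the sketch assumes that output written to stderr by the Python worker is what shows up in the executor's log, which is the usual behavior but depends on your cluster setup.

```python
import logging
import sys
import time


def get_executor_logger(name="executor_task"):
    """Return a logger that is configured only once per Python worker process."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # a reused worker must not get duplicate handlers
        handler = logging.StreamHandler(sys.stderr)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
        )
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
        logger.propagate = False
    return logger


def process_partition(rows):
    """Pass rows through unchanged and log one summary line per partition."""
    logger = get_executor_logger()
    start = time.perf_counter()
    count = 0
    for row in rows:
        count += 1
        yield row
    # One log entry per partition instead of one per record keeps volume low.
    logger.info(
        "partition done: records=%d seconds=%.3f",
        count,
        time.perf_counter() - start,
    )
```

With an existing RDD, this function could be applied as `rdd.mapPartitions(process_partition)`. The logger is created inside the function so that it is set up in the worker process rather than pickled from the driver.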

Challenges of PySpark Executor Logging

Despite the many advantages of PySpark executor logging, there are some challenges that users may face. One significant challenge is managing the sheer volume of data created by the logs. Given how fast an application can work, it’s easy to generate thousands, if not millions of log entries every second. This can quickly overwhelm a system and lead to reduced performance.

Strategies for Efficient PySpark Executor Logging

To overcome these challenges, there are several strategies you can employ to ensure efficient PySpark logging. Some of these include:

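- Routing log data into a scalable pipeline or store for later analysis, for example a stream processed with Structured Streaming or a Delta Lake table. These are data processing and storage tools rather than logging mechanisms in themselves.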

- Configuring log levels to prevent excessive debugging logging.

- Utilizing cloud storage services for large-scale logging data instead of relying on local storage.

- Strategically choosing which PySpark executor logging features are most important to track, in order to minimize the volume of logs generated.
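Two of the strategies above, limiting log levels and reducing the volume of logs, can be sketched with Python's standard `logging` module. The class `SampleEveryNth` and the helper `configure_task_logger` are hypothetical names for illustration; the sampling rate is an assumption you would tune for your own job.

```python
import logging


class SampleEveryNth(logging.Filter):
    """Pass every n-th record below WARNING; always pass WARNING and above."""

    def __init__(self, n):
        super().__init__()
        self.n = max(1, n)
        self.seen = 0

    def filter(self, record):
        if record.levelno >= logging.WARNING:
            return True  # never drop warnings or errors
        self.seen += 1
        return (self.seen - 1) % self.n == 0


def configure_task_logger(name="executor_task", level=logging.INFO, sample_every=100):
    """Set the log level and attach the sampling filter exactly once."""
    logger = logging.getLogger(name)
    logger.setLevel(level)  # DEBUG records are discarded before filtering
    if not any(isinstance(f, SampleEveryNth) for f in logger.filters):
        logger.addFilter(SampleEveryNth(sample_every))
    return logger
```

The design choice here is to sample only routine messages, so that a noisy per-record `info` call cannot flood the executor log while problems are still always recorded.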

Conclusion

In conclusion, logging plays a critical role in analyzing and optimizing PySpark applications. While there are several logging techniques available, PySpark executor logging is one of the most efficient methods for providing users with valuable insights into their system’s performance. However, users must carefully manage the large volume of data generated by these logs to avoid performance issues. By implementing the right strategies and making use of high-performance logging mechanisms, users can get the most out of their PySpark logging and optimize their application’s performance effectively.

Thank you for taking the time to read this article on efficient PySpark logging. We hope that we were able to provide valuable insights and tips on how to monitor performance through logging. With proper logging, you can easily identify bottlenecks and issues in your PySpark jobs, allowing you to optimize and improve overall efficiency.

Remember, logging is not just about recording events – it’s about understanding your system’s behavior, performance, and issues. By incorporating appropriate log levels and message formatting, you can make sure that the logs are easy to read and understand.

In summary, efficient PySpark logging is critical for maintaining a successful data processing workflow. Through logging, you can easily identify issues before they become more significant problems, enabling rapid resolution and preventing performance degradation. We hope that you found this blog post informative, and please feel free to share these tips with your fellow PySpark users.

Here are some common questions people ask about logging efficiently from PySpark executors to monitor performance, with the answers following in the same order:

- Why is logging important in PySpark?

- How can I optimize PySpark logging?

- What is the best way to send logs from executors to the driver in PySpark?

- How can I monitor PySpark performance using logs?

- What are some common logging mistakes to avoid in PySpark?

Logging is important in PySpark because it helps you monitor the performance of your code and identify any errors or issues that may be affecting the execution of your program. It allows you to track events, debug issues, and analyze the behavior of your application.

There are several ways to optimize PySpark logging, including minimizing the amount of data being logged, using appropriate log levels, and configuring logging output to be sent to a file or external service. Additionally, you can use structured logging to make it easier to search and analyze your logs.
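As an illustration of structured logging, a formatter can emit each record as one JSON object per line. This is a sketch using only the standard library; the class name `JsonFormatter` and the extra fields `partition_id` and `records` are hypothetical choices, not part of any PySpark API.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render a log record as a single-line JSON object."""

    # Optional fields that callers may attach through the `extra` argument.
    EXTRA_FIELDS = ("partition_id", "records")

    def format(self, record):
        payload = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        for key in self.EXTRA_FIELDS:
            if hasattr(record, key):
                payload[key] = getattr(record, key)
        return json.dumps(payload)
```

A handler configured with `handler.setFormatter(JsonFormatter())` would then turn a call such as `logger.info("partition done", extra={"partition_id": 3, "records": 1200})` into a line that log search tools can filter by field.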

Executor logs are not sent to the driver automatically. A common approach is to use a custom log appender that sends log messages to a centralized logging service, such as Apache Kafka or Amazon Kinesis, rather than to the driver itself. This allows you to aggregate and analyze logs from multiple executors in near real time.
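On the Python side, the same idea can be sketched as a custom `logging.Handler` that buffers messages and ships them in batches. The class `BatchingHandler` and its `send_batch` callable are hypothetical; `send_batch` stands in for whatever client you use to reach your logging service, and no real Kafka or Kinesis API is shown here.

```python
import logging


class BatchingHandler(logging.Handler):
    """Buffer formatted records and hand them to `send_batch` in groups."""

    def __init__(self, send_batch, batch_size=100):
        super().__init__()
        self.send_batch = send_batch  # callable taking a list of strings
        self.batch_size = batch_size
        self.buffer = []

    def emit(self, record):
        try:
            self.buffer.append(self.format(record))
            if len(self.buffer) >= self.batch_size:
                self.flush()
        except Exception:
            self.handleError(record)

    def flush(self):
        self.acquire()  # the handler lock is re-entrant, so emit() may call this
        try:
            if self.buffer:
                batch, self.buffer = self.buffer, []
                self.send_batch(batch)
        finally:
            self.release()

    def close(self):
        self.flush()  # ship whatever is left before shutting down
        super().close()
```

Batching trades a little delay for far fewer network calls. Note that this sketch sends while holding the handler lock, so a slow `send_batch` would block other logging calls; a production version would likely hand batches to a background thread.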

You can monitor PySpark performance using logs by analyzing metrics such as task duration, shuffle size, and executor memory usage. You can also use tools like the Spark Web UI or third-party monitoring services to view these metrics alongside your logs.
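For example, once durations have been collected from your logs, a small helper can flag unusually slow partitions or tasks. The function `find_stragglers` and the threshold of twice the median are assumptions chosen for illustration, not a standard Spark metric.

```python
from statistics import median


def find_stragglers(durations, factor=2.0):
    """Return the ids whose duration exceeds `factor` times the median.

    `durations` maps an id (for example a partition index) to seconds.
    """
    if not durations:
        return []
    threshold = factor * median(durations.values())
    return sorted(key for key, secs in durations.items() if secs > threshold)
```

Calling `find_stragglers({0: 1.0, 1: 1.1, 2: 0.9, 3: 5.0})` would single out partition 3, which is a common sign of data skew worth investigating.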

Some common logging mistakes to avoid in PySpark include logging too much data, using inappropriate log levels, and failing to configure logging output properly. It’s also important to use structured logging and consistent log formats to make it easier to search and analyze your logs.