Are you tired of having to manually split your Python generators or iterables? Do you find it tedious to write your own code just to partition your data into smaller chunks? If so, then you might be interested in splitevery, a small Python helper function that does exactly that.

Splitevery is an efficient and intuitive way to split your data on-the-fly as you generate it. With Splitevery, you can easily create smaller partitions of your data without sacrificing performance or readability. Whether you’re working with large data sets or just need to create batches for processing, Splitevery can simplify your workflow and improve your productivity.

In this article, we’ll explore the ins and outs of Splitevery and demonstrate how you can use this powerful function to streamline your Python programming. From basic usage to advanced features, we’ll show you how to take advantage of Splitevery to make your code more efficient and effective. So, whether you’re a seasoned Python developer or new to the language, join us as we explore the world of efficient Python splitting with Splitevery!

If you’re looking for a way to easily split your Python generators or iterables without sacrificing performance, then Splitevery is the perfect solution for you. With its intuitive interface and powerful features, Splitevery can simplify your workflow and help you get more done in less time. So, don’t wait – dive into the world of efficient Python splitting with Splitevery today!

“Split A Generator/Iterable Every N Items In Python (Splitevery)” ~ bbaz

Introduction

Splitting generators and iterables is a common problem in programming, especially when dealing with large datasets. Python offers several ways to split generators and iterables, and one of the cleanest is a short “splitevery” helper built on the islice function from the itertools module. Note that the itertools module itself has no function called splitevery; you define the helper yourself. In this article, we will explore the different ways of splitting generators and iterables in Python and compare them with the “splitevery” approach.

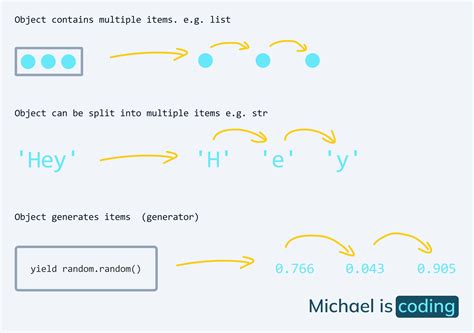

The Problem of Splitting Generators and Iterables

Generators and iterables are commonly used in Python to represent large datasets. However, processing these datasets can be challenging due to their size. One common problem when working with generators and iterables is splitting them into smaller chunks or batches. There are several ways of solving this problem in Python, including:

- Using a for loop

- Using the zip function

- Using the slice function

- Using the grouper recipe

- Using the splitevery function

Using a For Loop

One way of splitting a generator or iterable into smaller chunks is by using a for loop. This approach involves iterating over the generator or iterable and adding the items to a new list until the desired chunk size is reached. Here’s an example:

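```python
def split_every_n(iterable, n):
    """Split an iterable into lists of length n (the last one may be shorter)."""
    it = iter(iterable)
    while True:
        chunk = []
        try:
            for _ in range(n):
                chunk.append(next(it))
        except StopIteration:
            # The input ran out: emit any partial chunk, but never an empty one.
            if chunk:
                yield chunk
            return
        yield chunk
```

This approach works, but it can be slow for large datasets because every single item goes through a Python-level next() call and append(). Approaches built on zip, slicing or islice push that per-item work into C.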

Using the Zip Function

The zip function can also be used to split a generator or iterable into smaller chunks. This approach involves using zip to group the items in the generator or iterable into tuples of size n. Here’s an example:

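```python
def split_every_n(iterable, n):
    """Split an iterable into tuples of length n."""
    # n references to the *same* iterator, so each tuple that zip builds
    # takes the next n items in order.
    args = [iter(iterable)] * n
    return zip(*args)
```

This approach is usually faster than the for loop because zip does its looping in C and builds tuples rather than growing lists item by item. However, it has a couple of drawbacks: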

- Leftover items that don't fill a complete final tuple are silently dropped

- The zip function returns tuples, not lists
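A quick check of what zip actually does with leftover items: with seven items and n = 3, the seventh item never appears. The sketch below compares plain zip with itertools.zip_longest, which keeps leftovers by padding the last tuple, and, on Python 3.10 or newer, zip(..., strict=True), which raises ValueError instead of losing data. The helper names zip_chunks and zip_longest_chunks are illustrative only.

```python
import sys
from itertools import zip_longest

def zip_chunks(iterable, n):
    """Plain zip grouping: items that don't fill a final tuple are dropped."""
    args = [iter(iterable)] * n
    return list(zip(*args))

def zip_longest_chunks(iterable, n, fillvalue=None):
    """zip_longest grouping: the final tuple is padded with fillvalue instead."""
    args = [iter(iterable)] * n
    return list(zip_longest(*args, fillvalue=fillvalue))

print(zip_chunks(range(7), 3))          # [(0, 1, 2), (3, 4, 5)]  (6 is lost)
print(zip_longest_chunks(range(7), 3))  # [(0, 1, 2), (3, 4, 5), (6, None, None)]

# Python 3.10+: strict=True turns the silent loss into a ValueError.
if sys.version_info >= (3, 10):
    args = [iter(range(7))] * 3
    try:
        list(zip(*args, strict=True))
    except ValueError as exc:
        print("strict zip refused the input:", exc)
```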

Using the Slice Function

Slicing can also be used to split a sequence into smaller chunks. This approach uses range to step through the indices 0, n, 2n and so on, and takes the slice sequence[i:i+n] at each step. Here’s an example:

```python
def split_every_n(sequence, n):
    """Split a sequence (list, tuple, str, ...) into slices of length n."""
    for i in range(0, len(sequence), n):
        yield sequence[i:i + n]
```

This approach is also fast, because each slice is copied in C rather than item by item in Python. However, it has a couple of drawbacks:

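- It only works on sequences that support len() and slicing (lists, tuples, strings), not on generators or other one-shot iterables

- Each slice copies its items, so for a list every chunk is a new list, and a generator would first have to be converted to a list in full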
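Because slicing needs len() and indexing, this approach fails as soon as it is handed a generator. The sketch below (slice_chunks is an illustrative name) works on a list and a string but raises TypeError on a generator. Converting the generator with list() first works, but it loads the entire input into memory, which defeats the purpose for large streams.

```python
def slice_chunks(sequence, n):
    """Yield consecutive slices of length n from a sequence (last may be shorter)."""
    for i in range(0, len(sequence), n):
        yield sequence[i:i + n]

print(list(slice_chunks([1, 2, 3, 4, 5], 2)))  # [[1, 2], [3, 4], [5]]
print(list(slice_chunks("abcdefg", 3)))        # ['abc', 'def', 'g']

squares = (x * x for x in range(5))
try:
    list(slice_chunks(squares, 2))
except TypeError as exc:
    # Generators have no len(), so the very first step fails.
    print("generator input fails:", exc)

# Workaround: materialize first (memory grows with the size of the input).
print(list(slice_chunks([x * x for x in range(5)], 2)))  # [[0, 1], [4, 9], [16]]
```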

Using the Grouper Recipe

The grouper recipe is a short function from the “Itertools Recipes” section of the Python documentation. It is not part of the itertools module itself, so you copy it into your own code; it builds on the zip_longest function, which is in itertools. Here’s an example:

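```python
from itertools import zip_longest

def grouper(iterable, n, fillvalue=None):
    """Collect data into tuples of length n, padding the last one with fillvalue."""
    args = [iter(iterable)] * n
    return zip_longest(*args, fillvalue=fillvalue)

def split_every_n(iterable, n):
    """Split an iterable into lists of length n (the last one may be shorter)."""
    # Pad with a private sentinel so real falsy values (0, "", None) survive.
    sentinel = object()
    return ([x for x in group if x is not sentinel]
            for group in grouper(iterable, n, fillvalue=sentinel))
```

This approach lets zip_longest do the grouping in C and, unlike slicing, works on any iterable. However, it has one drawback: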

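- The last group is padded with fillvalue, so the padding must be stripped afterwards without also removing real items (filter(None, ...), for example, would drop every 0 and empty string as well)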
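The padding in the last group is where the grouper approach goes wrong most easily. filter(None, ...) removes every falsy value, not just the padding, so real zeros and empty strings in the data disappear too. The sketch below contrasts that with stripping a private sentinel object, which cannot collide with real data. It uses the older, simpler form of the grouper recipe shown in this article; chunks_filter_none and chunks_sentinel are illustrative names.

```python
from itertools import zip_longest

def grouper(iterable, n, fillvalue=None):
    """Older form of the itertools docs recipe: fixed-length tuples, last one padded."""
    args = [iter(iterable)] * n
    return zip_longest(*args, fillvalue=fillvalue)

def chunks_filter_none(iterable, n):
    """Pitfall: filter(None, ...) also drops real falsy values such as 0."""
    return [list(filter(None, group)) for group in grouper(iterable, n)]

def chunks_sentinel(iterable, n):
    """Pad with a unique sentinel object and strip only that sentinel."""
    sentinel = object()
    return [[x for x in group if x is not sentinel]
            for group in grouper(iterable, n, fillvalue=sentinel)]

data = [0, 1, 2, 0, 4]
print(chunks_filter_none(data, 2))  # [[1], [2], [4]]  (the zeros are gone)
print(chunks_sentinel(data, 2))     # [[0, 1], [2, 0], [4]]
```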

The “Splitevery” Function

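The “splitevery” function can be used to split a generator or iterable into smaller chunks. Despite the name, it is not part of the itertools module: it is a short helper that you define yourself on top of itertools.islice. (Since Python 3.12, the standard library’s itertools.batched does the same job, yielding tuples instead of lists.) Here’s an example: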

```python
from itertools import islice

def splitevery(iterable, n):
    """Split an iterable into lists of length n (the last one may be shorter)."""
    it = iter(iterable)
    while True:
        # islice pulls at most n items from the shared iterator.
        chunk = list(islice(it, n))
        if not chunk:
            return  # the input is exhausted
        yield chunk
```

The “splitevery” function is fast in practice because islice does the per-item work in C, and unlike slicing it works on any iterable, including generators. It does build a new list for each chunk, but it produces chunks lazily, so only one chunk is held in memory at a time. It also handles the edge cases cleanly: a final chunk with fewer than n items is yielded as-is, no items are dropped, and no empty chunk is emitted at the end.
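Because splitevery only pulls n items at a time, it works even on an infinite generator. The sketch below takes the first three chunks from itertools.count(), checks the two edge cases described above, and then, on Python 3.12 or newer, confirms that the standard library’s itertools.batched produces the same groups (as tuples). The version check and the comparison are illustrative additions, not part of the original function.

```python
import sys
from itertools import count, islice

def splitevery(iterable, n):
    """Yield lists of n items from iterable; the last list may be shorter."""
    it = iter(iterable)
    while True:
        chunk = list(islice(it, n))
        if not chunk:
            return
        yield chunk

# Lazy: only the first 3 chunks (9 numbers) are ever pulled from count().
print(list(islice(splitevery(count(), 3), 3)))  # [[0, 1, 2], [3, 4, 5], [6, 7, 8]]

print(list(splitevery(range(7), 3)))  # [[0, 1, 2], [3, 4, 5], [6]]
print(list(splitevery(range(6), 3)))  # [[0, 1, 2], [3, 4, 5]]  (no trailing [])

if sys.version_info >= (3, 12):
    from itertools import batched  # in the standard library since Python 3.12
    assert [list(b) for b in batched(range(7), 3)] == list(splitevery(range(7), 3))
```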

Comparison Table

Let’s compare the different approaches discussed in this article:

| Approach | Pros | Cons |
|---|---|---|
| For loop | Easy to understand; works on any iterable | Per-item Python loop is slow for large datasets |
| Zip function | Fast; works on any iterable | Returns tuples, not lists; silently drops leftover items that don't fill a final tuple |
| Slice function | Fast | Only works on sequences, not generators; each slice copies its items |
| Grouper recipe | Fast; works on any iterable | Padding in the last group must be stripped carefully |
| Splitevery function | Fast; works on any iterable; lazy, one chunk in memory at a time; keeps a shorter final chunk | Builds a new list per chunk; not in the standard library, so you define it yourself |
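The “Fast” entries in the table are rough guides; the actual ranking depends on the Python version, the chunk size and the input. A minimal timeit harness lets you measure on your own data. It assumes you want lists of chunks and that the input fits in a list (so the slicing variant can run); the zip variant is left out because it drops leftover items and so does not produce the same output. All function names here are illustrative, and each run first checks that every approach returns identical chunks.

```python
import timeit
from itertools import islice, zip_longest

def with_loop(iterable, n):
    """Plain for loop with next() and append()."""
    it = iter(iterable)
    while True:
        chunk = []
        for _ in range(n):
            try:
                chunk.append(next(it))
            except StopIteration:
                if chunk:
                    yield chunk
                return
        yield chunk

def with_slicing(sequence, n):
    """Slicing: sequences only."""
    for i in range(0, len(sequence), n):
        yield sequence[i:i + n]

def with_grouper(iterable, n):
    """Grouper recipe with sentinel padding stripped."""
    sentinel = object()
    args = [iter(iterable)] * n
    groups = zip_longest(*args, fillvalue=sentinel)
    return ([x for x in g if x is not sentinel] for g in groups)

def with_islice(iterable, n):
    """splitevery: islice pulls up to n items per chunk."""
    it = iter(iterable)
    while chunk := list(islice(it, n)):
        yield chunk

APPROACHES = {
    "for loop": with_loop,
    "slicing": with_slicing,
    "grouper": with_grouper,
    "islice (splitevery)": with_islice,
}

def benchmark(size=100_000, n=100, repeat=5):
    """Return the best-of-`repeat` time in seconds for each approach."""
    data = list(range(size))
    expected = list(with_islice(data, n))
    results = {}
    for name, fn in APPROACHES.items():
        # Sanity check: every approach must produce identical chunks.
        assert list(fn(data, n)) == expected, name
        timer = timeit.Timer(lambda fn=fn: list(fn(data, n)))
        results[name] = min(timer.repeat(repeat=repeat, number=1))
    return results

if __name__ == "__main__":
    for name, seconds in sorted(benchmark().items(), key=lambda kv: kv[1]):
        print(f"{name:22} {seconds * 1000:8.2f} ms")
```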

Conclusion

Splitting generators and iterables is a common problem in programming, especially when dealing with large datasets. Python provides several ways of solving this problem, including using a for loop, using the zip function, using the slice function, using the grouper recipe, and using the “splitevery” function. The approaches differ in what they accept and in what they do with leftover items: zip drops them, slicing needs a sequence, and grouper needs its padding stripped. The “splitevery” function avoids all three problems: it uses the islice function from the itertools module to pull one chunk at a time from any iterable, keeps only that chunk in memory, and yields a shorter final chunk instead of dropping or padding it. Therefore, if you need to split a generator or iterable in Python, “splitevery” (or itertools.batched on Python 3.12 and later) is a good default.

Thank you for taking the time to read our article on Efficient Python Splitting of Generators/Iterables with Splitevery. We hope that you have found it informative and useful in your programming endeavors. Our goal was to provide you with a comprehensive guide on how to use this powerful tool to break down large datasets into manageable chunks.

As we have discussed, the Splitevery function is an excellent solution to splitting up data. Its ability to divide iterators or generators into smaller parts makes it a valuable tool for anyone working with large data sets in Python. It is also more memory-efficient than approaches that materialize the whole input first, such as converting a generator to a list so that it can be sliced: it builds only one chunk at a time, which can be especially important when dealing with large datasets.

We encourage you to try out Splitevery in your own code, and see how it can help you streamline your processing and analysis tasks. With its ease of use and low memory overhead, we believe Splitevery is a useful tool for any Python programmer working with large datasets. Thanks again for reading, and we look forward to bringing you more tech tips and insights in the future!

People Also Ask about Efficient Python Splitting of Generators/Iterables with Splitevery:

What is the purpose of splitting generators/iterables using Splitevery?

Splitevery is used to split a generator/iterable into smaller chunks of a fixed size, making it easier to work with large datasets or to process them in parallel.

How does Splitevery work?

Splitevery takes two arguments: the generator/iterable to be split and the size of each chunk. It returns an iterator that produces the chunks one by one, using islice to pull up to that many items at a time.

Is Splitevery efficient?

Yes. Splitevery generates the chunks only as they are needed, rather than creating them all at once and storing them in memory.

Can Splitevery be used with any type of generator/iterable?

Yes, Splitevery can be used with any iterable, including lists, tuples, sets (in their arbitrary iteration order), generators, and custom objects that define __iter__.

What are some practical applications of Splitevery?

- Processing large datasets in parallel

- Splitting text files into smaller parts for faster processing

- Creating batches of data for machine learning models

- Converting a single stream of data into multiple streams for parallel processing
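As a sketch of the text-file and batching use cases, the code below reads a file-like object line by line and hands fixed-size batches to a processing function. io.StringIO stands in for a real file, and process_batch is a hypothetical placeholder for whatever your pipeline does with each batch (a bulk database insert, a model’s predict call, and so on). Because a file object is itself a lazy iterator over its lines, only one batch of lines is in memory at a time.

```python
import io
from itertools import islice

def splitevery(iterable, n):
    """Yield lists of n items from iterable; the last list may be shorter."""
    it = iter(iterable)
    while True:
        chunk = list(islice(it, n))
        if not chunk:
            return
        yield chunk

def process_batch(lines):
    """Hypothetical stand-in for real work: count the words in one batch."""
    return sum(len(line.split()) for line in lines)

def word_counts_per_batch(fileobj, batch_size):
    """Stream fileobj in batches of batch_size lines, processing each batch."""
    return [process_batch(batch) for batch in splitevery(fileobj, batch_size)]

text = "one\ntwo words\nthree words here\nfour\nfive six\n"
print(word_counts_per_batch(io.StringIO(text), 2))  # [3, 4, 2]
```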