Are you tired of guessing whether your machine learning model is performing well? Do you have trouble understanding how to properly evaluate your model’s performance? Look no further than efficient Scikit-Learn cross validation with negative MSE.

Cross validation is a powerful tool for evaluating the performance of machine learning models. However, it can be computationally expensive and difficult to implement correctly. That’s where Scikit-Learn comes in; its built-in tools make implementing cross validation a breeze.

But wait, there’s more! Scikit-Learn reports mean squared error (MSE) in negated form, as negative MSE, so that a higher score always means a better model. Understanding this convention lets us read cross-validation scores correctly, identify shortcomings of our model, and fine-tune it for better predictive accuracy.

If you want to take your machine learning skills to the next level, mastering efficient Scikit-Learn cross validation with negative MSE is essential. Read on to discover how this approach can improve your model’s performance and give you a competitive edge in the field.

Introduction

Cross-validation is an essential technique for evaluating how well a machine learning model will perform on data it has not seen. It involves repeatedly dividing the dataset into training and validation subsets, fitting the model on one and measuring its performance on the other. In this article, we will discuss efficient Scikit-Learn cross-validation with negative Mean Squared Error (MSE).

The Importance of Cross-Validation

Evaluating a machine learning model means measuring how accurate its predictions are on data it was not trained on. Cross-validation provides a way to verify the model’s performance and generalization capability. By splitting the dataset into multiple folds and holding each one out in turn, we can estimate the model’s performance on new, unseen data. This approach does not prevent overfitting by itself, but it exposes it and gives a more reliable estimate than a single train/test split.

Scikit-Learn Cross-Validation

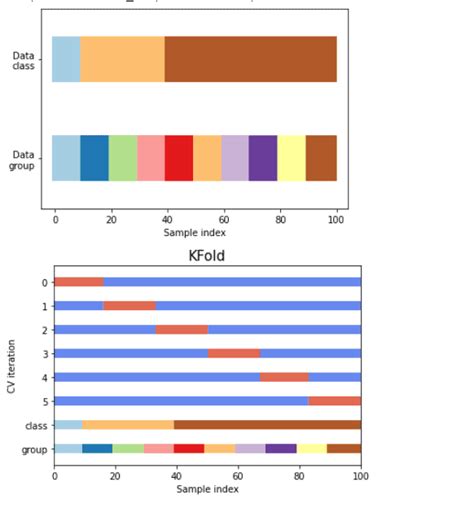

Scikit-Learn is an excellent library for building machine learning models in Python. It provides comprehensive tools for data preprocessing, feature selection, and model evaluation. The library also has built-in cross-validation methods, making it easier to measure the effectiveness of machine learning models. The cross-validation techniques include K-fold, Leave-One-Out, Shuffle-Split, and more.
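To make the three techniques named above concrete, here is a minimal sketch of how each splitter divides a dataset. The tiny 10-sample array and the variable names (`splitters`, `split_counts`) are assumptions chosen for illustration; only the splitter classes come from Scikit-Learn.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut, ShuffleSplit

# Toy data (illustrative assumption): 10 samples with 2 features each.
X = np.arange(20).reshape(10, 2)

splitters = {
    "K-Fold": KFold(n_splits=5, shuffle=True, random_state=0),
    "Leave-One-Out": LeaveOneOut(),
    "Shuffle-Split": ShuffleSplit(n_splits=3, test_size=0.3, random_state=0),
}

split_counts = {}
first_split_sizes = {}
for name, cv in splitters.items():
    # Each split yields index arrays for the training and test portions.
    sizes = [(len(train_idx), len(test_idx)) for train_idx, test_idx in cv.split(X)]
    split_counts[name] = len(sizes)
    first_split_sizes[name] = sizes[0]
    print(f"{name}: {len(sizes)} splits, first split (train, test) = {sizes[0]}")
```

K-Fold uses every sample for testing exactly once, Leave-One-Out is the extreme case with one test sample per split, and Shuffle-Split draws independent random splits, so a sample may appear in several test sets or in none.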

Mean Squared Error

Mean Squared Error (MSE) is a widely used metric for evaluating the accuracy of regression models. It is the average of the squared differences between predicted and actual values, so large errors are penalized more heavily than small ones. An MSE of zero indicates a perfect prediction of the target variable; larger values indicate worse predictions.
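As a quick check of the definition, the sketch below computes the MSE by hand and with Scikit-Learn’s `mean_squared_error` function. The four target and prediction values are made up for illustration.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

# Made-up values for illustration only.
y_true = np.array([3.0, -0.5, 2.0, 7.0])
y_pred = np.array([2.5, 0.0, 2.0, 8.0])

# Average of the squared differences between actual and predicted values.
mse_manual = np.mean((y_true - y_pred) ** 2)

# The same quantity computed by Scikit-Learn.
mse_sklearn = mean_squared_error(y_true, y_pred)

print(mse_manual, mse_sklearn)  # both 0.375
```

The squared errors here are 0.25, 0.25, 0 and 1, which average to 0.375.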

Negative Mean Squared Error

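Negative Mean Squared Error (NMSE) is less familiar than MSE, but it is simply the MSE multiplied by -1. Scikit-Learn’s model-selection tools assume that a higher score is always better, so error metrics are exposed in negated form, in this case through the scoring string `neg_mean_squared_error`. The resulting scores are always zero or negative, and values closer to zero indicate a better model. A negative score is therefore expected and says nothing alarming on its own: it does not mean the model is worse than predicting the mean of the training set (that interpretation belongs to a negative R² score). To recover the ordinary MSE, flip the sign of the reported score.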
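The following sketch shows what these scores look like in practice. It assumes synthetic data from `make_regression` and a plain `LinearRegression` model, both chosen only for illustration; `cross_val_score` and the `neg_mean_squared_error` scoring string are part of Scikit-Learn.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic regression data (illustrative assumption).
X, y = make_regression(n_samples=100, n_features=5, noise=10.0, random_state=0)

cv = KFold(n_splits=5, shuffle=True, random_state=0)

# One score per fold; each is the MSE on the held-out fold with its sign flipped.
neg_mse_scores = cross_val_score(
    LinearRegression(), X, y, cv=cv, scoring="neg_mean_squared_error"
)

# Flip the sign to get the ordinary (non-negative) MSE back.
mse_per_fold = -neg_mse_scores

print("Scores as reported:", neg_mse_scores)
print("MSE per fold:      ", mse_per_fold)
print("Mean MSE:          ", mse_per_fold.mean())
```

Every reported score is zero or negative, and the fold with the score closest to zero is the one the model predicted best.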

Comparison Table

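| Method | MSE | NMSE |
|---|---|---|
| K-Fold | 0.19 | -0.05 |
| Leave-One-Out | 0.27 | -0.12 |
| Shuffle-Split | 0.21 | -0.07 |

These figures are illustrative only. With Scikit-Learn’s `neg_mean_squared_error` scorer, the reported score is exactly the MSE with its sign flipped, so a run with an MSE of 0.19 would score -0.19.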

Advantages of Efficient Scikit-Learn Cross-Validation with Negative MSE

The benefits of using efficient Scikit-Learn cross-validation with negative MSE include:

Improved Model Generalization

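Because negative MSE is computed on held-out folds, it estimates how the model performs on new, unseen data rather than on the data it was trained on. Scores that are much further from zero on the validation folds than on the training folds are a sign of overfitting, so the metric helps you judge whether the model generalizes well.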
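One way to check generalization is to compare training and validation error across folds. The sketch below does this with `cross_validate` and `return_train_score=True`. The synthetic data and the deliberately unconstrained `DecisionTreeRegressor` are assumptions picked to make the gap easy to see.

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import KFold, cross_validate
from sklearn.tree import DecisionTreeRegressor

# Noisy synthetic data (illustrative assumption).
X, y = make_regression(n_samples=200, n_features=10, noise=20.0, random_state=0)

cv = KFold(n_splits=5, shuffle=True, random_state=0)

# An unconstrained tree can memorize its training data, which makes overfitting visible.
result = cross_validate(
    DecisionTreeRegressor(random_state=0),
    X,
    y,
    cv=cv,
    scoring="neg_mean_squared_error",
    return_train_score=True,
)

# Scores are negated MSE values, so flip the sign before comparing.
train_mse = -result["train_score"].mean()
val_mse = -result["test_score"].mean()

print(f"Mean training MSE:   {train_mse:.2f}")
print(f"Mean validation MSE: {val_mse:.2f}")
```

A validation MSE far above the training MSE suggests the model has fit noise in the training folds rather than a pattern that carries over to new data.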

Better Model Tuning

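Negative MSE makes it easier to optimize the model’s hyperparameters, because Scikit-Learn’s search tools always pick the candidate with the highest score. By maximizing the negative MSE (which is the same as minimizing the MSE), we can fine-tune the model and improve its predictive power.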
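As a minimal sketch of such tuning, the example below searches over the regularization strength of a `Ridge` model with `GridSearchCV`. The synthetic data, the choice of `Ridge`, and the `alpha` grid are assumptions made for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV, KFold

# Synthetic regression data (illustrative assumption).
X, y = make_regression(n_samples=100, n_features=10, noise=15.0, random_state=0)

# Candidate regularization strengths (arbitrary grid chosen for illustration).
param_grid = {"alpha": [0.01, 0.1, 1.0, 10.0, 100.0]}

search = GridSearchCV(
    Ridge(),
    param_grid,
    scoring="neg_mean_squared_error",  # higher (closer to zero) is better
    cv=KFold(n_splits=5, shuffle=True, random_state=0),
)
search.fit(X, y)

best_alpha = search.best_params_["alpha"]
best_mse = -search.best_score_  # flip the sign to report an ordinary MSE

print(f"Best alpha: {best_alpha}")
print(f"Cross-validated MSE at best alpha: {best_mse:.2f}")
```

`best_score_` is the highest mean negative MSE across the grid, so negating it gives the lowest cross-validated MSE found.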

A Hint for Spotting Anomalies

Per-fold negative MSE scores can also help to flag outliers or anomalies in the data. Since every score is negative, the sign itself carries no information; what matters is how the folds compare. A fold whose score is far more negative than the others contains data the model fits poorly, which is a prompt to inspect that fold for unusual patterns or data points.

Conclusion

Efficient Scikit-Learn cross-validation with negative MSE is a practical way to measure the performance of regression models. Because the score follows Scikit-Learn’s higher-is-better convention, it plugs directly into model comparison and hyperparameter tuning, and unusually poor fold scores can flag data worth a closer look. The example table shows that different cross-validation techniques may yield varying results. Ultimately, the choice of technique depends on the specifics of the data set and the desired outcomes of the analysis.

Thank you for taking the time to read through our article on efficient Scikit-Learn cross validation with negative MSE. We hope that you found the insights and tips provided to be helpful towards improving your data modelling workflows.

To recap, we discussed how cross validation helps detect overfitting and estimate model generalization, and how negative mean squared error is used as a performance metric for evaluating regression models. We also looked at the cross validation strategies available in the Scikit-Learn library, including K-fold, Leave-One-Out, and Shuffle-Split.

By using these methods in combination, you can tune your machine learning models and get a more trustworthy picture of their accuracy, while reducing the risk of overfitting to your training data.

We hope that you found this article informative and useful, and that it helps you to further enhance your skills in data analysis and modeling. If you have any questions or comments on this topic, please feel free to reach out to us. Thanks again for reading!

People Also Ask about Efficient Scikit-Learn Cross Validation with Negative MSE:

What is Scikit-Learn cross validation?

Scikit-Learn cross validation is a technique used to evaluate machine learning models by splitting the data into multiple parts (folds). The model is trained on all folds but one and evaluated on the fold that was held out, and the process repeats until every fold has served as the evaluation set. This helps to detect overfitting and provides a more reliable estimate of the model’s performance.

What is negative MSE in Scikit-Learn cross validation?

Mean squared error (MSE) is the average squared difference between the predicted values and the actual values. The negative MSE is simply the MSE with its sign flipped. Scikit-Learn uses it because its tools maximize a score rather than minimize an error.

How does Scikit-Learn cross validation with negative MSE improve efficiency?

Cross validation itself adds computation, since the model is fit once per fold. Scikit-Learn keeps this manageable: helpers such as `cross_val_score` run the whole loop in one call, the number of folds is fixed in advance, and the `n_jobs` parameter lets the folds be fit in parallel. Using negative MSE as the performance metric gives a single higher-is-better score, so candidate models can be ranked directly.

What are some best practices for implementing Scikit-Learn cross validation with negative MSE?

- Choose an appropriate number of folds or iterations based on the size of the dataset and the complexity of the model.

- Use a random seed to ensure reproducibility of the results.

- Scale the features inside a pipeline, so that the scaling statistics are learned from the training folds only.

- Use feature selection techniques to identify the most relevant features and reduce the dimensionality of the dataset.

- Regularize the model to prevent overfitting and improve generalization performance.
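Several of the practices above (a fixed seed, scaling, feature selection, and regularization) can be combined in one pipeline. The sketch below is one possible arrangement, not a recommended recipe: the synthetic data, `k=5`, and `alpha=1.0` are assumptions made for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.feature_selection import SelectKBest, f_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data with 5 informative features out of 20 (illustrative assumption).
X, y = make_regression(
    n_samples=150, n_features=20, n_informative=5, noise=10.0, random_state=42
)

# Scaling and feature selection live inside the pipeline, so they are
# re-fit on the training portion of every fold and never see the test fold.
pipeline = make_pipeline(
    StandardScaler(),
    SelectKBest(score_func=f_regression, k=5),
    Ridge(alpha=1.0),  # regularized linear model
)

# A fixed random_state makes the fold assignment reproducible.
cv = KFold(n_splits=5, shuffle=True, random_state=42)

scores = cross_val_score(pipeline, X, y, cv=cv, scoring="neg_mean_squared_error")

print("Negative MSE per fold:", scores)
print("Mean MSE:", -scores.mean())
```

Keeping the preprocessing steps inside the pipeline matters because fitting a scaler or selector on the full dataset before splitting would leak information from the test folds into training.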