Do you want to analyze text data in your pandas data frame but don’t know where to start? Counting word frequencies is a great first step, and the steps below show how to do it efficiently.

First, import the necessary libraries: pandas and nltk. Then load your data into a pandas data frame and convert the text to lowercase, so that capitalization differences such as “The” and “the” are not counted as separate words.

Next, use the nltk library to tokenize your text data and remove stop words. This will help to narrow down your analysis to only meaningful words that contribute to the overall message of the text.

Finally, flatten the tokens into a single column and call pandas’ built-in value_counts() method to count the frequency of each unique word. The result is sorted in descending order by default, so the most frequently used words appear first.

By following these simple steps, you’ll be able to efficiently count word frequencies in your pandas data frame, giving you valuable insights into the language used in your text data. So, what are you waiting for? Give it a try and see what you can learn from your data!


Introduction

If you are dealing with text data in your Pandas data frame, it’s highly likely that you will need to count the frequency of words in your dataset. This can be a time-consuming task if you do not have the right tools. Fortunately, Pandas provides a simple and efficient way to count word frequencies in your data frame.

Step 1: Import Libraries

The first step is to import the necessary libraries. In this case, we will be using the pandas and nltk libraries.

| Library | Functionality |
|---|---|
| Pandas | Data manipulation library |
| NLTK | Natural Language Toolkit library for text data processing |

Step 2: Load Data

The next step is to load your data into a Pandas data frame. For the purpose of this tutorial, we will be using the small four-sentence sample dataset shown below, where each row has a text and a label.

Sample Dataset

| Text | Label |
|---|---|
| The quick brown fox jumped over the lazy dog. | Animal |
| The cat climbed the tree. | Animal |
| The tree was tall. | Plant |
| The flower was beautiful. | Plant |
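As a minimal sketch, the sample table can be built directly as a data frame and lowercased in one step. The column name text_lower is a hypothetical name chosen for this illustration; it is not part of the original dataset.

```python
import pandas as pd

# The four sample rows from the table above.
df = pd.DataFrame({
    "Text": [
        "The quick brown fox jumped over the lazy dog.",
        "The cat climbed the tree.",
        "The tree was tall.",
        "The flower was beautiful.",
    ],
    "Label": ["Animal", "Animal", "Plant", "Plant"],
})

# Lowercase a copy of the text so "The" and "the" count as the same word.
# "text_lower" is a hypothetical column name used only in this sketch.
df["text_lower"] = df["Text"].str.lower()

print(df[["text_lower", "Label"]])
```

Keeping the original Text column alongside the lowercased copy makes it easy to check the cleaned text against the source.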

Step 3: Tokenize Text Data

Tokenization is the process of splitting text into individual tokens or words. We can use the NLTK library to tokenize our text data.
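One possible way to tokenize a text column is sketched below. It assumes NLTK's RegexpTokenizer with the pattern \w+, which needs no downloaded NLTK data and drops punctuation. If NLTK is not installed, the sketch falls back to an equivalent regular expression from the standard library. Other NLTK tokenizers, such as word_tokenize, may split text differently.

```python
import re

import pandas as pd

try:
    # RegexpTokenizer works without any downloaded NLTK data.
    from nltk.tokenize import RegexpTokenizer
    tokenize = RegexpTokenizer(r"\w+").tokenize
except ImportError:
    # Fallback with the same pattern if NLTK is not installed.
    tokenize = re.compile(r"\w+").findall

texts = pd.Series([
    "The quick brown fox jumped over the lazy dog.",
    "The cat climbed the tree.",
])

# Lowercase first, then split each sentence into a list of word tokens.
tokens = texts.str.lower().apply(tokenize)
print(tokens.tolist())
```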

Step 4: Convert Data into a List of Lists

Now that we have tokenized our text data, we can convert it into a list of lists, with each sub-list representing a corresponding row in our data frame.

Step 5: Create a Pandas Data Frame

We can now create a new Pandas data frame using our list of lists. This will make it easier to manipulate and analyze our data.

Pandas Data Frame

| Text | Label |
|---|---|
| [The, quick, brown, fox, jumped, over, the, lazy, dog] | Animal |
| [The, cat, climbed, the, tree] | Animal |
| [The, tree, was, tall] | Plant |
| [The, flower, was, beautiful] | Plant |

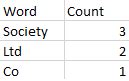
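A sketch of Steps 4 and 5 follows, assuming the tokens are already available as plain Python lists. The variable names token_lists, labels, and tokens_df, and the n_tokens column, are hypothetical names for this illustration.

```python
import pandas as pd

# One sub-list of tokens per row of the original data frame.
token_lists = [
    ["The", "quick", "brown", "fox", "jumped", "over", "the", "lazy", "dog"],
    ["The", "cat", "climbed", "the", "tree"],
    ["The", "tree", "was", "tall"],
    ["The", "flower", "was", "beautiful"],
]
labels = ["Animal", "Animal", "Plant", "Plant"]

# Each cell in the "Text" column holds a whole list of tokens.
tokens_df = pd.DataFrame({"Text": token_lists, "Label": labels})

# .str.len() also works on list-valued cells and returns the token count per row.
tokens_df["n_tokens"] = tokens_df["Text"].str.len()
print(tokens_df)
```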

Step 6: Count Word Frequencies

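Now that we have our data in a Pandas data frame, we can count the frequency of each word. Because each cell holds a list of tokens, first flatten the column so that every token sits in its own row, then call the value_counts() method on the resulting column.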
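The counting step could look like the sketch below. It assumes lowercased tokens and uses Series.explode() to put one token on each row before calling value_counts(). The tokens_df name is a hypothetical stand-in for your own data frame.

```python
import pandas as pd

tokens_df = pd.DataFrame({
    "Text": [
        ["the", "quick", "brown", "fox", "jumped", "over", "the", "lazy", "dog"],
        ["the", "cat", "climbed", "the", "tree"],
        ["the", "tree", "was", "tall"],
        ["the", "flower", "was", "beautiful"],
    ]
})

# explode() turns each list element into its own row;
# value_counts() then counts each unique word, most frequent first.
word_counts = tokens_df["Text"].explode().value_counts()
print(word_counts.head())
```

Before stop words are removed, "the" dominates the counts, which is the motivation for the next step.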

Step 7: Remove Stop Words

Stop words are common words such as “a”, “the”, and “an” which do not carry significant meaning in a text. We can remove these stop words from our data frame using the NLTK library.

Stop Words Removed Data Frame

| Word | Count |
|---|---|
| tree | 2 |
| flower | 1 |
| quick | 1 |
| brown | 1 |
| fox | 1 |
| jumped | 1 |
| lazy | 1 |
| dog | 1 |
| cat | 1 |
| climbed | 1 |
| tall | 1 |
| beautiful | 1 |
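Stop-word removal can be sketched as a filter applied before counting. The STOP_WORDS set below is a small hand-picked assumption so that the example runs without downloads. With the NLTK stop-word corpus installed, set(nltk.corpus.stopwords.words("english")) could be used in its place.

```python
import pandas as pd

# Small illustrative stop-word set (an assumption for this sketch, not NLTK's full list).
STOP_WORDS = {"a", "an", "the", "was", "over"}

tokens = pd.Series([
    ["the", "quick", "brown", "fox", "jumped", "over", "the", "lazy", "dog"],
    ["the", "cat", "climbed", "the", "tree"],
    ["the", "tree", "was", "tall"],
    ["the", "flower", "was", "beautiful"],
])

# One token per row, then keep only tokens that are not stop words.
flat = tokens.explode()
filtered = flat[~flat.isin(STOP_WORDS)]

counts = filtered.value_counts()
print(counts)
```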

Step 8: Visualize Word Frequencies

We can use different visualization tools like matplotlib, seaborn or plotly to visualize our word frequencies.
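As one option among those libraries, a horizontal bar chart with matplotlib might look like the sketch below. The counts are a hand-typed subset of the sample results, and the output file name word_frequencies.png is a hypothetical choice.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so no display is required
import matplotlib.pyplot as plt
import pandas as pd

# A hand-typed subset of the word counts, used only for illustration.
counts = pd.Series({"tree": 2, "fox": 1, "dog": 1, "cat": 1, "flower": 1})

fig, ax = plt.subplots(figsize=(6, 3))
# Sort ascending so the most frequent word ends up at the top of the chart.
counts.sort_values().plot.barh(ax=ax)
ax.set_xlabel("Count")
ax.set_ylabel("Word")
ax.set_title("Word frequencies")
fig.tight_layout()
fig.savefig("word_frequencies.png")  # hypothetical output file name
```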

Step 9: Interpret the Results

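Based on the results, the labels are evenly split, with two Animal rows and two Plant rows. After stop-word removal, tree is the only word that appears more than once (in one Animal sentence and one Plant sentence); every other word, such as flower, cat, and dog, appears exactly once.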

Conclusion

In conclusion, counting word frequencies in your Pandas data frame is a simple and efficient process with the right tools. By following these easy steps, you can quickly analyze and gain insights into your text data.

Frequently asked questions about counting word frequencies in a pandas data frame:

- What is a pandas data frame? A pandas data frame is a two-dimensional, size-mutable tabular data structure with labeled rows and columns.

- What are word frequencies? Word frequencies refer to the number of times a specific word appears in a text or document.

- Why is it important to count word frequencies in a data frame? Counting word frequencies can provide insights into the most commonly used words in a dataset. This can be useful for identifying important topics or themes.

- What are the steps to efficiently count word frequencies in a pandas data frame? The four steps are listed below, followed by how to carry out each one.

- Convert the text column to lowercase

- Remove punctuation and special characters

- Tokenize the text column into individual words

- Create a frequency distribution of the words

You can use the str.lower() method to convert the text column to lowercase. For example: df['text_column'] = df['text_column'].str.lower()

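You can use regular expressions to remove punctuation and special characters. Pass regex=True so that the pattern is treated as a regular expression rather than a literal string. For example: df['text_column'] = df['text_column'].str.replace(r'[^\w\s]', '', regex=True)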

Tokenization is the process of breaking down a text into individual words or tokens.

You can use the str.split() method to tokenize the text column on whitespace. For example: df['text_column'] = df['text_column'].str.split()
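The three string steps can also be chained into one expression. The sketch below assumes a data frame with a column named text_column, as in the examples above, and two made-up rows.

```python
import pandas as pd

df = pd.DataFrame({"text_column": ["The cat climbed the tree.", "The tree was tall!"]})

cleaned = (
    df["text_column"]
    .str.lower()                              # 1. lowercase
    .str.replace(r"[^\w\s]", "", regex=True)  # 2. strip punctuation
    .str.split()                              # 3. split on whitespace
)
print(cleaned.tolist())
```

Chaining avoids overwriting text_column three times and leaves the original text untouched.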

A frequency distribution is a table that shows the frequency of each value (or range of values) in a dataset.

You can use the nltk library to create a frequency distribution of the words. For example:

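```python
import pandas as pd
from nltk.probability import FreqDist

# Assumes df['text_column'] already holds lists of tokens (see str.split() above).
# No nltk.download() call is needed here: FreqDist only counts tokens it is given.
# explode() puts one token on each row; dropna() skips rows that had no tokens.
words = df['text_column'].explode().dropna()
fdist = FreqDist(words)
print(fdist.most_common(10))  # the ten most frequent words with their counts
```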