Have you ever found yourself struggling with large text files that are difficult to manage and manipulate? It’s a common problem for many programmers and data analysts. Fortunately, Python provides an efficient solution to this issue by allowing us to split large text files into smaller, more manageable chunks.

In this article, we will guide you through the process of efficiently splitting large text files into smaller ones using Python. Our step-by-step tutorial is perfect for both beginners and experienced programmers who need to work with large amounts of data.

We will cover the key concepts you need to understand to effectively split large text files, such as file I/O, string manipulation, and error handling. Additionally, we will provide you with a detailed code example that you can tweak and customize to fit your specific needs.

If you’re ready to learn how to efficiently split large text files, then this article is for you! Join us on this exciting journey and discover how Python can make your life easier when working with big data. Read on to find out more!

“Splitting Large Text File Into Smaller Text Files By Line Numbers Using Python” ~ bbaz

Introduction

Working with large text files can be cumbersome, especially when you need to extract specific information from the file. Splitting a large file into smaller ones makes it easier to handle and analyze the data. Python provides several methods for efficiently splitting large text files into smaller ones. In this article, we will compare some of these methods and give our opinion on the most efficient one.

Method 1: Using the readlines() method

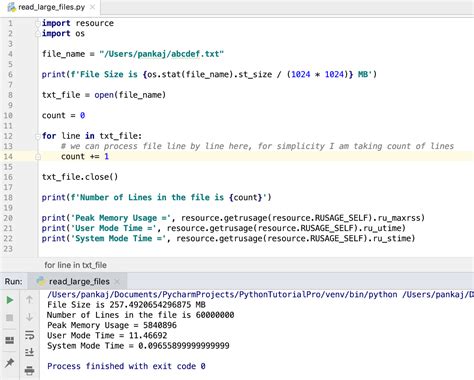

The readlines() method of a Python file object reads all of the remaining lines of a file into a list. We can use it to read a large text file and split it into smaller files based on a given number of lines per file.
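A minimal sketch of this approach follows. The function name split_with_readlines, the out_prefix parameter, and the part_1.txt, part_2.txt, ... naming scheme are illustrative choices rather than part of any library, and the sketch assumes UTF-8 input.

```python
def split_with_readlines(path, lines_per_file, out_prefix="part"):
    """Split `path` into files of at most `lines_per_file` lines each.

    Hypothetical helper: the name, parameters, and output naming
    scheme are illustrative. Assumes the input is UTF-8 text.
    """
    with open(path, encoding="utf-8") as src:
        lines = src.readlines()  # the whole file is loaded into memory here

    out_paths = []
    for start in range(0, len(lines), lines_per_file):
        # Output files are numbered from 1: part_1.txt, part_2.txt, ...
        out_path = f"{out_prefix}_{start // lines_per_file + 1}.txt"
        with open(out_path, "w", encoding="utf-8") as dst:
            dst.writelines(lines[start:start + lines_per_file])
        out_paths.append(out_path)
    return out_paths
```

For example, split_with_readlines("big.txt", 1000) would write part_1.txt, part_2.txt, and so on, each with at most 1,000 lines. Because readlines() builds a list of every line before anything is written, peak memory use grows with the size of the input, which is the drawback listed below.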

| Pros | Cons |
|---|---|
| Easy to implement | Does not work well with very large files due to memory constraints |

Method 2: Using the fileinput module

The fileinput module, part of Python's standard library, is another way to read and process files. Because it iterates over its input lazily, one line at a time, it can read a very large file without loading it all into memory and split it into smaller files after a given number of lines.

| Pros | Cons |
|---|---|
| Efficient handling of large files | Slightly more complex than using the readlines() method |

Method 3: Using the re module (regular expressions)

Python's built-in re module is a powerful tool for working with regular expressions. We can use it to split a large file into smaller ones wherever a line matches a given pattern, such as a section header or a timestamp.
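Here is a minimal sketch of pattern-based splitting with the standard re module, assuming a new output file should begin at every line that matches the pattern. The function name split_on_pattern, the out_prefix parameter, and the section_N.txt naming are hypothetical, and UTF-8 input is assumed.

```python
import re


def split_on_pattern(path, pattern, out_prefix="section"):
    """Start a new output file every time a line matches `pattern`.

    Hypothetical helper: the name, parameters, and output naming
    scheme are illustrative. Assumes UTF-8 input.
    """
    boundary = re.compile(pattern)
    out_paths = []
    dst = None
    try:
        with open(path, encoding="utf-8") as src:
            for line in src:  # lazy, line-by-line iteration
                # re.match() only matches at the start of the line. A file is
                # also opened for any text that comes before the first match.
                if dst is None or boundary.match(line):
                    if dst is not None:
                        dst.close()
                    out_path = f"{out_prefix}_{len(out_paths) + 1}.txt"
                    dst = open(out_path, "w", encoding="utf-8")
                    out_paths.append(out_path)
                dst.write(line)
    finally:
        if dst is not None:
            dst.close()
    return out_paths
```

Because re.match() anchors at the beginning of the line, a pattern that describes the first line of each section is usually enough; use boundary.search(line) instead if the marker can appear anywhere in the line.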

| Pros | Cons |
|---|---|
| Flexible and powerful method for splitting files | Can be complex to implement, especially for users who are not familiar with regular expressions |

Method 4: Using the pandas module

The pandas module is a popular Python library for data manipulation and analysis. For delimited text files such as CSV, its read_csv() function accepts a chunksize argument that reads the file a fixed number of rows at a time, so each chunk can be written to its own smaller file.
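A minimal sketch, assuming the input is a comma-separated file with a header row and that pandas 1.2 or later is installed (earlier versions cannot use the chunked reader as a context manager). The function name split_with_pandas, the out_prefix parameter, and the part_N.csv naming are hypothetical.

```python
import pandas as pd


def split_with_pandas(path, rows_per_file, out_prefix="part"):
    """Write every `rows_per_file` data rows of a CSV file to a new CSV file.

    Hypothetical helper: the name, parameters, and output naming
    scheme are illustrative. Assumes a comma-separated file with a header.
    """
    out_paths = []
    # With chunksize, read_csv returns an iterator of DataFrames instead of
    # loading the whole file at once.
    with pd.read_csv(path, chunksize=rows_per_file) as reader:
        for i, chunk in enumerate(reader, start=1):
            out_path = f"{out_prefix}_{i}.csv"
            # The header row is written to every part; index=False omits
            # pandas' row index column.
            chunk.to_csv(out_path, index=False)
            out_paths.append(out_path)
    return out_paths
```

Note that pandas parses and re-serializes each row, so quoting or number formatting in the output may differ from the original text even though the values are the same.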

| Pros | Cons |
|---|---|
| Efficient handling of large files | Requires installation of the pandas module |

Opinion on the Most Efficient Method

All the methods discussed in this article have their advantages and disadvantages. The choice of which method to use ultimately depends on the specific requirements of the project at hand. However, in our opinion, the fileinput module is the most efficient method for splitting large text files into smaller ones. Because it reads the input lazily, one line at a time, memory use stays low even for very large files, and the implementation is not much more complex than the readlines() approach.

Overall, Python provides several efficient methods for splitting large text files into smaller ones. Depending on your specific requirements, you can choose the method that best suits your needs.

Thank you for taking the time to read our article on how to Efficiently Split Large Text Files into Smaller Ones Using Python! We hope that it has provided you with valuable insights and practical tips that you can use to make your programming tasks more manageable.

Splitting large text files into smaller ones is a common task that many developers have to perform. It can be time-consuming and frustrating, especially if you don’t have the right tools or techniques at your disposal. However, with Python, you can split your large text files into smaller ones quickly and easily, without having to do it manually. This not only saves you time but also reduces the risk of errors that can occur during a manual splitting process.

If you have any questions or feedback about this article, please feel free to leave a comment below. We value your input and would love to hear from you. Additionally, if you found this article to be useful, please share it with your peers and colleagues who may also benefit from it. Thanks for reading, and happy coding!

Efficiently splitting large text files into smaller ones using Python can be a daunting task for many. To help you out, here are answers to some common questions:

- Why do I need to split large text files?

  You may need to split large text files to make them more manageable and easier to work with. For example, if you have a large log file, splitting it into smaller files based on date or time can make it easier to find specific information.

- What is the best way to split large text files using Python?

  The best way to split large text files using Python is to read the file line by line and write a specified number of lines to each new file. You can use the built-in open() function to read and write files, iterate over the input file object so that only one line is held in memory at a time, and keep a simple line counter to decide when to start the next output file.

- Can I split a large text file into multiple smaller files?

  Yes. You can specify the number of lines or the size of each smaller file, and your script opens a new output file each time that limit is reached.

- How long does it take to split a large text file?

  Splitting is largely limited by input and output, so the time depends mainly on the size of the file and on how fast your disk can read and write it. With an efficient, streaming approach, a large text file can typically be split in a matter of seconds or minutes.

- Is it possible to split a large text file without losing any data?

  Yes. As long as each line is written out exactly as it was read, the smaller files together contain all of the original content. Opening the files in binary mode, or in text mode with newline="", also preserves the original line endings.
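The last three answers can be combined into one sketch: split by an approximate size in bytes without ever breaking a line, then confirm that no data was lost by comparing a SHA-256 hash of the original file with a hash of the parts read back in order. The names split_by_size and sha256_of, the out_prefix parameter, and the chunk_N.txt naming are hypothetical.

```python
import hashlib


def split_by_size(path, max_bytes, out_prefix="chunk"):
    """Split `path` into parts of at most about `max_bytes`, never mid-line.

    Hypothetical helper: the name, parameters, and output naming scheme
    are illustrative. Binary mode copies every byte unchanged, including
    the original line endings. A single line longer than `max_bytes`
    gets a part of its own.
    """
    out_paths = []
    dst = None
    written = 0
    try:
        with open(path, "rb") as src:
            for line in src:  # binary lines end in b"\n" (except possibly the last)
                if dst is None or written + len(line) > max_bytes:
                    if dst is not None:
                        dst.close()
                    out_path = f"{out_prefix}_{len(out_paths) + 1}.txt"
                    dst = open(out_path, "wb")
                    out_paths.append(out_path)
                    written = 0
                dst.write(line)
                written += len(line)
    finally:
        if dst is not None:
            dst.close()
    return out_paths


def sha256_of(paths):
    """Hash the concatenated contents of `paths`, 1 MiB at a time."""
    digest = hashlib.sha256()
    for p in paths:
        with open(p, "rb") as f:
            for block in iter(lambda: f.read(1 << 20), b""):
                digest.update(block)
    return digest.hexdigest()
```

If sha256_of(["big.txt"]) equals sha256_of(parts), the parts joined in order are byte-for-byte identical to the original. Hashing in fixed-size blocks keeps this check memory-friendly even for very large files.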