Do you often find yourself struggling to assign row numbers to your PySpark DataFrames? Tired of numbering rows by hand or using inefficient methods that take up too much time and resources? Say no more! In this article, we will introduce you to the powerful function monotonically_increasing_id(), which can quickly assign unique, increasing IDs to the rows of your PySpark DataFrames.

If you’re tired of sifting through large data sets and numbering each row by hand, it’s time to simplify your process with monotonically_increasing_id(). This function automatically assigns a unique, increasing ID to every row of a PySpark DataFrame, saving time you can spend analyzing your data rather than managing it. Because each partition computes its IDs independently, without shuffling data or pulling it onto a single machine, the function scales to very large DataFrames. The main catch is that the IDs are not consecutive, which we cover below.

By using monotonically_increasing_id(), you can streamline your data analysis and avoid the tedium of numbering large datasets by hand. Whether you’re working on an enterprise-level Big Data project or a smaller-scale analysis, this function is a handy tool. By generating unique row IDs automatically, it lets you focus on what matters: interpreting the insights from your data. So, sit back, relax and let monotonically_increasing_id() do the heavy lifting for you!

To learn more about how to use monotonically_increasing_id() to streamline your PySpark data analysis, keep reading. We’ll give you step-by-step instructions on how to use this function and show how it can take your data analysis to the next level. Don’t get left behind in the race to extract valuable insights from data: read on and harness the power of monotonically_increasing_id() today!

“Using Monotonically_increasing_id() For Assigning Row Number To Pyspark Dataframe” ~ bbaz

Introduction

Assigning row numbers to DataFrames is a common requirement in data analysis, especially when dealing with large data sets. PySpark provides several ways to assign row numbers, including the monotonically_increasing_id() function. In this article, we will discuss what monotonically_increasing_id() is and how it can be used to assign row IDs to PySpark DataFrames.

What is monotonically_increasing_id()?

The monotonically_increasing_id() function is a built-in PySpark function (in pyspark.sql.functions) that generates monotonically increasing 64-bit integers. Each value it generates is unique and guaranteed to be increasing, but not necessarily consecutive: the current implementation stores the partition ID in the upper 31 bits and the record number within each partition in the lower 33 bits. The function takes no arguments and can be used to give every row of a DataFrame a unique ID.
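To make "increasing but not consecutive" concrete, here is a small pure-Python sketch of the ID layout described in the PySpark documentation: partition ID in the upper 31 bits, record number within the partition in the lower 33 bits. The helpers `compose_id` and `split_id` are hypothetical names for illustration only, not part of PySpark, and the layout is an implementation detail that could change between Spark versions.

```python
# Hypothetical helpers mirroring the documented ID layout (not a PySpark API).
RECORD_BITS = 33                       # lower bits: record number in the partition
RECORD_MASK = (1 << RECORD_BITS) - 1   # mask selecting those lower 33 bits


def compose_id(partition_id: int, record_number: int) -> int:
    """Build the value Spark would assign to a given row of a given partition."""
    return (partition_id << RECORD_BITS) | record_number


def split_id(row_id: int) -> tuple[int, int]:
    """Recover (partition_id, record_number) from a generated ID."""
    return row_id >> RECORD_BITS, row_id & RECORD_MASK


# The first row of partition 1 is numbered 1 * 2**33, not "next after partition 0"
first_of_partition_1 = compose_id(1, 0)
print(first_of_partition_1)
print(split_id(first_of_partition_1 + 2))
```

This is why a DataFrame spread over several partitions gets IDs such as 0, 1, 8589934592, 8589934593, and so on.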

How to Use monotonically_increasing_id()

You can add row IDs to a DataFrame with monotonically_increasing_id() in three steps:

- Import the monotonically_increasing_id function from the pyspark.sql.functions module.
- Apply the function to the DataFrame with the withColumn() method, giving the new column a name.
- Assign the result to a variable: withColumn() returns a new DataFrame that includes the IDs and leaves the original unchanged.

Example

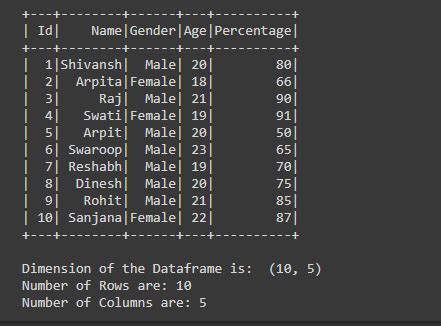

Let’s look at an example of how to use monotonically_increasing_id() in PySpark. Suppose we have the following DataFrame:

| ID | Name | Age |
|----|------|-----|
| 1 | John | 25 |
| 2 | Mary | 30 |
| 3 | Tom | 28 |

To assign row IDs to the DataFrame, we can use monotonically_increasing_id() as follows:

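```python
from pyspark.sql.functions import monotonically_increasing_id

# Add a "row_num" column holding a unique, increasing 64-bit ID per row
df_with_row_numbers = df.withColumn("row_num", monotonically_increasing_id())
df_with_row_numbers.show()
```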

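If all three rows sit in a single partition, the result looks like this (with several partitions, the IDs stay unique and increasing but are no longer 0, 1, 2):

| ID | Name | Age | row_num |
|----|------|-----|---------|
| 1 | John | 25 | 0 |
| 2 | Mary | 30 | 1 |
| 3 | Tom | 28 | 2 |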

Pros and Cons

Advantages

There are several advantages to using monotonically_increasing_id() to assign row IDs:

- The function is built into PySpark, so there is no need to install any additional libraries.

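- The function generates values that are unique and increasing within a DataFrame, so the column works as a row key, for example to record the current row order and restore it later with orderBy(). Because the values depend on partitioning, they should not be used to line up rows from two different DataFrames in a join.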

- The function is fast even on large data sets: each partition computes its IDs independently, with no shuffle and no need to gather the data on one machine.

Disadvantages

However, there are also a few drawbacks to using monotonically_increasing_id():

- The function does not generate consecutive values: rows in partition 1 start at 2^33 (8589934592), which may confuse users who expect row numbers 0, 1, 2, and so on.

- The function returns 64-bit integers (LongType), which may be larger than needed for some purposes.

- The function generates values that are unique within a dataframe, but not necessarily across dataframes, which may cause issues if multiple dataframes need to be merged or combined.

Alternative Methods

Although monotonically_increasing_id() is a simple and effective way to assign row IDs, there are other methods that can also be used. These include:

- Using the row_number() window function to generate sequential row numbers.
- Creating a new DataFrame with explicit row numbers and joining it with the original DataFrame.

Each method has its own advantages and disadvantages, and the choice of method will depend on factors such as performance, data size, and user preference.

Conclusion

The monotonically_increasing_id() function is a simple and effective way to give the rows of a PySpark DataFrame unique, increasing IDs. Although it has some limitations, notably non-consecutive values, it is a useful tool in many data analysis tasks. By understanding how the function works and its pros and cons, users can make informed decisions about when and how to use it.

Thank you for taking the time to read our article on how to assign row numbers to PySpark DataFrames with monotonically_increasing_id(). We hope this guide helps simplify and streamline your data analysis. With the techniques discussed here, a single withColumn() call gives every row a unique identifier, so you never have to assign IDs by hand.

PySpark is a powerful tool for big data analysis, and built-in functions like monotonically_increasing_id() run inside Spark's engine, so they scale far better than numbering rows in a Python loop. With the steps outlined in this blog post, you can easily incorporate this function into your own projects and see the benefits first-hand.

Remember, at the end of the day, the goal is to produce high-quality analysis and insights that empower decision-making. By leveraging tools like PySpark and monotonically_increasing_id(), you can work smarter, not harder, and achieve more impactful results.

Here are some common questions about assigning row numbers to PySpark DataFrames with monotonically_increasing_id(), along with their answers:

- What is the monotonically_increasing_id() function in PySpark?

The monotonically_increasing_id() function is a built-in PySpark function that gives each row of a DataFrame a unique, monotonically increasing 64-bit ID. The IDs are not consecutive sequence numbers.

- How does monotonically_increasing_id() work in PySpark?

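The function does not use timestamps. According to the PySpark documentation, the current implementation puts the partition ID in the upper 31 bits of each 64-bit value and the record number within that partition in the lower 33 bits. Every partition therefore numbers its own rows without coordinating with the others: the IDs are unique and increase in partition order, and the first row of partition p gets p * 2^33. The IDs are computed when the DataFrame is evaluated; they do not track rows as they are added over time.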

- What are the benefits of using monotonically_increasing_id() to assign row numbers in PySpark?

- It provides an easy and efficient way to add unique row IDs to DataFrames without external libraries or complex code.

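- The generated IDs are unique within the DataFrame, which makes them handy as a row key inside a single job, for example to remember the current row order. They are not globally unique and should not be used to join two separately numbered DataFrames.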

- The IDs increase with partition index and with position inside each partition, so sorting by them reproduces the DataFrame's row order at the time the IDs were assigned. They carry no time information, so time-series ordering still needs a real timestamp column.

- Are there any limitations or considerations when using monotonically_increasing_id() in PySpark?

- The generated IDs are not guaranteed to be consecutive or contiguous, as they may contain gaps due to Spark’s distributed nature and partitioning.

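- The IDs are not meant to be used as primary keys or identifiers in persistent storage or external systems. The function is non-deterministic because its result depends on partition IDs, so the same row can get a different ID if the DataFrame is recomputed with different partitioning, in another job, or in another Spark session.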

- The IDs are not random at all: they are derived from the partition index and row position, so they are entirely predictable and unsuitable for security-sensitive uses that require unguessable identifiers.