Are your Python scripts slow because they download one URL at a time? Look no further than this simple multithreaded parallel URL fetching tip! The technique lets a script issue its requests concurrently using plain threads, with no queue required.

With this method, you can quickly fetch data from multiple URLs in a single script, allowing you to save time and streamline your code. No longer will you have to wait for each request to complete before moving on to the next one. Instead, you can run multiple requests simultaneously, greatly increasing the speed and efficiency of your program.

If you’re ready to take your Python skills to the next level, then don’t hesitate to read this article from beginning to end. You’ll find step-by-step instructions for implementing this multithreading technique, as well as helpful tips and tricks for optimizing your code. Plus, you’ll learn how to avoid common pitfalls and errors that can often arise when working with threads.

So what are you waiting for? Say goodbye to slow and sluggish Python scripts and hello to lightning-fast performance with this simple multithreading parallel url fetching tip. Read on and discover how you can effortlessly boost your Python skills today!

“A Very Simple Multithreading Parallel Url Fetching (Without Queue)” ~ bbaz

The Need for Faster Python Code

Python is a powerful programming language that can be used for a wide range of applications. However, many developers find that their Python scripts can run slowly or become clunky, especially when dealing with large amounts of data or complex algorithms. This can lead to frustrating delays and reduced productivity, making it difficult to achieve your programming goals.

If you’re looking for a way to improve the performance of your Python scripts, then you’ve come to the right place. In this article, we’ll introduce you to a simple yet powerful technique for multithreading parallel url fetching, allowing you to quickly and easily fetch data from multiple URLs in a single script.

The Benefits of Multithreading

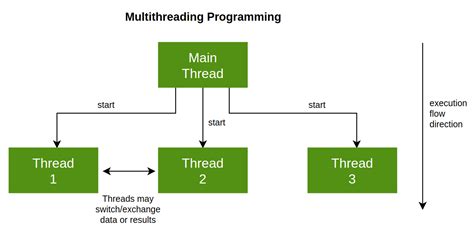

Traditionally, Python scripts operate in a linear fashion, which means that each task must be completed before moving on to the next one. With multithreading, however, you can execute multiple tasks simultaneously, greatly improving the speed and efficiency of your program.

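One of the biggest benefits of multithreading for URL fetching is that it overlaps waiting time. A network request spends most of its life waiting for the server to respond, and while one thread waits, another can send or receive. Note that in standard CPython the global interpreter lock (GIL) lets only one thread execute Python bytecode at a time, so threads speed up I/O-bound work such as downloads but do little for CPU-bound work.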

By implementing multithreading parallel url fetching, you can avoid the need for queues and other complex structures, making it easy to write and maintain your code. Plus, you’ll be able to handle more requests at once, increasing the overall throughput of your program.
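To make the benefit concrete, here is a minimal sketch that compares running several waits one after another with running them in threads. It uses `time.sleep` as a stand-in for network latency, which is an assumption made so the example runs offline; the function names `simulated_request`, `run_sequential`, and `run_threaded` are illustrative only.

```python
import threading
import time


def simulated_request(delay):
    """Stand-in for a network call (assumption): the thread only waits."""
    time.sleep(delay)


def run_sequential(count, delay):
    """Run `count` simulated requests one after another; return elapsed seconds."""
    start = time.perf_counter()
    for _ in range(count):
        simulated_request(delay)
    return time.perf_counter() - start


def run_threaded(count, delay):
    """Run `count` simulated requests in separate threads; return elapsed seconds."""
    start = time.perf_counter()
    threads = [
        threading.Thread(target=simulated_request, args=(delay,))
        for _ in range(count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()  # wait until every simulated request has finished
    return time.perf_counter() - start
```

With five requests of 0.1 seconds each, the sequential version takes roughly the sum of the delays, while the threaded version should finish in about the time of a single delay, because the waits overlap.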

The Technique: Multithreading Parallel URL Fetching

So how does multithreading parallel url fetching actually work? It’s surprisingly simple:

- Create a list of URLs that you want to retrieve data from.

- Create a function that will handle the retrieval of data for each URL.

- Create a thread for each URL, passing in the appropriate function and URL as arguments.

- Launch all threads and wait for them to complete.

By following these basic steps, you can quickly and easily implement multithreading parallel url fetching in your Python code. Of course, there are many variations and optimizations that you can use to make this technique even more effective, but these steps provide a great starting point.

Implementing the Technique: Step-by-Step Guide

If you’re ready to try out multithreading parallel url fetching for yourself, then let’s get started! Here’s a step-by-step guide to help you implement this technique:

- Import the required modules (such as ‘threading’ and ‘urllib.request’).

- Define a function to retrieve data from a single URL.

- Define a list of URLs that you want to retrieve data from.

- Create a list to hold your threads.

- For each URL in your list, create a new thread by calling the threading.Thread() constructor.

- Pass the function as the target argument and the URL in the args tuple of each thread.

- Append each thread to your list of threads.

- Launch all threads using the start() method.

- Join all threads using the join() method to wait for them to complete.

- Process the retrieved data (such as by parsing it or saving it to a file).

- Clean up any resources (such as closing open files or releasing memory).

By following these steps, you can quickly and easily implement multithreading parallel url fetching in your Python scripts, saving time and boosting your productivity.
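The steps above can be sketched as follows. This is one possible arrangement, not the only one: the helper names `fetch_url` and `fetch_all`, the 10-second timeout, and the choice to store results in a dictionary keyed by URL are all assumptions made for illustration. The `fetch` parameter exists so the download function can be swapped out, for example in tests.

```python
import threading
import urllib.request


def fetch_url(url, timeout=10):
    """Download one URL and return the response body as bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def fetch_all(urls, fetch=fetch_url):
    """Fetch every URL in its own thread.

    Returns a dict mapping each URL to its body, or to the exception
    raised while fetching it.
    """
    results = {}

    def worker(url):
        # Assuming the URLs are distinct, each thread writes its own key.
        try:
            results[url] = fetch(url)
        except Exception as exc:
            # Record the failure so one bad URL does not hide the others.
            results[url] = exc

    # One thread per URL: the function is the target, the URL goes in args.
    threads = [threading.Thread(target=worker, args=(url,)) for url in urls]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()  # block until every download has finished or failed
    return results
```

Calling `fetch_all(["https://example.com", "https://www.python.org"])` would return a dictionary with one entry per URL. Catching exceptions inside the worker matters because an exception raised in a thread does not propagate to the code that called `join()`.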

Tips and Tricks for Optimizing Your Code

While the basic technique of multithreading parallel url fetching is relatively simple, there are many ways that you can optimize your code to make it even more efficient. Here are a few tips and tricks to help you get the most out of this technique:

- Use a thread pool or queue to limit the number of simultaneous requests.

- Consider using asynchronous programming libraries like asyncio to manage multiple tasks more effectively.

- Use an HTTP client library like ‘requests’ to simplify the retrieval of data from URLs.

- Be mindful of resource usage, such as memory and disk space, and optimize your code accordingly.

- Test your code thoroughly to ensure that it works as expected and that there are no compatibility issues with other modules.
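As an illustration of the first tip, the standard library's `concurrent.futures.ThreadPoolExecutor` can cap how many requests run at once. The sketch below is a minimal example under stated assumptions: `fetch_with_pool` is a hypothetical helper name, and the default of five workers is arbitrary rather than a recommended value.

```python
from concurrent.futures import ThreadPoolExecutor
import urllib.request


def fetch_url(url, timeout=10):
    """Download one URL and return the response body as bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def fetch_with_pool(urls, fetch=fetch_url, max_workers=5):
    """Fetch URLs with at most `max_workers` requests in flight.

    Results come back in the same order as `urls`. If any fetch raises,
    the exception is re-raised here when the results are collected.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))
```

Compared with starting one thread per URL, a pool keeps the thread count fixed no matter how long the URL list grows, which is gentler on both the local machine and the servers being queried.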

Pitfalls and Errors to Watch Out For

As with any programming technique, there are a number of common pitfalls and errors that can arise when using multithreading parallel url fetching. Here are a few examples:

- Race conditions, where multiple threads try to access the same resources simultaneously, leading to unpredictable results.

- Deadlocks, where one thread is waiting for another to release a shared resource, but both are stuck and unable to proceed.

- Inefficient code, where too many threads are started at once, threads are not properly synchronized, or threads are used for CPU-bound work that gains little from them in standard CPython.

- Compatibility issues with different Python versions or modules, causing your code to break or produce unexpected results.

By being aware of these pitfalls and errors, you can write more robust and reliable code that takes full advantage of the benefits of multithreading parallel url fetching.
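To show how the first pitfall can be avoided, here is a small sketch in which several threads update shared totals under a `threading.Lock`. The `DownloadStats` class and the `record_many` helper are hypothetical names invented for this example; real code would call `record` from whatever function downloads each URL.

```python
import threading


class DownloadStats:
    """Shared totals updated by many threads."""

    def __init__(self):
        self._lock = threading.Lock()
        self.total_bytes = 0
        self.completed = 0

    def record(self, body):
        # The lock ensures both counters are updated together, so no
        # other thread can interleave between the read and the write.
        with self._lock:
            self.total_bytes += len(body)
            self.completed += 1


def record_many(stats, bodies):
    """Record each body from its own thread, then wait for all of them."""
    threads = [
        threading.Thread(target=stats.record, args=(body,))
        for body in bodies
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return stats
```

Using the lock as a context manager (`with self._lock:`) releases it even if the body of the block raises, which also removes one common way of ending up with a thread that waits forever.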

Conclusion: Boost Your Python Skills Today!

If you’re tired of slow and clunky Python code, then multithreading parallel url fetching is a great way to improve the performance of your scripts and streamline your workflow. By following the steps outlined in this article, you can quickly and easily implement this technique in your own projects, and take your Python skills to the next level.

| Pros | Cons |
|---|---|
| – Faster fetching when the work is dominated by waiting on the network | – Potential for race conditions, deadlocks, and other issues if not implemented properly |
| – Easy to implement and maintain | – Requires careful optimization and testing to ensure best performance |
| – Keeps the program busy while individual requests wait for a response | – Compatibility issues with different Python versions or modules can occur |

Overall, we highly recommend giving multithreading parallel url fetching a try if you want to boost your Python skills and overcome the limitations of linear processing. With a little bit of practice and patience, you’ll soon be able to master this technique and create faster, more powerful Python scripts than ever before.

Thank you for taking the time to read through this article on effortlessly boosting your Python skills with simple multithreading parallel URL fetching tips. We hope that the information we have shared has been able to help you gain a better understanding of how to use Python more efficiently and make your programming tasks easier.

As we have discussed in the article, utilizing multithreading and parallel processing can make a significant difference in the speed and efficiency of your Python code. By using the simple tips provided, you can easily start implementing these techniques into your projects in no time.

Don’t be afraid to experiment and try out new things with your Python code. The more you practice and hone your skills, the easier it will become to incorporate advanced techniques like multithreading and parallel processing into your work. Thank you again for visiting our blog, and we hope to see you back soon!

People Also Ask about Effortlessly Boost Your Python Skills with Simple Multithreading Parallel Url Fetching Tip – No Queue Needed!

- What is multithreading in Python?

Multithreading in Python allows multiple threads to run concurrently within a single process. In standard CPython, only one thread executes Python bytecode at a time, so the improvement comes mainly from I/O-bound tasks, where threads spend most of their time waiting.

- Why is multithreading useful for URL fetching?

URL fetching involves making requests to multiple URLs, which can take a significant amount of time if done sequentially. By using multithreading, the requests can be made concurrently, reducing the overall time taken to fetch all the URLs.

- Do I need to use a queue for multithreading URL fetching?

No, the Simple Multithreading Parallel Url Fetching Tip described in this article does not require the use of a queue. Instead, it keeps the URLs in a list and starts one thread per URL. A thread pool executor is an optional refinement for limiting how many requests run at once.

- Is this tip suitable for beginners?

Yes, this tip is relatively simple to implement and can be useful for beginners who want to improve their Python skills. However, it may be helpful to have some basic understanding of multithreading concepts before attempting to use this tip.

- Are there any potential drawbacks to using multithreading for URL fetching?

One potential drawback is that it may increase the load on the server being queried, especially if a large number of requests are being made concurrently. Additionally, there may be issues with thread safety and race conditions if not implemented properly.