If you’re a Python programmer who’s been struggling with finding duplicate items in Python lists, then this guide is for you! In this article, we’ll take you through the process of detecting duplicates in Python lists effortlessly. With our complete guide, you’ll learn how to leverage Python’s built-in functions and modules to do the job quickly and efficiently.

Whether you’re working with small lists or large datasets, detecting duplicates can be a tedious task if you do it by hand. Fortunately, Python provides a rich set of tools for the job. From the built-in set() type and the Counter class in the standard-library collections module to third-party libraries like NumPy and Pandas, there are many different ways to detect duplicates in Python lists.

In this article, we’ll provide you with step-by-step instructions on how to use these methods, so you can choose the one that works best for your needs. From beginner-friendly approaches to more advanced techniques, we’ve got you covered. So if you’re ready to get started with detecting duplicates in Python lists, we invite you to read our complete guide to the end!

At the end of this guide, you’ll have all the knowledge and tools you need to confidently detect duplicates in Python lists. Whether you’re a seasoned Python developer or just starting out, mastering this skill will help you save time and avoid errors in future projects. So don’t hesitate – read on and discover how to effortlessly detect duplicates in Python lists!


Introduction: Duplicate Detection in Python Lists

Python is one of the most popular programming languages worldwide. It has a wealth of built-in functions and libraries that make it easy to perform various tasks, including finding duplicates in lists. Duplicate detection in Python lists can be done in many ways, and we will explore some of the most common methods below.

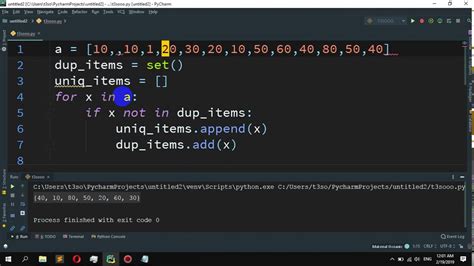

The Common Method: Using a Loop

The most straightforward way to detect duplicates in a Python list is a nested loop. The idea is simple: iterate through every element in the list and compare it with each element that comes after it to see whether the value is repeated.

Method Description and Sample Code:

Here is some sample code:

| Code | Description |
|---|---|
| `list1 = [1,2,3,4,5,6,7,8,9,10,10]` | A sample list containing duplicate elements. |
| `duplicate_elements = []` | A new list to store duplicates. |
| `for i in range(len(list1)):` | A loop to iterate through all the elements in the list. |
| `for j in range(i+1, len(list1)):` | Another nested loop to compare all other elements with the current element. |
| `if list1[i] == list1[j] and list1[i] not in duplicate_elements:` | A condition to check if elements are duplicated. |
| `duplicate_elements.append(list1[i])` | Appends the duplicate element to a new list. |
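
Because the table cannot show indentation, the same steps are assembled below into a runnable form. This is only a sketch: the wrapper function `find_duplicates_nested` is a name introduced here for illustration and is not part of the original listing.

```python
def find_duplicates_nested(items):
    """Return each repeated value once, in order of first appearance."""
    duplicate_elements = []
    for i in range(len(items)):
        # Compare the current element with every element after it.
        for j in range(i + 1, len(items)):
            if items[i] == items[j] and items[i] not in duplicate_elements:
                duplicate_elements.append(items[i])
    return duplicate_elements


list1 = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 10]
print(find_duplicates_nested(list1))  # [10]
```

This version only needs elements that support `==`, so it also works for unhashable items such as nested lists, at the cost of quadratic running time.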

Using the set() Function

Another method to detect duplicates is by using the set() function. The set() function creates a set of unique elements from the given list. If the length of the set is shorter than the original list, it means that there were duplicates in the original list.

Method Description and Sample Code:

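Here is some sample code:

| Code | Description |
|---|---|
| `list1 = [1,2,3,4,5,6,7,8,9,10,10]` | A sample list containing duplicate elements. |
| `set1 = set(list1)` | Calls set() to build a set of the unique elements. |
| `if len(list1) != len(set1):` | A condition that is true when the list contains duplicates, because the set is then shorter than the list. |
| `print("The list contains duplicates")` | Runs inside the `if` block and reports that a duplicate was found. |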
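
The length comparison only tells you whether duplicates exist, not which values are repeated. The sketch below shows both the check and one possible way to recover the repeated values with a second set. The function names `has_duplicates` and `find_duplicates_seen` are hypothetical, and the approach assumes every element is hashable.

```python
def has_duplicates(items):
    """True if at least one value occurs more than once."""
    return len(items) != len(set(items))


def find_duplicates_seen(items):
    """Return the set of values that occur more than once."""
    seen = set()
    duplicates = set()
    for item in items:
        if item in seen:
            duplicates.add(item)  # second or later occurrence
        else:
            seen.add(item)        # first occurrence
    return duplicates


list1 = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 10]
print(has_duplicates(list1))        # True
print(find_duplicates_seen(list1))  # {10}
```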

The Counter() Function

Counter is a class in the collections module of the Python standard library that provides a simple syntax for counting how often each element occurs in a list. It returns a dictionary-like object (a dict subclass) with the elements as keys and their frequency counts as values.

Method Description and Sample Code:

Here is some sample code:

| Code | Description |
|---|---|
| `from collections import Counter` | Imports the Counter class from the standard-library collections module. |
| `list1 = [1,2,3,4,5,6,7,8,9,10,10]` | A sample list containing duplicate elements. |
| `frequency_count = dict(Counter(list1))` | Counts the frequency of each element in the list. The `dict()` call is optional, because a Counter already behaves like a dictionary. |
| `duplicates = [k for k, v in frequency_count.items() if v>1]` | Creates a new list of duplicates by checking their frequency count. |
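
Put together, the Counter approach might look like the following minimal sketch. The function name `find_duplicates_counter` is introduced here for illustration, and the elements are assumed to be hashable.

```python
from collections import Counter


def find_duplicates_counter(items):
    """Return values that occur more than once, in order of first appearance."""
    counts = Counter(items)  # maps each value to its number of occurrences
    return [value for value, count in counts.items() if count > 1]


list1 = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 10]
print(find_duplicates_counter(list1))  # [10]
print(Counter(list1)[10])              # 2
```

A side benefit of this approach is that the counts stay available, so you can report how many times each duplicate occurs.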

Using numpy.unique()

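NumPy is not part of the standard library, so it must be installed separately (for example with pip). The numpy.unique() function returns the sorted unique elements of an array; when it is called with return_counts=True it also returns how often each element occurs, which is what makes it usable for detecting duplicates.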

Method Description and Sample Code:

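Here is some sample code:

| Code | Description |
|---|---|
| `import numpy as np` | Imports the NumPy library. |
| `list1 = [1,2,3,4,5,6,7,8,9,10,10]` | A sample list containing duplicate elements. |
| `values, counts = np.unique(list1, return_counts=True)` | Returns the sorted unique elements together with the number of times each one occurs. On its own, `np.unique(list1)` returns only the unique elements, not the duplicates. |
| `duplicates = values[counts > 1]` | Keeps only the elements that occur more than once. |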
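
As a runnable sketch of this approach, assuming NumPy is installed, the counts returned by `np.unique()` can be used as a boolean mask. The function name `find_duplicates_numpy` is hypothetical.

```python
import numpy as np


def find_duplicates_numpy(items):
    """Return a sorted array of the values that occur more than once."""
    values, counts = np.unique(np.asarray(items), return_counts=True)
    return values[counts > 1]  # boolean mask selects the repeated values


list1 = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 10]
print(find_duplicates_numpy(list1))  # [10]
```

Note that the result is a NumPy array in sorted order, not in order of first appearance; call `.tolist()` if a plain Python list is needed.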

Comparison Table

| Method | Code simplicity | Time Complexity | Space Complexity |
|---|---|---|---|
| Using a loop | Simple | O(n^2) | O(n) |
| Set Function | Simple | O(n) | O(n) |
| Counter Function | Complex | O(n) | O(n) |
| NumPy unique() Function | Simple | O(n log n) | O(n) |

Opinion:

The choice of method largely depends on the programmer’s preference and the context of the task. In terms of code simplicity, the set() function and numpy.unique() are the easiest to use. However, comparing lengths with set() only tells you whether duplicates exist, so it is limiting when you need to find the duplicated elements themselves. The numpy.unique() function has a higher time complexity, O(n log n), than set() or Counter(), although it still scales better than the nested loop’s O(n^2). The Counter() approach runs in O(n) and returns both the frequency counts and the duplicated elements, so it can be recommended for large data sets.

Thank you for visiting our blog and taking the time to read our comprehensive guide on effortless duplicate detection in Python lists. We hope that you found the information that we provided to be helpful and informative.

As we have discussed in this article, detecting duplicates in a list can be a tedious task. However, with the right methods and tools, it can be done quickly. We have explored various approaches to detecting duplicates, including a nested loop, set(), Counter, and numpy.unique(), each with its own advantages and limitations.

Whether you are a beginner or an experienced Python programmer, it is important to have a solid understanding of the methods available for detecting duplicates in lists. By using the techniques we have presented, you can easily filter out unwanted duplicates and improve the efficiency and accuracy of your Python programs.


As Python is a popular programming language, it is common to encounter situations where we need to deal with lists that may contain duplicate values. To help you effortlessly detect duplicates in Python lists, we have created a complete guide that includes answers to some of the most frequently asked questions.

Here are some of the questions people also ask about detecting duplicates in Python lists:

- What is a duplicate in a Python list?

  A duplicate in a Python list refers to an occurrence of an element more than once within the same list.

- Why is it important to detect duplicates in Python lists?

  Detecting duplicates in Python lists is important because they can lead to errors in your code or produce inaccurate results when working with data.

- How do I detect duplicates in a Python list?

  You can detect duplicates in a Python list using various techniques such as using sets, list comprehensions, or the Counter class from the collections module.

- What are the benefits of using sets to detect duplicates in Python lists?

  Using sets to detect duplicates in Python lists is beneficial because sets are unordered collections that only contain unique elements. Therefore, converting a list to a set will automatically remove all duplicates.

- Can I preserve the order of elements while removing duplicates in a Python list?

  Yes, you can preserve the order of elements while removing duplicates in a Python list by using a list comprehension or the OrderedDict class from the collections module.

- Are there any libraries available for detecting duplicates in Python lists?

  Yes, the collections module in Python includes the Counter class, which can be used to efficiently count occurrences of elements in a list and identify duplicates.
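
The order-preserving removal mentioned in the answers above can be sketched in two ways: with `OrderedDict.fromkeys()` and with a list comprehension that tracks the values already seen. Both function names are hypothetical, and both versions assume hashable elements.

```python
from collections import OrderedDict


def dedupe_ordered(items):
    """Remove duplicates, keeping the first occurrence of each value."""
    return list(OrderedDict.fromkeys(items))


def dedupe_seen(items):
    """Same result, using a list comprehension and a helper set."""
    seen = set()
    # seen.add(x) returns None, so it only runs (and records x)
    # when x has not been seen before.
    return [x for x in items if not (x in seen or seen.add(x))]


data = [3, 1, 3, 2, 1]
print(dedupe_ordered(data))  # [3, 1, 2]
print(dedupe_seen(data))     # [3, 1, 2]
```

On Python 3.7 and later, a plain `dict.fromkeys(items)` keeps insertion order as well, so `OrderedDict` is mainly useful when older interpreters must be supported.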