As a data analyst, you may find yourself dealing with large datasets stored in Amazon S3. Retrieving and reading these files can be quite tedious, especially if you have to read them line by line. But what if I told you that there’s a way to do this effortlessly with Boto?

Boto is a popular Python library for accessing AWS services. It provides a simple interface for working with S3 objects, including the ability to read files line by line without the need for complicated code. This makes it ideal for data analysts who want to focus on analyzing data instead of spending precious time on file I/O operations.

In this article, we’ll show you how to use Boto to read S3 files line by line in just a few easy steps. Whether you’re a seasoned Python developer or just getting started with AWS, our simple guide will help you streamline your data analysis workflows.

So, if you’re tired of spending countless hours sifting through large datasets in S3, then it’s time to discover the power of Boto. Follow our step-by-step guide and start effortlessly reading S3 files line by line today!

“Read A File Line By Line From S3 Using Boto?” ~ bbaz

Introduction

Working with big data means reading and processing large amounts of information, which can be a challenge when the files are stored in the cloud. Boto, a popular Python library for AWS, helps with this. In this blog post, we compare the traditional approach to reading S3 files line by line (download the file first, then read it) with streaming the file directly from S3 using Boto.

The Traditional Approach

The traditional approach to reading an S3 file line by line takes several steps: establishing a connection to S3, creating a Bucket object, and downloading the file locally. Once the file is downloaded, it can be read line by line and processed.

Establishing a Connection

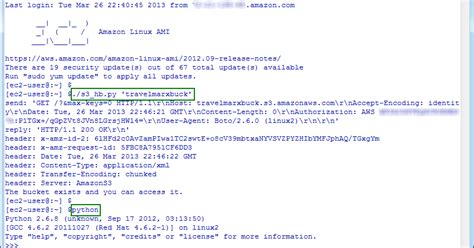

The first step in the traditional approach is establishing a connection to S3. This requires valid AWS credentials, which Boto can pick up from environment variables, the shared `~/.aws/credentials` file, or an attached IAM role. It may also require a region or a custom endpoint. Once connected, you can list buckets, upload and download files, and perform other operations.

Creating a Bucket Object

Once a connection is established, you create a Bucket object, identified by the bucket's globally unique name in S3. The Bucket object gives you access to the objects stored in it, for example to download a file before reading it line by line.

Downloading the File

After creating the Bucket object, you need to download the file locally before processing its content line by line. For large files, this is time-consuming and needs local disk space at least as large as the file. Processing also cannot start until the download has finished.
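Here is a minimal sketch of the download step. It assumes an S3 client such as the one returned by `boto3.client('s3')`. The helper `download_to_temp` is hypothetical. It calls the client's `download_file(Bucket, Key, Filename)` method to write the object to a temporary file, which the caller must delete afterwards.

```python
import os
import tempfile


def download_to_temp(s3_client, bucket: str, key: str) -> str:
    """Download s3://bucket/key to a temporary local file and return its path.

    s3_client is assumed to be a boto3 S3 client (or anything with the same
    download_file(Bucket, Key, Filename) method). The caller deletes the file.
    """
    # Keep the key's extension (e.g. ".csv") so other tools recognise the format.
    suffix = os.path.splitext(key)[1]
    fd, path = tempfile.mkstemp(suffix=suffix)
    os.close(fd)  # download_file opens and writes the path itself
    try:
        # Blocks until the whole object has been written to local disk.
        s3_client.download_file(bucket, key, path)
    except Exception:
        os.remove(path)  # don't leave an empty temp file behind on failure
        raise
    return path
```

The call blocks for the full transfer, and the caller must remember to delete the temporary file. Both are part of the cost of the download-first approach.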

Processing the File Line by Line

Once the file is downloaded, it can be read line by line and processed. Iterating over a local file in Python reads it incrementally, so this step itself does not need much memory. The real cost of the traditional approach lies in the full download and the local copy that has to be stored and cleaned up afterwards.
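Once the file is on disk, plain Python file iteration is enough to process it. The sketch below counts the lines that contain a search string. The function name `count_matching_lines` and its `needle` parameter are hypothetical stand-ins for whatever processing you need. A text-mode file object yields one line at a time from a buffered reader, so memory use does not grow with the file size.

```python
def count_matching_lines(path: str, needle: str, encoding: str = "utf-8") -> int:
    """Count the lines of a local text file that contain `needle`."""
    count = 0
    with open(path, "r", encoding=encoding) as f:
        # The file object yields one line at a time (including its trailing "\n").
        for line in f:
            if needle in line:
                count += 1
    return count
```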

The Boto Approach

Boto offers a more convenient and time-efficient way to read files stored in S3 line by line: instead of downloading a file, you stream it. When you request an object, Boto returns its contents as a streaming body (botocore's `StreamingBody`). This body reads the data over the network in chunks as you consume it, so large files can be processed without saving them locally.
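In boto3, the stream is the `Body` of a `get_object()` response. Here is a minimal sketch of chunked reading, assuming an S3 client from `boto3.client('s3')`. The hypothetical helper `count_lines_streamed` pulls the object through the body's `iter_chunks()` method and counts newline bytes. At most one chunk is held in memory at a time (1 MiB here, an arbitrary choice), and nothing is written to disk.

```python
def count_lines_streamed(s3_client, bucket: str, key: str, chunk_size: int = 1024 * 1024) -> int:
    """Count the lines in s3://bucket/key without saving the object locally.

    Lines are counted by their "\n" bytes; a final line with no trailing
    newline is counted too.
    """
    body = s3_client.get_object(Bucket=bucket, Key=key)["Body"]
    count = 0
    last_byte = b""
    try:
        for chunk in body.iter_chunks(chunk_size=chunk_size):
            if chunk:
                count += chunk.count(b"\n")
                last_byte = chunk[-1:]
    finally:
        body.close()  # release the HTTP connection even if an error occurs
    # A non-empty object that doesn't end in "\n" has one more, unterminated line.
    if last_byte and last_byte != b"\n":
        count += 1
    return count
```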

Requesting the Object's Streaming Body

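The first step of the Boto approach is to request the object, either with the client's `get_object()` method or with `Object.get()` in the resource interface. Both return a response dictionary whose `Body` entry is a stream, so you can iterate over the file's contents without explicitly downloading it.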
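Here is a minimal sketch of this first step with the client interface, assuming a boto3 S3 client. `open_s3_stream` is a hypothetical helper name. `get_object()` returns once the response headers have arrived, so metadata such as `ContentLength` is available immediately. The object's bytes are transferred only as the returned `Body` is read.

```python
def open_s3_stream(s3_client, bucket: str, key: str):
    """Start reading s3://bucket/key and return (size_in_bytes, body).

    s3_client is assumed to be a boto3 S3 client. The body is a botocore
    StreamingBody; close it if you stop reading before the end.
    """
    response = s3_client.get_object(Bucket=bucket, Key=key)
    # The headers are already parsed; the payload itself has not been read yet.
    return response["ContentLength"], response["Body"]
```

With the resource interface, `boto3.resource('s3').Object('my-bucket', key).get()` returns the same kind of response dictionary.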

Reading the File Line by Line

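The body's `iter_lines()` method yields the file one line at a time. Each line is a `bytes` object with its line ending removed, so decode it (for example with `.decode('utf-8')`) to get a string. Only a small chunk of the file is buffered at any moment, so memory use stays low even for very large files.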
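Here is a sketch of a line generator built on `iter_lines()`. It assumes a boto3 S3 client and UTF-8 text; the `encoding` parameter is an assumption, so pass whatever the file actually uses. The helper `iter_s3_lines` (a hypothetical name) decodes each line to `str`. Its `finally` clause closes the stream on normal exit, on errors, and when the generator is closed early.

```python
from typing import Iterator


def iter_s3_lines(s3_client, bucket: str, key: str, encoding: str = "utf-8") -> Iterator[str]:
    """Yield the lines of s3://bucket/key as str, streaming from S3.

    Lines are split on bytes before decoding, which is safe for UTF-8:
    a newline byte never occurs inside a multi-byte UTF-8 character.
    """
    body = s3_client.get_object(Bucket=bucket, Key=key)["Body"]
    try:
        for raw_line in body.iter_lines():  # bytes, line ending already removed
            yield raw_line.decode(encoding)
    finally:
        body.close()  # also runs when the caller closes the generator early
```

A typical call is `for line in iter_s3_lines(boto3.client('s3'), 'my-bucket', 'path/to/my/file.txt'): print(line)`.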

Comparison Table

Let’s compare these two approaches side by side to evaluate their differences.

| Aspect | Traditional approach | Boto streaming approach |
|---|---|---|
| Connection | Create an S3 client or resource | Same: create an S3 client or resource |
| Object access | Create a Bucket object and download the file | Call `get_object()` or `Object.get()` and use the response `Body` |
| Downloading | File must be downloaded to local disk first | No local copy; data is streamed over the network |
| Processing | Lines are read from the local file after the download finishes | Lines are read directly from the S3 stream as data arrives |
| Local storage | Needs disk space for the whole file, plus cleanup afterwards | No local disk space needed |

Conclusion

Streaming with Boto is a simpler and often faster way to read S3 files line by line than the download-first method. Processing starts as soon as the first bytes arrive, no local disk space is needed, and there is no temporary file to clean up. When working with large data files, ETL jobs, or other big data processing, iterating over the object's `Body` with `iter_lines()` lets you read an S3 file line by line without downloading it first. As organizations look for more efficient ways to process data, libraries like Boto become a valuable asset.

Thank you for reading “Effortlessly Read S3 Files Line by Line with Boto.” We hope you found this article helpful. You should now be able to read and process large S3 files with Boto using the techniques discussed in the article.


Reading S3 files line by line with Boto is a common task for many developers. Here are some frequently asked questions about it:


What is Boto?

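Boto is a Python package that provides interfaces to Amazon Web Services (AWS) services, including Simple Storage Service (S3). Its current version, boto3, is the official AWS SDK for Python; the original `boto` package is no longer maintained.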


How do I install Boto?

You can install Boto using pip, the Python package manager. The examples in this article use boto3, so type `pip install boto3` into your terminal or command prompt.

How do I read an S3 file line by line with Boto?

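You can use Boto’s S3 resource and Bucket objects to access your S3 files. Calling `get()` on an object returns a response dictionary whose `Body` is a stream. You can iterate over it with `iter_lines()`, which yields each line as `bytes`. Here’s an example:

```python
import boto3

s3 = boto3.resource('s3')
bucket = s3.Bucket('my-bucket')
body = bucket.Object('path/to/my/file.txt').get()['Body']
for line in body.iter_lines():
    print(line.decode('utf-8'))  # lines arrive as bytes
```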

Can I read a specific range of lines from an S3 file?

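Yes, you can use the `islice()` function from Python’s `itertools` module to read a specific range of lines from your S3 file. The lines before `start_line` are still streamed and skipped, but reading stops once `end_line` is reached. In this example, both values are zero-based and `end_line` itself is excluded:

```python
import itertools

import boto3

start_line, end_line = 100, 200  # example range: lines 100..199 (zero-based)

s3 = boto3.resource('s3')
bucket = s3.Bucket('my-bucket')
body = bucket.Object('path/to/my/file.txt').get()['Body']
for line in itertools.islice(body.iter_lines(), start_line, end_line):
    print(line.decode('utf-8'))
body.close()  # close the stream; the rest of the file is not read
```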

What if I want to read a large S3 file without loading it all into memory?

Use the `iter_lines()` method of the object's `Body` stream. It reads the file in small chunks and yields one line at a time, so the whole file is never held in memory. Here’s an example:

```python
import boto3

s3 = boto3.resource('s3')
bucket = s3.Bucket('my-bucket')
file_iterator = bucket.Object('path/to/my/large/file.txt').get()['Body'].iter_lines()
for line in file_iterator:
    print(line.decode('utf-8'))  # each line is bytes without its line ending
```