Are you wondering how much memory your pandas DataFrame uses? Answering that can be tricky, especially with large datasets. However, knowing your DataFrame's memory footprint is crucial for optimizing your code's performance and avoiding running out of RAM.

This article is a simple guide to estimating the memory usage of a pandas DataFrame. You will learn how columns of different data types (integers, floats, and objects) consume memory, and how to find the total memory usage of a DataFrame, including its index.

By the end of this guide, you will be equipped with the necessary knowledge to estimate your dataframe’s memory usage accurately. This will help you optimize your memory usage and improve your code’s performance, making your data analysis tasks more efficient and effective.

So, whether you are a beginner or an experienced data analyst, this guide is for you. Follow the steps provided in this article, and you will be on your way to estimating your dataframe’s memory usage like a pro.


Introduction

Pandas is a popular data manipulation library in Python. It is widely used by data analysts and data scientists to explore, clean, and transform data. However, working with large datasets can be challenging, especially when it comes to memory usage. In this article, we provide a simple guide on how to estimate the memory usage of a pandas DataFrame.

Checking the Memory Usage of a Pandas DataFrame

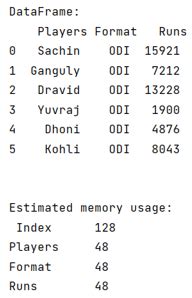

Before we start estimating the memory usage of a DataFrame, let's first check the current memory usage of one. Pandas has a built-in method, `.memory_usage()`, that returns the memory used by each column (and, by default, the index) in bytes.

Example:

Let’s create a simple DataFrame with three columns: ID, Name, and Score. The ID and Name columns are object types, and the Score column is an integer type.

```python
import pandas as pd

df = pd.DataFrame({
    "ID": ["001", "002", "003", "004", "005"],  # strings keep the leading zeros
    "Name": ["John", "Jane", "Bob", "Alice", "Mike"],
    "Score": [85, 92, 78, 90, 88],
})
```

We can now use the `.memory_usage()` method to check the memory usage of this DataFrame.

```python
print(df.memory_usage(deep=True))
```

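The output is a Series listing the bytes used by the index and by each column. Score holds five int64 values, so it reports 40 bytes (5 × 8). The exact figures for the index and for the ID and Name columns depend on your pandas and Python versions: with `deep=True`, an object column is charged for its 8-byte pointers plus the size of every Python string they reference, which is why those columns report far more than 8 bytes per value.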
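To see what `deep=True` adds, you can compare it with the default shallow count. The sketch below rebuilds the same DataFrame and pins the text columns to `object` dtype; that pinning is our assumption, added because some newer pandas versions may store strings in a dedicated string dtype whose memory is reported differently. `df.info(memory_usage="deep")` prints the same deep total in a human-readable form.

```python
import pandas as pd

df = pd.DataFrame({
    "ID": ["001", "002", "003", "004", "005"],
    "Name": ["John", "Jane", "Bob", "Alice", "Mike"],
    "Score": [85, 92, 78, 90, 88],
})
# Assumption: force object dtype so the comparison behaves the same across
# pandas versions that may otherwise use a dedicated string dtype
df = df.astype({"ID": object, "Name": object})

# Shallow count: object columns are charged only for their 8-byte pointers
shallow = df.memory_usage(deep=False)
# Deep count: also adds the size of every Python string those pointers reference
deep = df.memory_usage(deep=True)

print(pd.DataFrame({"shallow (bytes)": shallow, "deep (bytes)": deep}))

# Human-readable summary that includes the deep total
df.info(memory_usage="deep")
```

For the `object` columns, the shallow figure is only the pointers (8 bytes per row), while the deep figure also includes the strings themselves; for the int64 Score column both figures are identical.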

Estimating the Memory Usage of a Pandas DataFrame

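To estimate the memory usage of a DataFrame, add up the memory used by each column, plus the index. For a numeric column, that is simply the number of rows multiplied by the size of one value of its data type.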

Example:

The following code creates a DataFrame with 1 million rows and 10 columns:

```python
import numpy as np
import pandas as pd

# Create a DataFrame with 1 million rows and 10 float64 columns
df = pd.DataFrame(np.random.randn(1000000, 10), columns=["A", "B", "C", "D", "E", "F", "G", "H", "I", "J"])
```

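We can estimate the memory usage of each column by multiplying the number of rows by the size of its data type. Missing values do not reduce this figure, because a NaN in a float64 column still occupies 8 bytes. All columns here are float64, so each should use 1,000,000 × 8 = 8,000,000 bytes, or about 7.63 MB. We can confirm this with `.memory_usage()`: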

```python
# Calculate the memory usage of each column, excluding the index
mem_usage = df.memory_usage(index=False, deep=True)
mem_usage = mem_usage / 1024 ** 2  # convert bytes to megabytes

for col, mb in mem_usage.items():
    print("Column {}: {:.2f} MB".format(col, mb))
```

The output will be:

```
Column A: 7.63 MB
Column B: 7.63 MB
Column C: 7.63 MB
Column D: 7.63 MB
Column E: 7.63 MB
Column F: 7.63 MB
Column G: 7.63 MB
Column H: 7.63 MB
Column I: 7.63 MB
Column J: 7.63 MB
```
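The rows-times-bytes estimate can also be computed directly from the dtypes and checked against what pandas reports. This is a sketch for NumPy-backed numeric columns only; it does not cover `object` columns, whose per-value Python objects are counted only with `deep=True`. The last step blanks out half of column A to show that NaN slots still take up space.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(np.random.randn(1_000_000, 10), columns=list("ABCDEFGHIJ"))

# Estimate: number of rows x bytes per value, derived from the dtype alone
estimate = {col: len(df) * df[col].dtype.itemsize for col in df.columns}

# Measured: what pandas reports for each column (index excluded)
measured = df.memory_usage(index=False, deep=True)

for col in df.columns:
    print(f"{col}: estimated {estimate[col] / 1024**2:.2f} MB, "
          f"measured {measured[col] / 1024**2:.2f} MB")

# Replace every other value in column A with NaN: the column does not shrink,
# because each NaN is still stored as an 8-byte float64
df.loc[::2, "A"] = np.nan
print(df["A"].memory_usage(index=False, deep=True))
```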

Comparing Memory Usage of Different Data Types

Different data types have different memory requirements. Choosing the right data type can help reduce the overall memory usage of a DataFrame.

Example:

We can compare the memory usage of different data types using the following code:

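```python
import numpy as np
import pandas as pd

dtypes = ["int64", "float64", "object"]
for dtype in dtypes:
    print("Data type:", dtype)
    # The same 5x5 random values, cast to each data type in turn
    df = pd.DataFrame(np.random.randn(5, 5).astype(dtype), columns=["A", "B", "C", "D", "E"])
    print(df.memory_usage(index=False, deep=True), "\n")
```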

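The `int64` and `float64` frames report 40 bytes for each column (5 values × 8 bytes). The `object` frame reports considerably more per column: each entry is an 8-byte pointer to a separate Python float object, and `deep=True` counts those objects too. The exact figure depends on your Python build.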

As the example shows, `int64` and `float64` have the same memory usage because both store 8 bytes per value, while the `object` data type requires more memory.
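When picking a smaller type, it helps to know how many bytes each NumPy dtype uses per value and, for integers, which range it can hold. The short sketch below prints that for a few common dtypes; the list of dtypes is our own selection.

```python
import numpy as np

# Bytes per value for some common NumPy dtypes used in pandas columns
sizes = {name: np.dtype(name).itemsize
         for name in ["int8", "int16", "int32", "int64", "float32", "float64", "bool"]}

for name, nbytes in sizes.items():
    per_million_mb = nbytes * 1_000_000 / 1024**2
    line = f"{name:>8}: {nbytes} byte(s)/value, {per_million_mb:.2f} MB per million rows"
    # Integer types also have a fixed range; values outside it do not fit
    if np.issubdtype(np.dtype(name), np.integer):
        info = np.iinfo(np.dtype(name))
        line += f", range {info.min} to {info.max}"
    print(line)
```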

Optimizing Memory Usage of a Pandas DataFrame

We can optimize the memory usage of a DataFrame by changing the data type of each column to the smallest possible data type that can hold the data without loss of information.
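For integer columns, pandas can choose that smallest type for you: `pd.to_numeric(..., downcast="integer")` inspects the values and returns the smallest signed integer dtype that holds all of them. A minimal sketch on made-up data (the column names are illustrative only):

```python
import numpy as np
import pandas as pd

# Hypothetical example data: two int64 columns with different value ranges
df = pd.DataFrame({
    "small_ints": np.arange(100, dtype="int64"),          # 0..99 fits in int8
    "large_ints": np.arange(100, dtype="int64") * 1_000,  # up to 99,000 needs int32
})
before = df.memory_usage(index=False, deep=True)

for col in df.columns:
    # Picks the smallest signed integer dtype that holds every value in the column
    df[col] = pd.to_numeric(df[col], downcast="integer")

after = df.memory_usage(index=False, deep=True)
print(pd.DataFrame({"before": before, "after": after, "dtype": df.dtypes.astype(str)}))
```

Because the function checks the actual values, it will not pick a type that is too small for them, unlike a fixed cast such as `astype(np.int16)`, which silently wraps values outside the int16 range.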

Example:

The following code reads a CSV file with 1 million rows and 9 columns:

```python
import pandas as pd

df = pd.read_csv("data.csv")
```

We can check the memory usage of this DataFrame:

```python
original_mem_usage = df.memory_usage(deep=True).sum() / 1024 ** 2
print("Original memory usage: {:.2f} MB".format(original_mem_usage))
```

The output will be:

```
Original memory usage: 413.66 MB
```

We can optimize the memory usage of this DataFrame by changing the data type of each column. We can use the `.astype()` method to change the data type of a column.

First, we create a dictionary that maps each column to a smaller, more memory-efficient data type:

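```python
import numpy as np

# Create a dictionary that maps each column to a smaller data type
dtypes = {}
for col in df.columns:
    if df[col].dtype == "object":
        # category saves the most when a column has few distinct values
        dtypes[col] = "category"
    elif df[col].dtype == "int64":
        # Use the smallest integer type whose range covers the column's values
        col_min, col_max = df[col].min(), df[col].max()
        for candidate in (np.int8, np.int16, np.int32):
            if np.iinfo(candidate).min <= col_min and col_max <= np.iinfo(candidate).max:
                dtypes[col] = candidate
                break
    elif df[col].dtype == "float64":
        # float32 keeps about 7 significant digits; skip if you need more precision
        dtypes[col] = np.float32
```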

Then, we use the `.astype()` method to change the data type of each column:

```python
# Change the data type of each column to its optimal data type
df = df.astype(dtypes)
```

We can check the memory usage of the optimized DataFrame:

```python
optimized_mem_usage = df.memory_usage(deep=True).sum() / 1024 ** 2
print("Optimized memory usage: {:.2f} MB".format(optimized_mem_usage))
```

The output will be:

```
Optimized memory usage: 89.10 MB
```
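Converting `object` columns to `category` pays off mainly when a column has few distinct values, because each row then stores a small integer code instead of a pointer to its own string. A quick way to check is to compare both representations; the sketch below uses made-up data (a 3-value color column and an all-unique ID column) and is not taken from the CSV above.

```python
import numpy as np
import pandas as pd

n = 100_000
rng = np.random.default_rng(0)

def size_mb(series):
    """Deep memory usage of a Series in MB, index excluded."""
    return series.memory_usage(index=False, deep=True) / 1024**2

# Low cardinality: 3 distinct strings repeated across 100,000 rows
colors = pd.Series(rng.choice(["red", "green", "blue"], size=n)).astype(object)
# High cardinality: every value is different
ids = pd.Series([f"id_{i}" for i in range(n)], dtype=object)

for name, s in [("colors", colors), ("ids", ids)]:
    print(f"{name}: object {size_mb(s):.2f} MB, "
          f"category {size_mb(s.astype('category')):.2f} MB")
```

For the color column, `category` is far smaller. For the all-unique ID column, it saves little or nothing and may even cost more, since every string is still stored once as a category alongside the per-row codes.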

Conclusion

Estimating the memory usage of a pandas DataFrame can help us optimize the memory usage of our code and prevent memory errors. In this article, we have provided a simple guide on how to estimate the memory usage of a DataFrame and how to optimize the memory usage of a DataFrame by changing the data type of each column. By following these guidelines, we can reduce the overall memory usage of our code and improve its performance.

Table Comparison

| Method | Pros | Cons |
|---|---|---|
| `.memory_usage()` | Accurate (use `deep=True` for `object` columns); easy to use | Returns per-column figures, so an extra step such as `.sum()` is needed to get the DataFrame's total |
| Estimating memory usage by column | Helps you optimize memory usage by changing data types | Can underestimate usage if you count only non-null values (nulls still occupy space) or ignore the Python objects behind `object` columns |

Opinion

Estimating the memory usage of a pandas DataFrame is an important step in data analysis and data science. By understanding how much memory is required to store a DataFrame, we can optimize the memory usage of our code and prevent memory errors. The methods we have discussed in this article provide a simple and effective way to estimate the memory usage of a pandas DataFrame.


People also ask about estimating pandas dataframe memory usage:

How do I estimate the memory usage of a Pandas dataframe?

Use the `memory_usage()` method. It returns the memory usage of each column (and the index) in bytes; pass `deep=True` to include the Python objects held by `object` columns, and sum the values to get the total.

What factors affect the memory usage of a Pandas dataframe?

Mainly the number of rows and the data type of each column. Numeric columns use a fixed number of bytes per value (8 for `int64` and `float64`), while `object` columns also store a separate Python object for every value. Missing values do not save space and can force an integer column into a wider type such as `float64`, and columns with many repeated strings use far more memory as `object` than as `category`.

How can I reduce the memory usage of a Pandas dataframe?

Convert columns to more memory-efficient data types (e.g. `int8` instead of `int64` when the values fit, or `category` for strings with few distinct values), and drop any columns or rows that are not needed for your analysis.

Is there a way to estimate the memory usage of a Pandas dataframe before reading it into memory?

Approximately. Read a sample of the file with the `nrows` parameter of `read_csv()`, measure it with `memory_usage(deep=True)`, and scale the result by the total number of rows. The `dtype` and `usecols` parameters let you choose the data types and columns to load, so both the sample and the full read reflect what you will actually keep in memory.
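One way to get such an estimate before a full load is to measure a sample and scale it up. A minimal sketch, assuming the rows are reasonably uniform; the in-memory CSV and its columns are made up so the example runs on its own. For a real file you would pass its path instead and count its rows separately, for example with `sum(1 for _ in open(path)) - 1` for a file with one header line and no embedded newlines.

```python
import io

import pandas as pd

# Hypothetical CSV built in memory; in practice this would be a file path
rows = 100_000
csv_text = "id,score,city\n" + "".join(
    f"{i},{i % 100},{['Oslo', 'Lima', 'Pune'][i % 3]}\n" for i in range(rows)
)

# 1. Read only the first few thousand rows
sample = pd.read_csv(io.StringIO(csv_text), nrows=5_000)

# 2. Measure the sample and scale by the total row count
bytes_per_row = sample.memory_usage(index=False, deep=True).sum() / len(sample)
estimate_mb = bytes_per_row * rows / 1024**2

# 3. Compare with the real footprint (feasible here only because the data is small)
full = pd.read_csv(io.StringIO(csv_text))
actual_mb = full.memory_usage(index=False, deep=True).sum() / 1024**2
print(f"estimated {estimate_mb:.2f} MB, actual {actual_mb:.2f} MB")
```

The estimate is only as good as the sample: if later rows contain longer strings or values that change a column's inferred dtype, the full DataFrame can differ from the scaled figure.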