Exploring the limits of floating-point precision in computing is a good way to find out how close a computer can come to representing real numbers exactly. The subject is fascinating because it involves a delicate balance between accuracy and performance. Floating-point numbers are the standard way computers approximate real numbers, and they play a vital role in scientific research and engineering calculations.

As you dive deeper into floating-point precision, you will soon realize that seemingly insignificant differences can have a significant impact on the final result. Many developers grapple with balancing precision against performance. In this article, we’ll explore the limitations of floating-point numbers, examine the factors that affect their precision, and explain why they behave the way they do.

Understanding the nuances of floating-point computation is rewarding for anyone who enjoys a challenge, and it has practical applications, too. Advances in scientific research and engineering often depend on large numerical computations that build on previous work, and researchers who use these numbers well can push those advances further. So, let’s begin exploring the boundaries of what can be achieved with floating-point precision in computing!


Introduction

Floating-point precision in computing is a critical aspect that determines the accuracy of numerical calculations. To explore its limits, this blog article will provide an overview of what floating-point precision is, how it works, and what factors influence it. In addition, we will compare how different computing systems and programming languages handle floating-point precision.

What is Floating-Point Precision?

Floating-point precision refers to the number of significant digits that a computer can accurately represent in a floating-point number. A floating-point number is stored in a form of scientific notation, which lets a fixed number of bits cover both very large and very small values. It consists of three parts: sign, exponent, and mantissa (also called the significand). The sign indicates whether the number is positive or negative; the exponent gives the power of the base, which in the common IEEE 754 binary formats is two rather than ten; and the mantissa stores the significant bits of the number.
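To make the three fields concrete, here is a minimal sketch that pulls them out of a Python `float`, assuming CPython on a platform where `float` is an IEEE 754 binary64 value (true on all mainstream platforms). The helper names `decompose` and `reconstruct` are hypothetical, and `reconstruct` only handles normal (non-zero, finite, non-subnormal) numbers.

```python
import struct

def decompose(x: float) -> tuple[int, int, int]:
    """Split a binary64 float into its raw sign, biased exponent, and fraction fields."""
    # Reinterpret the 8 bytes of the double as an unsigned 64-bit integer.
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63                    # 1 bit
    exponent = (bits >> 52) & 0x7FF      # 11 bits, stored with a bias of 1023
    fraction = bits & ((1 << 52) - 1)    # 52 explicitly stored significand bits
    return sign, exponent, fraction

def reconstruct(sign: int, exponent: int, fraction: int) -> float:
    """Rebuild a normal double from its fields (hypothetical helper, normals only)."""
    # Normal numbers carry an implicit leading 1 bit, giving 53 bits of precision.
    significand = 1 + fraction / 2**52
    # The exponent is a power of two, with the 1023 bias removed.
    return (-1) ** sign * significand * 2.0 ** (exponent - 1023)

s, e, f = decompose(-6.25)   # -6.25 = -1.5625 * 2**2
print(s, e - 1023, f)        # → 1 2 2533274790395904
```

Running `reconstruct(*decompose(x))` on any normal double returns `x` unchanged, because each step (fraction scaling, adding the implicit 1, multiplying by a power of two) is exact in binary.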

How Does Floating-Point Arithmetic Work?

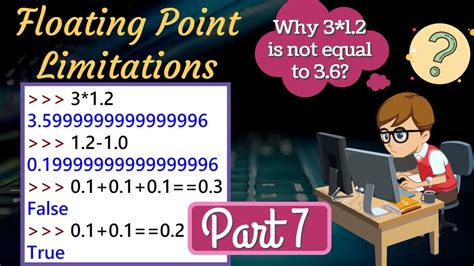

Floating-point arithmetic works on binary values, so decimal inputs are first converted to binary. Many decimal fractions, such as 0.1, have no finite binary representation and must be rounded to the nearest representable value during this conversion. Basic operations such as addition, subtraction, multiplication, and division are then performed, and under the IEEE 754 standard each result is rounded to the nearest representable number. Because only a limited number of bits is available, floating-point results are often approximations, and these small rounding errors can accumulate over a computation.
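The classic illustration of conversion rounding is `0.1 + 0.2`. This short Python sketch shows the effect and uses the standard `decimal` module to print the exact binary value that a literal such as `0.1` actually stores.

```python
from decimal import Decimal

# 0.1, 0.2 and 0.3 have no finite binary expansion, so each literal is rounded
# to the nearest double before any arithmetic happens.
print(0.1 + 0.2)           # 0.30000000000000004
print(0.1 + 0.2 == 0.3)    # False

# Decimal(float) converts the stored double exactly, revealing the rounding.
print(Decimal(0.1))
# 0.1000000000000000055511151231257827021181583404541015625

# Sums of powers of two, such as 0.5 and 0.25, are stored and added exactly.
print(0.5 + 0.25 == 0.75)  # True
```

The lesson is that the error often enters before any arithmetic happens, at the moment a decimal literal is converted to binary.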

What Factors Affect Floating-Point Precision?

Several factors influence floating-point precision in computing. The most important is the number of bits in the significand (mantissa): the more significand bits, the finer the spacing between representable numbers and the higher the precision. The number of exponent bits determines the range of values a format can represent, from the smallest to the largest magnitude. Finally, the rounding method (for example, IEEE 754’s default round-to-nearest, ties-to-even) determines how results that fall between two representable values are resolved.
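One way to see how significand width sets precision is to measure machine epsilon, the gap between 1.0 and the next larger representable number. The sketch below does this for 32-bit and 64-bit formats. It assumes CPython, where packing with the `struct` format `"f"` rounds a double to the nearest binary32 value; the helpers `to_float32` and `machine_epsilon` are hypothetical names for illustration.

```python
import struct
import sys

def to_float32(x: float) -> float:
    """Round a double to the nearest IEEE 754 binary32 value and widen it back."""
    return struct.unpack("f", struct.pack("f", x))[0]

def machine_epsilon(round_fn) -> float:
    """Halve eps until adding eps/2 to 1.0 no longer changes it after rounding."""
    eps = 1.0
    while round_fn(1.0 + eps / 2) != 1.0:
        eps /= 2
    return eps

eps32 = machine_epsilon(to_float32)    # 24-bit significand
eps64 = machine_epsilon(lambda x: x)   # 53-bit significand (native float)
print(eps32, eps32 == 2.0**-23)                          # 1.1920928955078125e-07 True
print(eps64, eps64 == sys.float_info.epsilon == 2.0**-52)  # 2.220446049250313e-16 True
```

Each extra significand bit halves epsilon, which is why the 29 additional bits of the 64-bit format shrink it by a factor of 2**29. The loop stops where it does because of ties-to-even rounding: `1.0 + eps/2` lands exactly halfway and rounds back down to 1.0.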

Comparison of Floating-Point Precision in Different Computing Systems

Computing systems differ in the floating-point formats they support. Modern general-purpose CPUs implement 32-bit (single) and 64-bit (double) IEEE 754 arithmetic in hardware, while GPUs and machine-learning accelerators increasingly support 16-bit formats (such as IEEE half precision and bfloat16) and even 8-bit formats that trade precision for speed and memory. Many small embedded microcontrollers have no floating-point hardware at all and emulate it in software. Consequently, the appropriate bit representation depends on the specific computing requirement and application.
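Python has no native 16-bit float type, but the `struct` module’s `"e"` format (available since Python 3.6) stores a value as IEEE 754 binary16. The sketch below uses that to emulate storing π at 16, 32, and 64 bits. It is an illustration of storage rounding only; 8-bit formats and bfloat16 are not covered by `struct`, and `round_trip` is a hypothetical helper name.

```python
import math
import struct

def round_trip(x: float, fmt: str) -> float:
    """Store x in a struct format and read it back as a Python float.

    'e' = IEEE 754 binary16 (half), 'f' = binary32 (single), 'd' = binary64 (double).
    """
    return struct.unpack(fmt, struct.pack(fmt, x))[0]

for name, fmt in [("16-bit", "e"), ("32-bit", "f"), ("64-bit", "d")]:
    stored = round_trip(math.pi, fmt)
    print(f"{name}: {stored!r:<20} error = {abs(stored - math.pi):.1e}")
```

Half precision keeps only 11 significand bits, so π is stored as 3.140625, already wrong in the third decimal place; that is acceptable for many machine-learning workloads but not for most scientific code.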

Comparison of Programming Languages in Handling Floating-Point Precision

Precision depends mainly on the data type a program uses rather than on the language itself, because most languages map their floating-point types onto the same IEEE 754 hardware formats. Python’s built-in `float` and MATLAB’s default `double` are both 64-bit doubles with 53 bits of significand precision. C++ and Java offer the same 64-bit `double` alongside a 32-bit `float`; C++ also provides `long double`, whose width is platform-dependent. Going beyond the hardware formats requires library support, such as Python’s `decimal` and `fractions` modules or Java’s `BigDecimal`.
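A quick way to confirm what a Python `float` is, and to see what a C or Java `float` would do with the same value, is sketched below. It assumes CPython on an IEEE 754 platform and uses `struct` packing with format `"f"` to emulate a 32-bit `float`; this is an emulation, not a call into C or Java.

```python
import struct
import sys

# CPython's float is a C double; on IEEE 754 platforms that means binary64.
print(sys.float_info.mant_dig)   # 53 significand bits
print(sys.float_info.max)        # 1.7976931348623157e+308

# Emulate a C/Java 32-bit `float` by storing 1/3 in 4 bytes and reading it back.
third64 = 1 / 3
third32 = struct.unpack("f", struct.pack("f", third64))[0]
print(third64)   # 0.3333333333333333
print(third32)   # 0.3333333432674408
```

The 32-bit value goes wrong after about seven digits, while the 64-bit value is correct to about sixteen, regardless of which language performs the calculation.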

Floating-Point Error and Its Implications

Despite their high accuracy, floating-point calculations can still exhibit errors, referred to as floating-point error. This error arises from rounding results to the nearest representable value and can accumulate over many operations, producing unexpected output. Subtracting two nearly equal numbers can amplify it, and invalid operations can yield special values such as NaN or infinity that silently propagate through later calculations. To mitigate these problems, math libraries and algorithms, such as compensated summation, have been developed to reduce floating-point error in computations.
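Compensated (Kahan) summation is one well-known algorithm of this kind. The sketch below compares it with a plain running total and with the standard library’s `math.fsum`, which returns a correctly rounded sum. The naive version is written as an explicit loop because recent CPython releases (3.12 and later) already use compensated summation inside the built-in `sum()`; `naive_sum` and `kahan_sum` are illustrative names, not library functions.

```python
import math

def naive_sum(values):
    """Plain running total: every addition rounds, and the errors accumulate."""
    total = 0.0
    for v in values:
        total += v
    return total

def kahan_sum(values):
    """Kahan summation: carry each step's rounding error into the next step."""
    total = 0.0
    compensation = 0.0                   # low-order bits lost so far
    for v in values:
        y = v - compensation             # re-inject the previously lost bits
        t = total + y                    # low-order bits of y may be lost here...
        compensation = (t - total) - y   # ...and are recovered algebraically here
        total = t
    return total

data = [0.1] * 10
print(naive_sum(data))    # 0.9999999999999999
print(kahan_sum(data))    # 1.0
print(math.fsum(data))    # 1.0
```

Kahan summation costs a few extra operations per element but keeps the accumulated error roughly independent of the number of terms, which matters for long sums such as those in simulations.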

Comparison Table of Floating-Point Formats and Language Types

| Format / language type | Significand precision (bits) |
|---|---|
| 32-bit IEEE 754 (single) | 24 |
| 64-bit IEEE 754 (double) | 53 |
| Python `float` | 53 |
| MATLAB `double` (default) / `single` | 53 / 24 |
| C++ `float` / `double` | 24 / 53 |
| Java `float` / `double` | 24 / 53 |

Opinion

In conclusion, understanding the limits of floating-point precision is critical for accurate numerical computations across different computing systems and programming languages. Because every floating-point format trades precision against range, speed, and memory, it is essential to choose the format and tools best suited to each application. Advances in math libraries and algorithms, such as compensated summation and arbitrary-precision arithmetic, have made floating-point error easier to manage, even though they cannot remove the inherent limits of a fixed-width format.

Thank you for taking the time to read this exploration into the limits of floating-point precision in computing. By now, you’ve learned how floating-point arithmetic works and why it matters in computer programming, as well as some of the flaws and limitations that come with it.

We hope this article has given you a better understanding of these complex concepts and how they relate to modern technology. Whether you’re a seasoned developer or simply someone interested in how computers work, it’s important to consider the implications of floating point precision and how it could impact the accuracy of your calculations.

As always, we encourage you to continue learning and exploring new topics in the field of computer science. If you have any questions or comments about this article, feel free to reach out to us. We’d love to hear from you and continue the conversation about this fascinating subject.

People Also Ask About Exploring the Limits of Floating Point Precision in Computing:

- What is floating point precision in computing?

- What are the limits of floating point precision in computing?

- Why is it important to explore the limits of floating point precision in computing?

- How can the limits of floating point precision be extended in computing?

- What are some applications that require high floating point precision in computing?

Floating-point precision refers to the number of significant digits a computer can represent in a value or calculation. It is determined by the number of bits allocated to the significand when a floating-point number is stored in a computer’s memory.

The limits of floating-point precision in computing are set by the storage format. Most modern computers use 32-bit or 64-bit formats, whose 24-bit and 53-bit significands provide roughly 7 and 15 to 16 significant decimal digits, respectively.
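The 7 and 15 to 16 digit figures follow from a simple rule of thumb: decimal digits of precision ≈ significand bits × log10(2). This sketch evaluates that formula and prints the figure Python itself reports for its native double, `sys.float_info.dig`, which is the number of decimal digits guaranteed to survive a round trip through a double.

```python
import math
import sys

# Decimal digits of precision ≈ significand bits × log10(2) ≈ bits × 0.301.
for name, bits in [("32-bit (single)", 24), ("64-bit (double)", 53)]:
    print(f"{name}: {bits} bits ≈ {bits * math.log10(2):.2f} decimal digits")

# Digits guaranteed to round-trip through the native 64-bit double.
print(sys.float_info.dig)   # 15
```

The formula gives about 7.22 and 15.95 digits, which is why a double is usually quoted as 15 to 16 significant digits and a single as about 7.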

Exploring the limits of floating point precision in computing is important to ensure that calculations and values are accurate and reliable. It can also help identify potential errors or limitations in software or hardware systems that rely on floating point arithmetic.

The limits of floating point precision in computing can be extended by using higher precision data types, such as quadruple-precision floating point numbers, or by using alternative numerical methods, such as arbitrary-precision arithmetic.
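Python’s standard library already includes two forms of extended precision: the `decimal` module, where the number of significant digits is configurable, and the `fractions` module, which performs exact rational arithmetic. The sketch below contrasts both with the native 53-bit double; the choice of 50 digits is arbitrary.

```python
import math
from decimal import Decimal, localcontext
from fractions import Fraction

# Arbitrary-precision decimal arithmetic: 50 significant digits, set locally.
with localcontext() as ctx:
    ctx.prec = 50
    root2 = Decimal(2).sqrt()
print(root2)           # 1.4142135623730950488016887242096980785696718753769

# The native 64-bit double keeps only about 16 significant digits.
print(math.sqrt(2))    # 1.4142135623730951

# Exact rational arithmetic: no rounding at all, unlike 0.1 + 0.2 with floats.
print(Fraction(1, 10) + Fraction(2, 10) == Fraction(3, 10))   # True
```

Both approaches run in software and are much slower than hardware floating point, so they are usually reserved for the parts of a program where precision truly matters.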

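Applications that require high numerical precision include scientific simulations and financial modeling; financial software often uses decimal arithmetic so that amounts such as 0.10 are represented exactly. Cryptography also depends on exact large-number arithmetic, although it uses arbitrary-precision integers rather than floating point.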