Are you tired of tuning function parameters by hand? Do you want to take your optimization skills to the next level? If so, multivariable optimization with SciPy’s minimize function is worth learning.

SciPy’s minimize function (scipy.optimize.minimize) searches for the parameter values that minimize a scalar objective function of several variables. This means that you can optimize complex functions and fit models that better reflect real-world scenarios.

Your understanding of multivariable optimization not only allows you to make more efficient decisions, but it also enables you to gain deeper insights into the behavior of the systems you are modeling. From finance to engineering, mastering multivariable optimization is a skill that every analytics professional needs to have in their toolkit.

If you want to take your optimization skills to the next level, keep reading. This article will guide you through the basics of SciPy’s minimize function and show how it compares with other techniques. By the end of this article, you will have the tools you need to approach many multivariable optimization problems.


Introduction

SciPy is a popular Python library for scientific computing. Its scipy.optimize module includes the minimize function, which performs multivariable optimization. This article compares SciPy’s minimize function with other optimization techniques and discusses its advantages and limitations.

Multivariable Optimization Overview

In many real-life applications, problems often deal with multiple variables that need optimizing simultaneously. There are various optimization techniques available that can handle these types of problems. These include quadratic programming, linear programming, genetic algorithms, and many more.

Quadratic Programming

Quadratic programming involves minimizing or maximizing a quadratic objective function subject to a set of linear constraints. This method applies to a wide range of industrial and engineering problems such as portfolio optimization, machine learning models, and transportation planning. However, for N variables the quadratic term is described by an N x N matrix, so memory use and solve time grow quickly for large problems. It is a specialized technique that may not be suitable for some general optimization tasks.
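As far as we know, scipy.optimize has no routine dedicated to quadratic programming, but a small QP can be handed to the general-purpose minimize function. The sketch below is only an illustration: the matrix Q, the vector c, and the constraint data are made-up values, and SLSQP is used as a general constrained solver rather than a specialized QP algorithm. It assumes a reasonably recent SciPy in which SLSQP accepts LinearConstraint objects.

```python
import numpy as np
from scipy.optimize import Bounds, LinearConstraint, minimize

# Illustrative QP: minimize 0.5 * x^T Q x + c^T x
# subject to A x >= b_lower and x >= 0 (all numbers are made up).
Q = np.array([[2.0, 0.0], [0.0, 2.0]])
c = np.array([-2.0, -5.0])


def objective(x):
    return 0.5 * x @ Q @ x + c @ x


def gradient(x):
    # Gradient of the quadratic objective (Q is symmetric).
    return Q @ x + c


A = np.array([[1.0, -2.0], [-1.0, -2.0], [-1.0, 2.0]])
linear = LinearConstraint(A, lb=[-2.0, -6.0, -2.0], ub=np.inf)
bounds = Bounds(lb=[0.0, 0.0], ub=[np.inf, np.inf])

qp_result = minimize(
    objective,
    x0=[2.0, 0.0],
    jac=gradient,
    method="SLSQP",
    bounds=bounds,
    constraints=[linear],
)
print(qp_result.x, qp_result.fun)
```

For this data the unconstrained minimum (1, 2.5) violates the first constraint, so the solver should settle on the boundary point near (1.4, 1.7).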

Linear Programming

Linear programming is a technique for optimizing a linear objective function subject to linear constraints. It is commonly used in operations research, economics, and business applications such as production planning, supply chain management, and resource allocation. Linear programming is fast and efficient but limited to linear relationships between variables, making it unsuitable for non-linear problems.
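For comparison, SciPy solves linear programs through a separate function, scipy.optimize.linprog. The following is a minimal sketch with made-up coefficients: it maximizes x + 2y under two resource limits. Since linprog minimizes, the objective is negated.

```python
import numpy as np
from scipy.optimize import linprog

# Maximize x + 2y  <=>  minimize -x - 2y (coefficients are illustrative).
cost = [-1.0, -2.0]

# Resource limits: x + y <= 4 and x + 3y <= 6.
A_ub = [[1.0, 1.0], [1.0, 3.0]]
b_ub = [4.0, 6.0]

# Both variables must be non-negative.
lp_result = linprog(cost, A_ub=A_ub, b_ub=b_ub, bounds=[(0, None), (0, None)])

print("optimal point:", lp_result.x)
print("maximum of x + 2y:", -lp_result.fun)
```

The feasible region has corners at (0, 0), (4, 0), (3, 1), and (0, 2), and the objective is largest at (3, 1), where it equals 5.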

Genetic Algorithms

Genetic algorithms simulate natural selection to solve optimization problems. They start with an initial population of candidate solutions and generate new ones by recombining and mutating existing ones. This process continues until a good solution is found or a predetermined number of generations is reached. Genetic algorithms can handle non-linear and multimodal problems but are computationally intensive and require careful parameter tuning for good results.
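SciPy does not provide a routine named after genetic algorithms, but scipy.optimize.differential_evolution is a related population-based evolutionary method, so we use it here as a stand-in. The sketch below applies it to the two-dimensional Rastrigin function, a standard multimodal test problem. The bounds and the seed value are arbitrary choices for illustration.

```python
import numpy as np
from scipy.optimize import differential_evolution


def rastrigin(x):
    """Multimodal test function with its global minimum of 0 at the origin."""
    x = np.asarray(x)
    return 10.0 * x.size + np.sum(x**2 - 10.0 * np.cos(2.0 * np.pi * x))


# Search box for each of the two variables (illustrative choice).
search_bounds = [(-5.12, 5.12), (-5.12, 5.12)]

# The seed only makes the stochastic run repeatable.
de_result = differential_evolution(rastrigin, search_bounds, seed=42)

print("best point:", de_result.x)
print("best value:", de_result.fun)
print("function evaluations:", de_result.nfev)
```

The evaluation count is typically far higher than for a gradient-based method, which is the price paid for searching the whole box instead of a single basin.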

Features of SciPy’s Minimize

The Scipy library provides a versatile and robust optimization function called minimize that can find the minimum of a multivariable objective function using various algorithms.
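A basic call looks like the following minimal sketch. The objective function and starting point are invented for illustration; with no method given and no bounds or constraints, minimize falls back to BFGS.

```python
import numpy as np
from scipy.optimize import minimize


def bowl(x):
    """Illustrative objective: a smooth bowl with its minimum at (1, -2)."""
    return (x[0] - 1.0) ** 2 + (x[1] + 2.0) ** 2


start = np.array([0.0, 0.0])  # initial guess for both variables
basic_result = minimize(bowl, start)

print("solution:", basic_result.x)
print("objective value:", basic_result.fun)
print("converged:", basic_result.success)
```

The returned object bundles the solution (x), the objective value at that point (fun), and a success flag, so it is worth checking success before trusting x.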

Wide Range of Optimization Algorithms

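SciPy’s minimize function supports various optimization methods such as BFGS, Nelder-Mead, Powell, CG, L-BFGS-B, TNC, and COBYLA. Each algorithm has different strengths and weaknesses. For example, Nelder-Mead, Powell, and COBYLA do not need derivatives, while BFGS, CG, L-BFGS-B, and TNC rely on gradients, and L-BFGS-B and TNC accept bounds on the variables. Users can choose an algorithm based on whether gradients are available, whether the problem has bounds or constraints, and how smooth the objective is.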
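One way to get a feel for the differences is to run several methods on the same problem. The sketch below uses the Rosenbrock test function that ships with SciPy (scipy.optimize.rosen) and a conventional starting point. The selection of methods is arbitrary, and evaluation counts will vary between SciPy versions.

```python
from scipy.optimize import minimize, rosen

start = [-1.2, 1.0]  # conventional starting point for Rosenbrock
method_results = {}

for method in ("Nelder-Mead", "Powell", "BFGS", "L-BFGS-B"):
    # Same objective and start; only the algorithm changes.
    method_results[method] = minimize(rosen, start, method=method)

for method, res in method_results.items():
    print(f"{method:12s} x={res.x}  f={res.fun:.2e}  evaluations={res.nfev}")
```

All four should end up close to the known minimum at (1, 1), but the number of function evaluations each one needs can differ noticeably.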

Constraint Handling

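SciPy’s minimize function can handle both equality and inequality constraints, which can be important in real-life applications. Users define each constraint either as a dictionary with a type ('eq' or 'ineq') and a function (often a lambda), or as a LinearConstraint or NonlinearConstraint object, and pass them through the constraints argument. In the dictionary form, an inequality means the function value must be non-negative. Only some methods accept constraints, including COBYLA, trust-constr, and SLSQP (Sequential Least Squares Programming), which is a common choice for smooth constrained problems.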
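The sketch below shows the dictionary form of constraints with SLSQP. The objective and both constraints are made up for illustration: one equality (x + y = 1) and one inequality (x >= 0.7, written so that the function is non-negative when satisfied).

```python
import numpy as np
from scipy.optimize import minimize


def squared_norm(x):
    """Illustrative objective: squared distance from the origin."""
    return x[0] ** 2 + x[1] ** 2


constraints = [
    # Equality: fun(x) must equal 0, so x + y = 1.
    {"type": "eq", "fun": lambda x: x[0] + x[1] - 1.0},
    # Inequality: fun(x) must be >= 0, so x >= 0.7.
    {"type": "ineq", "fun": lambda x: x[0] - 0.7},
]

constrained = minimize(
    squared_norm, x0=[1.0, 0.0], method="SLSQP", constraints=constraints
)
print(constrained.x, constrained.fun)
```

Without the inequality the best point on the line x + y = 1 would be (0.5, 0.5). With it, the solution moves to (0.7, 0.3), where the objective is 0.58.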

Function Derivatives

SciPy’s minimize function can use analytical or numerical derivatives of the objective function. Analytical derivatives are faster and more accurate but require mathematical skills to compute them. In contrast, numerical derivatives use finite differences to approximate the gradient, making them slower but easier to use. Users choose between the two through the jac parameter of minimize: pass a function that returns the gradient to use analytical derivatives, or leave jac unset so that gradient-based methods fall back to finite differences.
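The following sketch compares the two options on a hand-written Rosenbrock function. The gradient is derived by hand, so it is first checked against finite differences with scipy.optimize.check_grad. The function names are our own, and the exact evaluation counts depend on the SciPy version.

```python
import numpy as np
from scipy.optimize import check_grad, minimize


def rosenbrock(x):
    return (1.0 - x[0]) ** 2 + 100.0 * (x[1] - x[0] ** 2) ** 2


def rosenbrock_grad(x):
    # Partial derivatives of rosenbrock with respect to x[0] and x[1].
    dx = -2.0 * (1.0 - x[0]) - 400.0 * x[0] * (x[1] - x[0] ** 2)
    dy = 200.0 * (x[1] - x[0] ** 2)
    return np.array([dx, dy])


start = np.array([-1.2, 1.0])

# Norm of the difference between the analytical and finite-difference gradient.
grad_error = check_grad(rosenbrock, rosenbrock_grad, start)

numeric = minimize(rosenbrock, start, method="BFGS")  # finite differences
analytic = minimize(rosenbrock, start, method="BFGS", jac=rosenbrock_grad)

print("gradient check error:", grad_error)
print("numeric  nfev:", numeric.nfev, "x:", numeric.x)
print("analytic nfev:", analytic.nfev, "x:", analytic.x)
```

With finite differences, every gradient estimate costs extra objective evaluations, so the numeric run normally reports a higher nfev than the analytic one.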

Comparison Table

| Features | Scipy’s Minimize | Quadratic Programming | Linear Programming | Genetic Algorithms |
|---|---|---|---|---|
| Optimization Algorithms | Many | 1 | 1 | 1 |
| Constraint Handling | Yes | Yes | Yes | Some |
| Function Derivatives | Yes | No | No | Some |
| Applicability | General | Specialized | Linear | Non-linear |
| Computational Efficiency | Fast | Slow | Fast | Slow |
| Scalability | High | Low | High | Low |
| Parameter Tuning | Minimal | High | Low | High |

Conclusion

In conclusion, SciPy’s minimize function provides a versatile and reliable optimization method that can handle a wide range of multivariable problems. Its various optimization algorithms, constraint handling, and function derivative options make it suitable for general and specialized applications. Furthermore, it is usually fast on small and medium-sized smooth problems, and methods such as L-BFGS-B are designed to scale to problems with many variables.

While Scipy’s minimize function may not always be the best option for some specialized applications, it provides a reliable and relatively easy-to-use tool that can handle many real-life problems. With minimal parameter tuning and extensive documentation, we recommend Scipy’s minimize function to those looking for general-purpose optimization techniques.

We hope that after reading this article, you now have a better understanding of how to use Scipy’s Minimize function to solve multivariable optimization problems. With this powerful tool at your disposal, you can optimize a wide range of functions and achieve better results than ever before.

Whether you’re a professional researcher or simply someone who enjoys tinkering with complex mathematical problems, mastering multivariable optimization is an important skill to have. By using Scipy’s Minimize function, you can save yourself time and effort by automating the process of finding the optimal solution.

Thank you for visiting our blog and taking the time to read this article. We hope that you found it informative and helpful, and that you’re now inspired to explore the world of multivariable optimization further. If you have any questions or comments, please don’t hesitate to get in touch. We’d love to hear from you!

People Also Ask About Multivariable Optimization with SciPy’s Minimize

Here are some common questions that people ask about mastering multivariable optimization with Scipy’s Minimize:

-

What is Scipy’s Minimize?

Scipy’s Minimize is a Python library that provides functions for optimizing multivariable equations.

-

What is multivariable optimization?

Multivariable optimization is the process of finding the maximum or minimum value of a function that involves more than one variable.

-

Why is multivariable optimization important?

Multivariable optimization is important because many real-world problems involve multiple variables, and finding the optimal solution can lead to improved efficiency and cost savings.

-

What are some common optimization algorithms?

Some common optimization algorithms include gradient descent, Newton’s method, and the Broyden-Fletcher-Goldfarb-Shanno (BFGS) algorithm.

-

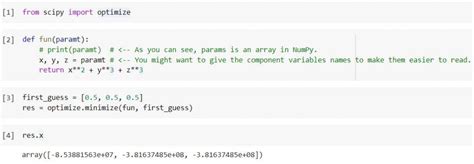

How do I use Scipy’s Minimize?

To use Scipy’s Minimize, you must define a function that you want to optimize and specify the initial values for the variables. You can then call the minimize function and pass in your function and initial values as arguments.

-

What are some tips for optimizing multivariable equations?

Some tips for optimizing multivariable equations include starting with good initial values, using appropriate optimization algorithms, and testing the robustness of your solution.
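One simple way to test robustness is to restart the optimizer from several initial values and compare the answers. The sketch below does this for a made-up function with two local minima. The function, the number of restarts, the sampling range, and the random seed are all illustrative choices.

```python
import numpy as np
from scipy.optimize import minimize


def tilted_double_well(x):
    """Two basins near x[0] = -1 and x[0] = +1; the left one is lower."""
    return (x[0] ** 2 - 1.0) ** 2 + x[1] ** 2 + 0.3 * x[0]


rng = np.random.default_rng(0)  # fixed seed so the run is repeatable
starts = rng.uniform(-2.0, 2.0, size=(20, 2))

# Run the same local optimizer from every starting point.
runs = [minimize(tilted_double_well, x0, method="BFGS") for x0 in starts]

best = min(runs, key=lambda r: r.fun)
distinct = sorted({tuple(np.round(r.x, 2)) for r in runs})

print("distinct end points:", distinct)
print("best point:", best.x, "value:", best.fun)
```

If different starts end at different points, the problem has several local minima, and the answer from a single run depends on the initial values.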