Do you want a firm grasp of gradient descent and of how to set adaptive learning rates? If so, you’ve come to the right place. This article walks through the essentials, from the basic idea behind gradient descent to the adaptive methods that adjust the step size for you.

Have you ever had gradient descent crawl toward the minimum, bounce around it, or settle in a poor local minimum? This can be a frustrating experience for data scientists and machine learning engineers alike. Adaptive learning rates do not guarantee an escape from local minima, but they often speed up convergence and reduce the amount of manual learning-rate tuning.

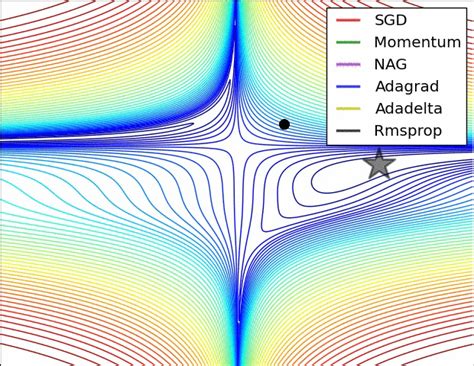

In this article, we’ll look at why a fixed learning rate can struggle and how adaptive methods such as Adagrad, RMSProp, and Adam address the problem. We’ll compare Adagrad and RMSProp in detail, covering the advantages and disadvantages of each and when to use them.

If you’re serious about improving your gradient descent performance and achieving better results in your machine learning models, then you won’t want to miss out on this comprehensive guide. So, read on and let’s delve into the world of mastering gradient descent!

“How To Set Adaptive Learning Rate For GradientDescentOptimizer?” ~ bbaz

Introduction

Gradient Descent is one of the most commonly used optimization algorithms in Machine Learning. It minimizes a cost function by iteratively adjusting the weights of the model. With large datasets or complex models with many parameters, updating the weights too slowly wastes training time, while updating them too quickly can overshoot the minimum or diverge. Adaptive learning rates address this by adjusting the step size dynamically during training, which often gives faster and more stable convergence than a single hand-tuned rate.

The Descending Gradient

In Gradient Descent, the goal is to minimize the cost function by finding the optimal set of weights. At each iteration, the algorithm computes the gradient of the cost function with respect to the weights. The gradient points in the direction of steepest ascent, so the weights are updated in the opposite direction, scaled by the learning rate, moving step by step toward a minimum.
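The update described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production optimizer: the cost function, the starting point, and the names `gradient_descent` and `grad_f` are assumptions chosen for the example.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Plain gradient descent: repeatedly step against the gradient."""
    w = list(w0)
    for _ in range(steps):
        g = grad(w)
        # Move each weight in the direction of the negative gradient.
        w = [wi - learning_rate * gi for wi, gi in zip(w, g)]
    return w


# Hypothetical cost: f(w) = (w[0] - 3)^2 + (w[1] + 1)^2, minimized at (3, -1).
def grad_f(w):
    return [2.0 * (w[0] - 3.0), 2.0 * (w[1] + 1.0)]


w_final = gradient_descent(grad_f, [0.0, 0.0])
print(w_final)
```

With this learning rate, each step shrinks the distance to the minimum by a constant factor, so the weights end up very close to (3, -1).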

The Need for Adaptive Learning Rates

Traditional Gradient Descent uses a single fixed learning rate for every parameter. This works well when the cost function is smooth and has similar curvature in all directions. When curvature differs widely between parameters, a rate small enough to be stable in the steep directions makes progress in the flat directions very slow, and a slightly larger rate can cause divergence. In such cases, adaptive learning rates help by giving each parameter its own effective step size.
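To make the limitation concrete, here is a small sketch on an assumed cost function whose curvature is 100 times steeper in one direction than in the other. The function, the starting point, and the three learning rates are illustrative choices, not values from any particular model.

```python
def run_fixed_rate(learning_rate, steps=100):
    """Minimize f(w) = 0.5 * (100 * w0**2 + w1**2) with one fixed step size."""
    w0, w1 = 1.0, 1.0
    for _ in range(steps):
        g0, g1 = 100.0 * w0, w1  # partial derivatives of f
        w0 -= learning_rate * g0
        w1 -= learning_rate * g1
    return w0, w1


# Too small, just inside the stable range, and just outside it.
for lr in (0.001, 0.019, 0.021):
    print(lr, run_fixed_rate(lr))
```

For this function the steep direction is only stable when the rate is below 0.02. At 0.019 the steep coordinate converges while the flat coordinate is still far from zero after 100 steps, and at 0.021 the steep coordinate diverges. No single rate suits both directions.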

Adaptive Learning Rates Techniques

There are several adaptive learning rate techniques, such as Adagrad, RMSProp, and Adam. Momentum is often mentioned alongside them, but it smooths the update direction rather than adapting the learning rate. The adaptive techniques scale each parameter’s step using a running statistic of its squared gradients, which reduces the need for manual tuning. Here we will compare the Adagrad and RMSProp methods.

Adagrad

Adagrad is an adaptive learning rate optimization algorithm that adjusts the learning rate for each parameter based on its historical gradients. It divides the step for each parameter by the square root of the sum of that parameter’s past squared gradients. Parameters tied to frequently occurring features, or with consistently large gradients, therefore receive smaller updates, while parameters tied to infrequent features receive larger ones. This works especially well with sparse data. The drawback is that the accumulated sum only grows, so the effective learning rate keeps shrinking and training can eventually stall.
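A minimal sketch of the Adagrad update rule in plain Python follows. The test function, the learning rate of 0.5, and the helper names `adagrad` and `grad_f` are assumptions made for illustration. Library implementations add options that are not shown here.

```python
import math


def adagrad(grad, w0, learning_rate=0.5, steps=200, eps=1e-8):
    """Adagrad sketch: per-parameter steps scaled by accumulated squared gradients."""
    w = list(w0)
    accum = [0.0] * len(w)  # running sum of squared gradients, one per parameter
    for _ in range(steps):
        g = grad(w)
        for i, gi in enumerate(g):
            accum[i] += gi * gi  # the sum never decreases
            w[i] -= learning_rate * gi / (math.sqrt(accum[i]) + eps)
    return w, accum


# Hypothetical cost with very different curvature per coordinate:
# f(w) = 0.5 * (100 * w[0]**2 + w[1]**2)
def grad_f(w):
    return [100.0 * w[0], w[1]]


w_final, accum = adagrad(grad_f, [1.0, 1.0])
print(w_final, accum)
```

Because each gradient is divided by the root of its own accumulated history, the steep and the flat coordinate follow almost the same path here despite the 100x difference in gradient scale. The accumulators themselves only grow, which is the source of the shrinking step size mentioned above.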

RMSProp

RMSProp is an adaptive learning rate optimization algorithm that uses an exponentially decaying moving average of squared gradients to adjust the learning rate. Because it scales the step by recent gradient history instead of accumulating all past gradients, its effective learning rate does not shrink toward zero the way Adagrad’s does. This makes it a good fit for non-stationary objectives, and it is commonly used to train sequential models like RNNs or LSTMs. It normalizes step sizes but does not by itself fix the vanishing and exploding gradients problem.
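The RMSProp update can be sketched the same way. The decay of 0.9 is a commonly used value; the learning rate, step count, test function, and the names `rmsprop` and `grad_f` are assumptions for this example.

```python
import math


def rmsprop(grad, w0, learning_rate=0.01, decay=0.9, steps=500, eps=1e-8):
    """RMSProp sketch: steps scaled by a moving average of squared gradients."""
    w = list(w0)
    avg_sq = [0.0] * len(w)  # decaying average of squared gradients
    for _ in range(steps):
        g = grad(w)
        for i, gi in enumerate(g):
            # Old history is multiplied by `decay`, so it is gradually forgotten.
            avg_sq[i] = decay * avg_sq[i] + (1.0 - decay) * gi * gi
            w[i] -= learning_rate * gi / (math.sqrt(avg_sq[i]) + eps)
    return w, avg_sq


# Hypothetical cost: f(w) = 0.5 * (100 * w[0]**2 + w[1]**2)
def grad_f(w):
    return [100.0 * w[0], w[1]]


w_final, avg_sq = rmsprop(grad_f, [1.0, 1.0])
print(w_final, avg_sq)
```

The only change from an accumulate-everything scheme is the decaying average: once gradients become small, `avg_sq` shrinks again, so the effective step size can recover instead of decreasing forever.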

Comparison Table between Adagrad and RMSProp

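| | Adagrad | RMSProp |
|---|---|---|
| Learning Rate Update | Adaptive, per parameter | Adaptive, per parameter |
| Gradient Scaling | Divide by the square root of the sum of all past squared gradients | Divide by the square root of a moving average of squared gradients |
| Memory Usage | One accumulator per parameter | One accumulator per parameter |
| Computational Complexity | Low | Low |
| Suitability | Sparse data | Non-stationary objectives; sequential models (RNNs/LSTMs) |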

Opinion

Both Adagrad and RMSProp are solid adaptive learning rate algorithms that can speed up and stabilize training on large datasets, complex models, or sequential structures. However, choosing the right optimization technique depends on the nature of the data, the complexity of the model, and the available computational resources. It is worth experimenting with several optimizers to find the one that suits the specific use case.
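One lightweight way to run such an experiment is to put both update rules behind the same interface and compare the final loss on the same problem. The sketch below does this on an assumed toy cost function. The names (`minimize`, `adagrad_update`, `rmsprop_update`), the learning rates, and the step count are illustrative, and results on a toy problem say little about a real model.

```python
import math


def minimize(update, grad, w0, steps):
    """Run one per-parameter update rule for a fixed number of steps."""
    w, state = list(w0), [0.0] * len(w0)
    for _ in range(steps):
        g = grad(w)
        for i in range(len(w)):
            w[i], state[i] = update(w[i], g[i], state[i])
    return w


def adagrad_update(w, g, s, lr=0.1, eps=1e-8):
    s = s + g * g  # sum of all squared gradients
    return w - lr * g / (math.sqrt(s) + eps), s


def rmsprop_update(w, g, s, lr=0.1, decay=0.9, eps=1e-8):
    s = decay * s + (1.0 - decay) * g * g  # moving average of squared gradients
    return w - lr * g / (math.sqrt(s) + eps), s


# Hypothetical cost and its gradient.
def loss(w):
    return 0.5 * (100.0 * w[0] ** 2 + w[1] ** 2)


def grad(w):
    return [100.0 * w[0], w[1]]


for name, update in (("Adagrad", adagrad_update), ("RMSProp", rmsprop_update)):
    w = minimize(update, grad, [1.0, 1.0], steps=50)
    print(name, loss(w))
```

Keeping the loop shared means the only difference between runs is the update rule itself, which makes the comparison easier to trust.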

Conclusion

Mastering Gradient Descent is an essential skill in Machine Learning. Adaptive learning rates are a key ingredient in this process, often giving faster and more stable convergence. Adagrad and RMSProp are two popular techniques that adjust the learning rate per parameter from the history of squared gradients, which reduces the need for manual tuning. Choosing the right method depends on the specific use case, and it’s always recommended to experiment and compare different techniques to achieve the best results.

Gradient Descent is a widely used optimization algorithm in machine learning and neural networks for minimizing a model’s error. Its behavior depends heavily on the learning rate, so choosing that rate well is crucial.

An adaptive method such as the Adam optimizer combines a momentum-style moving average of the gradient with RMSProp-style scaling by a moving average of squared gradients, so the step size for each parameter keeps adjusting throughout training. In practice this often means faster convergence and less manual tuning, although the base learning rate still has to be chosen sensibly.
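For reference, here is a minimal sketch of the Adam update in plain Python. The values `beta1=0.9`, `beta2=0.999`, and `eps=1e-8` are the commonly cited defaults. The learning rate, step count, cost function, and the names `adam` and `grad_f` are assumptions for this example.

```python
import math


def adam(grad, w0, learning_rate=0.05, beta1=0.9, beta2=0.999,
         eps=1e-8, steps=1000):
    """Adam sketch: moving averages of the gradient and of its square."""
    w = list(w0)
    m = [0.0] * len(w)  # moving average of gradients (first moment)
    v = [0.0] * len(w)  # moving average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad(w)
        for i, gi in enumerate(g):
            m[i] = beta1 * m[i] + (1.0 - beta1) * gi
            v[i] = beta2 * v[i] + (1.0 - beta2) * gi * gi
            # Bias correction: both averages start at zero.
            m_hat = m[i] / (1.0 - beta1 ** t)
            v_hat = v[i] / (1.0 - beta2 ** t)
            w[i] -= learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Hypothetical cost: f(w) = (w[0] - 3)^2 + (w[1] + 1)^2, minimized at (3, -1).
def grad_f(w):
    return [2.0 * (w[0] - 3.0), 2.0 * (w[1] + 1.0)]


w_final = adam(grad_f, [0.0, 0.0])
print(w_final)
```

In a real project you would normally use the optimizer shipped with your framework rather than a hand-written loop. The sketch is only meant to show where the two moving averages enter the update.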

Here are some common questions that people ask about gradient descent and adaptive learning rates:

- What is Gradient Descent?

Gradient Descent is an optimization algorithm used in machine learning to minimize the error of a model’s predictions by adjusting the model’s parameters iteratively.

- What are Adaptive Learning Rates?

Adaptive Learning Rates are a technique used with Gradient Descent to adjust the learning rate (step size) for each parameter during training, based on the history of that parameter’s gradients. This often helps the algorithm converge faster and with less manual tuning.

- Why is it important to set Adaptive Learning Rates?

A single fixed learning rate is rarely right for every parameter and every stage of training. Adaptive Learning Rates can reduce slow progress in flat directions and instability in steep ones. They do not guarantee that the algorithm avoids local minima.

- What are some common methods for setting Adaptive Learning Rates?

Common methods include Adagrad, RMSprop, and Adam. The choice of method depends on the specific problem and dataset you are working with. It may require some experimentation to determine which method works best for your particular situation.