If you are struggling with data filtering in PySpark, you are not alone. Filtering large datasets efficiently is often a challenge, and hand-written row filters can be time-consuming and error-prone. One technique that helps in many cases is grouping by columns with groupBy().

Grouping lets you aggregate rows that share the same value, or combination of values, in one or more columns, and then filter on the aggregated result. When the question you are asking is about groups rather than individual rows, this can simplify the filtering logic and reduce the number of rows the filter has to evaluate.

If you want to learn more about how to use groupBy() alongside filtering in PySpark, keep reading. In this article, we cover the basics of grouping columns and walk through some practical examples that you can apply to your own projects. Whether you are a beginner or an experienced PySpark user, you should find some useful tips here.

Don’t let the complexity of data filtering slow you down. Read on to see how grouping can make your filtering tasks more streamlined.


Introduction

PySpark is the Python API for Apache Spark, and it lets data analysts and software engineers work with large datasets. One of its core features is the ability to group rows by one or more columns, which can make filtering on aggregated values more efficient. In this article, we will explore how to use this feature effectively.

What are groupby columns?

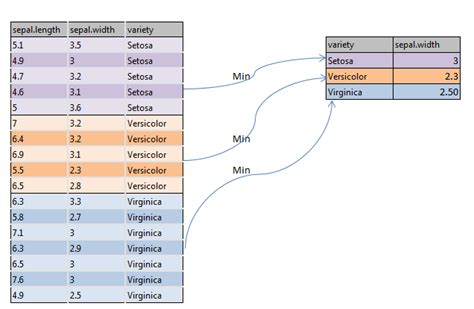

Groupby columns allow us to aggregate data based on specific columns in a dataset. This is similar to using the GROUP BY clause in SQL. When we call groupBy(), PySpark groups the rows by the selected columns and applies an aggregation to each group, producing one output row per group. Any filter applied afterwards runs on that smaller result. The aggregation itself still has to read every input row, so the saving applies to the steps that follow the grouping, not to the grouping itself.

How to use groupby columns in PySpark

Using groupby columns in PySpark is fairly simple. To start, we create a SparkSession object and load our dataset into a DataFrame. Once we have our DataFrame, we call the groupBy() method with one or more column names, then apply an aggregation such as sum(), count(), or agg() to the grouped result.

For example, let’s say we have a dataset with customer purchase data. We can group the data by customer ID and get the total purchase amount for each customer using the following code:

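```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('groupby_columns').getOrCreate()

# inferSchema=True reads purchase_amount as a number; sum() rejects string columns
df = spark.read.csv('customer_purchases.csv', header=True, inferSchema=True)

# One row per customer; the result column is named 'sum(purchase_amount)'
grouped_df = df.groupBy('customer_id').sum('purchase_amount')
```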

Comparing groupby columns to regular filtering

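To put groupby columns in context, let’s compare them with regular filtering. Suppose we are interested in purchases over $100. The two approaches below look similar, but they answer slightly different questions.

First, regular filtering keeps every individual purchase greater than $100:

```python
filtered_df = df.filter(df.purchase_amount > 100)
```

Second, groupby columns followed by a filter keep every customer whose total spending is greater than $100:

```python
grouped_df = df.groupBy('customer_id').sum('purchase_amount')
filtered_df = grouped_df.filter(grouped_df['sum(purchase_amount)'] > 100)
```

Comparing the two methods, the groupby version adds an aggregation step before the filter, and the results differ: a customer with two $60 purchases appears only in the second result. The plain filter is also the cheaper operation, because it works row by row and needs no shuffle, whereas groupBy() has to bring all rows for a customer together. Groupby columns pay off when the condition is about the group as a whole, because the final filter then runs over one row per customer instead of one row per purchase. When a row-level condition applies as well, apply it before grouping so that less data reaches the aggregation.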

Performance considerations

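While groupby columns can improve filtering efficiency, there are some performance considerations to keep in mind. One important consideration is the size of the dataset. Grouping generally requires a shuffle, in which rows with the same key are moved to the same partition. On large datasets with many rows per group, Spark combines rows within each partition before shuffling, so the result is much smaller than the input. If the dataset is small, or if almost every row has its own key, the overhead of the aggregation may outweigh the benefit.

Another consideration is the complexity of the aggregations. Sums, counts, and averages are cheap because they can be combined incrementally. Aggregations such as an exact median or other exact percentiles need to see all values in a group and so require more processing power and memory; an approximate percentile is often a reasonable substitute. It’s important to strike a balance between complexity and efficiency when selecting aggregations.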

Conclusion

In this article, we explored how to use groupby columns in PySpark to make filtering on aggregated values more efficient. We compared groupby columns to regular filtering and discussed performance considerations. While not a panacea, groupby columns can be an effective tool for working with large datasets in PySpark.

Thank you for taking the time to read our article on maximizing filtering efficiency with groupby columns in PySpark. We hope that you found the information helpful and informative as you navigate the world of PySpark development.

By using the groupBy() method in your PySpark scripts, you can often make group-level analysis simpler and faster. Whether you are working with large datasets or simply want to streamline your code, groupBy() is a tool that every PySpark developer should have in their toolkit.

Of course, this article is just the beginning when it comes to exploring the many features of PySpark. As you continue to delve deeper into the library, we encourage you to explore other resources and tutorials to help you master PySpark development.

Thank you again for visiting our blog and we wish you all the best in your future PySpark endeavors!

People also ask:

- What does maximizing filtering efficiency mean in PySpark?

Here it refers to reducing the amount of data that a filter has to evaluate, by aggregating with groupby columns when the condition is about groups rather than individual rows.

- How do groupby columns affect filtering efficiency in PySpark?

Grouping collapses each group into a single row, so a filter applied to the aggregated result evaluates far fewer rows. The aggregation itself still reads every input row and usually shuffles data between executors, so conditions on individual rows should be applied before groupBy() rather than after it.

- What are some best practices for using groupby columns in PySpark?

Some practices that tend to help:

- Choose the right columns to group by: The columns should match the question you are asking. Keep in mind that columns with very high cardinality produce many groups, which reduces the data less.

- Limit the number of columns to group by: Each extra column multiplies the number of possible groups, which can lead to slower performance and increased memory usage.

- Consider partitioning your data: Partitioning your data can further improve performance by allowing Spark to distribute the workload across multiple nodes.