Python is one of the most widely used programming languages around the world for developing various applications. However, with the ever-increasing size and complexity of software projects, enhancing the performance of Python programs has become a crucial aspect of application development. If you’re struggling to optimize your Python code, then Multi-Process Profiling is here to help.

Multi-Process Profiling is a powerful technique that enables developers to identify bottlenecks in their Python code by measuring each of the processes a program runs at the same time. With this technique, developers can pinpoint factors such as I/O-bound operations, high CPU utilization, memory usage, and other performance issues that contribute to slower execution times.

In this article, we delve deeper into the benefits of using Multi-Process Profiling for maximizing Python performance. We explain how it works, provide step-by-step instructions on how to use it, and highlight best practices for using profiling tools. By the end of this article, you’ll have a comprehensive understanding of how to profile your Python code efficiently and effectively, improving the performance of your programs significantly.

Don’t let slow-running Python code hold you back. Boost your productivity and get your applications performing at their best by reading this article in its entirety. Discover how Multi-Process Profiling can help you achieve maximum Python performance and take your software development skills to the next level.


Python Performance Optimization

When it comes to programming languages, Python is one of the most versatile and widely used in the world. However, it has performance limitations: interpreted Python code typically runs slower than compiled code, and in standard CPython builds the global interpreter lock (GIL) allows only one thread at a time to execute Python bytecode. As developers, we often find it challenging to optimize the performance of our Python applications. In this article, we will explore multi-process profiling in Python and how it can help maximize the performance of our code.

What is Multi-Process Profiling?

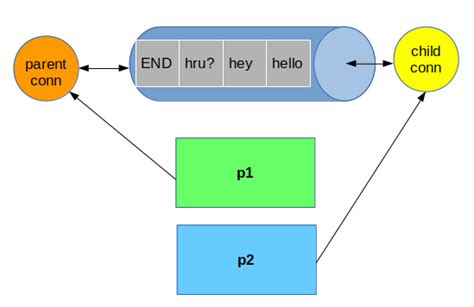

The Multi-Process Profiling approach is a way of analyzing the performance of a program that runs as several processes, by measuring how much time each process takes and where it spends that time. The program itself relies on multiprocessing: it runs parallel processes that execute independently of each other, each in its own memory space, and that exchange data through inter-process communication. The idea behind multiprocessing is to have multiple processes running simultaneously so that more work gets done in less time; profiling each process shows whether that is actually happening.

Benefits of Multi-Process Profiling

Multi-Process Profiling offers various benefits when it comes to Python performance optimization. Some of these benefits include:

Improved Speed

By running multiple processes concurrently rather than sequentially, you can take advantage of idle CPU cores, which speeds up CPU-bound code.
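As a rough illustration of the speed claim, the sketch below times the same CPU-bound work run sequentially and through a process pool. The function names (`count_primes`, `run_sequential`, `run_parallel`) and the workload sizes are made up for this example, and the measured speedup depends on how many cores your machine has.

```python
import time
from multiprocessing import Pool


def count_primes(limit):
    """CPU-bound work: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def run_sequential(limits):
    # One task after another in the current process.
    return [count_primes(limit) for limit in limits]


def run_parallel(limits, processes=4):
    # Each limit is handed to one of the worker processes in the pool.
    with Pool(processes=processes) as pool:
        return pool.map(count_primes, limits)


if __name__ == "__main__":
    limits = [20_000] * 8  # hypothetical workload: eight equal tasks

    start = time.perf_counter()
    sequential = run_sequential(limits)
    t_seq = time.perf_counter() - start

    start = time.perf_counter()
    parallel = run_parallel(limits)
    t_par = time.perf_counter() - start

    assert sequential == parallel  # same answers either way
    print(f"sequential: {t_seq:.2f}s, parallel: {t_par:.2f}s")
```

For very small tasks the pool can be slower than the plain loop, because starting processes and passing data between them has a cost of its own.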

Efficient Resource Utilization

With multiprocessing, you can put all available CPU cores to work, which leads to better overall performance of your program. Keep in mind that each process has its own memory space, so memory use grows with the number of processes.

Better Scalability

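The throughput of a CPU-bound application can grow as the number of processes increases, up to roughly the number of available CPU cores. This means that as your program grows, a multi-process design can handle a heavier workload, and profiling each process tells you when adding more processes stops helping.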

Multi-Process Profiling vs Multi-Thread Profiling

In addition to multi-process profiling, multi-thread profiling is another approach developers can use to improve Python’s performance. However, it is important to understand the differences between both approaches.

| Multiprocessing | Multithreading |
|---|---|
| Runs multiple processes concurrently | Runs multiple threads concurrently within a single process |
| Each process runs in its own memory space | All threads share the same memory space within a process |
| Scaling CPU-bound work across cores is easier | Scaling can be difficult: shared data must be protected with locks, and in standard CPython the GIL keeps CPU-bound threads from running in parallel |

As shown in the table above, multiprocessing generally scales better for CPU-bound work, at the cost of separate memory spaces and explicit communication between processes.
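The memory-space difference in the table can be seen in a small sketch. The module-level `counter` dictionary and the two helper functions are hypothetical names used only for this demonstration: a thread changes the parent's data directly, while a child process works on its own copy and has to send results back explicitly.

```python
import threading
from multiprocessing import Process, Queue

# Module-level state, used to show what is and is not shared.
counter = {"value": 0}


def increment():
    counter["value"] += 1


def increment_and_report(queue):
    # Runs in a child process: modifies the child's own copy of `counter`
    # and sends the value back through a queue.
    increment()
    queue.put(counter["value"])


if __name__ == "__main__":
    thread = threading.Thread(target=increment)
    thread.start()
    thread.join()
    print("after thread:", counter["value"])  # the thread changed shared state

    queue = Queue()
    process = Process(target=increment_and_report, args=(queue,))
    process.start()
    child_value = queue.get()
    process.join()
    print("value seen in child:", child_value)
    # still 1: the child changed only its own copy
    print("parent after process:", counter["value"])
```

The value the child reports depends on the process start method (a forked child starts from a copy of the parent's state, a spawned child re-imports the module), but in both cases the parent's `counter` is left untouched.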

Maximizing Python Performance with Multiprocessing Profiling

Here are some steps developers can take to maximize Python’s performance using multiprocessing:

Identify Bottlenecks

The first step is to identify the parts of your code that are taking up the most time. Profiling tools can help you identify these areas by showing how long each operation takes to execute.
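A minimal sketch of this step using the standard library's `cProfile` and `pstats` modules is shown below. The workload functions (`slow_sum_of_squares`, `fast_lookup`, `workload`) and the helper `profile_report` are placeholders invented for the example; substitute your own entry point.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    # Deliberately heavy loop so it stands out in the report.
    total = 0
    for i in range(n):
        total += i * i
    return total


def fast_lookup(n):
    return n % 7


def workload():
    slow_sum_of_squares(200_000)
    for i in range(1_000):
        fast_lookup(i)


def profile_report(func, top=5):
    """Run `func` under cProfile and return the top entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    func()
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(top)
    return buffer.getvalue()


if __name__ == "__main__":
    print(profile_report(workload))
```

Sorting by cumulative time puts the functions that account for most of the run, including the time spent in their callees, at the top of the report.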

Break Workload into Parallel Tasks

The next step is to break down the workload into smaller parallel tasks. You can use a “divide and conquer” approach to divide the work into smaller chunks that can be executed concurrently.
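One possible way to divide the work is sketched below, assuming the input is a list whose items can be processed independently. `split_into_chunks` is a hypothetical helper, not part of the standard library.

```python
from multiprocessing import Pool


def split_into_chunks(items, n_chunks):
    """Split `items` into at most `n_chunks` contiguous chunks of near-equal size."""
    if n_chunks < 1:
        raise ValueError("n_chunks must be at least 1")
    size, remainder = divmod(len(items), n_chunks)
    chunks = []
    start = 0
    for i in range(n_chunks):
        # The first `remainder` chunks get one extra item.
        end = start + size + (1 if i < remainder else 0)
        if end > start:  # skip empty chunks when there are few items
            chunks.append(items[start:end])
        start = end
    return chunks


if __name__ == "__main__":
    numbers = list(range(1_000))
    chunks = split_into_chunks(numbers, 4)
    with Pool(processes=4) as pool:
        partial_sums = pool.map(sum, chunks)  # one chunk per task
    print(sum(partial_sums) == sum(numbers))
```

Fewer, larger chunks reduce communication overhead, while more, smaller chunks balance the load better when task durations vary.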

Implement Multi-Process Profiling

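Use Python’s multiprocessing module to run the tasks in separate processes and collect their results through inter-process communication. A profiler started in the parent process records only the parent’s own calls, so to see where the workers spend their time, start a profiler inside each worker process and save its statistics for later analysis.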
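One way to do this with the standard library is sketched below: each task runs under its own `cProfile.Profile`, writes its statistics to a file, and the parent merges the files with `pstats`. The names `cpu_task`, `profiled_task` and `merge_profiles`, and the file naming scheme, are assumptions made for this example.

```python
import cProfile
import os
import pstats
import tempfile
from multiprocessing import Pool


def cpu_task(n):
    return sum(i * i for i in range(n))


def profiled_task(args):
    """Run cpu_task under its own profiler and dump the stats to a file."""
    n, out_dir = args
    profiler = cProfile.Profile()
    result = profiler.runcall(cpu_task, n)
    # File name combines process id and input; assumes inputs are distinct.
    path = os.path.join(out_dir, f"worker-{os.getpid()}-{n}.prof")
    profiler.dump_stats(path)
    return result, path


def merge_profiles(paths):
    """Combine several .prof files into one pstats.Stats object."""
    stats = pstats.Stats(paths[0])
    for path in paths[1:]:
        stats.add(path)
    return stats


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as out_dir:
        jobs = [(n, out_dir) for n in (50_000, 100_000, 150_000, 200_000)]
        with Pool(processes=2) as pool:
            outcomes = pool.map(profiled_task, jobs)
        paths = [path for _, path in outcomes]
        merged = merge_profiles(paths)
        merged.sort_stats("cumulative").print_stats(5)
```

Keeping one file per task also lets you inspect a single worker on its own when one process looks slower than the others.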

Monitor Performance

After implementation, monitor your program’s performance to ensure that it’s running efficiently. Continuously identify and eliminate bottlenecks to maximize performance.
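A lightweight way to keep an eye on a running pool, short of full profiling, is to record which process handled each task and how long it took. The sketch below assumes that approach; `timed`, `busy`, `timed_busy` and `seconds_per_process` are illustrative names.

```python
import os
import time
from collections import defaultdict
from multiprocessing import Pool


def timed(func, arg):
    """Call func(arg) and record the wall time and the worker's process id."""
    start = time.perf_counter()
    result = func(arg)
    return {
        "pid": os.getpid(),
        "seconds": time.perf_counter() - start,
        "result": result,
    }


def busy(n):
    return sum(i * i for i in range(n))


def timed_busy(n):
    # Top-level wrapper so the pool can pickle it.
    return timed(busy, n)


def seconds_per_process(records):
    """Total task time per process id, to spot uneven load."""
    totals = defaultdict(float)
    for record in records:
        totals[record["pid"]] += record["seconds"]
    return dict(totals)


if __name__ == "__main__":
    with Pool(processes=2) as pool:
        records = pool.map(timed_busy, [50_000, 100_000, 150_000, 200_000])
    for pid, seconds in seconds_per_process(records).items():
        print(f"process {pid}: {seconds:.3f}s of task time")
```

If one process accumulates far more task time than the others, the workload is probably split unevenly.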

Conclusion

By combining multiprocessing with per-process profiling, Python developers can see where each process spends its time and make better use of the available CPU cores. Multiprocessing scales well for CPU-bound work and can handle heavier workloads. By following these steps, developers can create applications that run faster and scale more effectively.

Thank you for reading our article about maximizing Python performance with multi-process profiling. We hope that the information we shared has been informative and useful to you, whether you are a beginner or an experienced Python developer.

By implementing the techniques we discussed in this article, you can significantly improve the performance of your Python applications. Multi-process profiling, in particular, can help you identify bottlenecks in your code and optimize them for faster execution.

In conclusion, we encourage you to continue exploring the world of Python and to keep learning new techniques and strategies for improving your code. If you have any questions or feedback about our article, feel free to leave a comment or get in touch with us directly.


Below are some commonly asked questions about maximizing Python performance with multi-process profiling:

- What is multi-process profiling in Python?

Multi-process profiling in Python is a technique for analyzing the performance of a program that runs as multiple processes at the same time, measuring each process separately. Spreading work across processes allows better utilization of available system resources, and profiling shows whether that actually translates into an improvement in overall performance.

- How does multi-process profiling work?

The workload of a Python program is divided among multiple subprocesses that run concurrently. Each subprocess is assigned a specific task, and the results of the subprocesses are combined to produce the final output. Profiling then measures each subprocess individually, so you can see which tasks dominate the run time and whether distributing the workload has improved overall performance.

- What are the benefits of multi-process profiling?

The main benefit of multi-process profiling is that it allows for better utilization of available system resources, which can result in significant improvements in overall performance. Other benefits include increased scalability, improved fault tolerance, and reduced latency.

- What are some best practices for multi-process profiling in Python?

Some best practices for multi-process profiling in Python include using a process pool to manage subprocesses, minimizing data transfer between processes, using shared memory to store data that needs to be accessed by multiple processes, and using profiling tools such as cProfile or the profiler integrated into PyCharm to identify performance bottlenecks.
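Two of these practices, a process pool and shared memory, can be combined as in the sketch below. It assumes Python 3.8 or later (for `multiprocessing.shared_memory`) and uses a made-up worker, `sum_slice`, that attaches to the shared block by name, so only the block name and two offsets are sent to each worker rather than the data itself.

```python
from multiprocessing import Pool
from multiprocessing.shared_memory import SharedMemory


def sum_slice(args):
    """Attach to an existing shared block by name and sum bytes in [start, stop)."""
    name, start, stop = args
    shm = SharedMemory(name=name)
    try:
        # Release the slice view before closing the block.
        with shm.buf[start:stop] as view:
            return sum(view)
    finally:
        shm.close()


if __name__ == "__main__":
    data = bytes(range(256)) * 4
    # The allocated block may be larger than requested, so track len(data).
    shm = SharedMemory(create=True, size=len(data))
    try:
        shm.buf[:len(data)] = data
        jobs = [(shm.name, start, start + 256)
                for start in range(0, len(data), 256)]
        with Pool(processes=2) as pool:
            partial_sums = pool.map(sum_slice, jobs)
        print(sum(partial_sums) == sum(data))
    finally:
        shm.close()
        shm.unlink()  # the creator is responsible for freeing the block
```

For small inputs, passing the data directly is simpler; shared memory pays off when the same large buffer would otherwise be copied to every worker.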

- What are some common performance bottlenecks in multi-process Python programs?

Common performance bottlenecks in multi-process Python programs include inefficient data transfer between processes, the overhead of starting processes for very short tasks, and poor load balancing between subprocesses. Each process has its own global interpreter lock (GIL), so GIL contention becomes an issue only when an individual process also runs several CPU-bound threads. These issues can be addressed through careful design and profiling of the program.
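Data transfer cost can be estimated before any processes are started, because arguments and results sent through the multiprocessing module are serialized with pickle. The sketch below measures that serialization for a payload; `payload_bytes` and `pickle_round_trip_seconds` are hypothetical helpers, and the numbers are only a proxy for the real inter-process cost.

```python
import pickle
import time


def payload_bytes(obj):
    """Size in bytes of the pickled form of `obj`."""
    return len(pickle.dumps(obj, protocol=pickle.HIGHEST_PROTOCOL))


def pickle_round_trip_seconds(obj, repeats=5):
    """Best-of-N time to pickle and unpickle `obj`."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        pickle.loads(pickle.dumps(obj, protocol=pickle.HIGHEST_PROTOCOL))
        best = min(best, time.perf_counter() - start)
    return best


if __name__ == "__main__":
    small = list(range(100))
    large = list(range(1_000_000))
    for label, obj in (("small", small), ("large", large)):
        size = payload_bytes(obj)
        seconds = pickle_round_trip_seconds(obj)
        print(f"{label}: {size} bytes, {seconds * 1000:.2f} ms round trip")
```

If the round trip for a task's input takes about as long as the task itself, sending that task to another process is unlikely to pay off.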