Apache Spark has emerged as a popular big data processing engine in recent years, and PySpark (often called Python Spark) is its Python API. One of Spark's core features is the Resilient Distributed Dataset (RDD), which enables parallel processing of very large datasets. Because an RDD is spread across a cluster and evaluated lazily, you cannot simply print it to see what is inside, so exploring its contents can feel daunting at first. Python Spark ships with a handful of built-in methods that make this inspection straightforward.

If you’re a data analyst or a data scientist, you know how time-consuming it can be to dig deep into large RDDs to find critical insights. With Python Spark, you can use the various built-in functions to discover the contents of RDDs without any hassle. From finding the most common words to mapping and filtering specific data sets, Python Spark allows you to analyze data quickly and efficiently.

Python Spark is an excellent tool for data exploration in big data environments. It provides analysts and data scientists with various libraries and functions to help them achieve their goals. With the ability to process large datasets and explore their contents with ease, Python Spark is undoubtedly a valuable asset to any company or organization that prioritizes data analysis and insight generation.

In short, if you want to streamline your data exploration and analysis, Python Spark is an excellent tool to consider. Its inspection methods let you look inside large datasets without pulling everything onto one machine, which saves time and resources. The rest of this article walks through the easiest ways to explore RDD contents.


Introduction

Python and Apache Spark are two buzzwords in the data science industry. Python is a popular high-level, general-purpose programming language that is easy to learn, read, and write. It has several libraries for scientific computing, such as NumPy, Matplotlib, and Pandas. On the other hand, Apache Spark is an open-source, distributed computing system that can process large-scale datasets with speed and ease. It uses a data abstraction called RDD (Resilient Distributed Dataset) to achieve fault-tolerant parallel processing. In this comparison blog post, we will explore how Python Spark makes it easy to explore RDD contents.

What is an RDD?

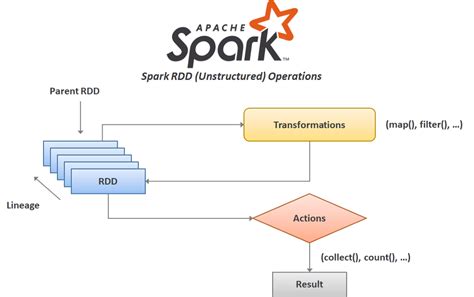

RDD stands for Resilient Distributed Dataset. It is a fundamental data structure in Apache Spark that represents an immutable distributed collection of objects. RDDs allow for transparent fault-tolerance by recomputing lost partitions on demand. They support two types of operations: transformations, which produce a new RDD from an existing one, and actions, which trigger computation and return a result to the driver program or write data to an external storage system.

Exploring RDD Contents in Python

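Python Spark provides several methods to explore the contents of an RDD. The examples below assume the interactive pyspark shell, where the SparkContext is already available as sc. Let’s look at some of them: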

collect()

The collect() method returns all the elements of an RDD to the driver program as a Python list. It is useful for small RDDs that fit in the driver's memory, but it is not recommended for large RDDs, where it can cause out-of-memory errors on the driver. Here is an example:

```python
>>> rdd = sc.parallelize([1, 2, 3, 4, 5])
>>> rdd.collect()
[1, 2, 3, 4, 5]
```

take()

The take() method returns the first n elements of an RDD to the driver program as a Python list. It is a safer alternative to collect() for large RDDs because it limits the amount of data transferred to the driver, and Spark scans only as many partitions as it needs to gather n elements. Here is an example:

```python
>>> rdd = sc.parallelize([1, 2, 3, 4, 5])
>>> rdd.take(3)
[1, 2, 3]
```

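count()

The count() method returns the number of elements in an RDD. The result is exact rather than an estimate, so Spark has to evaluate every partition to produce it. It is useful for checking the size of an RDD before deciding how to inspect it. Here is an example:

```python
>>> rdd = sc.parallelize([1, 2, 3, 4, 5])
>>> rdd.count()
5
```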

first()

The first() method returns the first element of an RDD to the driver program. It is useful for a quick check of what a single record looks like. Here is an example:

```python
>>> rdd = sc.parallelize([1, 2, 3, 4, 5])
>>> rdd.first()
1
```

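takeSample()

The takeSample(withReplacement, num, seed=None) method returns a random sample of num elements from an RDD to the driver program. The first argument controls whether an element may be picked more than once. It is useful for selecting a representative subset of an RDD. Because the sample is random, your output will likely differ from the one shown here:

```python
>>> rdd = sc.parallelize([1, 2, 3, 4, 5])
>>> rdd.takeSample(False, 3)
[2, 5, 1]
```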

Comparison Table

| Method | Description | Pros | Cons |
|---|---|---|---|
| collect() | Returns all elements of an RDD to the driver program as a list | Useful for small RDDs that can fit in memory | Can cause out-of-memory errors on the driver when the RDD is large |
| take() | Returns the first n elements of an RDD to the driver program as a list | Safer alternative to collect() for large RDDs because it limits the amount of data transferred to the driver | Shows only the start of the RDD and says nothing about its total size |
| count() | Returns the number of elements in an RDD | Gives the exact size of an RDD and makes it easy to compare RDD sizes | Evaluates every partition, which may require extra computation depending on the RDD lineage |
| first() | Returns the first element of an RDD to the driver program | Useful for inspecting the contents of an RDD without loading it into memory | Shows a single record, so it is of little use for statistics and aggregations |
| takeSample() | Returns a random sample of n elements from an RDD to the driver program | Useful for selecting a representative subset of an RDD | Statistics computed from a sample only approximate those of the full RDD |

Conclusion

Exploring RDD contents is an essential step in working with Apache Spark. Fortunately, Python Spark provides several methods to make this task easy and efficient. The collect() method is useful for small RDDs that can fit in memory, while the take() and takeSample() methods are safer alternatives for large RDDs. The count() method tells you exactly how many elements an RDD holds, while the first() method gives you a quick look at a single record. By using these methods wisely and understanding their pros and cons, you can explore RDDs with confidence and efficiency.

Thank you for taking the time to explore Python Spark with us. Our focus in this article was on RDD contents, and we hope that we have made it easy for you to understand the important concepts behind RDDs. By now, you can see how inspecting RDD contents fits into Big Data processing and why it is a powerful tool for data analysts and scientists alike.

We acknowledge that the topic of RDD contents may seem daunting at first, but as we have demonstrated in this article, understanding RDDs is not as hard as it seems. With the right resources and guidance, including the tools and techniques covered here, you can become comfortable working with RDDs in Python Spark.

We hope that you have found the information in this article both useful and informative. We believe that Python Spark is an exciting technology that is here to stay, and mastering RDD fundamentals is crucial to using it successfully. Please take advantage of our detailed discussions and examples and put them to work in shaping your Big Data projects.

People Also Ask about Python Spark: Exploring RDD Contents Made Easy

- What is RDD in Python Spark?

RDD stands for Resilient Distributed Dataset, a fault-tolerant collection of elements that can be processed in parallel across a cluster. In simple terms, an RDD is a fundamental data structure in Spark that is distributed across multiple nodes and can be kept in memory.

- How do you explore RDD contents in Python Spark?

You can use various methods to explore RDD contents in Python Spark, such as:

- collect(): It returns all the elements of the RDD to the driver program as a list.

- take(n): It returns the first n elements of the RDD.

- count(): It returns the number of elements in the RDD.

- foreach(f): It applies the given function f to each element of the RDD. The function runs on the executors and nothing is returned to the driver.

- first(): It returns the first element of the RDD.

The advantages of using RDDs in Python Spark are:

- Fault tolerance: RDDs are fault-tolerant and can recover from node failures.

- Distributed: RDDs are distributed across multiple nodes in a cluster, which can improve the performance of data processing.

- In-memory computation: RDDs can store data in memory, which can make data processing faster and more efficient.

Some common transformations you can apply to an RDD in Python Spark are:

- map(f): It applies the function f to each element of the RDD and returns a new RDD.

- filter(f): It filters the elements of the RDD based on the given condition and returns a new RDD.

- flatMap(f): It applies the function f, which returns a sequence of values, to each element of the RDD and flattens the results into a single new RDD.

- union(rdd): It combines two RDDs and returns a new RDD with the elements of both RDDs.

- distinct(): It removes the duplicates from the RDD and returns a new RDD.