Are you tired of struggling with PySpark debugging? Looking for a comprehensive solution that can help you better understand how to call PySpark in debug mode? Look no further! We have the perfect guide for you!

Our article, Python Tips: Calling PySpark in Debug Mode – A Comprehensive Guide, is designed to give you the tools you need to make debugging PySpark code simpler. It takes you step by step through using a debugger with PySpark, from attaching one to your application to pausing and inspecting your code.

We cover setting breakpoints, inspecting variables, stepping through program execution, and more. Whether you’re a beginner or an expert, this guide should improve your understanding of PySpark debugging and help you troubleshoot issues more easily.

So if you’re looking for an easy-to-follow guide that provides a comprehensive understanding of PySpark debugging, then this article is for you. We invite you to read our article in full and discover how to call PySpark in debug mode for effortless debugging today.

“How Can Pyspark Be Called In Debug Mode?” ~ bbaz

Introduction

If you’ve been working with PySpark, you know that debugging can be a real pain. It’s not always easy to figure out what’s causing an error or why your code isn’t working the way it should be. But don’t worry – we’re here to help. In this article, we’ll show you how to call PySpark in debug mode, so you can quickly identify and fix any problems in your code.

What is PySpark?

PySpark is a Python library that allows you to interface with Apache Spark, which is a powerful big data processing engine. By using PySpark, you can write Python code that can be executed in a distributed environment, allowing for fast and efficient processing of large datasets.

Why Debugging in PySpark is Important

Debugging is an important aspect of software development, and PySpark is no exception. When working with large datasets, it’s common to encounter errors or unexpected behavior in your code. By running your PySpark code under a debugger, you can identify and fix these problems more quickly, saving you time and frustration.

Setting up PySpark in Debug Mode

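The first step in debugging PySpark code is to run it under a debugger, so you can pause execution and inspect variables and program flow. Keep in mind that a PySpark application runs in several processes: your Python driver program, the Java Virtual Machine (JVM) that runs Spark itself, and Python worker processes on the executors that run your UDFs and the functions you pass to RDD operations. Driver code can be debugged like any other Python script, whereas code running on the executors needs a remote debugging setup.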

Attaching a Debugger

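To attach a Java debugger to the Spark JVMs running on your executors, you can pass extra JVM options when the application starts. If you launch PySpark from a plain Python script or from your IDE, one way to do this is the `PYSPARK_SUBMIT_ARGS` environment variable. It must be set before the SparkContext is created, and its value must end with `pyspark-shell`. For example, the following setting makes each executor JVM listen for a debugger on port 5005 and wait until one attaches:

| Environment Variable | Value |
|---|---|
| `PYSPARK_SUBMIT_ARGS` | `--conf spark.executor.extraJavaOptions=-agentlib:jdwp=transport=dt_socket,server=y,suspend=y,address=5005 pyspark-shell` |

Note that this uses the Java Debug Wire Protocol (JDWP), so it lets a Java debugger such as IntelliJ IDEA step through Spark’s JVM code; it does not debug your Python functions. To debug Python code on the driver, simply run your script with PyCharm’s debugger. Debugging Python code on the executors requires remote debugging, such as the PyCharm Professional setup described in the PySpark documentation.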
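If you launch PySpark from a plain Python script, you can also set this variable from Python itself, as long as you do it before the first `SparkSession` or `SparkContext` is created. The sketch below assumes a hypothetical helper, `enable_executor_jdwp`, that only builds and exports the string from the table; port 5005 and `suspend=y` mirror that example. Keep in mind that in local mode the executors run inside the driver JVM, so `spark.executor.extraJavaOptions` has no effect there, and a fixed port can clash if several executors share one host.

```python
import os


def enable_executor_jdwp(port=5005, suspend=True):
    """Hypothetical helper: export PYSPARK_SUBMIT_ARGS so executor JVMs listen for a Java debugger.

    This targets Spark's JVM code, not the Python functions you pass to Spark.
    """
    jdwp = (
        "-agentlib:jdwp=transport=dt_socket,server=y,"
        f"suspend={'y' if suspend else 'n'},address={port}"
    )
    # The trailing "pyspark-shell" is required when PySpark is launched from plain Python.
    submit_args = f"--conf spark.executor.extraJavaOptions={jdwp} pyspark-shell"
    os.environ["PYSPARK_SUBMIT_ARGS"] = submit_args
    return submit_args
```

Call `enable_executor_jdwp()` at the top of your script, then create the session as usual, for example with `SparkSession.builder.getOrCreate()`.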

Setting Breakpoints

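For Python code that runs on the driver, you can set breakpoints with the `pdb` module, Python’s built-in debugger; no special Spark configuration is needed. Simply add the following line of code where you want execution to pause (on Python 3.7 and later, the built-in `breakpoint()` function does the same thing):

| Code Example |
|---|
| `import pdb; pdb.set_trace()` |

Because pdb reads commands from an interactive terminal, this works for driver code, but not inside UDFs or functions passed to RDD operations such as `map`, which run in separate Python worker processes on the executors.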
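Because pdb needs a terminal to read commands from, a common workaround for logic that will eventually run on executors is to keep it in a plain Python function and exercise it on the driver with a few sample rows, where breakpoints work. The sketch below assumes a hypothetical row function, `normalize_row`, and a hypothetical `DEBUG_ROWS` environment variable that switches the breakpoint on.

```python
import os


def normalize_row(row):
    """Hypothetical per-row logic that you might later pass to rdd.map() or wrap in a UDF."""
    name, amount = row
    if os.environ.get("DEBUG_ROWS") == "1":  # hypothetical opt-in flag
        breakpoint()  # pauses here; only useful in an interactive driver session
    return name.strip().lower(), float(amount)


# Run the function locally on a few sample rows, where the debugger can stop.
sample_rows = [(" Alice ", "10.25"), ("BOB", "3")]
results = [normalize_row(row) for row in sample_rows]
print(results)  # [('alice', 10.25), ('bob', 3.0)]
```

Once the function behaves as expected, the same function can be handed to Spark, for example with `spark.sparkContext.parallelize(sample_rows).map(normalize_row)`; leave `DEBUG_ROWS` unset there so no worker waits for input.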

Inspecting Variables

One of the most useful things a debugger lets you do is inspect variables. When paused at a breakpoint, you can view the current value of any variable in scope; in pdb, for example, `p name` prints the value of `name` and `pp name` pretty-prints it. This can be especially helpful when trying to figure out why your code isn’t working the way it should.

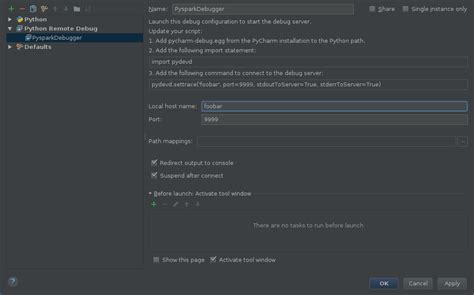
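On executors you usually cannot pause at a breakpoint, but you can still capture variable values at the point of failure. One technique, swapped in here from Python’s standard `traceback` module rather than anything PySpark provides, is `traceback.TracebackException` with `capture_locals=True`, which records the local variables of every frame. The sketch below assumes a hypothetical decorator, `with_locals`, and a hypothetical `parse_price` function; it re-raises any failure with those locals included in the message.

```python
import functools
import traceback


def with_locals(func):
    """Hypothetical decorator: re-raise any error with each frame's local variables attached."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        try:
            return func(*args, **kwargs)
        except Exception as exc:
            report = traceback.TracebackException.from_exception(exc, capture_locals=True)
            # The formatted report lists lines such as "    cleaned = 'abc'" under each frame.
            raise RuntimeError("".join(report.format())) from exc
    return wrapper


@with_locals
def parse_price(raw):
    """Hypothetical row-level function, e.g. the body of a UDF."""
    cleaned = raw.strip().lstrip("$")
    return float(cleaned)


print(parse_price(" $4.50 "))  # 4.5
```

In a PySpark job you might register the decorated function with `pyspark.sql.functions.udf(parse_price, "double")`; the re-raised message should then show up in the Python traceback that Spark reports back to the driver.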

Viewing Variables in PyCharm

If you’re using PyCharm as your debugger, you can view variables in the Variables pane of the Debug tool window. This pane lists all the variables currently in scope, along with their current values.

Tracing Program Execution

Another useful debugger feature is stepping through program execution. Once execution is paused at a breakpoint, you can advance one line at a time, either stepping over function calls or stepping into them, and see exactly what’s happening at each step. In pdb, the corresponding commands are `n` (next line), `s` (step into), and `c` (continue to the next breakpoint).
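Interactive stepping is the usual approach, but sometimes a non-interactive record of which lines ran is enough. As a swapped-in technique (Python’s standard `sys.settrace` hook, not a PySpark or PyCharm feature), the sketch below logs every line executed inside one function of the current process, so it only sees driver-side code. `make_line_tracer` and `add_tax` are hypothetical names used for illustration.

```python
import sys


def make_line_tracer(target_name, log):
    """Hypothetical helper: build a trace function that logs lines run inside one function."""
    def tracer(frame, event, arg):
        if frame.f_code.co_name != target_name:
            return None  # ignore every other function
        if event == "line":
            # Store the line offset relative to the function's "def" line.
            log.append(frame.f_lineno - frame.f_code.co_firstlineno)
        return tracer  # keep receiving events for this frame
    return tracer


def add_tax(amount, rate):
    tax = amount * rate
    total = amount + tax
    return total


executed = []
sys.settrace(make_line_tracer("add_tax", executed))
try:
    result = add_tax(100.0, 0.25)
finally:
    sys.settrace(None)  # always switch tracing off again

print(result, executed)
```

Tracing every line is slow, so a hook like this is best limited to one function and a small input, much like stepping in a debugger.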

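Stepping Through Code in PyCharm

To step through code in PyCharm, start your script with Debug instead of Run. When execution stops at a breakpoint, use the buttons in the Debug tool window: Step Over (F8 in the default Windows/Linux keymap) runs the current line and moves to the next one, Step Into (F7) follows a function call, and Resume Program (F9) continues to the next breakpoint.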

Conclusion

Debugging in PySpark can be frustrating, but with the right tools and techniques, it doesn’t have to be. In this article, we’ve shown you how to attach a debugger to PySpark, how to set breakpoints and inspect variables, and how to step through program execution. By using these techniques, you’ll be able to identify and fix problems in your code more quickly, making your PySpark development process much smoother.

Thank you for visiting our blog! We hope that you found our comprehensive guide to calling PySpark in debug mode helpful. Python is a powerful programming language, and PySpark provides an efficient way for developers to process large datasets using the power of distributed computing. Debugging is an essential part of software development, and being able to debug PySpark applications efficiently can save you time and effort.

We covered many different aspects of PySpark debugging in this guide, including how to attach a debugger, set breakpoints, inspect variables, and step through program execution with PyCharm’s debugging tools. By following the steps outlined in this guide, you should be able to debug your PySpark applications and track down issues more quickly.

If you have any feedback on this article or any suggestions for future topics, please don’t hesitate to leave a comment below or contact us directly. We’re always eager to hear from our readers and are dedicated to providing valuable resources to the Python community. Thank you again for visiting, and we hope to see you back here soon!

As Python is a widely used programming language, PySpark has become increasingly popular among data scientists and engineers for processing large datasets. In this section, we answer some of the most commonly asked questions about calling PySpark in debug mode.

1. What is PySpark?

- PySpark is a Python library used for processing large datasets using Apache Spark.

2. What is debugging?

- Debugging is the process of finding and fixing errors or bugs in code.

3. Why do I need to run PySpark in debug mode?

- Running PySpark in debug mode can help you identify and fix errors in your code more efficiently.

4. How do I call PySpark in debug mode?

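- For code that runs on the driver, run your script under a Python debugger: start it with Debug in your IDE, run it with `python -m pdb your_script.py`, or add `breakpoint()` calls. The PYSPARK_PYTHON environment variable only selects the Python executable PySpark uses, so it does not enable debugging by itself. Code that runs on the executors requires a remote debugging setup.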

5. What are some common PySpark debugging tools?

- Some common PySpark debugging tools include PyCharm, Visual Studio Code, and pdb.

6. How can I debug PySpark code in PyCharm?

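- You can debug PySpark code in PyCharm by setting breakpoints in your code and then running the script with Debug instead of Run. This pauses driver-side code; pausing code that runs on the executors requires a remote debugging setup, such as PyCharm Professional’s remote debugger.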
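Related to calling PySpark in debug mode: running `python -m pdb your_script.py` already drops into post-mortem debugging if the script crashes. If you would rather keep launching the script normally, a small wrapper can do the same for your driver’s entry point. The sketch below uses only the standard library; `run_with_post_mortem` and `job` are hypothetical names, and the debugger opens only when an interactive terminal is available, so non-interactive runs simply print the traceback and re-raise.

```python
import pdb
import sys
import traceback


def run_with_post_mortem(main, *args, interactive=None, **kwargs):
    """Hypothetical wrapper: run a driver entry point and open pdb on the failing frame."""
    if interactive is None:
        # Only open the debugger when someone can type commands into it.
        interactive = sys.stdin is not None and sys.stdin.isatty()
    try:
        return main(*args, **kwargs)
    except Exception:
        traceback.print_exc()
        if interactive:
            pdb.post_mortem()  # inspect the frame where the exception was raised
        raise


def job(values):
    """Hypothetical driver-side logic; a real job might build and collect a DataFrame here."""
    return sum(values) / len(values)


print(run_with_post_mortem(job, [1, 2, 3]))  # 2.0
```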

Understanding the answers to these common questions should make it easier to find and fix bugs in your PySpark code.