If you are working with PySpark, you know how challenging it can be to efficiently ship Python modules to other nodes. PySpark is the Python API for Apache Spark, a distributed computing system that runs programs on a cluster of machines. One of the biggest challenges is ensuring that all the necessary Python modules are available on every node in the cluster.

Fortunately, there are ways to improve the efficiency of shipping Python modules in PySpark. By understanding how PySpark and Python work together, you can optimize your workflow and ensure that your code runs smoothly. In this article, we will provide several tips for efficiently shipping Python modules in PySpark to other nodes.

If you want to improve your PySpark code’s performance and avoid issues with missing modules, then read on. This article offers practical advice and actionable tips that will help you streamline your PySpark workflows. By the end of this article, you will have a solid foundation for shipping Python modules in PySpark more efficiently.

Introduction

PySpark is an incredible tool for distributed data processing with its capacity to harness the power of Python. However, one significant challenge when working with PySpark is efficiently shipping Python modules to other nodes in the cluster. Without a proper workflow, Python modules tend to go missing, leading to problems in running code across multiple machines consistently.

Understanding PySpark and Python Linkage

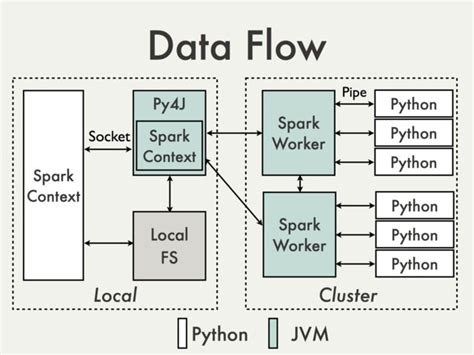

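In PySpark, the driver program runs in one Python process, while the executors on each worker node start their own separate Python worker processes to run your functions. Those workers import modules from the Python environment on their own machine, so a module that exists only on the driver machine is not automatically available to them. Spark distributes the files you register explicitly (for example with the --py-files option or SparkContext.addPyFile) to the worker nodes, but it is not advisable to rely on this alone for large dependency sets, because copying them at every job submission adds startup latency.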

Improve Shipping Efficiency in PySpark

We can improve how PySpark ships Python modules to other nodes by following specific coding practices to make our delivery process much quicker and more efficient. Below are some tips that can help:

Use Requirements files to Specify Dependencies

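A requirements.txt file lists all the libraries you need, along with their versions, and allows you to install everything at once with a single pip command. Spark does not run pip on the cluster nodes for you, so the file is typically used in one of two ways: to install the same packages on every node ahead of time, or to build a packed environment (for example with venv-pack or conda-pack) that is shipped with the job. Either way, every node ends up with the same pinned dependencies without installing them by hand.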
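One way to confirm that an interpreter really matches the pinned versions is to compare the requirements file with what is installed. The sketch below is only an illustration: the helper names `parse_pins` and `find_mismatches` are hypothetical, and it handles only simple `name==version` lines, ignoring extras, markers, and other specifiers.

```python
from importlib import metadata


def parse_pins(requirements_text):
    """Return {package_name: version} for lines of the form name==version."""
    pins = {}
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue  # this sketch skips unpinned or more complex specifiers
        name, version = line.split("==", 1)
        pins[name.strip()] = version.strip()
    return pins


def find_mismatches(pins):
    """Compare the pins with what is installed in the current interpreter.

    Returns {name: (wanted_version, installed_version_or_None)}.
    """
    problems = {}
    for name, wanted in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed at all
        if installed != wanted:
            problems[name] = (wanted, installed)
    return problems
```

Called on the driver, this checks only the driver's environment. To check a worker, the same function would have to run inside a Spark task so that it executes in the worker's interpreter.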

Bundling Dependencies into One Zip File

You can bundle all dependencies into one zip file, including all third-party libraries the program needs. This facilitates the sending of only one zip file around, which contains everything.
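A minimal sketch of the bundling step, using Python's standard `zipfile` module, might look like the following. The function names and the `spark` argument are assumptions for illustration; `spark` stands for an already running SparkSession. Importing from a zip works for pure-Python code only, so libraries with compiled extensions need a different route, such as a packed environment.

```python
import zipfile
from pathlib import Path


def build_module_zip(package_dir, zip_path):
    """Zip a pure-Python package so its top-level folder sits at the archive root."""
    package_dir = Path(package_dir).resolve()
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for source in sorted(package_dir.rglob("*.py")):
            # Store paths relative to the package's parent (e.g. mypkg/util.py)
            # so that "import mypkg" works once the zip is on sys.path.
            arcname = source.relative_to(package_dir.parent).as_posix()
            archive.write(source, arcname)
    return str(zip_path)


def ship_zip(spark, zip_path):
    """Register the archive with a running SparkSession (assumed to exist)."""
    # addPyFile distributes the file and adds it to the workers' import path
    # for tasks that run afterwards.
    spark.sparkContext.addPyFile(zip_path)
```

For example, `ship_zip(spark, build_module_zip("mypkg", "deps.zip"))` would bundle a hypothetical local package named `mypkg` and make it importable inside tasks.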

Compressing Files for Consistency

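In situations where many Python files are needed across different nodes, compressing them into a single archive reduces the burden of transmitting multiple files. The archive is created before the job is submitted, either manually with the zip command-line tool or from a script using Python's zipfile module, and is then handed to Spark for distribution.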

Optimizing PySpark Workflows

Beyond shipping Python modules more effectively, improving PySpark workflows involves optimizing code for speed, efficiency and readability. Below are some strategies:

Reduce Data Shuffling

Shuffling data in Spark can be expensive, so minimizing it wherever possible is crucial. You can avoid shuffling data by using techniques like broadcasting, filtering data based on partition level before joining, and more.
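As a rough illustration of the broadcasting technique, the helper below joins a large DataFrame with a small one and marks the small side with the `broadcast` hint. The function name and the DataFrame arguments are hypothetical; only `DataFrame.hint` and `DataFrame.join` come from PySpark.

```python
def broadcast_join(large_df, small_df, key):
    """Join two DataFrames while hinting that small_df should be broadcast."""
    # The "broadcast" hint asks Spark to copy small_df to every executor,
    # so the rows of large_df do not have to be shuffled by key for the join.
    return large_df.join(small_df.hint("broadcast"), on=key, how="inner")


# Usage with hypothetical DataFrames:
#   enriched = broadcast_join(orders_df, countries_df, "country_code")
```

This only pays off when the hinted DataFrame is small enough to fit comfortably in each executor's memory.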

Choose Reading Formats Carefully

Data can be read from various sources, such as HDFS or the local file system. However, choosing an unsuitable file format can lead to slow processing times. Give careful thought to the formats you read and write: for example, a columnar format such as Parquet usually suits analytical queries that touch only a few columns better than a row-oriented text format such as CSV.

Table Comparison: Shipping Efficiency Techniques

| Technique | Pros | Cons |
|---|---|---|
| Using Requirements.txt files | Simplifies installation on all nodes. | If setting up custom libraries or specific dependency-version requirements, additional detailed specifications may be required. |
| Bundling Dependencies into One Zip File | Facilitates easy delivery of a single file with all dependencies included. | Determining which libraries the program needs and creating the zip file accurately can be tedious. |
| Compressing Files for Consistency | Reduces the burden of transmitting multiple files across many nodes. | Compression/decompression can add an overhead cost due to additional computation time. |

Opinion

In conclusion, PySpark is a powerful tool for distributed computing. Good performance and consistent results depend on shipping Python modules efficiently and on optimizing code. The techniques discussed in this article provide practical steps for both. Choose the technique that fits your project's needs. With these tips in place, developers can work with PySpark more confidently and run into far fewer missing-module errors.

Thank you for taking the time to read our blog post about efficiently shipping Python modules in PySpark to other nodes! We hope that this article has given you some valuable insights and practical tips that you can use to streamline your PySpark workflows and improve your productivity.

As we’ve discussed in this article, using Python modules in PySpark can significantly enhance the functionality and flexibility of your data processing pipelines. However, shipping these modules to other nodes in a distributed environment can be a challenge, especially when dealing with large-scale datasets or complex analysis scenarios.

By following the best practices and strategies we’ve outlined in this post, you can ensure that your Python modules are delivered efficiently and reliably to all nodes in your PySpark cluster. Whether you’re working on a small personal project or collaborating on a large enterprise-level data pipeline, these tips will help you get the most out of your PySpark experience and achieve your data processing goals with ease.

People also ask about Python Tips: Efficiently Shipping Python Modules in PySpark to Other Nodes:

- What is PySpark and why is it important?

PySpark is the Python API for Apache Spark, a powerful open-source cluster-computing framework. It is important because it allows developers to write distributed data processing applications using Python, a popular programming language.

- What are Python modules and why do they need to be shipped efficiently in PySpark?

Python modules are files containing Python code that can be imported and used in other Python programs. In PySpark, these modules need to be shipped efficiently to other nodes in the cluster so that the code can be executed in a distributed manner.

- How can I efficiently ship Python modules in PySpark?

There are several ways to efficiently ship Python modules in PySpark:

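- Use the --py-files option of spark-submit when submitting your PySpark application to include the necessary .py, .zip, or .egg files.

- Use the PYSPARK_PYTHON environment variable to set the Python interpreter used on the worker nodes (PYSPARK_DRIVER_PYTHON sets it for the driver only). This does not ship anything; it points Spark at an interpreter that already has the modules installed.

- Use Python's zipfile module or the zip command-line tool to create a .zip file containing your Python modules, and distribute it to all nodes with --py-files or SparkContext.addPyFile.

- Package your Python modules as an egg file using setuptools and distribute it to all nodes in the same way. Eggs are an older packaging format, so a .zip file is often the simpler choice.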
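The first option can be sketched as a small helper that assembles the spark-submit arguments. The function name and the file names (`job.py`, `deps.zip`, `helpers.py`) are hypothetical; `--master` and `--py-files` are standard spark-submit options, and `--py-files` expects a single comma-separated list.

```python
import shlex


def spark_submit_command(app, py_files=(), master=None):
    """Build the argument list for spark-submit with an optional --py-files list."""
    command = ["spark-submit"]
    if master:
        command += ["--master", master]
    if py_files:
        # --py-files takes one comma-separated list of .py, .zip or .egg paths.
        command += ["--py-files", ",".join(py_files)]
    command.append(app)  # the application script comes after the options
    return command


cmd = spark_submit_command("job.py", py_files=["deps.zip", "helpers.py"], master="yarn")
print(shlex.join(cmd))
```

Returning a list rather than a string means the result can be passed directly to `subprocess.run` without shell quoting issues.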

Here are some best practices for shipping Python modules in PySpark:

- Minimize the number of Python modules you need to ship by only including the necessary modules for your application.

- Use the most efficient method for shipping your Python modules based on your specific use case.

- Test your PySpark application on a small cluster before deploying it to a larger cluster to ensure that your Python modules are being shipped efficiently.
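For the small-cluster test in the last tip, a quick smoke check can report whether a module is importable where tasks actually run. This is a sketch under assumptions: `probe_import` and `probe_cluster` are hypothetical names, `sc` stands for an existing SparkContext, and the functions are assumed to be defined in the driver script itself so that Spark can serialize them. Spark does not guarantee that the tasks land on every worker, so a clean result is an indication rather than proof.

```python
import importlib
import socket


def probe_import(module_name):
    """Try to import module_name here and report (hostname, succeeded)."""
    try:
        importlib.import_module(module_name)
        return socket.gethostname(), True
    except ImportError:
        return socket.gethostname(), False


def probe_cluster(sc, module_name, num_tasks=8):
    """Run the probe in num_tasks Spark tasks and return the distinct results."""
    tasks = sc.parallelize(range(num_tasks), num_tasks)
    results = tasks.map(lambda _: probe_import(module_name)).collect()
    return sorted(set(results))
```

Any entry whose second element is `False` names a host where the module could not be imported.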