Are you struggling with web scraping in Python and looking for a way to make it faster and easier to manage? This article, Python Tips: Enhance Your Web Scraping with Urllib2 and Keep-Alive Feature, walks through one approach.

We will show you how to use Urllib2 together with the Keep-Alive feature to improve web scraping efficiency. You’ll learn how to control and manage your connections, deal with HTTP errors, and handle other issues that come up during web scraping. Whether you’re a beginner or an experienced programmer, these tips should help you take your Python web scraping to the next level, so be sure to read the article from beginning to end.

Introduction

Web scraping is an important skill for any developer working with data. It allows you to extract the information you need from websites and use it in your own projects. However, it can be a complex and time-consuming process, particularly when working with large amounts of data. In this article, we will show you how to enhance your web scraping experience using Urllib2 together with the Keep-Alive feature, making it easier and more efficient.

What is Urllib2?

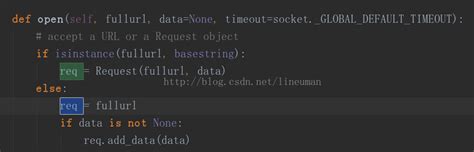

Urllib2 is a module in the Python 2 standard library that lets you send HTTP requests and handle the responses. In Python 3 the same functionality lives in urllib.request and urllib.error. It is useful for web scraping because it gives you direct control over each request: you can set headers, attach data, and install handlers for cookies, authentication, and redirects. URLs themselves are usually built with the companion urllib module (urllib.parse in Python 3), which encodes query parameters for you.
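As a minimal sketch of setting headers and building a URL, the snippet below assumes Python 3, where Urllib2's Request class is available as urllib.request.Request. The endpoint, query parameters, and User-Agent string are made up for illustration, and no request is actually sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical endpoint and query parameters, used only for illustration.
BASE_URL = "http://example.com/search"
params = {"q": "python scraping", "page": 2}

# urlencode() escapes the values and joins them into a query string.
url = BASE_URL + "?" + urlencode(params)

# A Request object carries the URL plus any headers to send with it.
request = Request(url, headers={"User-Agent": "my-scraper/0.1"})

print(request.full_url)
# Request stores header names capitalized, so the lookup key is "User-agent".
print(request.get_header("User-agent"))
```

Passing the finished Request object to urlopen() would send it; keeping URL construction separate from sending makes it easy to inspect what will go over the wire first.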

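What is the Keep-Alive Feature?

HTTP is a stateless protocol, which means that each request and response is treated as a separate transaction. A simple client also opens a new TCP connection for every request, and the repeated connection setup adds a lot of overhead when you fetch many pages from the same server. The Keep-Alive feature (persistent connections) reduces this overhead by allowing multiple requests to be sent over a single connection, which can make scraping many pages from one host noticeably faster. Note that Urllib2’s default handlers send a Connection: close header with each request, so connection reuse does not happen automatically; you need a lower-level HTTPConnection object (httplib in Python 2, http.client in Python 3) or a handler that keeps connections open.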
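To make connection reuse concrete, here is a small sketch that assumes Python 3 and uses http.client.HTTPConnection rather than Urllib2 itself. The throwaway local server and the PortEchoHandler class are illustration-only helpers: the server replies with the client's source port, so three identical values mean all three requests travelled over the same TCP connection.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class PortEchoHandler(BaseHTTPRequestHandler):
    """Illustration-only handler that replies with the client's source port."""

    # Speaking HTTP/1.1 lets the server keep the connection open between requests.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        body = str(self.client_address[1]).encode("ascii")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        # Content-Length is required so the client knows where the reply ends.
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


# Throwaway local server on a free port, so the sketch needs no network access.
server = HTTPServer(("127.0.0.1", 0), PortEchoHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

# One connection object, several requests.
conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1], timeout=5)
ports = []
for path in ("/a", "/b", "/c"):
    conn.request("GET", path)
    response = conn.getresponse()
    # Each response must be read completely before the connection is reused.
    ports.append(response.read().decode("ascii"))
conn.close()
server.shutdown()
server.server_close()

print(ports)
print("one connection reused:", len(set(ports)) == 1)
```

Against a real site you would create one HTTPConnection (or HTTPSConnection) per host and loop over the paths you want, falling back to a fresh connection if the server closes the old one.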

Controlling Your Connections

When working with web scraping, it’s important to manage your connections effectively. Urllib2 provides several tools to help you do this: you can set headers on each request, and you can use build_opener to combine handlers such as HTTPCookieProcessor for cookies and HTTPBasicAuthHandler for authentication details. This allows you to customize your requests once and reuse that setup for every page you fetch.
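The following sketch shows one way to combine headers, cookies, and authentication in a single opener. It assumes Python 3 (urllib.request and http.cookiejar in place of Urllib2 and cookielib); the site, user name, password, and User-Agent string are placeholders, and no request is sent.

```python
from http.cookiejar import CookieJar
from urllib.request import (
    HTTPBasicAuthHandler,
    HTTPCookieProcessor,
    HTTPPasswordMgrWithDefaultRealm,
    build_opener,
)

# Cookies set by the server are stored here and sent back on later requests.
cookie_jar = CookieJar()

# Hypothetical site and credentials, for illustration only.
password_manager = HTTPPasswordMgrWithDefaultRealm()
password_manager.add_password(None, "http://example.com/", "user", "secret")

# build_opener() adds these handlers on top of the default ones.
opener = build_opener(
    HTTPCookieProcessor(cookie_jar),
    HTTPBasicAuthHandler(password_manager),
)

# Headers in addheaders are sent with every request made through this opener.
opener.addheaders = [("User-Agent", "my-scraper/0.1")]

# opener.open(url) would now send the header, replay stored cookies, and
# answer HTTP Basic authentication challenges for the registered site.
print([type(handler).__name__ for handler in opener.handlers])
```

Building the opener once and calling opener.open() for each page keeps cookies and credentials consistent across the whole scraping session.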

Dealing with HTTP Errors

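No matter how carefully you set up your requests, it’s inevitable that you will encounter errors at some point when web scraping. These can include server errors, timeouts, and connection issues. Urllib2 reports them as exceptions: HTTPError when the server answers with an error status code, and URLError when no response arrives at all, for example because the connection was refused. A timeout surfaces either as socket.timeout or as a URLError that wraps it. Catching these exceptions lets you log or retry a failed request and continue scraping without disruption.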
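Here is a hedged sketch of that error handling, assuming Python 3 (urllib.request and urllib.error). The fetch helper, its return shape, and the always-503 BusyHandler are hypothetical names invented for this demo; the local server exists only so both failure modes can be triggered without touching a real website.

```python
import socket
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.error import HTTPError, URLError
from urllib.request import ProxyHandler, build_opener

# An empty ProxyHandler ignores proxy environment variables, so the local
# demo below always talks to 127.0.0.1 directly.
direct = build_opener(ProxyHandler({}))


def fetch(url, timeout=5):
    """Return (status, body, error); body is None when the request failed."""
    try:
        with direct.open(url, timeout=timeout) as response:
            return response.status, response.read(), None
    except HTTPError as err:
        # The server replied, but with a 4xx or 5xx status code.
        # HTTPError is a subclass of URLError, so it must be caught first.
        return err.code, None, str(err.reason)
    except URLError as err:
        # No HTTP reply at all: DNS failure, refused connection, and so on.
        return None, None, str(err.reason)
    except socket.timeout:
        # The connection was made but the reply took too long.
        return None, None, "timed out"


class BusyHandler(BaseHTTPRequestHandler):
    """Illustration-only handler that always answers 503."""

    def do_GET(self):
        self.send_response(503)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


server = HTTPServer(("127.0.0.1", 0), BusyHandler)
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

server_error = fetch("http://127.0.0.1:%d/" % port)
server.shutdown()
server.server_close()
# Nothing listens on the port any more, so this fails before any HTTP reply.
connection_error = fetch("http://127.0.0.1:%d/" % port)

print(server_error)
print(connection_error)
```

Returning the status and error text instead of raising lets the calling loop decide per URL whether to retry, skip, or stop.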

Handling Various Issues

When web scraping, a number of other issues can arise, such as redirects, character encoding, and parsing HTML. Urllib2 follows redirects automatically and exposes the final URL and the response headers, which tell you how the body is encoded. It does not parse HTML itself, so you will need a separate HTML parser for that step.
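As one example of the encoding step, the sketch below decodes a response body using the charset declared in its Content-Type header. It assumes Python 3; decode_body is a hypothetical helper, and a plain email.message.Message stands in for response.headers so the example runs without a network connection.

```python
from email.message import Message


def decode_body(raw_bytes, headers, default="utf-8"):
    """Decode a response body using the charset from its Content-Type header."""
    # get_content_charset() returns None when no charset is declared.
    charset = headers.get_content_charset() or default
    # errors="replace" avoids a crash on bytes that do not fit the charset.
    return raw_bytes.decode(charset, errors="replace")


# Stand-in for response.headers; real responses offer the same method.
headers = Message()
headers["Content-Type"] = "text/html; charset=iso-8859-1"

text = decode_body(b"caf\xe9", headers)
print(text)
```

With a real response you would call decode_body(response.read(), response.headers), and response.geturl() reports the final URL if the request was redirected along the way.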

Using Urllib2 in Your Projects

If you’re new to Python or web scraping, getting started with Urllib2 can seem daunting. However, once you get the hang of it, you’ll find that it’s a powerful tool that will make your web scraping process much more efficient. Our article provides a range of tips and tricks to help you get started, so you can take your Python web scraping game to the next level.

Comparison Table

| Urllib2 | Other Web Scraping Libraries |
|---|---|
| Flexible | Less flexible |
| More control over requests and responses | Less control over requests and responses |
| Keep-Alive requires an HTTPConnection object or a custom handler | May handle Keep-Alive for you |
| Requires more setup | Requires less setup |

Opinion

Overall, Urllib2 is a powerful tool for web scraping in Python. While it does require a little more setup than some other scraping libraries, it offers a lot of flexibility and control over requests and responses. Additionally, when combined with persistent Keep-Alive connections, it can make your web scraping process faster and more efficient, especially when you fetch many pages from the same server. If you’re new to Python or web scraping, it may take a little time to get the hang of using Urllib2 effectively, but once you do, you’ll find that it’s a useful tool in your development kit.

Thank you for visiting our blog and reading our latest post on Python Tips: Enhance Your Web Scraping with Urllib2 and Keep-Alive Feature. We hope that our tips and insights have been helpful to you in your web scraping endeavors.

Python is a powerful programming language with many useful features and tools, and we believe that understanding how to use Urllib2 and the Keep-Alive feature can greatly enhance your web scraping capabilities. With these tools at your disposal, you can access websites more quickly and efficiently, improving your productivity and results.

If you found this post helpful, we encourage you to explore our website further and check out some of our other articles on Python programming. Our goal is to provide useful and informative content to help fellow programmers and enthusiasts learn and grow in their skills and expertise.

People also ask the following questions about Python Tips: Enhance Your Web Scraping with Urllib2 and Keep-Alive Feature:

- What is web scraping? Web scraping is the process of extracting data from websites using automated software or tools.

- What is Urllib2? Urllib2 is a Python library that allows you to send HTTP/HTTPS requests and handle responses.

- How can I enhance my web scraping with Urllib2? You can enhance your web scraping with Urllib2 by using its various features, such as setting headers, handling cookies, and following redirects.

- What is the Keep-Alive feature in web scraping? The Keep-Alive feature allows multiple HTTP requests to be sent over a single TCP connection, which can improve the performance and efficiency of web scraping.

- How do I enable Keep-Alive in Python web scraping? You can use the HTTPConnection class and send all requests to a host through the same connection object. HTTP/1.1 connections are persistent by default, so the connection stays open as long as the server allows it and you read each response fully before sending the next request. Setting the ‘Connection’ header to ‘keep-alive’ mainly matters for older HTTP/1.0 servers.