As a Python developer, you may have run into trouble combining large datasets with interactive plots. Building a responsive plot from gigabytes of data and 20 million sample points can be frustrating.

But don’t worry, we have good news for you! In this article, we share tips for building interactive plots of 20 million sample points and gigabytes of data in Python. We will show you how to create stunning visualizations that not only display your data clearly but also let you interact with it in real time.

If you’re struggling to create interactive plots with large datasets, then you’ve come to the right place. Our article can help you alleviate those frustrations and empower you with the tools and skills necessary to create stunning visualizations that can handle massive amounts of data points.

So, what are you waiting for? Dive into our Python Tips: Exploring Interactive Large Plotting with 20 Million Sample Points and Gigabytes of Data article and unlock the secrets of visualizing big datasets with ease. Get ready to impress your colleagues and clients with your newfound plotting skills!

“Interactive Large Plot With ~20 Million Sample Points And Gigabytes Of Data” ~ bbaz

Introduction

Working with big datasets and interactive plots can be challenging for Python developers: making an interactive plot that handles a huge number of data points efficiently takes real effort. In this article, we provide practical tips and techniques for interactive plotting of 20 million sample points and gigabytes of data in Python.

The Problem of Dealing with Data in Python

Python developers often struggle when large datasets meet interactive plots, and keeping a plot responsive with gigabytes of data and millions of sample points can be frustrating. This section explains the common difficulties programmers encounter in this situation.

The Challenge of Handling Large Datasets

Dealing with gigabyte-scale datasets can be daunting. Loading, transforming, and plotting that much data takes time, and every step competes for memory and CPU. If the data, plus any intermediate copies made during processing, does not fit in RAM, the process can slow to a crawl as the system swaps to disk, or it can crash. Both memory usage and CPU usage therefore need to be taken into consideration.
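A quick back-of-the-envelope check shows where the memory goes. This sketch assumes the 20 million samples are stored as two numeric columns (say x and y); the two-column layout and the helper name `footprint_mb` are assumptions for illustration:

```python
import numpy as np

N_POINTS = 20_000_000  # sample count from the scenario above

def footprint_mb(n_rows, dtype, n_columns=2):
    """Raw array size in megabytes (10**6 bytes) for n_rows x n_columns values."""
    return n_rows * n_columns * np.dtype(dtype).itemsize / 1e6

for dtype in ("float64", "float32"):
    print(f"{dtype}: {footprint_mb(N_POINTS, dtype):.0f} MB")
# float64: 320 MB
# float32: 160 MB
```

That is only the raw arrays. Intermediate copies created while filtering, sorting, or converting can multiply the peak usage, which is why a dataset of this size can still exhaust a machine during processing.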

The Difficulty of Creating Interactive Plots with Millions of Points

If the dataset contains millions of points, the plot must visualize them efficiently to stay interactive. Managing that many points quickly becomes a performance bottleneck: when every point is drawn at once, each pan or zoom must re-render all of them, and interaction slows to a crawl. A 1920×1080 display also has only about 2 million pixels, so most of 20 million points cannot be distinguished on screen anyway.
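One common way around this bottleneck, added here as an illustration rather than a technique from the original text, is min-max decimation: split the samples into roughly as many buckets as the plot is wide in pixels and keep only the smallest and largest y in each bucket. Peaks and the overall envelope survive, but the plotting library receives thousands of points instead of millions. A minimal NumPy sketch, assuming evenly spaced, x-sorted samples such as a fixed-rate time series; `minmax_decimate` is a hypothetical helper name:

```python
import numpy as np

def minmax_decimate(x, y, n_buckets=2000):
    """Keep the min and max y of each bucket of consecutive samples.

    Returns at most 2 * n_buckets + 2 points, in their original order.
    Buckets are equal-size runs of samples, which correspond to equal
    x-ranges when the samples are evenly spaced (fixed sampling rate).
    """
    x = np.asarray(x)
    y = np.asarray(y)
    n = len(y)
    if n <= 2 * n_buckets:
        return x, y  # already small enough to draw directly

    size = n // n_buckets                  # samples per full bucket
    m = size * n_buckets                   # samples covered by full buckets
    blocks = y[:m].reshape(n_buckets, size)
    offsets = np.arange(n_buckets) * size  # first index of each bucket
    keep = [offsets + blocks.argmin(axis=1), offsets + blocks.argmax(axis=1)]

    if m < n:  # leftover samples form one short final bucket
        tail = y[m:]
        keep.append(np.array([m + tail.argmin(), m + tail.argmax()]))

    # np.unique sorts the indices and drops duplicates (when min == max).
    idx = np.unique(np.concatenate(keep))
    return x[idx], y[idx]
```

For example, `minmax_decimate(x, y, n_buckets=2000)` reduces a 20-million-sample series to at most 4,002 points before it reaches Matplotlib or any other plotting library. Because each bucket keeps its extremes, a single outlier spike is still drawn, which plain every-nth-sample subsampling can miss.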

The Solution: Exploring Interactive Large Plotting with Python

Python has a plethora of tools and libraries to make handling large datasets and creating interactive plots more manageable. In this section, we will highlight some techniques and libraries that can aid in solving the problems posed by big datasets and interactive plots.

Use NumPy and pandas libraries for managing large datasets

The NumPy and pandas libraries make it easier to work with large amounts of data. NumPy stores data in compact, typed arrays, so the data type you choose (for example float32 instead of float64) directly determines how much memory the data occupies. pandas builds on NumPy, letting you create and manipulate DataFrames quickly.
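One concrete form of that memory control is a memory-mapped array: `np.memmap` keeps the data in a file on disk and reads in only the parts you touch, so a multi-gigabyte array need not fit in RAM. A minimal sketch that uses a temporary file as a stand-in for a real data file; the file name and sizes are illustrative assumptions:

```python
import os
import tempfile

import numpy as np

n = 1_000_000  # illustrative size; the same code works for 20 million rows
path = os.path.join(tempfile.mkdtemp(), "samples.f32")

# Write the data once as raw float32 (half the size of float64).
out = np.memmap(path, dtype=np.float32, mode="w+", shape=(n,))
out[:] = np.linspace(0.0, 1.0, n, dtype=np.float32)
out.flush()
del out  # release the writable map

# Later, or in another process: map the same file read-only.
data = np.memmap(path, dtype=np.float32, mode="r", shape=(n,))

# Slicing reads only the touched region from disk.
window = data[500_000:500_010]
print(window.mean())
print(os.path.getsize(path))  # 4 bytes per float32 sample
```

The dtype and shape are not stored in the raw file, so the reader must pass the same values the writer used.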

Maximize Plotting Efficiency using Matplotlib

Matplotlib is a popular Python library for creating plots, including interactive ones. It can render millions of points, show relationships in the data, and plot histograms or other distributions, but rendering cost grows with the number of points drawn. Choosing lightweight markers, rasterizing dense layers, and reducing what is drawn keep the program responsive.
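A few Matplotlib settings that help with large point counts: draw with the single-pixel `","` marker and no connecting line, rasterize the dense artist so saved vector files (PDF/SVG) stay small, and use the off-screen `Agg` backend when you only need an image. A minimal sketch with made-up random data standing in for a real dataset; the sizes are illustrative:

```python
import io

import matplotlib
matplotlib.use("Agg")  # off-screen backend for writing files; omit for a GUI window
import matplotlib.pyplot as plt
import numpy as np

rng = np.random.default_rng(0)
n = 1_000_000  # illustrative; scale toward 20 million as memory allows
x = rng.standard_normal(n)
y = x + 0.5 * rng.standard_normal(n)  # made-up correlated data

fig, ax = plt.subplots(figsize=(6, 4))
# "," is the single-pixel marker; a marker-only format string draws no line.
# rasterized=True stores this dense layer as an image in PDF/SVG output.
(points,) = ax.plot(x, y, ",", alpha=0.3, rasterized=True)
ax.set_xlabel("x")
ax.set_ylabel("y")

buf = io.BytesIO()
fig.savefig(buf, format="png", dpi=100)  # render once to PNG in memory
plt.close(fig)
```

For long connected line plots, setting the `agg.path.chunksize` rcParam (for example to 10000) splits the path into pieces, which can help the Agg renderer cope with very large data sets.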

Parallel Computing using Dask

Instead of processing data sequentially in a single loop, parallel computing splits the work into pieces that run at the same time on multiple CPU cores. Dask brings this approach to NumPy- and pandas-style code: it divides large arrays and DataFrames into chunks, processes the chunks in parallel, and can work through datasets larger than memory, reducing overall processing time.
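The chunk-and-combine pattern behind this can be seen in miniature with the standard library: split an array into chunks, compute a partial result for each chunk concurrently, then combine the partials. This sketch uses `concurrent.futures.ThreadPoolExecutor`, which can run chunks in parallel because NumPy releases the GIL inside many of its numeric routines. It illustrates the idea only and is not how Dask's scheduler is implemented; `chunked_mean` is a hypothetical helper:

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np

def chunked_mean(values, n_chunks=8, max_workers=4):
    """Mean of a 1-D array, computed from per-chunk partial results in parallel."""
    chunks = np.array_split(np.asarray(values), n_chunks)

    def partial(chunk):
        # Each worker reduces its own chunk to a (sum, count) pair.
        return float(chunk.sum(dtype=np.float64)), chunk.size

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(partial, chunks))

    # Combine step: add up the partial sums and counts.
    total = sum(s for s, _ in results)
    count = sum(c for _, c in results)
    return total / count

data = np.random.default_rng(3).standard_normal(2_000_000)
print(chunked_mean(data), data.mean())
```

Threads help only while NumPy holds no GIL; for pure-Python work per chunk, a process pool is the usual substitute. Dask's schedulers take care of chunking, this threads-versus-processes choice, and larger-than-memory data for you.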

How to Implement These Techniques: A Step-by-Step Guide

Now that we know how to approach the challenges posed by big datasets and interactive plots, let us take a look at implementing these techniques. Here, we will provide you with step-by-step guidance on how to manage vast datasets and create compelling visualizations efficiently.

Step 1: Importing Libraries and Loading Data into NumPy or pandas

The first step is importing the necessary libraries and loading the data into NumPy or pandas. Which one to use depends on the data: NumPy arrays suit large blocks of homogeneous numeric data, while pandas DataFrames handle labeled columns of mixed types.
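A minimal sketch of Step 1 for CSV data, assuming a file with numeric `x` and `y` columns plus an unused `label` column; the column names, the inline sample data, and the x-window filter are hypothetical stand-ins for a real file and a real plotting range. Declaring compact dtypes up front and filtering each chunk as it is read keeps peak memory bounded:

```python
import io

import numpy as np
import pandas as pd

# Stand-in for a large CSV on disk; replace io.StringIO(...) with a real path.
csv_text = "x,y,label\n" + "\n".join(f"{i},{i * 0.5},a" for i in range(10_000))

reader = pd.read_csv(
    io.StringIO(csv_text),
    usecols=["x", "y"],                          # skip columns the plot does not need
    dtype={"x": np.float32, "y": np.float32},    # half the memory of float64
    chunksize=2_500,                             # yield DataFrames of at most 2,500 rows
)

# Keep only the (hypothetical) x-window we plan to plot, one chunk at a time,
# so the full file never has to sit in memory at once.
df = pd.concat((chunk[chunk["x"] < 5_000] for chunk in reader), ignore_index=True)

print(df.dtypes)
print(df.memory_usage(deep=True).sum())

# The underlying NumPy arrays, ready for plotting or numeric work.
x = df["x"].to_numpy()
y = df["y"].to_numpy()
```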

Step 2: Preprocessing and Cleaning Data

Next, preprocess and clean the data so that it is in a format suitable for plotting: remove null values, remove duplicates, and normalize values where the plot needs a common scale.
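In pandas, Step 2 might look like the sketch below: drop rows with missing values, drop exact duplicate rows, then min-max scale a column to the range [0, 1]. The toy DataFrame is made up for illustration, and min-max scaling is just one of several possible normalizations:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "x": [0.0, 1.0, 1.0, 2.0, 3.0, np.nan],
    "y": [10.0, 20.0, 20.0, np.nan, 40.0, 50.0],
})

clean = (
    df.dropna()             # remove rows with any missing value
      .drop_duplicates()    # remove exact duplicate rows
      .reset_index(drop=True)
)

# Min-max normalize y to [0, 1]; fall back to 0 for a constant column.
y_min, y_max = clean["y"].min(), clean["y"].max()
span = y_max - y_min
clean["y_norm"] = (clean["y"] - y_min) / span if span else 0.0
print(clean)
```

On tens of millions of rows, `drop_duplicates` builds a hash of every row and can itself use a lot of memory, so it may be worth running per chunk or only on the columns that matter.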

Step 3: Creating an Interactive Visualization using Matplotlib

The third step is creating an interactive visualization with Matplotlib. Performance matters here: draw only as many points as the display can show, use lightweight markers, and redraw only the visible range when the user pans or zooms.
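One way to keep a Matplotlib figure responsive is to draw a reduced view and recompute it whenever the x-limits change, so only the visible range is resampled. The sketch connects to the Axes `"xlim_changed"` callback and reduces each view by keeping the minimum and maximum sample in each of about 1,000 buckets. The random-walk data, `N_BUCKETS`, and `visible_minmax` are illustrative assumptions, not a built-in Matplotlib feature:

```python
import matplotlib
matplotlib.use("Agg")  # remove this line to get an interactive window
import matplotlib.pyplot as plt
import numpy as np

N_BUCKETS = 1000  # roughly the plot width in pixels (an assumption)

def visible_minmax(x, y, lo, hi, n_buckets=N_BUCKETS):
    """Min-max decimate the samples with lo <= x <= hi.

    Assumes x is sorted ascending. Keeps the min and max y of each bucket of
    consecutive samples; the short tail after the last full bucket is
    dropped for brevity.
    """
    i0 = np.searchsorted(x, lo, side="left")
    i1 = np.searchsorted(x, hi, side="right")
    xs, ys = x[i0:i1], y[i0:i1]
    if len(xs) <= 2 * n_buckets:
        return xs, ys  # few enough points to draw directly
    size = len(xs) // n_buckets
    m = size * n_buckets
    blocks = ys[:m].reshape(n_buckets, size)
    offsets = np.arange(n_buckets) * size
    idx = np.unique(np.concatenate([offsets + blocks.argmin(axis=1),
                                    offsets + blocks.argmax(axis=1)]))
    return xs[idx], ys[idx]

rng = np.random.default_rng(1)
n = 2_000_000  # illustrative stand-in for a ~20-million-sample series
x = np.arange(n, dtype=np.float64)
y = np.cumsum(rng.standard_normal(n))  # random-walk test signal

fig, ax = plt.subplots()
(line,) = ax.plot(*visible_minmax(x, y, x[0], x[-1]), lw=0.8)
ax.set_xlim(x[0], x[-1])
ax.set_ylim(y.min(), y.max())

def on_xlim_changed(axes):
    # Re-decimate only the visible range after every pan or zoom.
    lo, hi = axes.get_xlim()
    line.set_data(*visible_minmax(x, y, lo, hi))
    axes.figure.canvas.draw_idle()

ax.callbacks.connect("xlim_changed", on_xlim_changed)

# Simulate a zoom; in a GUI window, the toolbar's zoom and pan do the same.
ax.set_xlim(1_000_000, 1_010_000)
```

The full arrays stay in memory, but each redraw hands Matplotlib at most 2,000 points, so zooming deep into the data still shows full detail.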

Step 4: Implementing Parallel Computing with Dask

The last step is to implement parallel computing with Dask. For large datasets, parallel computing can significantly reduce processing time.
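A minimal Dask sketch for Step 4, assuming the `dask` package with its array support is installed. `dask.array.from_array` wraps an existing NumPy array in chunks; operations on it build a task graph lazily, and `dask.compute` runs the graph, processing the chunks in parallel. The random data and chunk size are illustrative assumptions:

```python
import dask
import dask.array as da
import numpy as np

rng = np.random.default_rng(2)
values = rng.standard_normal(4_000_000)  # stand-in for a large array

# Wrap the array in 8 chunks of 500,000 samples each.
arr = da.from_array(values, chunks=500_000)

# These calls only build a task graph; nothing is computed yet.
lazy_mean = arr.mean()
lazy_counts, edges = da.histogram(arr, bins=50, range=(-5.0, 5.0))

# dask.compute evaluates both results together, processing chunks in parallel.
mean_val, counts = dask.compute(lazy_mean, lazy_counts)
print(mean_val, counts.sum())
```

In a real pipeline you would load the chunks directly from disk (for CSV files, `dask.dataframe.read_csv`) instead of starting from an in-memory NumPy array, so the full dataset never has to fit in RAM.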

The Advantages of Following These Techniques

By following the techniques discussed above, programmers benefit in several ways: better program performance, time savings, and clearer visual representations of the data. These techniques also simplify big-data management, letting developers work with gigabytes of data without being held back by inefficient code.

The Conclusion: Unlocking the Secrets of Visualizing Big Datasets with Ease

Visualizing big datasets can be a daunting task that fills developers with dread. However, this article has shown how Python, with its powerful libraries and frameworks, can simplify and streamline the process of creating efficient interactive plots. By practicing these techniques, you will grow more confident in your abilities as a developer and be able to create stunning visualizations that impress both your colleagues and clients.

| Technique | Advantages |
|---|---|
| NumPy and pandas libraries | Efficient data management and manipulation |
| Maximizing plotting efficiency with Matplotlib | Better visualization and faster rendering |
| Parallel computing using Dask | Faster processing by spreading work across CPU cores |

Based on the table above, we can see that applying these techniques offers several advantages. Combined, they positively impact the program’s performance, reduce processing time, and enable efficient data management.

Dear valued blog visitors,

It was a pleasure sharing with you today’s Python Tips on interactive plotting with 20 million sample points and gigabytes of data. With ever-increasing volumes of data, it is essential to have practical ways of managing, manipulating, and visualizing information. Python offers such approaches, making it easier to extract insights while saving time and reducing coding errors.

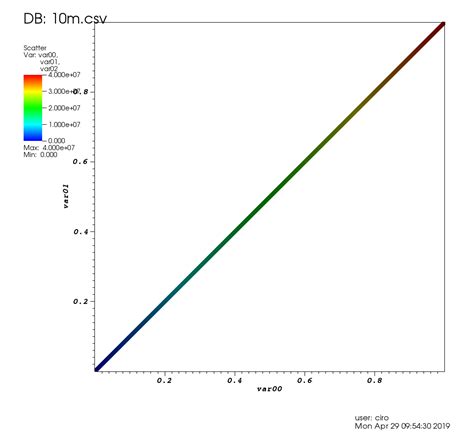

If you are dealing with big data, the interactive Matplotlib scatter plot tool discussed in this article will come in handy. It excels at giving an overview of patterns and summary statistics, especially when the data is too massive to display in one go. The interactivity adds further value by letting you examine relationships between data points at a finer level of granularity.

We hope our tips have been useful to you. Feel free to share feedback on how the strategies worked for you, or any related information that could help other readers make informed decisions about interactive plotting tools. We look forward to hearing from you; stay tuned for more exciting Python Tips on big data handling.

People also ask about Python Tips: Exploring Interactive Large Plotting with 20 Million Sample Points and Gigabytes of Data:

- What is interactive large plotting in Python?

Interactive large plotting in Python involves creating visualizations that allow users to interact with the data, zooming in on specific areas or selecting specific points for further analysis. This is useful when dealing with large datasets, such as those with 20 million sample points and gigabytes of data.

- What are some Python tips for exploring large datasets?

- Use a powerful computer with plenty of memory to handle large datasets.

- Use efficient data structures, such as NumPy arrays, to manipulate large amounts of data quickly.

- Use visualization tools like Matplotlib or Bokeh to explore and analyze large datasets.

- Use interactive plotting tools like Plotly or HoloViews to create dynamic visualizations that allow users to zoom in and explore specific areas of the data.

There are several tools available for plotting large datasets in Python, including Matplotlib, Bokeh, Plotly, and HoloViews. These tools allow you to create static or interactive visualizations that can handle millions of data points.

More general practices for working with large datasets in Python include:

- Use efficient data structures and algorithms to manipulate large amounts of data.

- Use parallel processing or distributed computing to speed up computations.

- Use specialized libraries or frameworks like Dask or Apache Spark to work with very large datasets that don’t fit into memory.

- Use visualization tools to explore and analyze large datasets.

- Document your code and analysis to make it easier to reproduce and share with others.