Are you struggling with Python web scraping and running into ‘Cannot Find Element’ errors? You’re not alone, and the good news is that these errors are usually fixable. With a few simple Python tips, you can troubleshoot your scraper and resolve them.

In this article, we’ll take you step by step through resolving ‘Cannot Find Element’ errors for elements you can clearly see in your browser. From checking the HTML structure of the page you’re scraping to using Python tools like Selenium and Beautiful Soup, we’ll cover what you need to get your web scraping project back on track.

If you’re tired of feeling stuck and limited by these common web scraping errors, don’t give up just yet. With our expert tips and guidance, you can gain confidence in your Python skills and become a more efficient and effective web scraper. So don’t wait any longer – read on to discover how you can finally resolve those ‘Cannot Find Element’ errors!

“Web Scraping Program Cannot Find Element Which I Can See In The Browser” ~ bbaz

Troubleshooting ‘Cannot Find Element’ Errors in Python Web Scraping

Introduction

Python web scraping can be challenging, especially when you encounter errors like ‘Cannot Find Element’. This article will guide you through resolving these errors with simple tips and Python tools like Selenium and Beautiful Soup.

Understanding the Issue

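A ‘Cannot Find Element’ error means your script’s selector matched nothing in the HTML it was working with. When you can see the element in your browser, the usual causes are that the content is added by JavaScript after the initial page load (so it is missing from the raw HTML your script downloads), that the script looks for the element before it has loaded, that the selector is wrong, or that the site has changed its HTML structure. Before attempting a fix, it’s important to work out which of these applies.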

Checking the HTML Structure

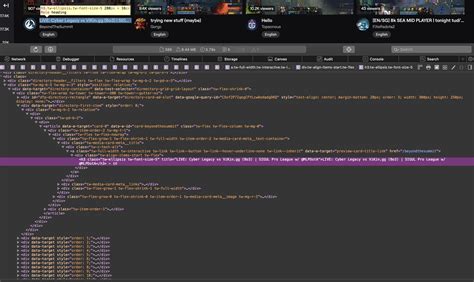

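One way to diagnose this error is to examine the HTML structure of the page you’re scraping. Use your browser’s developer tools to inspect the element and make sure your selector targets it correctly. Keep in mind that the Elements panel (Inspect Element) shows the live page after JavaScript has run, while View Page Source shows the original HTML that your script downloads. If the element appears only in the former, it is being rendered dynamically.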
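A quick way to tell a wrong selector apart from JavaScript-rendered content is to test the selector against the raw HTML your script actually receives. The sketch below assumes Beautiful Soup 4 is installed; the helper name `selector_matches` and the sample HTML are hypothetical. In a real script, the `html` string would come from your HTTP client (for example `requests.get(url).text`, assuming you use requests).

```python
from bs4 import BeautifulSoup


def selector_matches(html: str, css_selector: str) -> bool:
    """Return True if css_selector matches at least one element in html."""
    soup = BeautifulSoup(html, "html.parser")
    # select_one() returns None when nothing matches
    return soup.select_one(css_selector) is not None


# Hypothetical raw HTML as downloaded: the price <span> is inserted later by
# JavaScript, so it appears in the browser's Elements panel but not here.
raw_html = """
<html><body>
  <div id="product"><h1 class="title">Widget</h1></div>
  <script src="render-price.js"></script>
</body></html>
"""

print(selector_matches(raw_html, "h1.title"))    # True
print(selector_matches(raw_html, "span.price"))  # False
```

If a selector matches in the browser’s Elements panel but not against the downloaded HTML, the element is most likely rendered by JavaScript rather than mistyped.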

Using Selenium

Selenium is a powerful tool that allows you to automate web browsers. You can use it to interact with elements on the page, wait for elements to load, and retry if the element is not present.

Using Beautiful Soup

Beautiful Soup is a Python library that allows you to parse HTML and XML documents. It provides a simple interface to access elements on the page, making it easy to extract data from websites.
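With Beautiful Soup, a missing element does not raise an error right away: `find()` and `select_one()` return `None`, and the failure only surfaces later as `AttributeError: 'NoneType' object has no attribute 'get_text'`. A small guard makes the missing element explicit. This sketch assumes Beautiful Soup 4; the helper `text_or_none` and the sample HTML are hypothetical.

```python
from typing import Optional

from bs4 import BeautifulSoup


def text_or_none(soup: BeautifulSoup, css_selector: str) -> Optional[str]:
    """Return the stripped text of the first match, or None if nothing matches."""
    element = soup.select_one(css_selector)
    if element is None:
        return None
    return element.get_text(strip=True)


html = '<div class="card"><h2 class="name"> Widget </h2></div>'
soup = BeautifulSoup(html, "html.parser")

print(text_or_none(soup, "h2.name"))     # Widget
print(text_or_none(soup, "span.price"))  # None
```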

Retrying Requests

Sometimes, even after using Selenium or Beautiful Soup, you may still encounter ‘Cannot Find Element’ errors. In this case, it may be worth retrying the request a few times before giving up.
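A minimal, library-agnostic retry helper using only the standard library. It calls a function up to `attempts` times and waits longer after each failure (exponential backoff). The name `retry` and its parameters are hypothetical; pass the exception types your scraper actually raises, for example Selenium’s `NoSuchElementException` or `TimeoutException`.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(
    func: Callable[[], T],
    attempts: int = 3,
    delay: float = 1.0,
    backoff: float = 2.0,
    exceptions: Tuple[Type[BaseException], ...] = (Exception,),
) -> T:
    """Call func() until it succeeds or `attempts` tries have failed.

    Sleeps `delay` seconds after the first failure and multiplies the wait
    by `backoff` after each further failure. Re-raises the last exception.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except exceptions:
            if attempt == attempts:
                raise  # out of attempts: surface the original error
            time.sleep(delay)
            delay *= backoff


# Usage with a stand-in lookup that fails twice, then succeeds.
calls = {"n": 0}


def flaky_lookup() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise LookupError("element not found yet")
    return "found"


print(retry(flaky_lookup, attempts=3, delay=0.1))  # found
```

Keeping the delay short and the number of attempts small limits how much retries slow the scraper down and how much extra load they put on the site.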

Handling Dynamic Content

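Dynamic content can be difficult to scrape because it is added or changed by JavaScript after the page loads, often in response to user actions such as scrolling or clicking. Beautiful Soup only parses the HTML it is given and does not run JavaScript, so in such cases it’s important to use a browser automation tool like Selenium to load and interact with the page before extracting the data.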

Logging and Debugging

Logging and debugging can help you pinpoint the exact location of the error in your code. By understanding where the ‘Cannot Find Element’ error is occurring, you’ll be able to better troubleshoot and resolve it.
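A sketch of logging enough context to debug a missing element: the URL, the selector, and a copy of the HTML the scraper actually saw, so you can open it and compare it with what the browser shows. It uses the standard `logging` module plus Beautiful Soup 4; the function name `find_or_log`, the snapshot file name, and the example URL are hypothetical.

```python
import logging
import tempfile
from pathlib import Path
from typing import Optional

from bs4 import BeautifulSoup
from bs4.element import Tag

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
logger = logging.getLogger("scraper")


def find_or_log(html: str, css_selector: str, url: str, snapshot_dir: Path) -> Optional[Tag]:
    """Return the first match for css_selector, or log details and save the HTML."""
    element = BeautifulSoup(html, "html.parser").select_one(css_selector)
    if element is None:
        snapshot_dir.mkdir(parents=True, exist_ok=True)
        # Fixed file name for simplicity: each miss overwrites the previous snapshot
        snapshot = snapshot_dir / "missing_element.html"
        snapshot.write_text(html, encoding="utf-8")
        logger.warning("No match for %r on %s; saved HTML to %s", css_selector, url, snapshot)
    return element


snapshots = Path(tempfile.mkdtemp())
result = find_or_log("<p>No price here</p>", "span.price", "https://example.com/item", snapshots)
print(result)  # None (a WARNING naming the selector and snapshot path goes to stderr)
```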

Comparing Data Sources

If you have access to multiple data sources, it may be worth comparing the data from different sources to see if there are any discrepancies. This can help identify any errors in your scraping code.
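A small sketch of such a comparison, assuming both sources have already been loaded into dictionaries keyed by the same identifier (for example, product IDs). The function name and sample data are hypothetical. A `None` on the scraped side can point to a selector that stopped matching.

```python
from typing import Any, Dict, Tuple


def find_discrepancies(
    scraped: Dict[str, Any], reference: Dict[str, Any]
) -> Dict[str, Tuple[Any, Any]]:
    """Map each key whose values differ (or are missing) to (scraped, reference)."""
    keys = scraped.keys() | reference.keys()
    return {
        key: (scraped.get(key), reference.get(key))
        for key in sorted(keys)
        if scraped.get(key) != reference.get(key)
    }


scraped = {"A1": 9.99, "A2": None, "A3": 4.50}    # A2: the selector found nothing
reference = {"A1": 9.99, "A2": 12.00, "A3": 4.75}  # e.g. from an official API or export
print(find_discrepancies(scraped, reference))
# {'A2': (None, 12.0), 'A3': (4.5, 4.75)}
```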

Opinions and Insights

Web scraping is a valuable skill for anyone who wants to collect data from the web. While encountering errors like ‘Cannot Find Element’ can be frustrating, there are many tools and techniques available to help you overcome these issues and efficiently extract data from websites.

Conclusion

By following the tips outlined in this article, you’ll be able to troubleshoot and resolve ‘Cannot Find Element’ errors in your Python web scraping projects. Remember to check the HTML structure, use powerful Python tools like Selenium and Beautiful Soup, retry requests, and handle dynamic content. With persistence and effort, you’ll soon become an expert web scraper.

| Method | Pros | Cons |
|---|---|---|
| Checking HTML Structure | Simple and quick | May not work if HTML structure changes frequently |
| Using Selenium | Allows for automation and interaction with page elements | Requires installation and familiarity with Selenium syntax |
| Using Beautiful Soup | Provides easy access to elements on the page | Does not run JavaScript, so it cannot see dynamically rendered content |
| Retrying Requests | Can help resolve intermittent errors | May slow down scraping process |
| Handling Dynamic Content | Makes it possible to scrape dynamic content | Requires additional knowledge of web development and Selenium |

Thank you for taking the time to read our article on Python Tips for Troubleshooting Web Scraping. We hope that by now, you have a better understanding of how to approach fixing ‘Cannot Find Element’ errors that arise during scraping.

Remember that most ‘Cannot Find Element’ errors occur when the scraper looks for an element that isn’t yet present or visible on the page. This is usually caused by an AJAX call or some other form of dynamic rendering. Our recommended solution is to use explicit waits, which make the scraper wait for the required elements to load before it continues.

We also recommend taking advantage of your browser’s developer tools, such as the Console and the Elements panel (Inspect Element). These tools help you identify the elements to scrape and provide useful debugging information; for example, you can test a CSS selector in the Console with document.querySelectorAll() before adding it to your script.

We hope that our tips have been helpful in resolving ‘Cannot Find Element’ errors when web scraping. Remember, refine your code often, test frequently, and stay patient when dealing with these issues. Happy scraping!

When it comes to web scraping with Python, ‘Cannot Find Element’ errors for elements you can plainly see in the browser can be frustrating. Here are some questions people commonly ask about troubleshooting these errors, along with their answers:

What does ‘Cannot Find Element’ mean in web scraping?

‘Cannot Find Element’ means the Python script could not locate a specific HTML element on a web page. In Selenium, it surfaces as a ‘NoSuchElementException’; in Beautiful Soup, find() or select_one() simply returns None, which often leads to an AttributeError later in the script. Common causes include an incorrect XPath or CSS selector, a page that loads slowly, and dynamic content that has not finished loading.

How can I resolve ‘Cannot Find Element’ errors in web scraping?

To resolve this error, you can try the following steps:

- Check whether the element exists in the page’s original source (View Page Source), not just in the live page shown by your browser’s developer tools.

- Verify that the XPath or CSS selector used in the Python script is correct.

- Wait for dynamic content to finish loading before attempting to scrape it; an explicit wait for the specific element is usually more reliable than a fixed delay.

- If the website uses AJAX requests to fetch data, use a tool like Selenium to automate the browser and wait for the element that the AJAX response populates before proceeding with the scrape.

Is there a way to handle ‘Cannot Find Element’ errors automatically in Python?

Yes. In Selenium, you can wrap find_element() in a try-except block that catches the ‘NoSuchElementException’ raised when an element cannot be found. You can then handle the error by skipping the element or by retrying after a short delay.

Are there any best practices to avoid ‘Cannot Find Element’ errors in web scraping?

Yes, here are some best practices:

- Avoid using absolute XPath expressions as they can break easily when the HTML structure changes.

- Prefer short selectors anchored to stable attributes such as ids, class names, or data attributes; CSS selectors are often shorter and easier to read than the equivalent XPath.

- Check if the website has an API that you can use instead of scraping the HTML directly.

- Be respectful of the website’s terms of service and do not scrape excessively or in a manner that could cause harm.
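The brittleness of absolute paths is easy to demonstrate. An absolute XPath such as `/html/body/div[2]/span` needs lxml or a browser to evaluate, so this self-contained sketch uses a positional CSS path as a stand-in for it and compares it with a selector anchored to a class name. It assumes Beautiful Soup 4; the HTML is hypothetical.

```python
from bs4 import BeautifulSoup

# Positional path: the CSS counterpart of an absolute XPath like /html/body/div[2]/span
POSITIONAL = "body > div:nth-of-type(2) > span"
# Anchored to a meaningful attribute instead of the element's position
ANCHORED = "span.price"

before = """<html><body>
  <div>Header</div>
  <div><span class="price">9.99</span></div>
</body></html>"""

# The site adds a cookie banner at the top, shifting every div down by one
after = """<html><body>
  <div class="cookie-banner">We use cookies</div>
  <div>Header</div>
  <div><span class="price">9.99</span></div>
</body></html>"""

for label, html in (("before", before), ("after", after)):
    soup = BeautifulSoup(html, "html.parser")
    positional = soup.select_one(POSITIONAL)
    anchored = soup.select_one(ANCHORED)
    print(label, positional and positional.get_text(), anchored and anchored.get_text())
# before 9.99 9.99
# after None 9.99
```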