If you are a Python developer looking for a way to export a table dataframe in PySpark to CSV, look no further! Exporting a table is a common task when working with large datasets, and it can be a bit tricky. Fortunately, PySpark has a built-in way to do it.

In this article, we share tips on exporting a table dataframe in PySpark to CSV. There is no need to export each column by hand: PySpark can write the entire dataframe to CSV with just a few lines of code.

This not only saves time but also keeps the exported data accurate and consistent. Imagine copying and pasting thousands of rows and still worrying that the formatting will break. A nightmare, right? Our guide walks you through a simpler process, so sit back and follow along.

In short, if you are looking for a hassle-free way to export a table dataframe in PySpark to CSV, this article is for you. The tips below are practical and apply to everyday PySpark work. Read on for our step-by-step guide and learn how to export your table dataframe with ease.

“How To Export A Table Dataframe In Pyspark To Csv?” ~ bbaz

Introduction

Exporting a table dataframe in PySpark to CSV can be a demanding task, especially when dealing with large datasets. However, there is an easy and practical way to do it, which we will explore in this article. We will provide you with tips on how to export a table dataframe in PySpark to CSV, saving you time and ensuring accuracy and uniformity in your exported data.

The Importance of Exporting Table Dataframes in PySpark

Exporting table dataframes from PySpark matters for several reasons. Most importantly, the exported data can be analyzed with other tools or reused in other workflows. For example, data analysts can use the exported data to create visualizations or reports, while developers can use it to build machine learning models.

The Challenge of Exporting Table Dataframes in PySpark

Exporting table dataframes in PySpark can be challenging, especially when the dataset is large. Manually exporting each column one by one can take a lot of time and effort. Moreover, it can be prone to errors, inconsistency, and messy formatting. Therefore, it’s crucial to have an efficient and reliable method for exporting table dataframes in PySpark to CSV.

The Solution: How to Export Table Dataframes in PySpark to CSV

The good news is that PySpark provides an easy and straightforward way to export table dataframes to CSV. You can achieve this with just a few lines of code, without the need for any external libraries or complex configurations. In the following paragraphs, we will describe the step-by-step process of exporting table dataframes in PySpark to CSV.

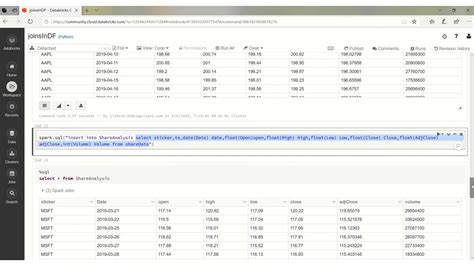

Step 1: Load Data into PySpark

The first step is to load the data you want to export into PySpark. You can do this by creating a SparkSession object and using it to read the data from a file, a database, or any other source. For example, the following code loads a CSV file into a PySpark dataframe:

Code:

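```python
from pyspark.sql import SparkSession

# Create (or reuse) a SparkSession, the entry point for DataFrame operations
spark = SparkSession.builder.appName("ExportTable").getOrCreate()

# Read a CSV file, using the first row as column names and inferring column types
df = spark.read.csv("data.csv", header=True, inferSchema=True)
```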

Step 2: Export Dataframe to CSV

The second step is to export the dataframe to CSV format. You can do this by calling df.write.csv() with an output path. Note that Spark treats this path as a directory: it writes one part file per partition into it (typically along with a _SUCCESS marker file), rather than a single CSV file. You can also set options for the export, such as the delimiter (sep), whether to write a header row (header), or the compression codec (compression). For example, the following code exports the dataframe as CSV:

Code:

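```python
# Write the DataFrame as CSV with a header row and a comma delimiter,
# replacing any existing output at this path
df.write.csv("output.csv", header=True, sep=",", mode="overwrite")
```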
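Because Spark writes output.csv as a directory of part files, each starting with its own header row when header=True, a tool that expects one file may need the parts combined. One option is to call df.coalesce(1) before writing, which produces a directory with a single part file but funnels all rows through one task. Another is to merge the part files afterwards. The sketch below does that with only the Python standard library; it assumes uncompressed output and Spark's default "part-" file prefix, and merge_part_files is a hypothetical helper, not a PySpark API.

```python
import glob
import os
import shutil


def merge_part_files(output_dir: str, merged_path: str) -> int:
    """Concatenate Spark CSV part files into one file with a single header.

    Hypothetical helper. Assumes the directory was written with header=True,
    without compression, and with Spark's default "part-" file prefix.
    Returns the number of non-empty part files merged.
    """
    # Marker files such as _SUCCESS and hidden .crc checksums are not matched.
    part_files = sorted(glob.glob(os.path.join(output_dir, "part-*")))
    header = None
    merged = 0
    with open(merged_path, "wb") as out:
        for path in part_files:
            with open(path, "rb") as part:
                first_line = part.readline()  # the header row of this part file
                if not first_line:
                    continue  # skip empty part files
                if header is None:
                    header = first_line
                    out.write(header)  # keep only the first header
                elif first_line != header:
                    raise ValueError(f"Header mismatch in {path}")
                # Copy the remaining data rows byte for byte, so Spark's
                # quoting and escaping are preserved unchanged.
                shutil.copyfileobj(part, out)
                merged += 1
    return merged
```

For example, merge_part_files("output.csv", "merged.csv") would combine the output of the export step into merged.csv. Sorting by file name keeps the part files in partition order, since Spark zero-pads the partition number in each name.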

Step 3: Verify the Exported Data

The last step is to verify that the exported data is correct and consistent with the original dataframe. You can do this by reading the CSV file back into a PySpark dataframe and comparing it with the original one. Additionally, you can use tools such as Excel, Python, or R to analyze, visualize, or manipulate the exported data. For example, the following code reads the exported CSV file back into a PySpark dataframe:

Code:

```python
# Read the exported directory back; Spark reads every part file inside it
df_export = spark.read.csv("output.csv", header=True, inferSchema=True)
```
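Reading the export back with Spark is the most thorough check. For a quick sanity check outside Spark, for instance on a machine the files were copied to, the sketch below reads the header and counts the data rows across the part files so you can compare them with df.columns and df.count(). It is a minimal sketch using Python's csv module, assuming header=True, no compression, and Spark's default CSV quote (") and escape (\) characters; summarize_csv_output is a hypothetical helper.

```python
import csv
import glob
import os


def summarize_csv_output(output_dir: str):
    """Return (header, data_row_count) for a Spark CSV output directory.

    Hypothetical helper. Assumes header=True, no compression, and Spark's
    default quote (") and escape (\\) characters.
    """
    header = None
    row_count = 0
    for path in sorted(glob.glob(os.path.join(output_dir, "part-*"))):
        with open(path, newline="", encoding="utf-8") as f:
            # Match Spark's defaults: quotes inside quoted values are
            # escaped with a backslash rather than doubled.
            reader = csv.reader(f, escapechar="\\", doublequote=False)
            part_header = next(reader, None)
            if part_header is None:
                continue  # skip empty part files
            if header is None:
                header = part_header
            elif part_header != header:
                raise ValueError(f"Header mismatch in {path}")
            # A quoted value containing newlines still counts as one row.
            row_count += sum(1 for _ in reader)
    return header, row_count
```

Counting with the csv module rather than counting lines matters when values contain embedded newlines, which Spark writes inside quotes.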

Comparison Between Manual and PySpark Exporting Methods

To illustrate the benefits of using PySpark for exporting table dataframes to CSV, we will compare it with the manual method. The manual method involves copying and pasting each column from a table into a CSV file, which can be prone to errors, inefficiency, and inconsistency. On the other hand, using PySpark provides several advantages, such as automation, scalability, and reliability. PySpark allows you to export entire table dataframes with just a few lines of code, without worrying about formatting, manual errors, or memory constraints. Therefore, PySpark is a more efficient and practical method for exporting table dataframes to CSV.

Conclusion

Exporting table dataframes in PySpark to CSV is an essential task for many data analysts and developers. In this article, we have shared tips on how to export table dataframes in PySpark to CSV using a simple and reliable method. We have also explained the importance and challenges of exporting table dataframes in PySpark, and the advantages of PySpark over the manual method. By following our step-by-step guide, you can save time, improve accuracy, and work more productively with PySpark. Don’t hesitate to try it out and see the difference for yourself!

Thank you for visiting our blog and reading our tips on how to export a table dataframe in PySpark to CSV. We hope that this article has been informative and helpful for your programming needs. As you may have discovered, PySpark is a powerful tool for processing large amounts of data in a distributed setting.

Exporting a table dataframe to CSV can be a useful step in the data analysis process, as it allows for further manipulation and visualization in tools such as Excel or R. With the PySpark code we provided, you will be able to efficiently and easily export your data without any complications.

We encourage you to continue exploring the world of PySpark and other data processing tools. The more you learn, the more valuable you will become in the job market and in your programming endeavors. Thank you again for visiting our blog and we hope to see you soon!

Here are some common questions people ask about exporting a table dataframe in PySpark to CSV:

- What is PySpark?

PySpark is the Python API for Apache Spark, a powerful open-source framework for distributed computing. PySpark allows you to write Spark applications using Python APIs and run them on Hadoop, Kubernetes, and other distributed computing systems.

- What is a table dataframe?

A table dataframe is a distributed collection of data organized into named columns. It is similar to a table in a relational database or a data frame in R or Python. In PySpark, you can create a dataframe from various data sources, including CSV files, JSON files, or SQL databases.

- How do I export a table dataframe in PySpark to CSV?

You can use the write.csv() method to export a table dataframe in PySpark to CSV format. Here’s an example code snippet:

The following snippet imports PySpark, creates a SparkSession, loads a CSV file as a table dataframe, exports it to CSV, and stops the session:

```python
# Import PySpark libraries
from pyspark.sql import SparkSession

# Create a SparkSession
spark = SparkSession.builder.appName("ExportTableDataframeToCSV").getOrCreate()

# Import CSV file as a table dataframe, treating the first row as the header
df = spark.read.format("csv").option("header", "true").load("path/to/csv/file")

# Export table dataframe to CSV (Spark writes a directory of part files at this path)
df.write.csv("path/to/output/csv/file", header=True, mode="overwrite")

# Stop the SparkSession
spark.stop()
```

By using these tips, you can easily export a table dataframe in PySpark to CSV format.