Are you struggling to replace or modify gradients in TensorFlow? This tutorial, Python Tips: Replace or Modify Gradients in TensorFlow, walks through the essentials.

Whether you’re a beginner or an experienced user, it covers how TensorFlow computes gradients and the most common ways to modify them, such as gradient clipping and gradient normalization.

Each topic comes with a short explanation so you can apply it to your own models. If you want more control over how your TensorFlow models train, read on.

“Tensorflow: How To Replace Or Modify Gradient?” ~ bbaz

Introduction

TensorFlow is a popular open-source machine learning library that is widely used to build and train deep learning models. Training such a model relies on gradients. These are the derivatives of a loss function with respect to the model’s parameters, and an optimizer uses them to update those parameters. In this tutorial, we discuss how to replace or modify those gradients in TensorFlow.

Why Modify or Replace Gradients?

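In deep learning, optimizing the parameters with gradient descent is a crucial step. The basic idea of gradient descent is simple, but the gradients it relies on can be modified or replaced in several ways. Clipping or rescaling gradients can keep training stable when gradients become very large. Replacing the gradient of an operation lets you train through operations whose true gradient is zero or undefined, such as rounding. Depending on the model, these changes can lead to faster convergence and improved performance.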

Understanding Gradients in TensorFlow

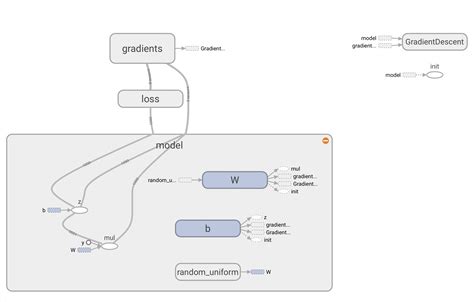

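Before we dive into modifying gradients in TensorFlow, it’s important to understand how TensorFlow calculates them. TensorFlow records the operations that produce a result, which together form a computational graph, and then differentiates backward through that record. In TensorFlow 2, the recording is done either in eager mode by `tf.GradientTape` or as a traced graph inside a `tf.function`.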

Computational Graphs and Automatic Differentiation

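Computational graphs describe a computation as operations (nodes) connected by the tensors that flow between them. In TensorFlow, they represent the structure of a model and allow gradients to be calculated automatically. This process is called automatic differentiation. TensorFlow applies the chain rule backward from the output: every recorded operation has a registered gradient function, and TensorFlow combines these functions to obtain the gradient of the output with respect to each input.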
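Here is a minimal sketch of automatic differentiation with `tf.GradientTape`, assuming TensorFlow 2.x with eager execution (the default). The function `y = x**2 + 2x` is an arbitrary illustration; `tape.gradient` differentiates it backward through the recorded operations.

```python
import tensorflow as tf

# A trainable scalar; GradientTape watches tf.Variable objects automatically.
x = tf.Variable(3.0)

with tf.GradientTape() as tape:
    # Operations executed inside this context are recorded on the tape.
    y = x ** 2 + 2.0 * x

# Reverse-mode differentiation over the recorded ops: dy/dx = 2x + 2.
dy_dx = tape.gradient(y, x)
print(dy_dx.numpy())  # 8.0
```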

Modifying Gradients in TensorFlow

There are several ways to modify gradients in TensorFlow. One of the most common is gradient clipping, which limits the magnitude of the gradients. Another is gradient normalization, which rescales the gradients to a fixed norm. Both are described below.

Gradient Clipping

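Gradient clipping is a technique used to prevent the gradients from becoming too large. Large gradients can come from outliers in the data. They also occur in deep or recurrent models, where gradients can grow as they are propagated through many layers or time steps. There are two main forms of clipping. Value clipping caps each gradient element to a fixed interval, and norm clipping rescales a gradient whose norm exceeds a threshold.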
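The two forms of clipping can be compared on a single tensor. This is a minimal sketch assuming TensorFlow 2.x; `grad` is a made-up gradient and the threshold of 1.0 is arbitrary. `tf.clip_by_value` bounds each element independently, while `tf.clip_by_norm` rescales the whole tensor.

```python
import tensorflow as tf

# A hypothetical gradient tensor with two large components.
grad = tf.constant([0.5, -4.0, 3.0])

# Value clipping: every element is forced into [-1, 1] independently.
value_clipped = tf.clip_by_value(grad, clip_value_min=-1.0, clip_value_max=1.0)

# Norm clipping: the L2 norm here is about 5.02, which exceeds 1.0, so the
# whole tensor is rescaled to norm 1.0 and its direction is preserved.
norm_clipped = tf.clip_by_norm(grad, clip_norm=1.0)

print(value_clipped.numpy().tolist())  # [0.5, -1.0, 1.0]
print(tf.norm(norm_clipped).numpy())   # approximately 1.0
```

Value clipping changed the direction of the gradient: the last two elements now have equal magnitude. Norm clipping kept the direction and only shortened the vector.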

Comparison Table: Gradient Clipping Techniques

| Method | Description | Advantages | Disadvantages |
|---|---|---|---|
| Global Norm Clipping | Computes one L2 norm over the gradients of all variables. If it exceeds the threshold, every gradient is rescaled by the same factor. | Simple to implement; preserves the direction of the overall update. | A single very large gradient shrinks the updates of all other variables. |
| Per-Variable Norm Clipping | Clips the L2 norm of each variable’s gradient separately. | Finer control: each variable’s update is bounded independently. | Changes the relative size of the variables’ updates, so the overall update no longer points along the true gradient. |
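The difference between the two rows of the table shows up when gradients of different sizes are clipped together. This is a minimal sketch assuming TensorFlow 2.x. The two gradient tensors are hypothetical stand-ins for, say, a weight gradient and a bias gradient.

```python
import tensorflow as tf

# Two hypothetical gradients with L2 norms 5.0 and 1.0.
grads = [tf.constant([3.0, 4.0]), tf.constant([0.0, 0.6, 0.8])]

# Global norm clipping: global norm = sqrt(5^2 + 1^2) ≈ 5.10, so both
# gradients are multiplied by the same factor 1.0 / 5.10.
global_clipped, global_norm = tf.clip_by_global_norm(grads, clip_norm=1.0)

# Per-variable clipping: each gradient is rescaled on its own.
per_var_clipped = [tf.clip_by_norm(g, clip_norm=1.0) for g in grads]

print([float(tf.norm(g)) for g in global_clipped])   # roughly [0.98, 0.20]
print([float(tf.norm(g)) for g in per_var_clipped])  # roughly [1.0, 1.0]
```

Global clipping kept the 5:1 ratio between the two gradients, while per-variable clipping made them equal in size. In recent TensorFlow versions, Keras optimizers also accept `global_clipnorm` and `clipnorm` arguments. These apply global and per-variable norm clipping, respectively, inside the optimizer.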

Gradient Normalization

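Gradient normalization is a technique that rescales the gradients to a fixed norm, typically by dividing each gradient by its norm. The size of each update then no longer depends on the raw gradient magnitude. Unlike clipping, normalization scales small gradients up as well as large ones down. This can limit the effect of vanishing or exploding gradients, which can occur in deep neural networks, on the size of each update. In some settings it can also improve the convergence speed of the model.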
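Here is a minimal sketch of per-tensor L2 gradient normalization, assuming TensorFlow 2.x. `normalize_gradients` is a hypothetical helper, not a TensorFlow API. The `epsilon` term is an added assumption that avoids division by zero for an all-zero gradient.

```python
import tensorflow as tf

def normalize_gradients(grads, epsilon=1e-8):
    """Hypothetical helper: rescale each gradient to (roughly) unit L2 norm."""
    # epsilon keeps the division finite when a gradient is all zeros.
    return [g / (tf.norm(g) + epsilon) for g in grads]

# One large and one tiny hypothetical gradient.
grads = [tf.constant([3.0, 4.0]), tf.constant([0.001, 0.0])]
normalized = normalize_gradients(grads)
print([float(tf.norm(g)) for g in normalized])  # both approximately 1.0
```

The tiny second gradient is scaled up to unit norm, which clipping would never do.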

Comparison Table: Gradient Normalization Techniques

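| Method | Description | Advantages | Disadvantages |
|---|---|---|---|
| L2 Gradient Normalization | Divides each gradient by its L2 norm (the square root of the sum of squared entries). | Simple to implement; the update size is independent of the raw gradient magnitude. | Discards magnitude information, so updates do not shrink near a minimum unless the learning rate is decayed. |
| L1 Gradient Normalization | Divides each gradient by its L1 norm (the sum of absolute entries). | Simple to implement; the absolute values of the normalized entries sum to 1. | For large tensors the normalized entries become very small, so the learning rate may need retuning. |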
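The two normalizations in the table can be compared directly with `tf.norm`, which computes the L2 norm by default and the L1 norm with `ord=1`. This is a minimal sketch assuming TensorFlow 2.x; the tensors are illustrative.

```python
import tensorflow as tf

grad = tf.constant([3.0, -4.0])

# L2 normalization: divide by sqrt(3^2 + 4^2) = 5.0.
l2_normalized = grad / tf.norm(grad)         # approximately [0.6, -0.8]
# L1 normalization: divide by |3| + |-4| = 7.0.
l1_normalized = grad / tf.norm(grad, ord=1)  # approximately [0.4286, -0.5714]

# On a larger tensor of equal entries, L1-normalized values shrink faster.
big = tf.ones([100])
print(float((big / tf.norm(big))[0]))         # approximately 0.1
print(float((big / tf.norm(big, ord=1))[0]))  # approximately 0.01
```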

Conclusion

In this tutorial, we discussed how to modify and replace gradients in TensorFlow. Modifying gradients can make training more stable and, in some cases, speed up convergence of deep learning models. We also covered two common techniques, gradient clipping and gradient normalization, and compared their advantages and disadvantages using tables. With this knowledge, you can take more control over how your TensorFlow models train.

Thank you for reading this tutorial on how to replace or modify gradients in TensorFlow using Python. We hope you have gained practical tips that will help you optimize your TensorFlow models.

As we saw in this tutorial, replacing and modifying gradients is an important tool for optimizing and fine-tuning neural networks in TensorFlow. Used carefully, it can stabilize training and speed up convergence across a wide range of machine learning tasks.

We encourage you to experiment with these techniques in your own projects and to explore more advanced TensorFlow features. Keeping up with current best practices in machine learning will help you get the most out of them.

Frequently Asked Questions

When it comes to working with TensorFlow, it’s important to know how to replace or modify gradients. Here are some common questions people have about this topic:

- What is a gradient in TensorFlow?

- Why would I need to replace or modify gradients?

- How do I replace or modify gradients in TensorFlow?

- Can I replace gradients for specific variables only?

- Are there any pitfalls or limitations to watch out for?

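What is a gradient in TensorFlow?

A gradient is the vector of partial derivatives of a function with respect to its inputs. It points in the direction in which the function increases fastest. In TensorFlow, the gradients of a loss function with respect to the model’s variables give the direction and magnitude of change for each variable. The optimizer moves the variables against the gradient to reduce the loss.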
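The gradient and the basic gradient-descent update it drives can be written as follows. The loss $L$ depends on parameters $\theta = (\theta_1, \dots, \theta_n)$, and $\eta$ is the learning rate; these symbols are introduced here for illustration.

```latex
\nabla_\theta L(\theta) = \left( \frac{\partial L}{\partial \theta_1}, \dots, \frac{\partial L}{\partial \theta_n} \right),
\qquad
\theta \leftarrow \theta - \eta \, \nabla_\theta L(\theta)
```

Clipping and normalization both act on $\nabla_\theta L(\theta)$ before this update is applied.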

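Why would I need to replace or modify gradients?

There may be situations where you want to customize how gradients are computed or modify them to achieve better results. For example, you may want to clip gradients that grow too large or rescale them based on certain conditions. For plain gradient descent, rescaling has the same effect as adjusting the learning rate. You may also want to replace the gradient of an operation such as rounding, whose true gradient is zero almost everywhere, with a surrogate.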
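One way to replace a gradient is `tf.custom_gradient`. A function decorated with it returns both its output and a function that computes its gradient. The sketch below, assuming TensorFlow 2.x, uses it for a swapped-in technique, the straight-through estimator. The forward pass rounds, and the backward pass passes the upstream gradient through unchanged. The name `round_straight_through` is hypothetical.

```python
import tensorflow as tf

@tf.custom_gradient
def round_straight_through(x):
    """Hypothetical op: round forward, identity gradient backward."""
    def grad(upstream):
        # Replace the true gradient of rounding (zero almost everywhere)
        # with the identity, so d(output)/d(x) is treated as 1.
        return upstream
    return tf.round(x), grad

x = tf.constant([0.3, 1.7, -2.2])
with tf.GradientTape() as tape:
    tape.watch(x)  # constants are not watched automatically
    y = tf.reduce_sum(3.0 * round_straight_through(x))

dx = tape.gradient(y, x)
print(dx.numpy().tolist())  # [3.0, 3.0, 3.0]
```

The forward values are still rounded, but `x` now receives a usable gradient that plain rounding would not provide.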

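How do I replace or modify gradients in TensorFlow?

There are different ways to replace or modify gradients in TensorFlow, but one common approach is to use the GradientTape API. A `tf.GradientTape` records operations for automatic differentiation, and `tape.gradient` then computes the gradients on the fly. You can manipulate these gradients before applying them to the variables you want to update. To replace the gradient of a specific operation, you can wrap that operation in a function decorated with `tf.custom_gradient`.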
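Here is a minimal sketch of the record, compute, modify, apply loop, assuming TensorFlow 2.x. The toy data and the learning rate of 0.05 are illustrative assumptions, as is the choice of global norm clipping as the modification. The update is written by hand with `assign_sub` to keep the example self-contained.

```python
import tensorflow as tf

# Hypothetical toy problem: fit w * x + b to the line y = 2x + 1.
xs = tf.constant([0.0, 1.0, 2.0, 3.0])
ys = 2.0 * xs + 1.0
w = tf.Variable(0.0)
b = tf.Variable(0.0)
variables = [w, b]
learning_rate = 0.05

for step in range(500):
    # 1. Record the forward pass.
    with tf.GradientTape() as tape:
        loss = tf.reduce_mean(tf.square(w * xs + b - ys))
    # 2. Compute the raw gradients.
    grads = tape.gradient(loss, variables)
    # 3. Modify them before use; here, clip their combined L2 norm to 1.0.
    grads, _ = tf.clip_by_global_norm(grads, clip_norm=1.0)
    # 4. Apply a plain gradient-descent update with the modified gradients.
    for var, grad in zip(variables, grads):
        var.assign_sub(learning_rate * grad)

print(w.numpy(), b.numpy())  # close to 2.0 and 1.0
```

With a Keras optimizer, step 4 would instead be `optimizer.apply_gradients(zip(grads, variables))`.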

Can I replace gradients for specific variables only?

Yes. You can pass only the variables you want to `tape.gradient` and apply the resulting gradients to just those variables. This can be useful if you have a large model with many parameters and you only want to update a subset of them.
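This minimal sketch, assuming TensorFlow 2.x, shows two ways to restrict gradients to a subset of variables. You can ask `tape.gradient` only for the variables you want. Alternatively, you can create the tape with `watch_accessed_variables=False` and call `tape.watch` on the chosen ones. The variable names and values are illustrative.

```python
import tensorflow as tf

w = tf.Variable(1.0)  # the variable we want to update
b = tf.Variable(5.0)  # used in the loss but kept frozen in this sketch
x = tf.constant(2.0)

with tf.GradientTape() as tape:
    loss = tf.square(w * x + b)  # (1*2 + 5)^2 = 49

# Ask only for dL/dw = 2 * (w*x + b) * x = 28; b gets no gradient or update.
dw = tape.gradient(loss, w)
w.assign_sub(0.01 * dw)  # w becomes about 0.72; b stays 5.0

# Alternative: stop the tape from watching every variable it touches,
# and watch only the ones you care about.
with tf.GradientTape(watch_accessed_variables=False) as tape2:
    tape2.watch(w)
    loss2 = tf.square(w * x + b)
db = tape2.gradient(loss2, b)  # None, because b was never watched

print(dw.numpy(), w.numpy(), db)
```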

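Are there any pitfalls or limitations to watch out for?

Yes. Modifying gradients can be tricky and may result in unstable or slow convergence if not done properly. For example, `tape.gradient` returns `None` for a variable that does not affect the loss, which breaks per-tensor operations such as `tf.clip_by_norm`. Also, a non-persistent tape can compute gradients only once. It’s important to understand the math behind each technique and to experiment carefully.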
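Here is a minimal sketch, assuming TensorFlow 2.x, of the `None` pitfall and one way to guard against it. The variable names are illustrative. Filling missing gradients with zeros is one choice among several.

```python
import tensorflow as tf

used = tf.Variable(2.0)
unused = tf.Variable(3.0)  # never contributes to the loss

with tf.GradientTape() as tape:
    loss = used ** 2

grads = tape.gradient(loss, [used, unused])
print(grads[1])  # None: unused is not connected to the loss

# Passing None to tf.clip_by_norm would raise an error,
# so substitute zeros for missing gradients before clipping.
safe_grads = [
    tf.clip_by_norm(g, 1.0) if g is not None else tf.zeros_like(v)
    for g, v in zip(grads, [used, unused])
]
```

Alternatively, you can pass `unconnected_gradients=tf.UnconnectedGradients.ZERO` to `tape.gradient`, which returns zeros instead of `None` for unconnected sources. If you need to call `tape.gradient` more than once, create the tape with `tf.GradientTape(persistent=True)`.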