If you’re into web scraping, you’ve probably heard of Scrapy – a powerful and widely-used web crawling framework. Scrapy can make your life as a data analyst much easier by letting you gather the data you need in large quantities.

However, working with Scrapy is not always easy, especially when you encounter obstacles such as handling cookies or maintaining state between requests. This article, Scraping with Scrapy: Passing Items Between Multiple Requests, offers tips and strategies for navigating these challenges.

In this article, you’ll learn how to pass items between requests using Scrapy. You’ll gain insights into how to maintain session ID, cookie, and authentication information during the scraping process. This will enable you to create more complex, multi-step scrapers that can handle a variety of website interactions.

Whether you’re an experienced Scrapy user or just starting out, there’s something for everyone in this article. By following the techniques outlined, you’ll be able to overcome tricky tasks in your web scraping projects, and enjoy more efficient and productive data analysis. Don’t miss out on this must-read opportunity to improve your Scrapy skills!

“How Can I Use Multiple Requests And Pass Items In Between Them In Scrapy Python” ~ bbaz

Scraping with Scrapy: Passing Items Between Multiple Requests

Scraping is an integral part of web development, especially for data gathering and analysis. Many scraping tools and frameworks are available, but one that stands out is Scrapy, an open-source, collaborative web crawling framework designed for fast and efficient data extraction.

One significant feature of Scrapy is its ability to pass items between multiple requests. In this article, we’ll explore this feature and compare it with other scraping tools.

Overview of Scrapy

Scrapy is a Python-based web scraping framework for extracting data from websites in a structured format. It’s widely used by developers because of its simplicity, scalability, and speed.

Its architecture is built around the spider, the main unit of the framework. Spiders navigate a website and extract data using CSS or XPath selectors. Item pipelines, middlewares, and feed exporters are separate components that you enable in the project settings; they process the requests, responses, and items flowing through a crawl.

Passing Items Between Multiple Requests

One of the most compelling features of Scrapy is its ability to pass items between multiple requests. This means that you can extract data from one page and carry it into the scraping of another page by attaching the partially filled item to the follow-up request. No extra storage or setup is needed, which makes Scrapy well suited to complex data extraction tasks and large-scale scraping projects.

How it Works

Scrapy does not keep a shared store of items for you. Instead, the data travels with the request. When a spider extracts data from a page, it creates an item object and attaches it to the next request, either in the request’s `meta` dictionary or, in Scrapy 1.7 and later, in `cb_kwargs`.

When the response for that request arrives, the callback reads the item from `response.meta` (or receives it as a keyword argument), adds the fields found on the new page, and either passes the item on to a further request or yields the finished item. This process continues until all the data for the item has been collected.

Benefits of Passing Items Between Multiple Requests

There are several benefits of using Scrapy’s item passing feature. Some of these benefits include:

- Reduced code complexity
- Faster data extraction
- Better data reliability and accuracy
- Ability to handle complex scraping tasks

Comparison with Other Tools

While there are numerous web scraping tools available, not all of them offer the ability to pass items between multiple requests. Let’s compare Scrapy with other popular scraping tools in terms of this feature.

| Tool | Passing Items Between Multiple Requests |
|---|---|
| BeautifulSoup | No |
| Requests-HTML | No |
| Selenium | Yes |
| Scrapy | Yes |

As we can see, Scrapy and Selenium are the only two tools in this comparison that offer this feature. BeautifulSoup is an HTML parser and does not issue requests on its own. While Selenium is a great tool for browser automation and testing, Scrapy is better suited for large-scale scraping projects and complex data extraction tasks.

Opinions on Scrapy

Scrapy is an excellent web scraping tool for developers looking to extract meaningful and structured data from websites. Its ability to pass items between multiple requests is a game-changer and offers numerous benefits.

Apart from its features, Scrapy also has an active community, robust documentation, and great support for Python. Overall, Scrapy is a reliable, efficient, and scalable web scraping framework that’s worth exploring.

Conclusion

Web scraping is an invaluable skill for data gathering and analysis. Scrapy, with its ability to pass items between multiple requests, offers developers a new level of flexibility and speed when it comes to scraping. It’s a great tool for large-scale projects and complex scraping tasks.

While there are other scraping tools available, not all of them offer this feature. Therefore, if you’re looking for a reliable web scraping framework that can pass items between multiple requests, Scrapy should be your go-to tool.

Thank you for taking the time to read our blog post on scraping with Scrapy. We hope that you found the information helpful in your data scraping endeavors. In this post, we explored the concept of passing items between multiple requests, which can be a tricky task to accomplish when setting up a web scraper.

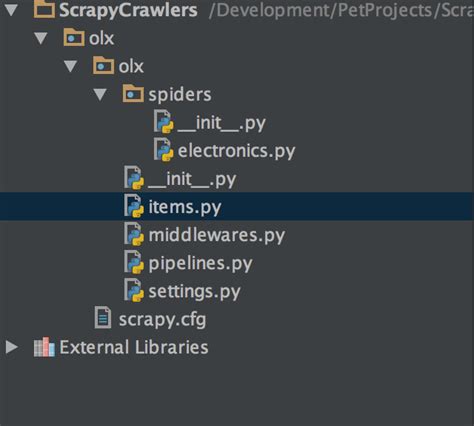

We covered how Scrapy spiders extract data with selectors, how an item is passed from one request to the next, and how Scrapy compares with other scraping tools on this point.

While it can take some time to become proficient in web scraping with Scrapy, we believe that the rewards are well worth the effort. With its powerful features and tools, Scrapy can help you scrape data from the web quickly and easily, allowing you to automate tasks that would otherwise require significant manual effort.

Once again, thank you for visiting our blog, and we wish you all the best in your data scraping adventures. Keep experimenting and exploring the possibilities of Scrapy, and feel free to share your experiences and insights with us and the broader community. If you have any questions or comments about this post, please don’t hesitate to get in touch with us – we’re here to help!

When it comes to web scraping, passing items between multiple requests can be a tricky process. Here are some common questions people ask about scraping with Scrapy:

- How do I pass items between multiple requests in Scrapy?

  In Scrapy, you can use the `meta` attribute to pass items between multiple requests. You simply add the item to the `meta` attribute of the request and then retrieve it from `response.meta` in the callback function of the next request.

- Can I pass multiple items between requests?

  Yes, you can pass multiple items between requests by adding them to the `meta` dictionary under separate keys. You can then retrieve each item separately in the callback function of the next request.

- What if I need to modify the item before passing it to the next request?

  You can modify the item in the callback function of the current request before passing it to the next request. You can also use Scrapy’s built-in item pipeline to modify the item after it has been scraped and before it is stored.

- Is it possible to pass items between requests asynchronously?

  Yes. Scrapy processes requests asynchronously by default, and each item travels with its own request, so many requests can be in flight at once without their items getting mixed up. This can improve the performance of your scraper by allowing multiple requests to be processed simultaneously.