Cross-validation is a technique for estimating how well a predictive model will perform on data it has not seen. Instead of relying on a single train/test split, it evaluates the model on several different splits of the same data. The most common variant is k-fold cross-validation, where the data is divided into k groups (folds), and each fold is used once for testing while the remaining folds are used for training.

However, before we can start with cross-validation, we need to decide how to split our dataset into training and testing sets. As simple as it may seem, this step has a significant impact on how trustworthy our performance estimate is. Therefore, it is essential to understand how to split our dataset for cross-validation correctly.

In this step-by-step guide, we will walk you through the process of splitting your dataset for cross-validation. We will cover everything from the reasons why we need to split datasets to the different methods of doing so. Whether you are a beginner or an advanced user in machine learning, this guide will provide the right insights to help you get started with cross-validation.

So, if you want to learn more about splitting datasets for cross-validation, be sure to read this guide to the end. By the time you finish reading, you will have a better understanding of how to split your data efficiently and accurately. Let’s get started!

“How To Split/Partition A Dataset Into Training And Test Datasets For, E.G., Cross Validation?” ~ bbaz

Introduction

Training machine learning models usually involves splitting the dataset into training, validation, and testing sets so that overfitting can be detected rather than hidden. Cross-validation is a widely used evaluation method that splits the data several times and averages the results to get a more stable estimate of the model’s quality. This article is a step-by-step guide on how to split your dataset for cross-validation in Python.

Why Split Data?

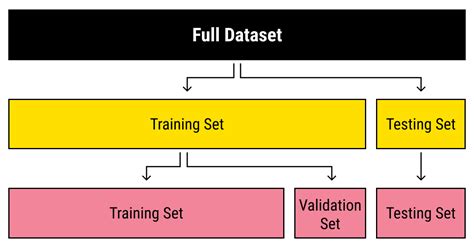

Splitting the data into training, validation, and testing datasets helps us build models that generalize instead of memorizing. We use the training dataset to fit the model’s parameters, the validation dataset to tune hyperparameters and check for overfitting, and the testing dataset, which is touched only once at the end, to evaluate the final model’s performance.
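A three-way split can be sketched with two calls to scikit-learn’s `train_test_split`. This is a minimal illustration, not a prescribed recipe: the synthetic data and the 60/20/20 proportions are assumptions chosen for the example.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic data for illustration only: 100 samples, 4 features, binary labels.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 4))
y = rng.integers(0, 2, size=100)

# First set aside 20% of the data as the final test set.
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Then take 25% of the remaining 80% as validation (0.25 * 0.8 = 0.2 of the total).
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=0.25, random_state=42
)

print(len(X_train), len(X_val), len(X_test))  # 60 20 20
```

Splitting off the test set first keeps it untouched by any later tuning decisions.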

What is Cross-Validation?

Cross-validation involves dividing the data into K folds, then holding out one fold for validation while training the model on the other K-1 folds. This process is repeated K times, so that each fold is used exactly once as validation data. Because every sample is used for validation once, averaging the K scores gives a more reliable estimate of the performance metric than a single randomly selected test set.
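To make the mechanics concrete, here is a small sketch of the fold rotation written with NumPy only. The helper name `k_fold_indices` is hypothetical and exists just for this illustration; in practice scikit-learn’s `KFold` does the same job.

```python
import numpy as np

def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample lands in exactly one validation fold."""
    indices = np.arange(n_samples)
    folds = np.array_split(indices, k)  # fold sizes differ by at most one
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

splits = list(k_fold_indices(n_samples=10, k=5))
for train_idx, val_idx in splits:
    print("train:", train_idx, "val:", val_idx)
```

This sketch does not shuffle, so it assumes the rows are already in random order.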

Choosing the Number of Folds

The number of folds is a trade-off. Values of 5 or 10 are common defaults, but the best choice depends on the dataset size, model complexity, and available computational resources. With more folds, each model is trained on a larger share of the data, which tends to make the estimate less pessimistic, but the model must be fitted more times and each validation fold is smaller, so the per-fold scores are noisier.
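The trade-off is easy to see with a little arithmetic. The sketch below uses a hypothetical helper, `fold_summary`, and an assumed dataset of 1,000 rows to show how the number of fits and the fold sizes change with K.

```python
def fold_summary(n_samples, k):
    """Return (model fits, approx. training rows, approx. validation rows) for k-fold CV."""
    val_size = n_samples // k           # size of the smallest validation fold
    train_size = n_samples - val_size   # everything else is used for training
    return k, train_size, val_size

for k in (5, 10):
    fits, train_size, val_size = fold_summary(1000, k)
    print(f"k={k}: {fits} fits, ~{train_size} training rows, ~{val_size} validation rows")
# k=5: 5 fits, ~800 training rows, ~200 validation rows
# k=10: 10 fits, ~900 training rows, ~100 validation rows
```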

Step-by-Step Guide on Splitting the Dataset for Cross-Validation

Step 1: Load the dataset

The first step is to load the dataset into memory. In Python, we can use libraries such as pandas or NumPy for this.

| Pandas | NumPy |
|---|---|
| `df = pd.read_csv('data.csv')` | `data = np.loadtxt('data.csv', delimiter=',')` |
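As a runnable sketch of the loading step, the example below reads CSV text from memory instead of a real `data.csv`, so it works without any file on disk. The column names (`feature_a`, `feature_b`, `target`) are hypothetical; substitute the ones in your own dataset.

```python
import io
import pandas as pd

# Stand-in for data.csv so the sketch runs on its own; column names are hypothetical.
csv_text = """feature_a,feature_b,target
1.0,2.0,0
3.0,4.0,1
5.0,6.0,0
7.0,8.0,1
"""

df = pd.read_csv(io.StringIO(csv_text))

# Separate the feature matrix X from the label vector y as NumPy arrays.
X = df.drop(columns="target").to_numpy()
y = df["target"].to_numpy()

print(X.shape, y.shape)  # (4, 2) (4,)
```

Converting to NumPy arrays up front means the fold indices produced later can be used directly with `X[index]`.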

Step 2: Shuffle the dataset

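Before we split the data, we shuffle it so that the original row order does not influence the folds. Files are often sorted by class or by collection date, and without shuffling a fold could end up containing only one kind of sample. scikit-learn’s `shuffle` utility (from `sklearn.utils`) permutes X and y together so that features and labels stay aligned. Two caveats: `KFold` can also shuffle on its own when `shuffle=True` is set, so this explicit step is optional, and time-ordered data should generally not be shuffled at all.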

| Code |
|---|
| `X, y = shuffle(X, y, random_state=42)` |
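The following sketch shows the shuffle call with its import and checks that rows and labels stay paired. The toy arrays are an assumption made so that the alignment is easy to verify.

```python
import numpy as np
from sklearn.utils import shuffle

# Toy data where each label equals its row's only feature, so alignment is easy to check.
X = np.arange(10).reshape(-1, 1)
y = np.arange(10)

X_shuffled, y_shuffled = shuffle(X, y, random_state=42)

# Rows and labels are permuted together, so the pairing survives the shuffle.
print(np.array_equal(X_shuffled[:, 0], y_shuffled))  # True
```

Fixing `random_state` makes the permutation reproducible across runs.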

Step 3: Split the dataset into K folds

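We use scikit-learn’s `KFold` class (from `sklearn.model_selection`) to split the dataset into K folds. Its constructor takes three parameters: `n_splits` (the number of folds), `shuffle` (whether to shuffle the samples before splitting), and `random_state` (the seed that makes the shuffling reproducible).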

| Code |
|---|
| `kfold = KFold(n_splits=5, shuffle=True, random_state=42)` |
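A short sketch of what the splitter produces, using ten toy samples as an assumed dataset. Note that `KFold` does not copy or move any data; it only generates index arrays.

```python
import numpy as np
from sklearn.model_selection import KFold

X = np.arange(20).reshape(10, 2)  # 10 toy samples with 2 features each

kfold = KFold(n_splits=5, shuffle=True, random_state=42)

print(kfold.get_n_splits(X))  # 5

# Each split is a pair of index arrays: (training indices, validation indices).
fold_sizes = [(len(train_idx), len(val_idx)) for train_idx, val_idx in kfold.split(X)]
print(fold_sizes)  # [(8, 2), (8, 2), (8, 2), (8, 2), (8, 2)]
```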

Step 4: Train the model on K-1 folds

Next, we iterate over the folds. On each iteration, `kfold.split(X)` yields two index arrays: one selecting the K-1 folds used for training and one selecting the single fold held out for validation.

| Code |
|---|
| `for train_index, val_index in kfold.split(X): X_train, X_val = X[train_index], X[val_index]; y_train, y_val = y[train_index], y[val_index]` |
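Written out over several lines, the loop might look like the sketch below. The random data is a placeholder, and the `seen_in_validation` list is added here only to confirm that every row is held out exactly once.

```python
import numpy as np
from sklearn.model_selection import KFold

# Synthetic placeholder data: 50 samples, 3 features, binary labels.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = rng.integers(0, 2, size=50)

kfold = KFold(n_splits=5, shuffle=True, random_state=42)

seen_in_validation = []
for fold, (train_index, val_index) in enumerate(kfold.split(X), start=1):
    # Index the arrays with the positions returned by the splitter.
    X_train, X_val = X[train_index], X[val_index]
    y_train, y_val = y[train_index], y[val_index]
    seen_in_validation.extend(val_index.tolist())
    print(f"fold {fold}: {X_train.shape[0]} train rows, {X_val.shape[0]} validation rows")
```

If `X` is a pandas DataFrame rather than a NumPy array, use `X.iloc[train_index]` instead, because the splitter returns positions, not labels.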

Step 5: Evaluate the model on the validation set

We then evaluate the model on the held-out validation fold. Because that fold was not used for training, its score is an estimate of how the model performs on unseen data. Here `model` stands for any scikit-learn estimator that provides `fit` and `score` methods.

| Code |
|---|
| `score = model.fit(X_train, y_train).score(X_val, y_val)` |

Step 6: Repeat for all K folds

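We repeat this process for all K folds and then combine the K scores, usually by reporting their mean together with their standard deviation, to obtain a more reliable estimate of the model’s performance than any single split would give.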
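Putting steps 3 to 6 together, a complete run might look like the following sketch. The synthetic dataset from `make_classification` and the choice of `LogisticRegression` are assumptions made for illustration; any estimator with `fit` and `score` would work the same way.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic classification data, used here only as a placeholder.
X, y = make_classification(n_samples=200, n_features=8, random_state=0)
kfold = KFold(n_splits=5, shuffle=True, random_state=42)

scores = []
for train_index, val_index in kfold.split(X):
    model = LogisticRegression(max_iter=1000)  # fresh model for every fold
    model.fit(X[train_index], y[train_index])
    scores.append(model.score(X[val_index], y[val_index]))

scores = np.array(scores)
print(f"accuracy: {scores.mean():.3f} +/- {scores.std():.3f}")

# scikit-learn's helper runs the same loop in one call.
helper_scores = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=kfold)
```

Creating a new model inside the loop matters: reusing a fitted model across folds could let information from one fold leak into the next.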

Conclusion

Splitting datasets correctly is an essential step in building robust machine learning models. Cross-validation does not prevent overfitting by itself, but by evaluating the model on several held-out folds it makes overfitting visible and gives a more trustworthy performance estimate. The process outlined in this article is a practical way to split a dataset and cross-validate a model. The right number of folds still depends on the dataset size, model complexity, and available computational resources.

Thank you for taking the time to read our step-by-step guide on splitting a dataset for cross-validation. With these techniques, you can improve your machine learning models and make better predictions.

This guide focused on k-fold cross-validation. Other approaches, such as stratified k-fold and shuffle splitting, follow the same pattern. Each of these methods has its own benefits, and it’s important to choose the appropriate one for your specific use case.
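As a rough illustration of the stratified and shuffle-split approaches mentioned above, the sketch below uses scikit-learn’s `StratifiedKFold` and `ShuffleSplit`. The imbalanced toy labels (80 negatives, 20 positives) are an assumption chosen to show why stratification helps.

```python
import numpy as np
from sklearn.model_selection import ShuffleSplit, StratifiedKFold

# Imbalanced toy labels: 80 samples of class 0 and 20 of class 1.
X = np.zeros((100, 2))
y = np.array([0] * 80 + [1] * 20)

# Stratified k-fold keeps the class ratio the same in every fold.
skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
positives_per_fold = [int(y[val_idx].sum()) for _, val_idx in skf.split(X, y)]
print(positives_per_fold)  # [4, 4, 4, 4, 4]

# ShuffleSplit draws independent random train/test splits; test sets may overlap.
ss = ShuffleSplit(n_splits=3, test_size=0.2, random_state=42)
shuffle_split_sizes = [(len(train_idx), len(test_idx)) for train_idx, test_idx in ss.split(X)]
print(shuffle_split_sizes)  # [(80, 20), (80, 20), (80, 20)]
```

Stratification is mainly useful for classification with uneven class sizes, where a plain random fold could contain very few samples of the rare class.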

Keep in mind that the goal of cross-validation is to ensure that your model generalizes well to new, unseen data. By testing your model on a separate validation set, you can get a more accurate estimate of how it will perform in the real world.

Frequently asked questions about splitting a dataset for cross-validation:

- What is cross validation?

- Why is it important to split the dataset for cross validation?

- What are the different types of cross validation?

- How do you split the dataset for cross validation?

- What is the recommended ratio for splitting the dataset?

Answers:

- Cross validation is a statistical method used to evaluate machine learning models by dividing the data into subsets and repeatedly training and testing the model.

- Splitting the dataset for cross validation is important because a model scored on the same data it was trained on looks better than it really is. Held-out data reveals whether the model is overfitting or underfitting.

- The different types of cross validation include k-fold cross validation, stratified k-fold cross validation, leave-one-out cross validation, and holdout validation.

- The simplest way to split the dataset is a single random division into two sets, one for training and one for testing (holdout validation). For cross validation proper, the usual choice is k-fold, where the data is divided into k smaller sets, and the model is trained on k-1 of them and tested on the remaining one, rotating until every set has been used for testing once.

- The recommended ratio for splitting the dataset depends on the size of the dataset, but a common ratio is 80% for training and 20% for testing.
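The holdout and leave-one-out variants named in the answers can be sketched in a few lines. The 20-sample toy dataset is an assumption; `stratify=y` is optional and is used here so the 80/20 split keeps both classes balanced.

```python
import numpy as np
from sklearn.model_selection import LeaveOneOut, train_test_split

# Toy data: 20 samples with alternating binary labels.
X = np.arange(40).reshape(20, 2)
y = np.array([0, 1] * 10)

# Holdout validation with the common 80/20 ratio.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)
print(len(X_train), len(X_test))  # 16 4

# Leave-one-out is k-fold with k equal to the number of samples.
loo = LeaveOneOut()
print(loo.get_n_splits(X))  # 20
```

Leave-one-out requires one model fit per sample, so it is usually practical only for small datasets.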