If you work with sequence data, you have probably heard of Recurrent Neural Networks (RNNs) and their variants. One variant that has become popular over the years is the Long Short-Term Memory (LSTM) network. LSTM is an RNN architecture designed to retain information over long spans of a sequence, which makes it useful for sequence prediction tasks, including sequence-to-sequence problems. In this article, we’ll look at stateful LSTMs in TensorFlow and how they can improve the modeling and prediction of long sequences.

By default, an LSTM layer in TensorFlow (Keras) is stateless: every sequence in a batch starts from a zero hidden state, so nothing carries over from earlier batches. This is usually what you want when sequences are independent, but it becomes a limitation when one long sequence has to be split across several batches, because the context built up in one batch is discarded before the next. A stateful LSTM (`stateful=True`) addresses this by keeping its hidden and cell states between batches: the final state for sample i in one batch becomes the initial state for sample i in the next batch, until the states are reset explicitly, typically at the end of each training epoch. Carrying this context across batch boundaries can improve performance on complex sequence prediction tasks with long-range dependencies.

In short, the stateful LSTM in TensorFlow is a valuable tool for complex sequence prediction tasks. Retaining hidden states across batches within the same training epoch lets the model use context that a stateless model would throw away, which can improve prediction accuracy. Read on to see how stateful LSTMs work and when they are worth using.

“Tensorflow: Remember Lstm State For Next Batch (Stateful Lstm)” ~ bbaz

Introduction

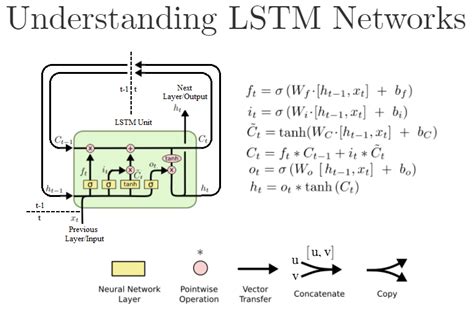

Artificial Intelligence has come a long way in the last decade, and Recurrent Neural Networks (RNNs) have proven to be an essential element in sequence learning tasks. RNNs pass a hidden state from one time step to the next, but plain RNNs struggle to learn dependencies across long sequences, largely because gradients vanish or explode during backpropagation through time. To overcome this, researchers introduced Long Short-Term Memory (LSTM) units, which add a gated cell state that can preserve information over many time steps.
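For reference, one common formulation of the LSTM update at time step t is shown below (the symbols are our own notation): f, i and o are the forget, input and output gates, σ is the logistic sigmoid, and ⊙ is element-wise multiplication. The cell state c_t and hidden state h_t are the two quantities that a stateful LSTM carries from one batch to the next.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$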

What is Stateful LSTM?

In a standard (stateless) LSTM, the hidden and cell states are reset to zeros at the start of every sequence, so each training sample is processed independently, regardless of its length. In a stateful LSTM, the final state from the previous batch is used as the initial state of the current batch: the state at the end of sample i in one batch becomes the starting state of sample i in the next batch. This lets the LSTM continue a sequence across batch boundaries instead of starting from scratch. The state is kept from batch to batch during training, and also later when making predictions, until it is reset explicitly.
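To make the difference concrete, here is a minimal pure-Python sketch of a single-unit LSTM cell with hypothetical, hand-picked weights (not a TensorFlow API and not a trained model). It splits one sequence into two chunks and shows that carrying the state from the first chunk into the second reproduces a single pass over the whole sequence, while restarting the second chunk from zeros does not.

```python
import math

# Hypothetical weights for a single-unit LSTM, chosen by hand for illustration.
# Each gate has (input weight, recurrent weight, bias).
WEIGHTS = {
    "forget": (0.5, 0.4, 0.1),
    "input": (0.6, -0.3, 0.0),
    "output": (0.7, 0.2, -0.1),
    "candidate": (0.9, 0.5, 0.0),
}

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h, c):
    """Apply one LSTM time step and return the new (h, c)."""
    def pre(gate):
        w_x, w_h, b = WEIGHTS[gate]
        return w_x * x + w_h * h + b
    f = sigmoid(pre("forget"))
    i = sigmoid(pre("input"))
    o = sigmoid(pre("output"))
    c_new = f * c + i * math.tanh(pre("candidate"))
    h_new = o * math.tanh(c_new)
    return h_new, c_new

def run(sequence, state=(0.0, 0.0)):
    """Run the cell over `sequence` from the given (h, c) and return the final state."""
    h, c = state
    for x in sequence:
        h, c = lstm_step(x, h, c)
    return h, c

series = [0.1, 0.5, -0.2, 0.8, 0.3, -0.6, 0.9, 0.0]
first_chunk, second_chunk = series[:4], series[4:]

full_pass = run(series)                               # whole sequence in one pass
stateful = run(second_chunk, state=run(first_chunk))  # state carried across chunks
stateless = run(second_chunk)                         # second chunk restarts from zeros

print(full_pass == stateful)   # True
print(full_pass == stateless)  # False
```

The stateful result is identical to the single pass because exactly the same operations run in the same order; only the way the input is split into chunks differs.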

Advantages of Stateful LSTM

In the right settings, stateful LSTMs offer several advantages over stateless ones. Some of them are:

Memory Efficiency

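Because state carries over between batches, a very long sequence does not have to be fed to the network in one piece. It can be split into shorter consecutive chunks, each processed as part of a separate batch, while the LSTM still sees the context from earlier chunks. The memory needed per batch is then bounded by the chunk length rather than the full sequence length, which makes it practical to train on very long sequences and large datasets. Note that gradients are not propagated across batch boundaries (a form of truncated backpropagation through time): only the forward state spans the chunks.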

Better Performance on Long Sequences

Stateful LSTMs can outperform stateless ones when the data consists of long, continuous sequences that must be split across batches, because the context from earlier chunks is not thrown away. This can help in tasks such as speech recognition and language modeling, where the relevant context often spans many time steps.

Improved Learning from Training Data

A stateful LSTM carries context from earlier batches into later ones, so when it processes a chunk it already holds a summary of what came before in that sequence. This helps the model pick up patterns that extend beyond a single batch. Note that what carries over is the state (the running summary of the sequence); the weights are still learned in the usual way by gradient descent.

Disadvantages of Stateful LSTM

While stateful LSTMs have clear benefits, there are a few limitations to consider:

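Higher Resource and Pipeline Requirements

A stateful LSTM has to keep the state from the previous batch in memory so that it can serve as the initial state of the current batch. For an LSTM this means two tensors per layer, the hidden state h and the cell state c, each of shape (batch_size, units), so the extra storage itself is usually modest. The larger practical cost lies in the constraints that come with carrying state: the batch size must be fixed, batches must be fed in order, and the states must be reset at the right points, all of which make the training and inference pipeline less flexible.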
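As a rough sense of scale, the carried state can be estimated with simple arithmetic. This sketch assumes float32 values and that each LSTM layer carries exactly two state tensors, h and c, each of shape (batch_size, units); `lstm_state_bytes` is a hypothetical helper, and framework overhead is ignored.

```python
def lstm_state_bytes(batch_size, units, layers=1, bytes_per_value=4):
    """Estimate the memory held by the carried state of stacked LSTM layers.

    Assumes each layer carries two tensors (h and c) of shape (batch_size, units)
    and bytes_per_value=4 (float32). Framework overhead is ignored.
    """
    return layers * 2 * batch_size * units * bytes_per_value

print(lstm_state_bytes(32, 128))            # 32768 bytes (32 KiB)
print(lstm_state_bytes(64, 512, layers=3))  # 786432 bytes (0.75 MiB)
```

Even the larger configuration needs well under a megabyte, which is typically small compared with the model's weights and the activations stored during training.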

Inferior Performance on Short Sequences

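While stateful LSTMs can outperform stateless ones on long sequences, they offer little benefit when the data consists of short, independent sequences, because there is no useful context to carry from one sequence to the next. Worse, if the states are not reset between unrelated sequences, leftover context from one sequence leaks into the next and can hurt accuracy. For such data, a stateless LSTM is simpler and typically performs at least as well.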

Stateless vs. Stateful LSTM Comparison

Here is a comparison between Stateless and Stateful LSTM:

Data Efficiency

A stateless LSTM treats every sequence independently, so batches do not depend on each other: samples can be shuffled and processed in any order, with any batch size. A stateful LSTM introduces dependencies between batches: sample i of each batch must continue sample i of the previous batch, so the data has to be laid out accordingly and cannot be shuffled across batches (in Keras, for example, by passing `shuffle=False` to `fit`). It gives up this flexibility in exchange for better accuracy on tasks involving long sequences.

Memory Requirements

A stateful LSTM has to hold its states between batches during training and evaluation, and because each batch depends on the state left by the previous one, batches must be processed in a fixed order with a fixed batch size. This makes it harder to parallelize or distribute work across batches, since the state has to be passed along and kept synchronized. A stateless LSTM keeps no state between batches, so batches are independent and can be shuffled, distributed, and processed in parallel more easily, which usually gives better throughput and simpler memory management.

Conclusion

In summary, a stateful LSTM lets the model remember previous states across batches and tends to perform better on tasks involving long sequences, while a stateless LSTM is easier to parallelize. The traditional (stateless) LSTM is usually the better fit for short, independent sequences and has a simpler, less resource-constrained training setup.

When choosing between the two, consider the task’s requirements and weigh accuracy on long sequences against training speed and simplicity.

Thank you for visiting our blog and learning about Stateful LSTM in TensorFlow. We hope that our explanation of how stateful LSTMs work has made this topic more accessible and understandable to you.

Preserving the LSTM state across batches is a useful technique for modeling sequential data. It allows models to keep a memory of previous inputs, which is valuable for time series or any scenario with a sequential relationship between inputs. The ability to preserve state across batches is particularly important when the full sequence is not available ahead of time, for example when data arrives as a stream or when a language model generates text one step at a time.

We believe that stateful LSTMs have great potential as building blocks for machine learning applications, and we encourage you to experiment with them in your own projects. TensorFlow supports them directly: passing `stateful=True` to `tf.keras.layers.LSTM` and fixing the batch size is enough to turn an LSTM layer into a stateful one. As always, please reach out to us with any questions or feedback.
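A minimal sketch of that setup, assuming TensorFlow 2.x with its bundled Keras API: the layer is created with `stateful=True`, the input declares a fixed batch size, and `reset_states()` on the layer clears the carried state. The sizes and the random input are illustrative only. Calling the model twice on the same input gives different outputs because the second call starts from the state left by the first; after a reset, the output matches the first call again.

```python
import numpy as np
import tensorflow as tf

BATCH_SIZE, TIMESTEPS, FEATURES, UNITS = 4, 5, 1, 8  # illustrative sizes

# Stateful layers need a fixed batch size, so the Input declares it explicitly.
inputs = tf.keras.Input(shape=(TIMESTEPS, FEATURES), batch_size=BATCH_SIZE)
lstm = tf.keras.layers.LSTM(UNITS, stateful=True)
outputs = tf.keras.layers.Dense(1)(lstm(inputs))
model = tf.keras.Model(inputs, outputs)

x = np.random.rand(BATCH_SIZE, TIMESTEPS, FEATURES).astype("float32")

first = model(x).numpy()   # starts from zero state; the final state is kept afterwards
second = model(x).numpy()  # same input, but starts from the state left by the first call
lstm.reset_states()        # set the carried h and c back to zeros
third = model(x).numpy()   # starts from zeros again, like the first call

print(np.abs(first - second).max())  # nonzero: the carried state changed the output
print(np.allclose(first, third))     # True: the reset restored the initial state
```

Resetting the layer rather than the whole model keeps the sketch focused on the one stateful layer it contains.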

As people learn more about LSTM (Long Short-Term Memory) networks in TensorFlow, they may come across the concept of Stateful LSTM. Here are some common questions that people might have about Stateful LSTM and their corresponding answers:

What is Stateful LSTM?

Stateful LSTM is an LSTM configuration in which the final hidden and cell states from the previous batch are used as the initial states for the current batch. Every LSTM passes its state from one timestep to the next within a sequence; the stateful setting extends this across batches. This allows the network to remember information from earlier parts of a long sequence and use it to improve the accuracy of predictions.

How does Stateful LSTM differ from regular LSTM?

In a regular (stateless) LSTM, the hidden state is reset to zeros at the start of every sequence. This means that the network has to rebuild the context of the sequence at the beginning of each batch. In a stateful LSTM, the hidden state is preserved between batches until it is reset explicitly, allowing the network to maintain its context and improve the accuracy of predictions over long sequences.

What are the benefits of using Stateful LSTM?

Stateful LSTM can be particularly useful for applications that require long-term memory, such as speech recognition or language modeling. By maintaining the network’s context over time, Stateful LSTM can improve the accuracy of predictions and reduce the likelihood of errors.

Are there any downsides to using Stateful LSTM?

One potential downside of Stateful LSTM is that it requires careful management of the hidden state between batches. The batches must be fed in the right order, and the state must be reset at sequence boundaries (for example, at the start of each epoch); if it is not, context from unrelated data leaks into new sequences and leads to incorrect predictions. Additionally, Stateful LSTM may not be suitable for applications where the context changes frequently, such as many short, unrelated sequences, because carried-over state can make it harder for the network to adapt to new information.
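Managing the state during training usually comes down to two rules: keep the batches in order and reset the state at sequence boundaries. The sketch below assumes TensorFlow 2.x with bundled Keras, a toy sine series split into two streams, and a reset after every epoch, since each epoch starts again from the beginning of both streams. The helper `to_stateful` is hypothetical; it arranges the data so that `fit(..., shuffle=False)` feeds row i of each batch as the continuation of row i of the previous batch.

```python
import numpy as np
import tensorflow as tf

BATCH_SIZE, TIMESTEPS = 2, 4

# Toy data (illustrative): predict the next value of a sine series.
series = np.sin(np.arange(25, dtype="float32") * 0.3)
inputs_seq, targets_seq = series[:-1], series[1:]  # 24 points each

def to_stateful(a):
    """Reorder a 1-D array so consecutive batches continue each other row by row."""
    streams = a.reshape(BATCH_SIZE, -1)                  # (2, 12): two contiguous streams
    chunks = streams.reshape(BATCH_SIZE, -1, TIMESTEPS)  # (2, 3, 4): stream, chunk, step
    # Put chunk k of every stream next to each other: (6, 4, 1), chunk-major order.
    return chunks.transpose(1, 0, 2).reshape(-1, TIMESTEPS, 1)

x, y = to_stateful(inputs_seq), to_stateful(targets_seq)

inputs = tf.keras.Input(shape=(TIMESTEPS, 1), batch_size=BATCH_SIZE)
lstm = tf.keras.layers.LSTM(8, stateful=True, return_sequences=True)
outputs = tf.keras.layers.Dense(1)(lstm(inputs))
model = tf.keras.Model(inputs, outputs)
model.compile(optimizer="adam", loss="mse")

for epoch in range(3):
    # shuffle=False keeps batch k+1 aligned with batch k, so the carried state stays meaningful.
    model.fit(x, y, batch_size=BATCH_SIZE, epochs=1, shuffle=False, verbose=0)
    # The next epoch restarts both streams from their beginning, so clear the state.
    lstm.reset_states()
```

Running one epoch per `fit` call keeps the reset explicit; the same reset could also be placed in a custom Keras callback.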