Amazon S3 is a highly scalable and reliable cloud storage service offered by Amazon Web Services (AWS). It allows users to store and retrieve any amount of data from anywhere on the web. The service offers incredible durability, availability, and scalability at a low cost, making it a top choice for many businesses and developers worldwide. In this article, we will guide you through the step-by-step process of uploading files to an S3 bucket with Boto, a Python library that enables working with AWS services.

Boto is an easy-to-use, powerful, and reliable Python interface to AWS services. With Boto, developers can create, configure, and manage AWS services such as S3, EC2, SQS, and more programmatically. Uploading files to an S3 bucket using Boto is a straightforward process that involves creating an S3 client object and specifying the bucket name and the path of the file to upload. Once you have the right permissions and credentials configured in your AWS environment, you can use Boto to upload files to one or more S3 buckets easily.

If you are looking for a complete guide to help you upload files to an S3 bucket with Boto, you are in the right place. In this step-by-step tutorial, we will cover all the necessary steps, including installing and configuring Boto, setting up your AWS environment, creating an S3 bucket, and uploading files using Python code. By the end of this article, you will have a clear understanding of how to use Boto to upload files to an S3 bucket, and you will be equipped with the skills you need to work with AWS services programmatically. Let’s dive into the details and start our journey to upload files to an S3 bucket with Boto!

Introduction

Uploading files to an S3 bucket with Boto is a common task. You might need it when you want to store media files and other types of data in an S3 bucket for backup, distribution, or sharing with others. In this article, we will discuss how to upload files to an S3 bucket with Boto without any hassle.

Background on AWS S3 Boto

First, let’s talk about Boto and S3. Boto3, the current version of Boto, is the AWS SDK for Python: an open-source software development kit that allows developers to create applications that integrate with Amazon Web Services. It provides interfaces for creating and managing AWS resources, including S3 buckets. S3 is an object storage service with virtually unlimited capacity. Files are stored as objects inside buckets and can be accessed over the internet from anywhere, subject to the permissions you configure.

Prerequisites

Before we dive into the steps to upload files to an S3 bucket with Boto, there are several things you need to prepare:

- Amazon Web Services (AWS) account

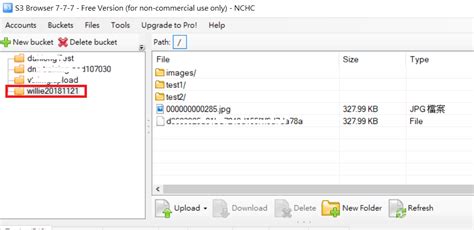

- S3 bucket created in the AWS console

- AWS access key ID and corresponding secret access key with proper permissions

- Python and Boto3 installed on your local machine
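The last prerequisite can be checked from Python itself. The following is a minimal sketch that uses only the standard library; the helper names `is_installed` and `environment_report` are made up for this example and are not part of Boto3.

```python
import importlib.util
import sys


def is_installed(module_name: str) -> bool:
    """Return True if `module_name` can be imported in this interpreter."""
    return importlib.util.find_spec(module_name) is not None


def environment_report() -> str:
    """Summarise the Python version and whether boto3 is importable."""
    version = ".".join(str(part) for part in sys.version_info[:3])
    if is_installed("boto3"):
        status = "installed"
    else:
        status = "missing (try: pip install boto3)"
    return f"Python {version}, boto3 {status}"


print(environment_report())
```

If the report says Boto3 is missing, installing it with pip in the same Python environment should fix it.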

Setting up AWS Credentials

To use Boto, you need to supply AWS credentials so that your requests can be authenticated. There are two ways to provide AWS credentials to Boto:

- Hardcode the credentials in the script

- Configure the credentials outside of the script

Hardcoding the Credentials in the Script

Hardcoding your AWS credentials in the script is not recommended, because anyone who can read the script or its version history can also read your keys. However, if you choose to use this method, you can set your AWS access key ID and secret access key in the script using the following code snippet:

    import boto3

    # Pass the keys explicitly when creating the S3 client
    s3 = boto3.client(
        's3',
        aws_access_key_id='YOUR_ACCESS_KEY',
        aws_secret_access_key='YOUR_SECRET_KEY',
    )

Configuring the Credentials Outside of the Script

A more secure way to provide your AWS credentials to Boto is by configuring them outside of the script. You can do that in one of two ways:

- Install the AWS CLI on your machine, then run the ‘aws configure’ command, which saves the keys to a credentials file in your home directory

- Set the AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_DEFAULT_REGION environment variables
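When these environment variables are set, Boto picks them up automatically, so the client can be created without passing any keys. As a rough illustration, the sketch below checks which of the three variables are missing before a script starts. The helper `missing_aws_env_vars` is a made-up name for this example, and a missing variable is not necessarily an error, since credentials may also come from the file written by ‘aws configure’.

```python
import os

# The three variables named in the list above.
REQUIRED_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_DEFAULT_REGION")


def missing_aws_env_vars(env=None):
    """Return the names of the required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Example with a hypothetical, partially configured environment.
example_env = {"AWS_ACCESS_KEY_ID": "AKIAEXAMPLE", "AWS_DEFAULT_REGION": "us-east-1"}
print(missing_aws_env_vars(example_env))  # ['AWS_SECRET_ACCESS_KEY']
```

Calling `missing_aws_env_vars()` with no argument inspects the real environment of the running process.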

Uploading Files to S3 Bucket

Now that you have set up your AWS credentials, you can upload files to an S3 bucket with Boto using the following code:

    import boto3

    s3 = boto3.resource('s3')

    bucket_name = 'your_bucket_name'
    object_name = 'path/to/your/file'  # key the object will have in the bucket
    file_path = 'path/to/local/file'   # file on your local machine

    s3.meta.client.upload_file(file_path, bucket_name, object_name)

Comparison: Boto vs. AWS CLI

Boto and the AWS CLI are two common ways to upload files to an S3 bucket. There are several differences between the two:

| | Boto | AWS CLI |
|---|---|---|
| Programming Language | Python | Shell scripting |
| Maintenance | Requires installation and configuration of Boto and its dependencies | Requires installation on most systems, although it comes pre-installed on some AWS-provided images such as Amazon Linux |
| Flexibility | More flexible, since it allows for programmatic interactions with S3 objects | Less flexible compared to Boto, but convenient for one-off commands and shell scripts |
| Cost | The library is free; standard S3 charges apply, including data transfer costs when uploading from EC2 instances in a different region | The tool is free; the same S3 charges apply |

Conclusion

In conclusion, uploading files to S3 buckets is a common task, and Boto makes it easy to do from Python. Boto is an open-source software development kit that allows developers to create applications that integrate with Amazon Web Services, including S3. Preparing the AWS credentials and configuring Boto to interact with your S3 bucket might be a bit tricky; however, once it’s done, uploading files to your S3 bucket becomes straightforward. Boto and the AWS CLI can both upload files to an S3 bucket, and each has its pros and cons.

As you have learned, uploading files to S3 Bucket with Boto is not as complicated as it may seem. With just a few lines of code and some basic knowledge about AWS, you can upload files to your S3 bucket in no time. This method is not only fast and efficient, but it also provides a secure way to store and manage your files online.

Here are some common questions people ask about the step-by-step guide for uploading files to S3 bucket with Boto:

- What is an S3 bucket?

  An S3 bucket is a container for objects stored in Amazon S3, the cloud-based storage service provided by Amazon Web Services (AWS). It allows users to store and retrieve files of any type, from anywhere in the world.

- What is Boto?

  Boto is the official Python software development kit (SDK) provided by AWS; the current version is Boto3. It enables Python developers to write software that makes use of services like S3, EC2, and others offered by AWS.

- What are the steps involved in uploading files to an S3 bucket with Boto?

  - Create an S3 bucket in the AWS console
  - Install the Boto3 library using pip
  - Write Python code that creates an S3 client with your AWS credentials
  - Upload files to the S3 bucket using Boto API methods
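The last two steps can be sketched as a small helper that places a file under a key prefix, which is how S3 represents a "directory" inside a bucket. This is a minimal sketch, not a definitive implementation: `build_object_key` and `upload_to_prefix` are names invented for this example, and `s3_client` is assumed to be a Boto3 S3 client created elsewhere, for instance with `boto3.client('s3')`. Only the client's `upload_file` method is used.

```python
import posixpath
from pathlib import Path


def build_object_key(file_path, prefix=""):
    """Join an optional key prefix (the S3 'directory') with the file name."""
    file_name = Path(file_path).name
    cleaned = prefix.strip("/")
    return posixpath.join(cleaned, file_name) if cleaned else file_name


def upload_to_prefix(s3_client, file_path, bucket_name, prefix=""):
    """Upload a local file under `prefix` and return the object key used.

    `s3_client` is assumed to be a boto3 S3 client.
    """
    object_key = build_object_key(file_path, prefix)
    s3_client.upload_file(str(file_path), bucket_name, object_key)
    return object_key


# Key that a hypothetical local file would receive under a prefix.
print(build_object_key("photos/cat.jpg", "media/images"))  # media/images/cat.jpg
```

Taking the client as a parameter keeps credential handling out of the helper and makes it easy to try the key-building logic without touching AWS.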

- How do I check if the file has been uploaded successfully?

  You can use the Boto API method ‘head_object’ to check if the file exists in the S3 bucket. If the object exists, the method returns its metadata; if it does not, the method raises a client error with a 404 error code.
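Because ‘head_object’ returns metadata for an existing object and raises an error for a missing one, a true/false check needs a small wrapper. The sketch below shows one way to write it. `object_exists` is a hypothetical helper name, `s3_client` is assumed to be a Boto3 S3 client, and the fallback `ClientError` class exists only so that the example can run where botocore is not installed.

```python
try:
    from botocore.exceptions import ClientError
except ImportError:
    class ClientError(Exception):
        """Minimal stand-in so this sketch can run without botocore."""

        def __init__(self, error_response, operation_name):
            super().__init__(f"{operation_name} failed: {error_response}")
            self.response = error_response


def object_exists(s3_client, bucket_name, object_key):
    """Return True if the object exists, False if S3 reports it as missing."""
    try:
        s3_client.head_object(Bucket=bucket_name, Key=object_key)
    except ClientError as error:
        # A missing key surfaces as a ClientError whose error code is "404".
        if error.response.get("Error", {}).get("Code") in ("404", "NoSuchKey"):
            return False
        raise  # permission problems and other errors are not "missing"
    return True
```

Errors other than "not found" are re-raised on purpose, so that a permissions problem is not mistaken for a failed upload.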

- What are the advantages of using an S3 bucket for file storage?

  - Scalability: S3 buckets can store a virtually unlimited amount of data, and S3 scales automatically to very high request rates.
  - Durability: S3 is designed for 99.999999999% durability and stores your data redundantly, with multiple copies in different locations.
  - Accessibility: S3 allows you to access your files from anywhere in the world using a simple API.