TensorFlow provides a powerful, comprehensive platform for building complex machine learning models, but it is not without drawbacks. In particular, with large datasets or complicated models, training iterations in TensorFlow can lead to very long processing times.

For those who are new to TensorFlow, iterating means running an algorithm's update step repeatedly until a predefined condition is met, such as a fixed number of steps or a target loss. While necessary for many applications, this repeated execution can quickly become a bottleneck, slowing down the entire workflow and increasing the time required for data analysis and model training.

If you’re struggling with slow TensorFlow iterations, you’re not alone. In this article, we’ll explore some common causes of lengthy processing times, along with practical strategies for optimizing your workflow and reducing the time each training run takes. Whether you’re working on a research project or building a production-ready model, these techniques can help you get more out of your TensorFlow workflow.

So if you’re ready to speed up your TensorFlow iterations, let’s dive in and look at how you can optimize your workflow for faster results.


Introduction

TensorFlow is an open-source software library for a wide range of machine learning tasks. It is primarily used for deep learning models, such as convolutional neural networks and recurrent neural networks. One important setting when training a TensorFlow model is the number of iterations, which can have a significant impact on processing time.

What are Iterations in TensorFlow?

In TensorFlow training, an iteration (also called a step) is one update of the model's weights, usually computed from a single batch of data. A full pass through the entire dataset is called an epoch and typically consists of many iterations. Each iteration involves forward propagation of the batch through the neural network, followed by backpropagation, in which the weights are adjusted. With every iteration, the algorithm tries to reduce the error, or loss, between the predicted output and the actual output.
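The forward pass, gradient computation, and weight update that make up one training iteration can be sketched in plain Python. This is a minimal illustration on a hypothetical one-variable linear model, not TensorFlow's own implementation; the data, the `train` function, and the learning rate are all assumptions chosen for the example.

```python
# Toy data generated from y = 2x + 1 (hypothetical values for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def train(num_iterations, learning_rate=0.05):
    """Run gradient descent on the model y = w * x + b and return (w, b, loss)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(num_iterations):
        # Forward pass: compute predictions and their errors.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Backward pass: gradients of the mean squared error w.r.t. w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Update step: move the parameters against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    # Final mean squared error after the last update.
    loss = sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / n
    return w, b, loss


w_short, b_short, loss_short = train(10)
w_long, b_long, loss_long = train(1000)
```

Every pass through the loop costs the same amount of work, so running 1,000 iterations takes roughly 100 times as long as running 10, while the loss after 1,000 iterations is much lower.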

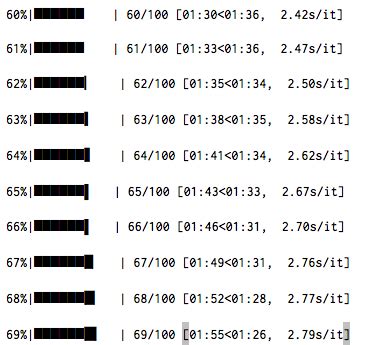

The Impact of Iterations on Processing Time

Increasing the number of iterations in TensorFlow has a direct impact on processing time: the more iterations, the longer it takes to train the model. However, more iterations also give the model more opportunities to learn from the data, which often improves accuracy, at least up to a point.

Example:

| Iterations | Processing Time | Accuracy |
|---|---|---|
| 1000 | 5 minutes | 80% |
| 2000 | 10 minutes | 85% |
| 5000 | 25 minutes | 90% |
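The processing times in the table grow in direct proportion to the iteration count, which works out to 0.3 seconds per iteration. The sketch below reproduces that arithmetic. The function name `estimate_minutes` and its defaults are hypothetical, and the linear scaling is an assumption taken from the table; real training runs may not scale this cleanly.

```python
def estimate_minutes(iterations, ref_iterations=1000, ref_minutes=5.0):
    """Estimate training time, assuming time grows linearly with iterations.

    The reference point (1000 iterations in 5 minutes) is the first row
    of the example table.
    """
    return iterations * ref_minutes / ref_iterations


# Average cost of one iteration implied by the reference row, in seconds.
seconds_per_iteration = 5.0 * 60.0 / 1000

minutes_for_2000 = estimate_minutes(2000)  # 10.0, the table's second row
minutes_for_5000 = estimate_minutes(5000)  # 25.0, the table's third row
```

Note that accuracy in the table does not scale the same way: going from 2,000 to 5,000 iterations costs 15 extra minutes for 5 extra percentage points.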

Choosing the Right Number of Iterations

Choosing the right number of iterations is crucial in TensorFlow as it can affect the accuracy and processing time. Too few iterations may result in an inaccurate model, while too many iterations can cause overfitting and a longer processing time. The optimal number of iterations depends on the complexity of the dataset and the neural network architecture.
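One common way to pick the number of iterations automatically is early stopping: training halts once the validation loss has stopped improving for a set number of checks. Keras offers this as the `tf.keras.callbacks.EarlyStopping` callback, with `monitor` and `patience` arguments. The plain-Python sketch below only illustrates the underlying logic; the function name and the loss values are hypothetical.

```python
def find_stopping_point(val_losses, patience=2, min_delta=0.0):
    """Return (stop_index, best_index) for a sequence of validation losses.

    Training stops at the first index where the loss has failed to improve
    by more than min_delta for `patience` consecutive checks.
    """
    best_loss = float("inf")
    best_index = -1
    waited = 0
    for i, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best result: remember it and reset the patience counter.
            best_loss = loss
            best_index = i
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return i, best_index
    # The loss kept improving (or patience never ran out): use every check.
    return len(val_losses) - 1, best_index


# Hypothetical validation losses: improvement stops after the third check.
losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70]
stop_index, best_index = find_stopping_point(losses, patience=2)
```

Here training would stop at the fifth check (index 4), and the weights from the third check (index 2) would be the ones to keep, which avoids both the overfitting and the extra processing time of the remaining iterations.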

How to Reduce Processing Time

Processing time in TensorFlow can be reduced with techniques such as batching, parallelism, and lowering the number of iterations. Batching splits the data into smaller subsets that are processed together, which keeps memory usage bounded and lets the hardware perform many calculations in a single vectorized operation. Parallelism runs multiple computations simultaneously, for example across CPU cores or on a GPU, which can significantly reduce processing time.
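In TensorFlow, batching and input-side parallelism are commonly expressed with the `tf.data` API. The following is a minimal sketch that assumes TensorFlow 2.4 or later (for `tf.data.AUTOTUNE`); the random data and the `preprocess` function are placeholders for illustration, not part of any real workload.

```python
import tensorflow as tf

# Hypothetical toy data: 1,000 examples with 8 features each.
features = tf.random.uniform((1000, 8))
labels = tf.random.uniform((1000,), maxval=2, dtype=tf.int32)


def preprocess(x, y):
    # Placeholder per-example transformation (an assumption for illustration).
    return x * 2.0, y


dataset = (
    tf.data.Dataset.from_tensor_slices((features, labels))
    # Run preprocessing on several examples in parallel.
    .map(preprocess, num_parallel_calls=tf.data.AUTOTUNE)
    # Group examples into batches of 32 so each step is one vectorized call.
    .batch(32)
    # Prepare upcoming batches while the current one is being consumed.
    .prefetch(tf.data.AUTOTUNE)
)

num_batches = sum(1 for _ in dataset)
first_x, first_y = next(iter(dataset))
```

A dataset built this way can be passed directly to `model.fit`. With 1,000 examples and a batch size of 32, one pass over the data takes 32 steps, the last of which holds only the 8 remaining examples.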

The Trade-Off Between Processing Time and Accuracy

There is a trade-off between processing time and accuracy in TensorFlow. Increasing the number of iterations generally improves accuracy up to the point where the model starts to overfit, but it always increases processing time. Reducing the number of iterations speeds up training, but may result in a less accurate model.

Conclusion

Iterations are an important parameter in TensorFlow that can have a significant impact on processing time and accuracy. Finding the optimal number of iterations requires careful consideration of the complexity of the dataset and the neural network architecture. Techniques such as batching and parallelism can help reduce processing time, but there is a trade-off between processing time and accuracy.

Thank you for taking the time to read through this article on TensorFlow iterations and how they affect processing time. As you have learned, iterations can significantly increase processing time and may cause your machine learning algorithms to take longer than expected.

While this may seem like a drawback, it is important to remember that iterations are a crucial part of the machine learning process. They allow the algorithm to continually refine its predictions and improve its accuracy over time. The benefits of iterations far outweigh the extra processing time required, and ultimately lead to more accurate and effective results.

So, as you continue to work with TensorFlow and other machine learning tools, remember the importance of iterations and be sure to factor in the added processing time that they require. With patience, persistence, and ongoing refinement, you can achieve the desired results and develop powerful machine learning models that can transform the way we live and work.

People Also Ask About TensorFlow Iterations and Longer Processing Times

Why do TensorFlow iterations result in longer processing time?

Each iteration computes the gradient of the loss and updates the model parameters, which can be computationally expensive. Because this cost is paid on every iteration, total processing time grows as the number of iterations increases.

Is there a way to reduce the processing time for TensorFlow iterations?

Yes, there are several ways to reduce the processing time for TensorFlow iterations:

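- Use a more efficient algorithm, such as an optimizer that converges in fewer iterations.

- Tune the batch size. Larger batches usually mean fewer iterations per pass over the data and better hardware utilization, as long as they fit in memory.

- Use more powerful hardware (e.g., a GPU).

- Optimize the code for better performance.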

How many iterations are needed for TensorFlow?

The number of iterations needed to train a model in TensorFlow depends on several factors, such as the complexity of the model and the size of the dataset. Hundreds or thousands of iterations are common, and large models can require many more.
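As a rough guide, the iteration count follows from the dataset size, the batch size, and the number of passes over the data. The sketch below assumes the common convention that one iteration processes one batch and one epoch is a full pass over the dataset; the function name and the example sizes are hypothetical.

```python
import math


def total_iterations(num_examples, batch_size, epochs):
    """Number of weight updates when each iteration processes one batch."""
    # The last batch of an epoch may be smaller, hence the ceiling.
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * epochs


# Hypothetical dataset of 50,000 examples trained for 10 epochs.
small_batches = total_iterations(50_000, batch_size=32, epochs=10)   # 15630
large_batches = total_iterations(50_000, batch_size=256, epochs=10)  # 1960
```

The same amount of data is processed in both cases, but the larger batch size needs about an eighth as many iterations.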

What is the impact of increasing the number of iterations in TensorFlow?

Increasing the number of iterations in TensorFlow can improve the accuracy of the model, but it also increases the processing time. Therefore, it is important to find a balance between the number of iterations and the processing time.