If you’re interested in Natural Language Processing (NLP), you should know how tokenization works. Tokenization breaks a sentence down into smaller components known as tokens, and NLTK makes this easy to do in Python. These tokens are then used as input for other NLP operations, such as sentiment analysis or named entity recognition.

This guide will walk you through the basics of tokenizing string sentences using NLTK. We’ll cover the concepts behind tokenization and show you how to use NLTK’s tokenizer to break down sentences into individual tokens. You’ll also learn about different types of tokenizers and how to use them effectively. By the end of this article, you’ll have a clear understanding of tokenization and how to apply it in your NLP projects.

Whether you’re a seasoned NLP professional or just starting out, this guide is perfect for anyone who wants to explore the world of tokenizing string sentences. It’s an easy and comprehensive guide that will give you the foundation to build upon as you delve deeper into NLP algorithms and techniques. So, let’s get started!

In short, if you want a better understanding of tokenization and how to use NLTK’s tokenizers effectively, this guide is for you. Through step-by-step instructions and examples, we’ll help you grasp the concept of tokenization and the benefits it offers in NLP. By the end, you’ll be ready to incorporate tokenization into your own NLP pipelines and make better use of your text data.


Introduction to Tokenizing String Sentences with NLTK

When analyzing text data, it is often necessary to separate text into smaller components called tokens. In natural language processing (NLP), tokenization is the process of splitting text into individual words or phrases called tokens. This forms the foundation for further analysis such as sentiment analysis, named entity recognition, and more. One of the most popular tools for performing tokenization in NLP is the Natural Language Toolkit (NLTK) library, which provides a variety of functions for text preprocessing.

Types of Tokenization

Word Tokenization

The most common form of tokenization is word tokenization, which segments text into individual words and punctuation marks. NLTK provides a function called word_tokenize() for this. It first splits the text into sentences and then applies a Treebank-style tokenizer, built from regular expressions, that separates words from punctuation. For example, the sentence “The cat sat on the mat.” would be tokenized into ['The', 'cat', 'sat', 'on', 'the', 'mat', '.'].
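The sketch below shows the Treebank-style rules in action without needing any downloaded data. It calls NLTK’s TreebankWordTokenizer directly. Note that word_tokenize() uses an improved variant of this tokenizer, so output may differ on edge cases.

```python
from nltk.tokenize import TreebankWordTokenizer

# TreebankWordTokenizer is purely regex-based, so no model download is needed.
tokenizer = TreebankWordTokenizer()

# The sentence-final period is split off as its own token.
simple = tokenizer.tokenize("The cat sat on the mat.")
print(simple)  # ['The', 'cat', 'sat', 'on', 'the', 'mat', '.']

# Contractions are split in two, following Penn Treebank conventions.
contraction = tokenizer.tokenize("Don't stop.")
print(contraction)  # ['Do', "n't", 'stop', '.']
```

Splitting “Don’t” into 'Do' and "n't" can look surprising at first. It lets later steps such as part-of-speech tagging treat the negation as a separate word.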

Sentence Tokenization

Sentence tokenization splits text into individual sentences. This is useful for tasks that require analysis at the sentence level, such as summarization and parsing. NLTK provides a function called sent_tokenize() for this. It is backed by Punkt, an unsupervised algorithm whose pretrained models have learned cues such as common abbreviations for identifying sentence boundaries. For example, the text “I went to the store. Then I went home.” would be tokenized into ['I went to the store.', 'Then I went home.'].
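Abbreviations are one of the main cues Punkt relies on. The sketch below builds a PunktSentenceTokenizer by hand instead of loading the pretrained English model, so no download is needed. The abbreviation set is a hypothetical example. Punkt stores abbreviations in lowercase and without the trailing period.

```python
from nltk.tokenize.punkt import PunktParameters, PunktSentenceTokenizer

text = "Dr. Smith went home. He was tired."

# An untrained tokenizer knows no abbreviations, so it will typically
# treat "Dr." as the end of a sentence.
untrained = PunktSentenceTokenizer()
print(untrained.tokenize(text))

# Hypothetical abbreviation list: lowercase, without the period.
params = PunktParameters()
params.abbrev_types = {"dr", "mr", "mrs"}
custom = PunktSentenceTokenizer(params)

sentences = custom.tokenize(text)
print(sentences)  # ['Dr. Smith went home.', 'He was tired.']
```

The pretrained models loaded by sent_tokenize() carry abbreviation lists learned from a training corpus. This is why they usually handle cases like “Dr.” without extra configuration.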

Tokenizing with NLTK

Installing NLTK

Before using NLTK, you must install it on your system by running the following command in your terminal:

pip install nltk

Importing NLTK and Downloading Resources

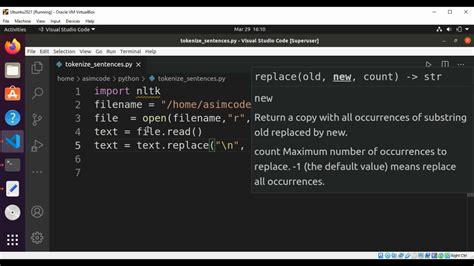

To begin using NLTK, you must first import it into your Python script:

import nltk

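You will also need to download the data resources that the tokenizers rely on. NLTK provides a convenient function called download() for this purpose:

nltk.download('punkt')

This command downloads the Punkt models used by both word_tokenize() and sent_tokenize(). On recent NLTK releases (3.9 and later), the tokenizers load a resource named 'punkt_tab' instead. If you see a LookupError that mentions punkt_tab, run nltk.download('punkt_tab') as well.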
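To avoid calling download() on every run, a script can first check whether the data is already installed. The sketch below relies on nltk.data.find(), which raises LookupError when a resource is missing. The helper names has_resource and ensure_punkt are hypothetical. The assumption that 'punkt_tab' is the resource name used by NLTK 3.9+ is labeled in the comments.

```python
import nltk


def has_resource(resource_path: str) -> bool:
    """Return True if an NLTK data resource is already installed locally."""
    try:
        nltk.data.find(resource_path)
        return True
    except LookupError:
        return False


def ensure_punkt() -> None:
    """Download each Punkt package that is not yet installed."""
    # Assumption: 'punkt_tab' is loaded by NLTK 3.9+, 'punkt' by older releases.
    for package in ("punkt_tab", "punkt"):
        if not has_resource(f"tokenizers/{package}"):
            nltk.download(package, quiet=True)
```

Calling ensure_punkt() once at startup keeps later tokenization calls from failing on a fresh machine, while skipping the network request when the data is already present.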

Tokenizing Text

Once NLTK is installed and the necessary resources are downloaded, you can use the word_tokenize() and sent_tokenize() functions to tokenize text. Here’s an example:

from nltk.tokenize import word_tokenize, sent_tokenize

text = "I went to the store. Then I went home."
sentences = sent_tokenize(text)  # split into sentences first
words = [word_tokenize(sentence) for sentence in sentences]  # then split each sentence into words
print(words)
# Output: [['I', 'went', 'to', 'the', 'store', '.'], ['Then', 'I', 'went', 'home', '.']]
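Splitting into sentences before splitting into words matters because NLTK’s Treebank-style word tokenizer only separates a period at the very end of the string it receives. The sketch below makes this visible. It uses TreebankWordTokenizer and an untrained PunktSentenceTokenizer as stand-ins for word_tokenize() and sent_tokenize(), so no downloaded models are needed.

```python
from nltk.tokenize import TreebankWordTokenizer
from nltk.tokenize.punkt import PunktSentenceTokenizer

text = "I went to the store. Then I went home."
word_tokenizer = TreebankWordTokenizer()

# One pass over the whole string: only the final period is split off,
# so the period in the middle stays attached to "store.".
one_pass = word_tokenizer.tokenize(text)
print(one_pass)  # ['I', 'went', 'to', 'the', 'store.', 'Then', 'I', 'went', 'home', '.']

# Two passes: split into sentences first, then tokenize each sentence.
sentence_tokenizer = PunktSentenceTokenizer()
two_pass = [word_tokenizer.tokenize(s) for s in sentence_tokenizer.tokenize(text)]
print(two_pass)  # [['I', 'went', 'to', 'the', 'store', '.'], ['Then', 'I', 'went', 'home', '.']]
```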

Comparison of Tokenizers

In addition to NLTK, there are other libraries and tools that provide tokenization functions. Here’s a comparison of some commonly used tokenizers:

| Library/Tool | Word Tokenization | Sentence Tokenization | Languages Supported |
|---|---|---|---|
| NLTK | ✅ | ✅ | Multiple |
| spaCy | ✅ | ✅ | Multiple |
| Stanford CoreNLP | ✅ | ✅ | Multiple |
| Pattern | ✅ | ✅ | Multiple |
| Gensim | ✅ | ❌ | Multiple |
| TextBlob | ✅ | ✅ | English |

Conclusion

The ability to accurately tokenize text is a crucial component of NLP applications. NLTK provides a powerful set of tools for tokenization and preprocessing, including word_tokenize() and sent_tokenize(). While other libraries and tools also provide tokenization functions, NLTK remains one of the most popular and widely used libraries in the field of NLP.

Thank you for taking the time to read this guide on tokenizing string sentences with NLTK. We hope that you have found this article informative and helpful in your journey towards understanding natural language processing.

Tokenization is an important process in NLP that involves breaking down text into smaller units, or tokens, to better understand its meaning. Through NLTK, we can easily tokenize string sentences using a variety of methods, such as word tokenization, sentence tokenization, and regular expression tokenization.
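Regular expression tokenization lets you define what counts as a token yourself. The sketch below uses NLTK’s RegexpTokenizer with three patterns. The second pattern is a hypothetical example written for this illustration, not a built-in NLTK pattern.

```python
from nltk.tokenize import RegexpTokenizer

text = "Hello, world! It's a test."

# Keep only runs of word characters; punctuation is dropped.
words_only = RegexpTokenizer(r"\w+").tokenize(text)
print(words_only)  # ['Hello', 'world', 'It', 's', 'a', 'test']

# Hypothetical pattern: words with an optional apostrophe suffix,
# or any single non-space punctuation character.
words_and_punct = RegexpTokenizer(r"\w+(?:'\w+)?|[^\w\s]").tokenize(text)
print(words_and_punct)  # ['Hello', ',', 'world', '!', "It's", 'a', 'test', '.']

# With gaps=True the pattern describes the separators instead of the tokens.
whitespace_split = RegexpTokenizer(r"\s+", gaps=True).tokenize(text)
print(whitespace_split)  # ['Hello,', 'world!', "It's", 'a', 'test.']
```

A custom pattern is handy when the default rules do not fit your data, for example when you want to keep contractions whole or drop punctuation entirely.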

The use of tokenization can have many practical applications, including text classification, sentiment analysis, and machine translation. By mastering this important aspect of NLP, you will be better equipped to solve real-world problems using natural language data.

Once again, thank you for reading and we hope that you will continue to explore the vast world of natural language processing through NLTK and other tools. Happy tokenizing!

Tokenizing string sentences with NLTK is a common task in natural language processing. Here are some common questions people ask about it:

What is tokenization?

Tokenization is the process of breaking down a text into smaller units called tokens. In the case of string sentences, tokens are usually individual words or punctuation marks.

Why is tokenization important?

Tokenization is an important step in many natural language processing tasks because it allows algorithms to work with individual words or phrases rather than treating entire sentences as a single unit. This makes it easier to perform tasks such as sentiment analysis, part-of-speech tagging, and named entity recognition.

What is NLTK?

NLTK (Natural Language Toolkit) is a Python library that provides tools for working with human language data. It includes modules for tokenization, stemming, lemmatization, part-of-speech tagging, and more.

How do I tokenize a string sentence with NLTK?

The simplest way to tokenize a string sentence with NLTK is to use the word_tokenize function from the nltk.tokenize module. Here’s an example:

import nltk
from nltk.tokenize import word_tokenize

nltk.download('punkt')  # one-time download of the tokenizer models

sentence = "This is a sample sentence."
tokens = word_tokenize(sentence)
print(tokens)

This will output:

['This', 'is', 'a', 'sample', 'sentence', '.']

Can NLTK tokenize languages other than English?

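Yes. NLTK’s Punkt models cover a number of languages besides English, mostly European ones. You do not import a different module for each language. Instead, you pass the language name as the language argument to sent_tokenize() (or word_tokenize()). For example, to split French text into sentences, you would use: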

import nltk
from nltk.tokenize import sent_tokenize

nltk.download('punkt')

sentence = "Ceci est un exemple de phrase."
sentences = sent_tokenize(sentence, language='french')
print(sentences)

This will output:

['Ceci est un exemple de phrase.']