Eliminating duplicate rows in a Pandas DataFrame is crucial when you are working with large datasets. Imagine working with thousands of rows filled with identical values: it's a nightmare! Fortunately, the Pandas library offers a simple solution to this problem.

In this article, we will focus on eliminating duplicate rows in a DataFrame based on selected columns. This means that we choose which columns to check for duplicates, while the remaining columns are ignored in the comparison. The rows that are kept retain all of their columns, so data that may be important for analysis is preserved.

If you’re struggling with managing duplicate rows in your Pandas DataFrame, then you’ve come to the right place. We’ll be showing you step-by-step how to eliminate duplicate rows using Python’s versatile Pandas library. The process is quick and easy, and by the end of this article, you’ll be able to confidently handle duplicate rows like a pro!

If you want to optimize your data processing pipeline and ensure that your analyses are accurate, then you need to read this article. Eliminating duplicate rows is an essential step in any data preprocessing workflow, and with Pandas, the process becomes seamless. So what are you waiting for? Join us on this journey through Duplicate Row Hell and come out the other side with a cleaner, more efficient dataset.


Introduction

Pandas is a widely used data manipulation library in Python. It offers various functions that allow users to work effectively with data. One important function is removing duplicate rows from a DataFrame. In this article, we will discuss how to remove rows from a Pandas DataFrame that have identical values in selected columns.

What are Duplicate Rows in Pandas DataFrames?

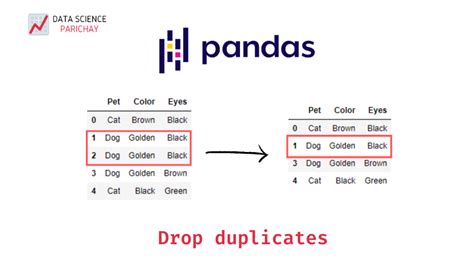

The term duplicate rows refers to rows in a DataFrame that have the same values in every column, or in a chosen set of columns. These duplicate rows may occur due to data ingestion issues, data entry errors or data retrieval issues. When working with large datasets, this can become problematic because repeated rows inflate counts and skew statistics, which leads to inaccurate results. Therefore, removing these duplicate rows is essential for data cleaning and processing.

Selective Columns

Sometimes, we may face a situation where we want to eliminate duplicate rows based on selective columns only. This means that we want to ignore certain columns and only look at specific columns for identifying duplicates. This can be useful when dealing with large datasets with many columns, where some of the columns could contain irrelevant information for duplicate identification.

The Pandas DataFrame

The DataFrame in Pandas is a two-dimensional tabular data structure that consists of rows and columns, just like a spreadsheet. It can be thought of as a dictionary of Series objects, where each column represents a Series object, and the column name is the key to access that Series object. The DataFrame is a versatile data structure that offers several in-built functions to manipulate and analyze data efficiently.
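The "dictionary of Series" view can be illustrated with a minimal sketch. The column names and values here are made up for illustration and are not part of the article's example data.

```python
import pandas as pd

# Hypothetical two-column frame, built from a dict of column name -> values.
df = pd.DataFrame({"name": ["John", "Jane"], "age": [23, 25]})

# Selecting one column by its name returns a Series, much like a dict lookup.
ages = df["age"]

print(type(ages).__name__)  # Series
print(list(df.columns))     # ['name', 'age']
```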

Duplicate Rows Identification

Pandas provides two main functions for working with duplicate rows: `duplicated()`, which flags them, and `drop_duplicates()`, which removes them. By default, `drop_duplicates()` compares all columns in a DataFrame, so a row is removed only if it matches an earlier row in every column. To identify duplicates based on selected columns only, we pass those columns through the `subset` parameter, and we can use the `keep` parameter to control which of the duplicate rows survives.
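To see why the default behaviour is often not enough, here is a small sketch. The frame and its column names (`id`, `first`, `last`) are assumptions made for illustration: because every row has a unique `id`, comparing all columns finds no duplicates at all.

```python
import pandas as pd

# Illustrative data: names repeat, but the "id" column is unique per row.
df = pd.DataFrame({
    "id": [1, 2, 3, 4],
    "first": ["John", "Jane", "John", "Jane"],
    "last": ["Doe", "Doe", "Doe", "Doe"],
})

# Default: every column is compared. The unique "id" values mean no two
# rows match in full, so nothing is removed.
all_columns = df.drop_duplicates()

# Restricting the comparison to the name columns finds the repeats.
by_name = df.drop_duplicates(subset=["first", "last"])

print(len(all_columns))  # 4
print(len(by_name))      # 2
```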

Subset Parameter

The `subset` parameter specifies the columns to consider when identifying duplicates. It takes a single column name or a list of column names as input. All other columns are ignored while identifying duplicates, although they remain in the rows that are kept.
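As a rough sketch of how `subset` changes what counts as a duplicate, the example below uses `duplicated()` to flag rows instead of dropping them. The data is invented for illustration.

```python
import pandas as pd

# Illustrative data: "John" appears twice, but with different last names.
df = pd.DataFrame({
    "first": ["John", "Jane", "John"],
    "last": ["Doe", "Doe", "Smith"],
})

# A single column label: only "first" is compared.
mask_one = df.duplicated(subset="first")

# A list of labels: rows must match on both columns to count as duplicates.
mask_two = df.duplicated(subset=["first", "last"])

print(mask_one.tolist())  # [False, False, True]
print(mask_two.tolist())  # [False, False, False]
```

The narrower the subset, the more rows are treated as duplicates, so it is worth checking the mask before dropping anything.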

Keep Parameter

The `keep` parameter determines which of the duplicate rows to keep. It has three options:

- `'first'` (default): keeps the first occurrence of each set of duplicates and removes the others

- `'last'`: keeps the last occurrence of each set of duplicates and removes the others

- `False`: removes all occurrences of the duplicates, keeping none of them
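The three options can be compared side by side. This is a minimal sketch on made-up data, where the `id` column is only there to show which rows survive.

```python
import pandas as pd

# Illustrative data: "John" and "Jane" each appear twice, "Sarah" once.
df = pd.DataFrame({
    "id": [1, 2, 3, 4, 5],
    "first": ["John", "Jane", "Sarah", "John", "Jane"],
})

kept_first = df.drop_duplicates(subset=["first"], keep="first")
kept_last = df.drop_duplicates(subset=["first"], keep="last")
unique_only = df.drop_duplicates(subset=["first"], keep=False)

print(kept_first["id"].tolist())   # [1, 2, 3]
print(kept_last["id"].tolist())    # [3, 4, 5]
print(unique_only["id"].tolist())  # [3]
```

Note that `keep=False` is the boolean `False`, not the string `'False'`.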

Example Data

Let’s take an example of a Pandas DataFrame and see how to eliminate duplicate rows with identical values in selective columns.

| Column 1 | Column 2 | Column 3 | Column 4 |
|---|---|---|---|
| 1 | John | Doe | 23 |
| 2 | Jane | Doe | 25 |
| 3 | Sarah | Jones | 29 |
| 4 | John | Doe | 23 |
| 5 | Jane | Doe | 25 |

Dropping Duplicate Rows

We can use the `drop_duplicates()` function to remove duplicate rows from a Pandas DataFrame. In this example, we want to remove rows with identical values in Column 2 and Column 3. Column 1 differs in every row, so comparing all columns would find no duplicates. Therefore, we will use the `subset` parameter and select Column 2 and Column 3 only.

Code:

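```python
import pandas as pd

data = {
    'Column 1': [1, 2, 3, 4, 5],
    'Column 2': ['John', 'Jane', 'Sarah', 'John', 'Jane'],
    'Column 3': ['Doe', 'Doe', 'Jones', 'Doe', 'Doe'],
    'Column 4': [23, 25, 29, 23, 25],
}
df = pd.DataFrame(data)

# Compare only Column 2 and Column 3; keep the first row of each duplicate group.
# inplace=True modifies df directly instead of returning a new DataFrame.
df.drop_duplicates(subset=['Column 2', 'Column 3'], keep='first', inplace=True)
```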

Results

The resulting DataFrame will look like this:

| Column 1 | Column 2 | Column 3 | Column 4 |
|---|---|---|---|
| 1 | John | Doe | 23 |
| 2 | Jane | Doe | 25 |
| 3 | Sarah | Jones | 29 |
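If you would rather leave the original DataFrame untouched, one possible variant is to omit `inplace=True` and assign the result to a new variable. The sketch below also uses `keep='last'` and `ignore_index=True` to renumber the surviving rows; it assumes pandas 1.0 or later, where `ignore_index` is available for `drop_duplicates()`. The variable name `deduped` is just an illustrative choice.

```python
import pandas as pd

df = pd.DataFrame({
    "Column 1": [1, 2, 3, 4, 5],
    "Column 2": ["John", "Jane", "Sarah", "John", "Jane"],
    "Column 3": ["Doe", "Doe", "Jones", "Doe", "Doe"],
    "Column 4": [23, 25, 29, 23, 25],
})

# Returns a new DataFrame; df itself is not modified.
# keep="last" retains the final row of each duplicate group, and
# ignore_index=True relabels the result 0, 1, 2, ...
deduped = df.drop_duplicates(
    subset=["Column 2", "Column 3"], keep="last", ignore_index=True
)

print(len(df))                       # 5
print(deduped["Column 1"].tolist())  # [3, 4, 5]
print(deduped.index.tolist())        # [0, 1, 2]
```

Without `ignore_index=True`, the kept rows would retain their original index labels (2, 3 and 4 here), which may or may not be what you want downstream.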

Conclusion

The `drop_duplicates()` function in Pandas is an easy and effective way to remove duplicate rows from a DataFrame. By using the `subset` and `keep` parameters, we can select specific columns for identifying duplicates and decide whether to keep the first occurrence, the last occurrence, or none of the duplicate rows. This function is particularly useful when working with large datasets that have many columns irrelevant to duplicate identification.

Thank you for taking the time to read our article on eliminating duplicate rows in Pandas Dataframe with identical values. We hope that this post has provided you useful insights on how to deal with duplicated data in pandas.

The process of eliminating duplicate rows in a Pandas DataFrame might seem insignificant at first, but it is an important step in data analysis and in drawing sound conclusions. Repeated rows inflate counts and skew sums and averages, which can make the results of an analysis misleading. Therefore, it is essential to have a sound understanding of how to eliminate duplicate rows effectively.

Finally, we would like to reiterate the importance of ensuring data quality by screening your data consistently. By doing so, you can minimize the risk of errors and provide reliable insights that are able to drive key business decisions. Remember, accurate data equates to meaningful results.

When working with Pandas dataframes, it is quite common to encounter duplicate rows with identical values in selective columns. In this scenario, it can be helpful to eliminate these duplicates to ensure clean and accurate data. Here are some common questions people ask about eliminating duplicate rows in Pandas dataframe with identical values (selective columns) and their answers:

- 1. How do I identify duplicate rows with identical values in selective columns?

You can use the `duplicated()` function in Pandas to identify duplicate rows in a dataframe. To select selective columns, you can use the `subset` parameter. For example:

`df[df.duplicated(subset=['column1', 'column2'])]`

- 2. How do I eliminate duplicate rows with identical values in selective columns?

You can use the `drop_duplicates()` function in Pandas to eliminate duplicate rows in a dataframe. To select selective columns, you can use the `subset` parameter. For example:

`df.drop_duplicates(subset=['column1', 'column2'], keep='first', inplace=True)`

- 3. Can I eliminate duplicate rows based on one column while keeping the other columns intact?

Yes, you can use the `drop_duplicates()` function with one column as the `subset` parameter, while keeping other columns intact. For example:

`df.drop_duplicates(subset=['column1'], keep='first', inplace=True)`

- 4. Can I eliminate duplicate rows based on multiple columns and choose which occurrence to keep?

Yes, you can use the `drop_duplicates()` function with multiple columns as the `subset` parameter and set the `keep` parameter to `'first'` or `'last'`. For example:

`df.drop_duplicates(subset=['column1', 'column2'], keep='first', inplace=True)`