Wiki Page Ranking With Hadoop

Goal

To rank the given wiki pages using Hadoop.

Project Overview

One of the biggest changes in this decade is the availability of efficient and accurate information on the web. Google is the largest search engine in the world, owned by Google, Inc. The heart of the search engine is PageRank, the algorithm for ranking web pages developed by Larry Page and Sergey Brin at Stanford University. The PageRank algorithm does not rank a whole website; the rank is determined for each page individually.

Page ranking is not a completely new concept in web search, but it is becoming more important these days for improving a web page's ranking position in the search engine. Wikipedia has more than 3 million articles as of now and is still growing every day. Every article has links to many other articles, both incoming and outgoing, and these links are the significant factor in page ranking. The key is to analyze which pages are more important than the others. Page ranking does this and ranks the pages based on their importance.

Proposed System

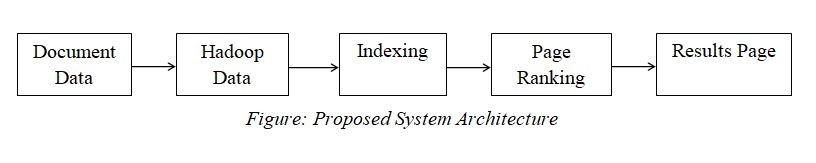

The proposed Wiki Page Ranking With Hadoop project system focuses on creating the best page ranking algorithm for Wikipedia articles using Hadoop. The proposed system architecture is shown in the figure.

All the document data in the database is indexed. The web search engine searches for the keyword in the index of the database. The web crawler is then used to search the data in the database. After finding the pages, the search engine shows the top web pages that are related to the query.

MapReduce consists of several iterative sets of functions that produce the set of search results. The map() function gathers the query results from the search and the reduce() function performs the add operation. The wiki page ranking project using Hadoop involves 3 main Hadoop steps.

- Parsing

- Calculating

- Ordering

Parsing

In the parsing step, the wiki XML is parsed into articles in a Hadoop job. During the mapping phase, the title of each article and its outgoing link connections are mapped. In the reduce phase, the links to other pages are collected. Then the page, initial rank and outgoing links are stored.

Map(): Article title, outgoing link

Reduce(): Link to other pages

Store: Page, Rank, Outgoing link
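The parsing step can be sketched in plain Python, without a Hadoop cluster. This is only an illustration under stated assumptions: the sample articles, the `[[...]]` link pattern, the initial rank of 1.0 and all function names are hypothetical and not taken from the project, and a dictionary stands in for Hadoop's shuffle between the map and reduce phases.

```python
import re
from collections import defaultdict

# Assumption: links use the wiki syntax [[Target]], [[Target|label]] or [[Target#Section]].
LINK_PATTERN = re.compile(r"\[\[([^\]|#]+)")

def parse_map(title, wikitext):
    """Map phase: emit one (article title, outgoing link) pair per link."""
    for match in LINK_PATTERN.finditer(wikitext):
        target = match.group(1).strip()
        if target and target != title:  # skip empty targets and self-links
            yield title, target

def parse_reduce(title, targets, initial_rank=1.0):
    """Reduce phase: collect the links of one page and attach the initial rank."""
    return title, initial_rank, sorted(set(targets))

def run_parse_job(articles):
    grouped = defaultdict(list)  # stands in for Hadoop's shuffle/sort
    for title, wikitext in articles:
        grouped[title]  # keep pages that have no outgoing links
        for key, target in parse_map(title, wikitext):
            grouped[key].append(target)
    return [parse_reduce(title, targets) for title, targets in sorted(grouped.items())]

# Hypothetical sample input standing in for articles parsed from the wiki XML.
articles = [
    ("Hadoop", "Uses [[MapReduce]] and [[HDFS|a file system]]."),
    ("MapReduce", "Implemented by [[Hadoop]]."),
    ("HDFS", "Part of [[Hadoop]]; see also [[MapReduce#History]]."),
]
records = run_parse_job(articles)  # list of (page, rank, outgoing links)
```

Each stored record has the form (page, rank, outgoing links), which matches the input the calculating step expects.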

Calculating

In the calculating step, the Hadoop job calculates the new rank for the web pages. In the mapping phase, each outgoing link of a page is mapped together with the page's rank and its total number of outgoing links using the map function. In the reduce phase, the new page rank is calculated for the pages. Then the page, new rank and outgoing links are stored. These processes are repeated iteratively to get the best results.

Map(): Rank, outgoing link

Reduce(): Page rank

Store: Page, Rank, Outgoing link
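One iteration of the calculating step might look like the following plain-Python sketch. It assumes the commonly cited PageRank update, new rank = (1 - d) + d * (sum of incoming rank shares), with a damping factor d of 0.85. The damping value, the three-page sample graph, the iteration count and the function names are assumptions for illustration, not details given by the project.

```python
from collections import defaultdict

DAMPING = 0.85  # assumption: a damping factor commonly used with PageRank

def rank_map(page, rank, links):
    """Map phase: give each outgoing link an equal share of this page's rank."""
    yield page, ("links", links)  # pass the link structure through to the reducer
    for target in links:
        yield target, ("share", rank / len(links))

def rank_reduce(page, values):
    """Reduce phase: sum the incoming shares and apply the damping factor."""
    links, total = [], 0.0
    for kind, value in values:
        if kind == "links":
            links = value
        else:
            total += value
    return page, (1 - DAMPING) + DAMPING * total, links

def run_rank_job(records):
    grouped = defaultdict(list)  # stands in for Hadoop's shuffle/sort
    for page, rank, links in records:
        for key, value in rank_map(page, rank, links):
            grouped[key].append(value)
    return [rank_reduce(page, values) for page, values in sorted(grouped.items())]

# Hypothetical three-page graph: (page, rank, outgoing links).
records = [("A", 1.0, ["B", "C"]), ("B", 1.0, ["C"]), ("C", 1.0, ["A"])]
for _ in range(50):  # repeat the job; the iteration count is an arbitrary choice
    records = run_rank_job(records)
ranks = {page: rank for page, rank, _ in records}
```

The sketch ignores pages without outgoing links, whose rank would otherwise leak out of the graph. A real job would need a policy for such pages and a stopping criterion in place of a fixed iteration count.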

Ordering

Here the job function maps each identified page, and the rank and page are then stored. Now we can see the top n pages in the web search.
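As a rough sketch of the ordering step, the map function below swaps each record into a (rank, page) pair so that the rank becomes the sort key, and the top n pages are then selected. In Hadoop the sorting would typically be left to the shuffle phase, which sorts by key; here `heapq.nlargest` stands in for it. The rank values are made-up placeholders and the function names are hypothetical.

```python
import heapq

def order_map(page, rank, links):
    """Map phase: emit (rank, page) so that the rank becomes the sort key."""
    return rank, page

def top_pages(records, n):
    """Return the n highest-ranked pages as (rank, page) pairs, best first."""
    return heapq.nlargest(n, (order_map(*record) for record in records))

# Placeholder records in the (page, rank, outgoing links) form; ranks are illustrative only.
records = [
    ("Hadoop", 1.19, ["MapReduce", "HDFS"]),
    ("MapReduce", 1.16, ["Hadoop"]),
    ("HDFS", 0.64, ["Hadoop"]),
]
top_two = top_pages(records, 2)
```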

Wiki Page Ranking With Hadoop Benefits

- Fast and accurate web page results

- Less time consuming

Software Requirements

- Linux OS

- MySQL

- Hadoop & MapReduce

Hardware Requirements

- Hard Disk – 1 TB or Above

- RAM required – 8 GB or Above

- Processor – Core i3 or Above

Technology Used

- Big Data – Hadoop

Source: projectgeek.com